↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world decision-making scenarios involve dynamically allocating resources under uncertainty, such as online advertising or supply chain management. Existing research often focuses on the worst-case regret, which can be overly pessimistic. This paper studies “online contextual decision-making with knapsack constraints (CDMK)”, a framework where an agent makes sequential decisions based on observed requests and unknown external factors to maximize reward while respecting resource limitations. Previous work demonstrated a worst-case regret, but the actual performance can vary considerably depending on the problem instance.

This paper offers a more nuanced perspective. First, it shows that a large gap can exist between a commonly used benchmark (fluid optimum) and the optimal online solution. Second, the authors propose a novel algorithm combining re-solving heuristics and distribution estimation techniques. Under reasonable assumptions, this algorithm achieves a significantly lower regret. Crucially, it maintains a near-optimal regret guarantee even in worst-case scenarios. Finally, the analysis is extended to problems with continuous instead of discrete values for requests and external factors, significantly increasing the model’s realism and applicability.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses a critical gap in the understanding of contextual decision-making problems with resource constraints. It challenges the common reliance on worst-case regret bounds by demonstrating that under mild assumptions, far better regret rates are achievable. This opens up new avenues for algorithm design and performance analysis, pushing the boundaries of existing research.

Visual Insights#

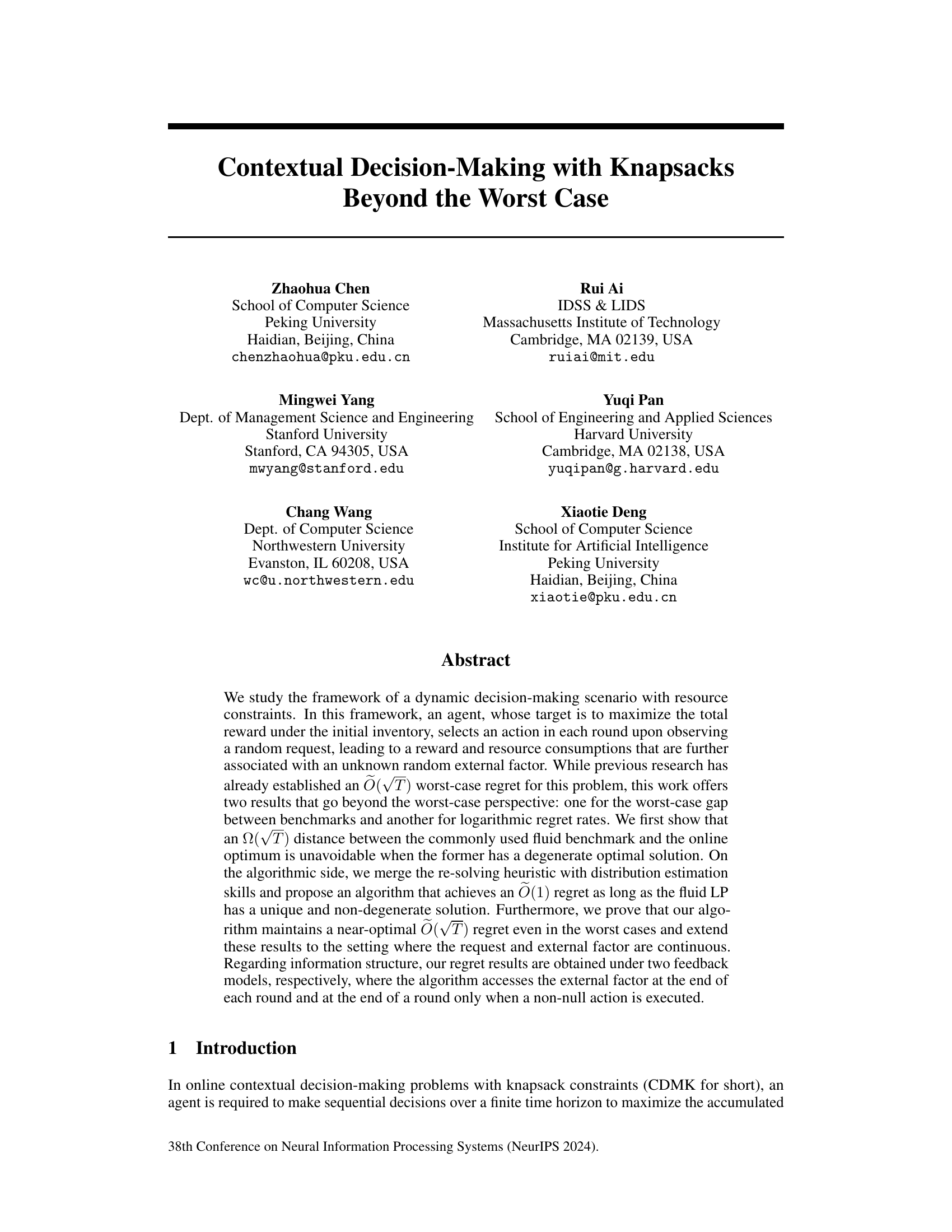

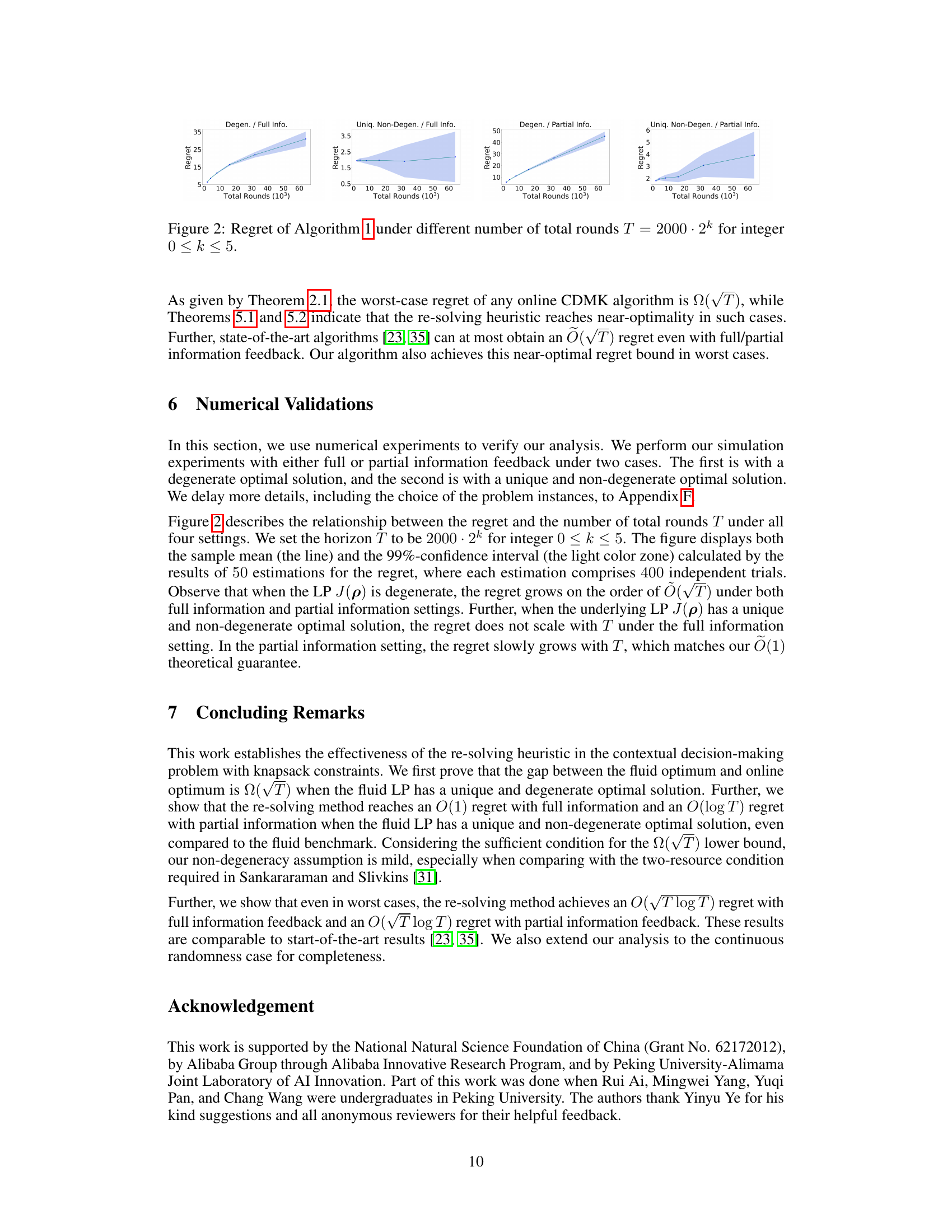

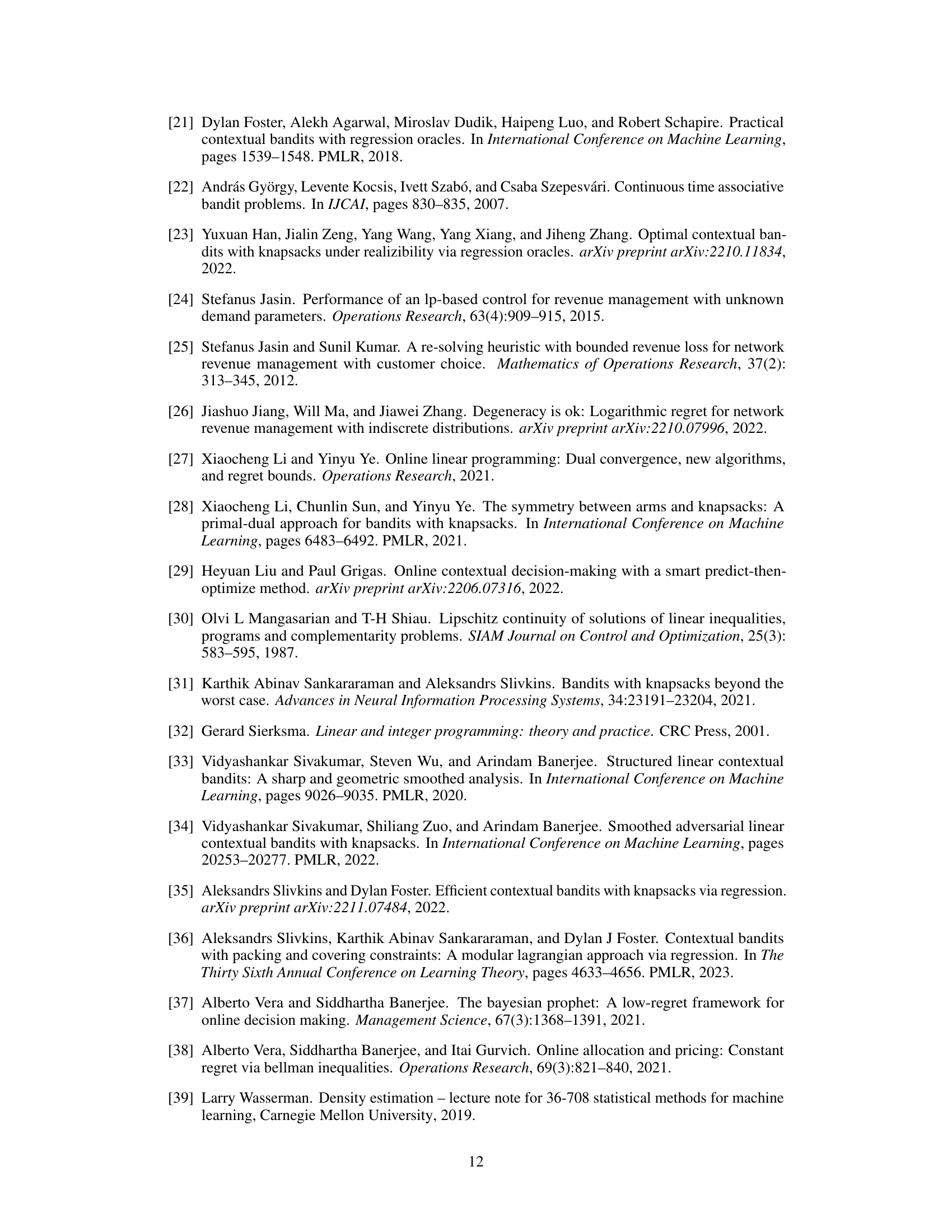

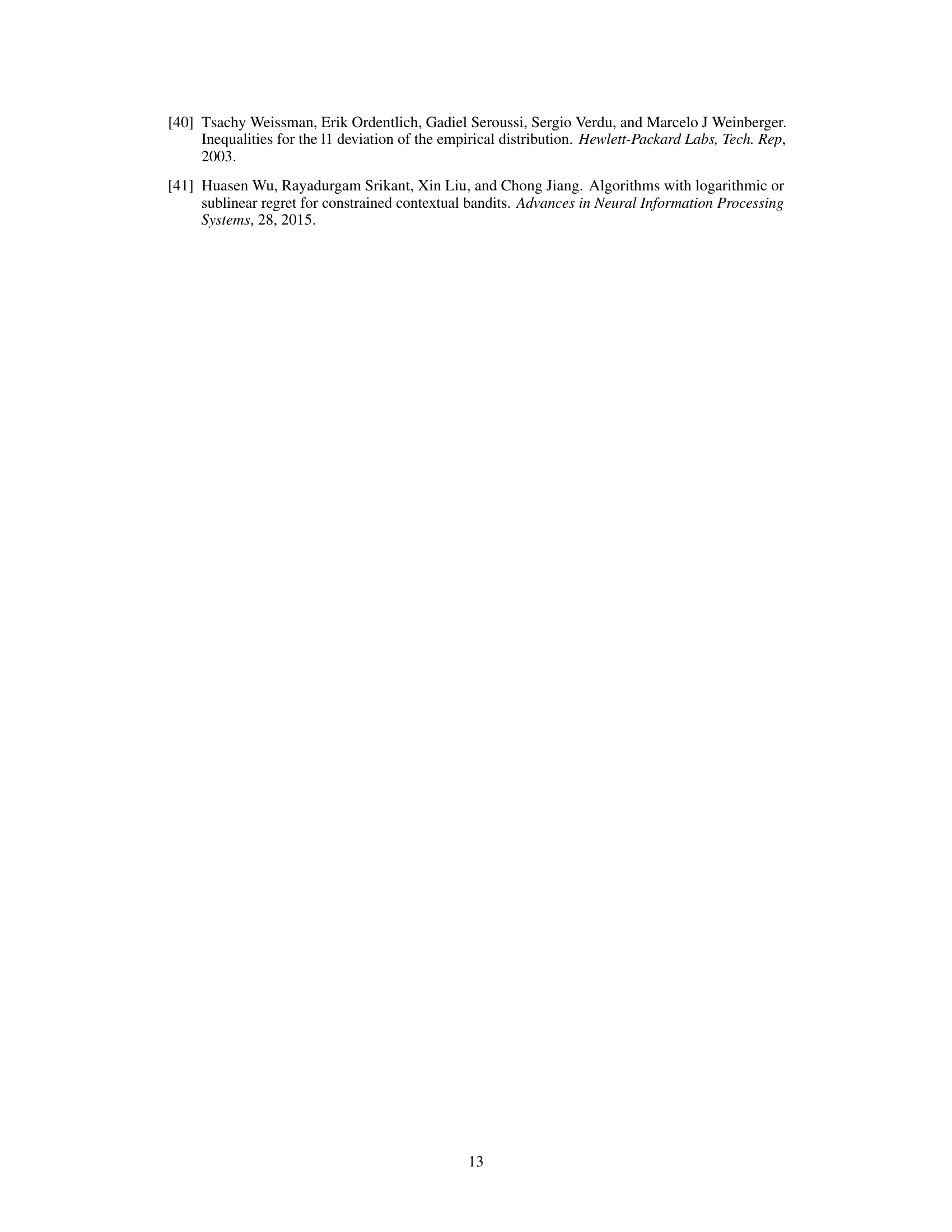

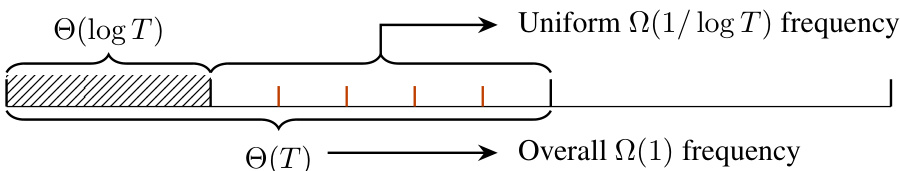

This figure illustrates Lemma 4.1, which provides a lower bound on the frequency with which Algorithm 1 accesses independent samples of the external factor under partial information feedback. The shaded region represents an initial period of Θ(log T) rounds where the access frequency is not guaranteed. After this initial period, a constant probability of accessing the external factor is established, leading to an overall Ω(1) frequency of access for the remainder of the time horizon.

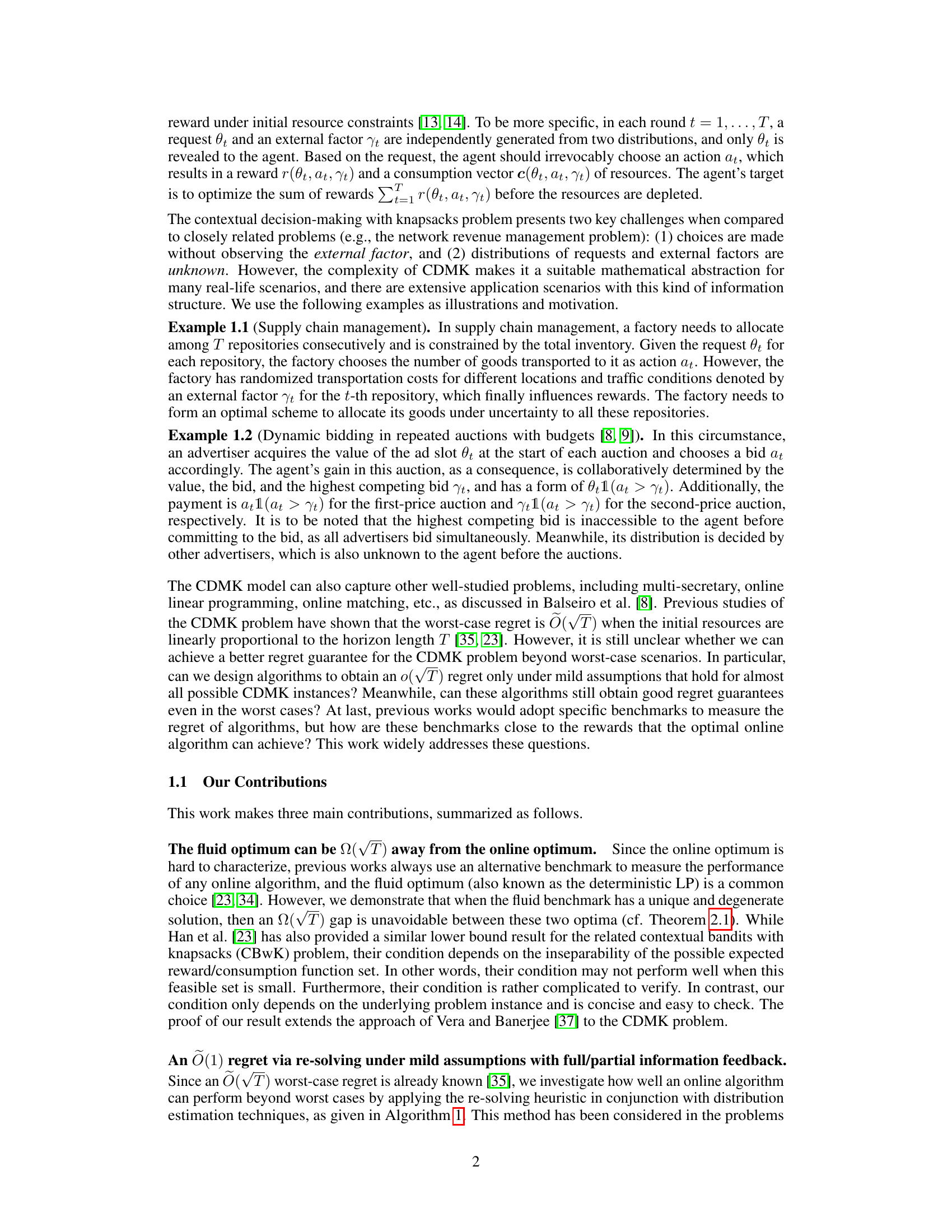

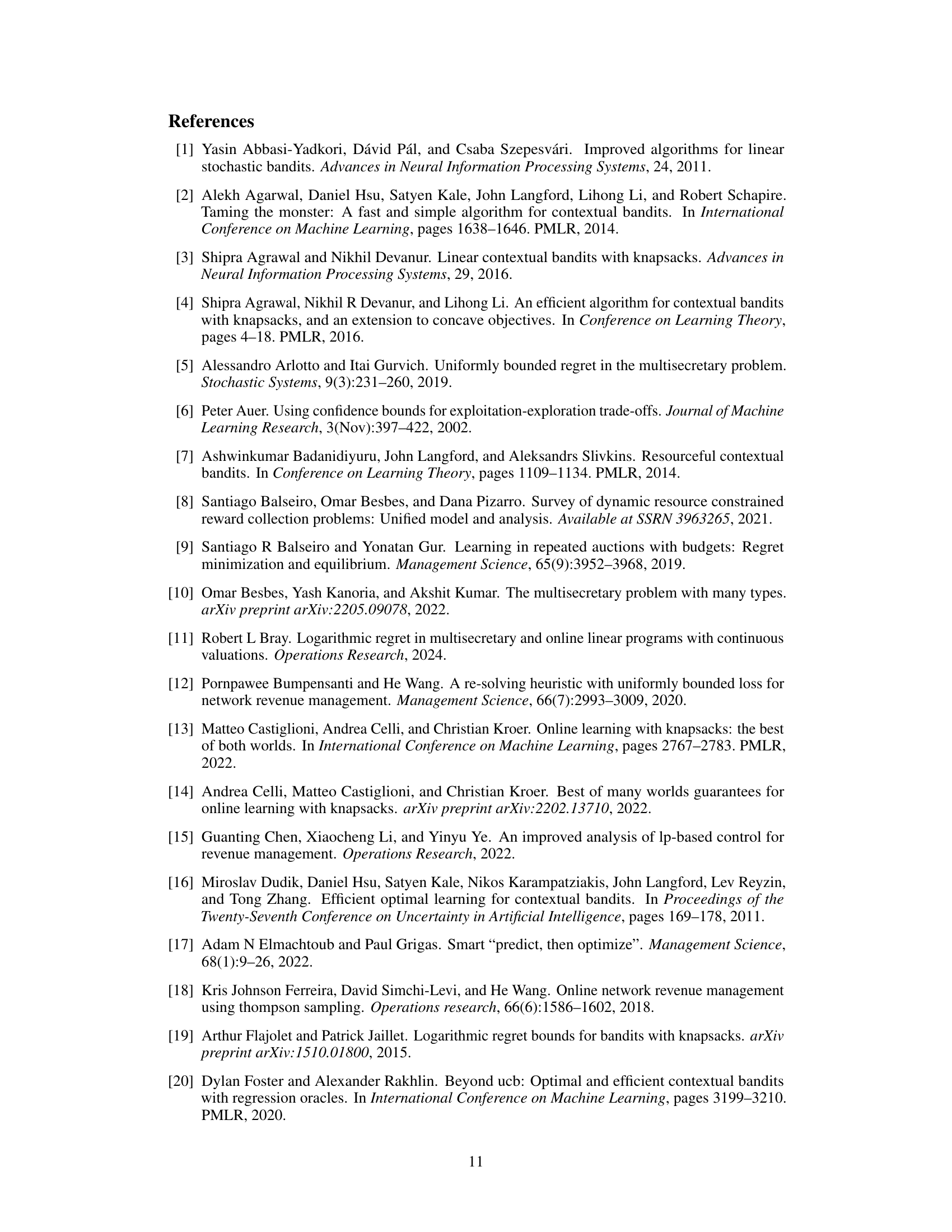

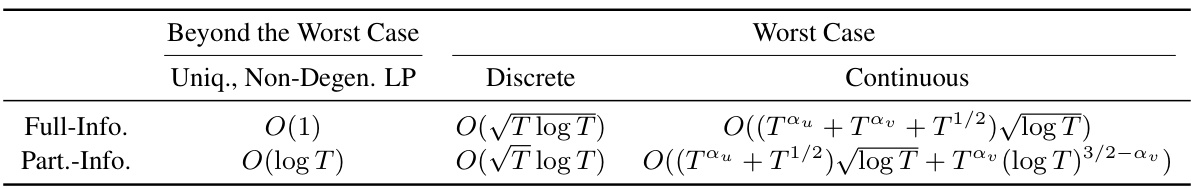

This table summarizes the theoretical results obtained from Algorithm 1 under different information feedback models (full or partial) and problem characteristics (unique, non-degenerate fluid linear program (LP) or worst-case scenarios). It shows the regret bounds achieved in both discrete and continuous settings for the request and external factors. The regret represents the difference between the accumulated reward of the algorithm and the optimal reward. Full-Info refers to full information feedback where the external factor is always observed, while Part-Info indicates partial information feedback, where the external factor is only observed when a non-null action is taken.

Full paper#