TL;DR#

Online learning aims to predict future outcomes accurately using sequential data. A major challenge lies in defining the minimal assumptions under which learnability is possible, especially for diverse concept classes (sets of possible patterns in the data). Previous works often focused on specific cases or made strong assumptions, leaving a gap in understanding general learnability.

This research addresses this gap by providing a complete characterization of the concept classes that are optimistically universally online learnable. It introduces general learning algorithms that work under minimal assumptions on the data for all concept classes, including both ‘realizable’ (data perfectly fits a pattern) and ‘agnostic’ (data may not perfectly fit a pattern) scenarios. The findings demonstrate an equivalence between these two settings regarding minimal assumptions and learnability, thereby significantly advancing our understanding of online learning’s fundamental limits and capabilities.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online learning because it provides a complete characterization of concept classes learnable under minimal assumptions, resolving a longstanding open problem. It introduces novel learning algorithms and establishes equivalences between agnostic and realizable cases, opening new avenues for designing more efficient and robust online learning systems.

Visual Insights#

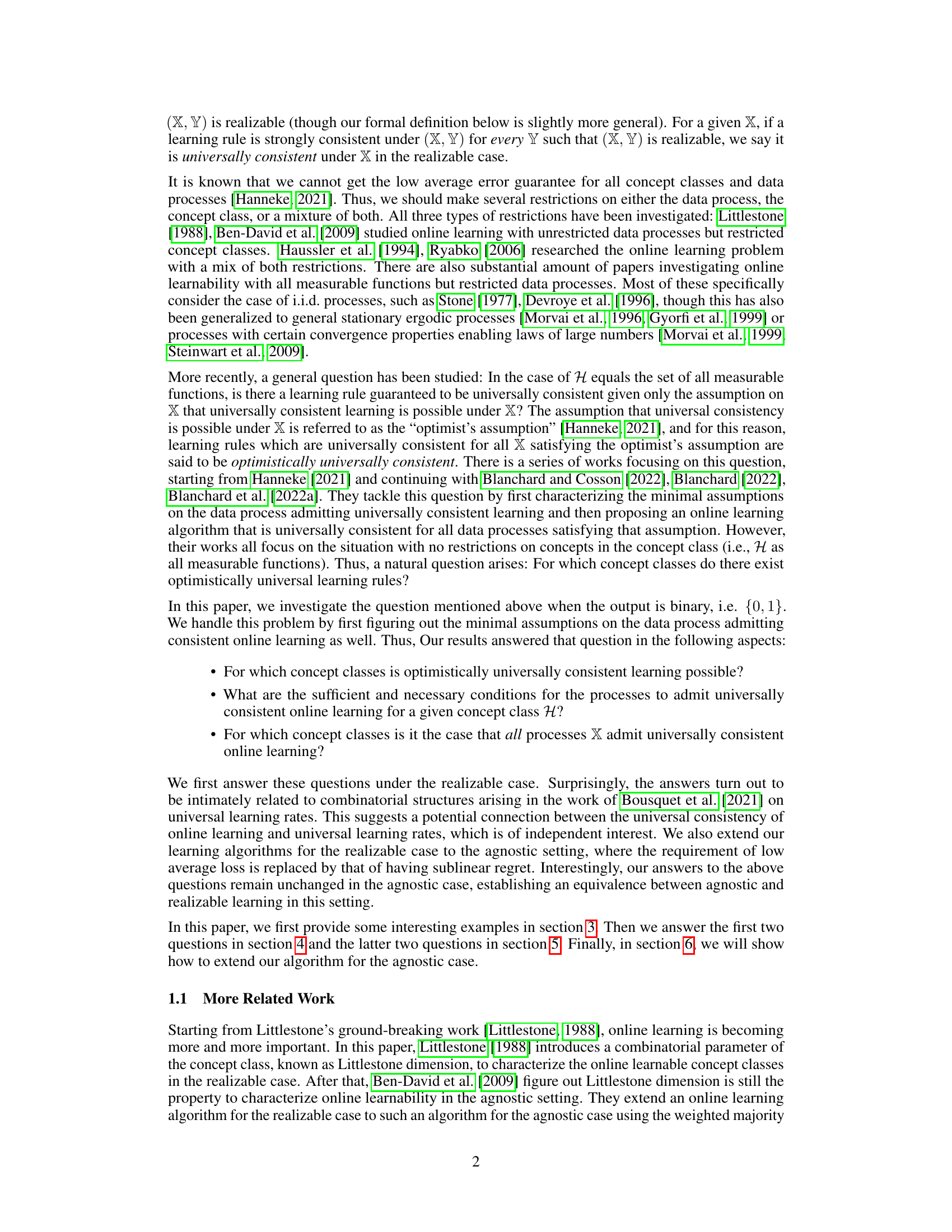

🔼 This algorithm is designed to learn from a winning strategy in a game-theoretic setting. It iteratively refines its understanding by updating its knowledge (U) based on whether its predictions (ŷt) match the true values (Yt). The algorithm incorporates a winning strategy (gu) to guide its predictions. If the prediction is incorrect (ŷt ≠ Yt), it updates its mistake set (L) and adjusts its parameters (k and m) accordingly. The algorithm attempts to reach a state where its predictions consistently match the true values and achieves a low error rate.

read the caption

Algorithm 1: Learning algorithm from winning strategy

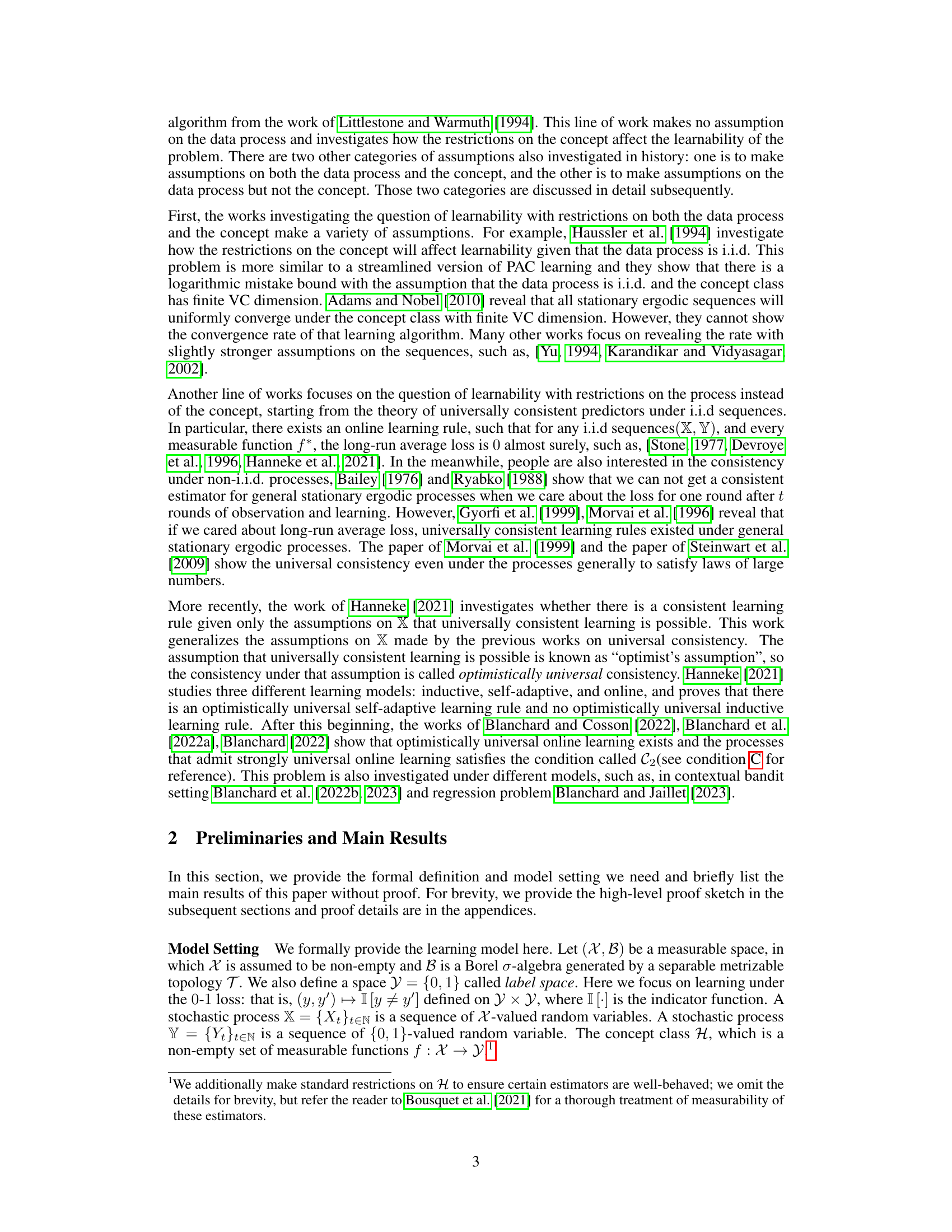

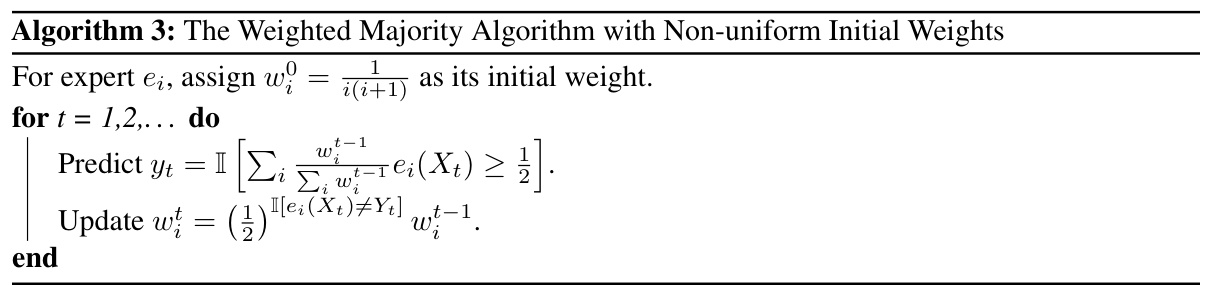

🔼 This algorithm uses a weighted majority approach to prediction, where each expert’s weight is initially set inversely proportional to its index. After each prediction, the weights are updated based on whether the prediction was correct. Experts that consistently make inaccurate predictions will have their weights diminish over time, leading to a greater influence of more accurate experts in future predictions.

read the caption

Algorithm 3: The Weighted Majority Algorithm with Non-uniform Initial Weights

Full paper#