TL;DR#

Many machine learning algorithms, including low-rank approximation and column subset selection, are used in critical decision-making processes. However, these algorithms can exhibit bias, leading to unfair outcomes for different groups. This paper investigates the challenge of creating “socially fair” versions of these algorithms, where the goal is to minimize the maximum loss across all sub-populations. The authors discovered that achieving perfect fairness is computationally very difficult.

This research provides algorithmic solutions to address the difficulty of achieving fairness. They develop efficient approximation algorithms that run in polynomial time, providing a good balance between fairness and efficiency. These algorithms are evaluated empirically, demonstrating their effectiveness in real-world applications. The work contributes to the development of more equitable machine learning techniques, addressing a critical issue of algorithmic fairness.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in machine learning and fairness because it tackles the critical problem of algorithmic bias in low-rank approximation and column subset selection. It reveals the computational hardness of achieving fairness and proposes novel approximation algorithms with strong theoretical guarantees and empirical validation. This opens new avenues for developing fairer and more equitable machine learning models, addressing a significant concern in the field. Its findings directly impact the design of fair algorithms for various applications, offering practical solutions and theoretical insights for future research.

Visual Insights#

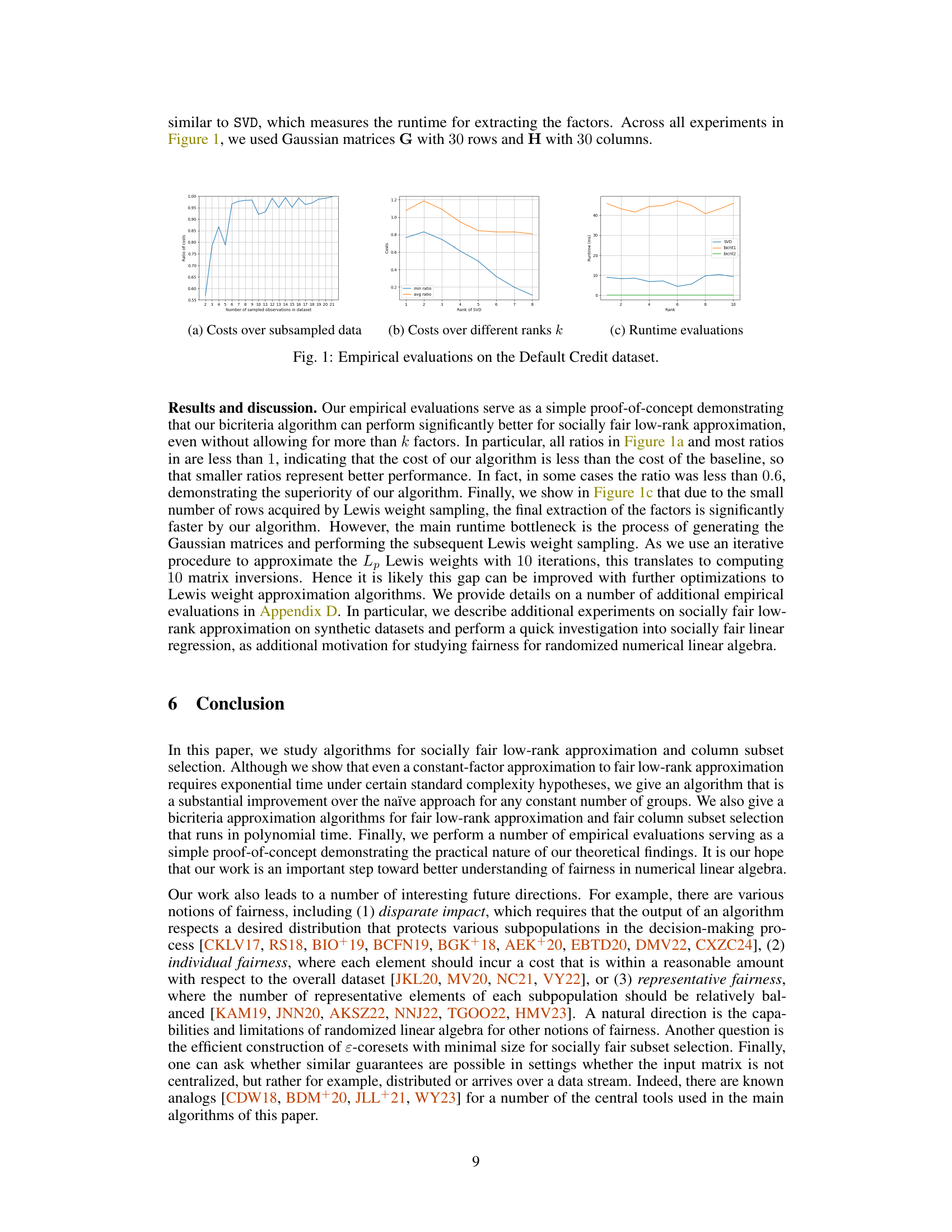

🔼 This figure presents the results of empirical evaluations performed on the Default of Credit Card Clients dataset. It compares the performance of the authors’ bicriteria algorithm for socially fair low-rank approximation against a standard (non-fair) low-rank approximation algorithm. Three subfigures are included: (a) Shows the ratio of costs between the two algorithms across various subsample sizes of the dataset. (b) Displays the ratio of costs for different rank parameters (k) of the SVD algorithm. (c) Compares the runtimes of the two algorithms for different rank parameters (k).

read the caption

Fig. 1: Empirical evaluations on the Default Credit dataset.

Full paper#