TL;DR#

Training accurate 3D object detectors requires vast amounts of labeled LiDAR data, which is expensive and time-consuming to acquire. This paper tackles this challenge through active 3D object detection, which aims to select only the most informative point clouds for labeling. Existing methods struggle with class imbalance and with covering objects of varying difficulty levels.

The proposed method, STONE, uses submodular optimization, a technique known for efficiently selecting diverse and representative subsets. STONE consists of a two-stage algorithm that first selects point clouds with diverse gradients and then chooses those that balance the class distribution. Extensive experiments show that STONE outperforms existing methods on real-world datasets, achieving state-of-the-art results while maintaining high computational efficiency.

Key Takeaways#

Why does it matter?#

This paper is relevant to researchers in 3D object detection and active learning. It introduces a submodular optimization framework that reduces the cost of labeling data, a major bottleneck in training accurate 3D object detectors. The framework addresses data imbalance and the need for diverse data, and reports state-of-the-art results. The work also opens avenues for applying submodular data selection to active learning in other computer vision tasks, potentially leading to more efficient and effective selection algorithms.

Visual Insights#

🔼 This figure illustrates the pipeline of the proposed active learning method called STONE. It shows a two-stage process: 1) Gradient-Based Submodular Subset Selection (GBSSS) which selects representative point clouds from the unlabeled data pool using a submodular function based on gradients from hypothetical labels generated by Monte Carlo dropout. This stage aims to maximize the coverage of the unlabeled data while considering object diversity and difficulty levels. 2) Submodular Diversity Maximization for Class Balancing (SDMCB) that selects a subset of the point clouds from stage one to balance the class distribution of the labeled data, making the model robust to class imbalance. The figure also depicts the overall workflow, including initial training, active learning iterations (query rounds), and model retraining with newly labeled data.

Figure 1: STONE: An illustrative pipeline of our proposed active learning method for 3D object detection leveraging submodular functions.

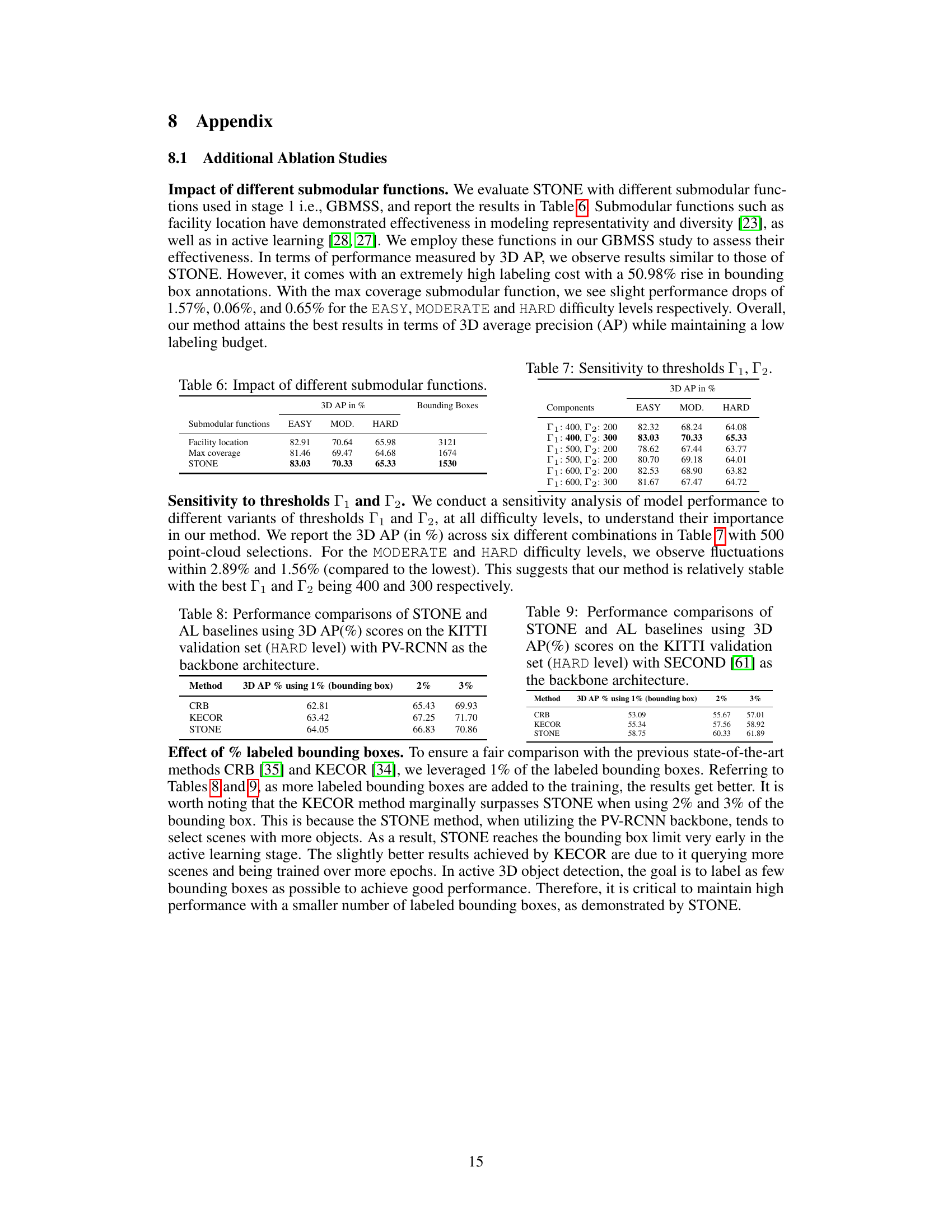

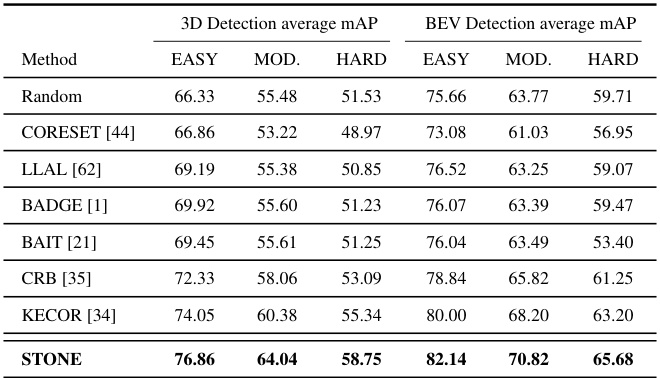

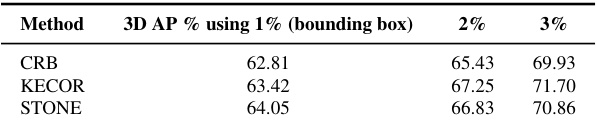

🔼 This table compares different active learning methods on the KITTI validation set for 3D object detection, using PV-RCNN as the backbone. Performance is measured by 3D Average Precision (AP) across difficulty levels (EASY, MODERATE, HARD) and object categories (Car, Pedestrian, Cyclist). The results show the 3D AP achieved by each method when only 1% of the bounding boxes are queried for labeling.

Table 1: 3D AP(%) scores on KITTI validation set with 1% queried bounding boxes, using PV-RCNN as the backbone detection model.

In-depth insights#

Submodular Active 3D#

The concept of “Submodular Active 3D” combines submodularity, a mathematical property signifying diminishing returns, with active learning techniques applied to three-dimensional data. Submodularity is leveraged to efficiently select informative samples for annotation from a large dataset, crucial in 3D object detection where labeled data is scarce and expensive to obtain. Active learning helps minimize annotation effort by iteratively selecting the most valuable samples to label. In the 3D context, this could involve selecting point clouds or regions representing objects with high uncertainty or those that improve model diversity. The submodular framework ensures that sample selection efficiently balances the trade-off between data coverage and redundancy, resulting in more accurate and cost-effective training of 3D object detectors. The combination of submodularity and active learning is a powerful approach to address the challenges of 3D data annotation, making it a promising area of research.
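The diminishing-returns property described above can be made concrete with a small sketch. It assumes a facility-location objective over an RBF similarity matrix and a plain greedy maximizer; both are standard textbook choices used here for illustration and are not STONE's actual objective. The names `facility_location` and `greedy_select` are hypothetical.

```python
import numpy as np

def facility_location(sim, subset):
    """f(S) = sum over all items i of max_{j in S} sim[i, j], with f(empty) = 0."""
    if not subset:
        return 0.0
    return float(sim[:, list(subset)].max(axis=1).sum())

def greedy_select(sim, budget):
    """Repeatedly add the item with the largest marginal gain in f."""
    selected, gains = [], []
    for _ in range(budget):
        base = facility_location(sim, selected)
        candidates = [j for j in range(sim.shape[0]) if j not in selected]
        best = max(
            candidates,
            key=lambda j: facility_location(sim, selected + [j]) - base,
        )
        gains.append(facility_location(sim, selected + [best]) - base)
        selected.append(best)
    return selected, gains

# Toy stand-in for per-sample features (assumption: 30 samples, 8 dimensions).
rng = np.random.default_rng(0)
features = rng.normal(size=(30, 8))

# Non-negative RBF similarity, so the objective is monotone submodular.
sq_dists = ((features[:, None, :] - features[None, :, :]) ** 2).sum(axis=-1)
sim = np.exp(-sq_dists / sq_dists.mean())

selected, gains = greedy_select(sim, budget=5)
print("selected indices:", selected)
print("marginal gains:", np.round(gains, 3))
```

Because the objective is submodular, the marginal gains printed for successive greedy picks never increase: each additional sample covers less new ground than the one before it.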

STONE Framework#

The STONE framework, introduced for active 3D object detection, uses submodular optimization to reduce the cost of data labeling. To address data imbalance and the need for diverse data coverage, STONE leverages submodular functions to select informative point clouds. Its two-stage algorithm first selects point clouds with diverse gradients and then chooses those that balance the label distribution, while accounting for varying difficulty levels. The framework reports state-of-the-art performance on the KITTI and Waymo Open benchmarks, which supports its effectiveness and generalizability. The use of submodularity keeps selection computationally efficient while achieving high accuracy, making it a valuable contribution to the field of active learning.

Gradient-Based Selection#

Gradient-based selection methods leverage the gradients of a model’s loss function to identify informative data points for active learning. The core idea is that data points with high gradients contribute significantly to model updates, thus suggesting their importance for improving model accuracy. A key advantage is that this approach directly incorporates model behavior, providing a more targeted selection compared to uncertainty-based sampling that relies only on prediction confidence. However, gradient-based methods can be computationally expensive since they often require backpropagation through the entire model for each data point. Moreover, class imbalance can significantly bias the gradients, making certain classes appear more important than others. Sophisticated techniques like gradient weighting or class-balanced loss functions are crucial to mitigate this issue and ensure a representative selection across all classes. Therefore, careful consideration of computational cost and class imbalance is necessary for effectively using gradient-based selection in active learning.
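As a rough illustration of the idea, the sketch below computes per-sample gradient embeddings for a linear softmax classifier. It makes several simplifying assumptions that differ from STONE: hypothetical labels are taken as the argmax prediction (a BADGE-style shortcut, rather than Monte Carlo dropout), the loss is plain cross-entropy rather than a detection loss, and only last-layer gradients are used, which sidesteps backpropagation through the whole model. The function names are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def last_layer_grad_embeddings(hidden, weights):
    """Gradient of cross-entropy w.r.t. the last-layer weights, per sample.

    hidden:  (n, d) penultimate-layer features
    weights: (d, c) last-layer weight matrix
    Returns an (n, d * c) array; row i is the flattened outer product
    hidden[i] x (probs[i] - onehot(pseudo_label[i])).
    """
    probs = softmax(hidden @ weights)
    pseudo_labels = probs.argmax(axis=1)                # hypothetical labels
    onehot = np.eye(weights.shape[1])[pseudo_labels]
    residual = probs - onehot                           # dLoss / dLogits
    grads = hidden[:, :, None] * residual[:, None, :]   # (n, d, c)
    return grads.reshape(len(hidden), -1)

# Toy inputs (assumption: 6 samples, 4 features, 3 classes).
rng = np.random.default_rng(1)
hidden = rng.normal(size=(6, 4))
weights = rng.normal(size=(4, 3))

embeddings = last_layer_grad_embeddings(hidden, weights)
grad_norms = np.linalg.norm(embeddings, axis=1)
print("embedding shape:", embeddings.shape)
print("gradient norms:", np.round(grad_norms, 3))
```

Samples the model is unsure about produce larger residuals and therefore larger gradient norms, while the embedding direction captures which parameters a sample would update, which is what a diversity-oriented selector can exploit.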

Class Balancing#

Class imbalance is a critical challenge in active 3D object detection, where some object categories are far more prevalent in the data than others. Ignoring this imbalance can lead to biased models that perform poorly on under-represented classes. Strategies to address this include hierarchical labeling to ensure balanced representation within individual point clouds, but this doesn’t guarantee balance across all labeled data. The proposed STONE framework uses a submodular optimization approach to tackle this issue directly by selecting unlabeled samples that improve label distribution balance. This involves selecting samples to not just maximize coverage of the data space but also to specifically reduce the existing label imbalance. A key contribution of STONE is its explicit focus on balancing the label distribution, a feature absent in many prior active learning methods for 3D object detection that only consider uncertainty or diversity, making it a significant advancement in addressing this pervasive issue.
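One way to picture balance-aware selection is a greedy loop that adds the candidate whose estimated labels most increase the entropy of the cumulative label distribution. This is only a heuristic sketch under stated assumptions: the per-candidate class counts are assumed to come from model predictions, the entropy objective is an illustrative stand-in rather than STONE's actual submodular function, and `label_entropy` and `greedy_balance` are hypothetical names.

```python
import numpy as np

def label_entropy(counts):
    """Shannon entropy (nats) of the class distribution given by counts."""
    total = counts.sum()
    if total == 0:
        return 0.0
    p = counts[counts > 0] / total
    return float(-(p * np.log(p)).sum())

def greedy_balance(labeled_counts, candidate_counts, budget):
    """Greedily pick candidates that maximize entropy of the cumulative counts."""
    current = np.asarray(labeled_counts, dtype=float).copy()
    cands = np.asarray(candidate_counts, dtype=float)
    remaining = list(range(len(cands)))
    chosen = []
    for _ in range(min(budget, len(remaining))):
        best = max(remaining, key=lambda i: label_entropy(current + cands[i]))
        chosen.append(best)
        remaining.remove(best)
        current = current + cands[best]
    return chosen, current

# Toy example: labeled set dominated by the first class
# (assumed class order: Car, Pedestrian, Cyclist).
labeled = np.array([90.0, 5.0, 5.0])
candidates = [
    [10, 0, 0],  # cars only
    [2, 6, 1],
    [1, 1, 7],
    [8, 1, 0],   # mostly cars
    [0, 5, 5],
]
chosen, final_counts = greedy_balance(labeled, candidates, budget=2)
print("chosen candidates:", chosen)
print("entropy before:", round(label_entropy(labeled), 3))
print("entropy after:", round(label_entropy(final_counts), 3))
```

In this toy setup the car-heavy candidates are skipped in favor of point clouds rich in pedestrians and cyclists, so the entropy of the labeled set rises after selection.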

Future Work#

Future research directions stemming from this active 3D object detection work could explore more sophisticated submodular functions to better capture the complexities of point cloud data. Investigating alternative optimization strategies beyond greedy algorithms, such as those leveraging parallel processing or distributed computation, could significantly enhance scalability for very large datasets. Incorporating uncertainty modeling techniques directly into the submodular framework might refine sample selection. Moreover, adapting the framework to handle dynamic environments and streaming LiDAR data is crucial for real-world applications. Finally, extending the approach to other modalities, such as combining LiDAR with camera data for multi-modal active learning, would enrich the framework’s capabilities and potentially improve accuracy and robustness.

More visual insights#

More on figures

🔼 This figure compares the cumulative label distribution entropy across active learning query rounds for three methods: STONE, CRB, and KECOR. Higher entropy corresponds to a more balanced label distribution. The comparison indicates that STONE maintains a more balanced label distribution than CRB and KECOR as the number of query rounds increases. This highlights STONE’s ability to address data imbalance, a key challenge in active 3D object detection.

Figure 2: The proposed STONE method more effectively maintains the balance of label distribution in active 3D object detection. The plots show the cumulative label distribution entropy values on KITTI [15] validation set split with PV-RCNN [49].

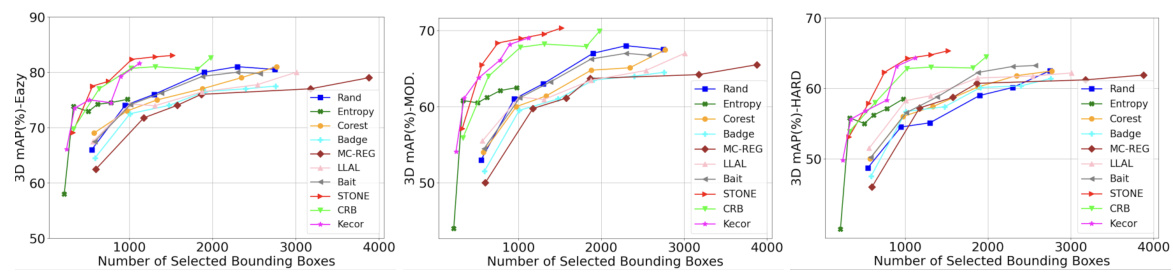

🔼 This figure shows the performance comparison of various active learning (AL) baselines and the proposed STONE method on the KITTI validation dataset using the PV-RCNN backbone. The x-axis represents the number of selected bounding boxes, while the y-axis shows the 3D mean Average Precision (mAP). The figure is divided into three subplots, one for each difficulty level (EASY, MODERATE, HARD) in the KITTI dataset. Each line represents a different AL method, allowing for a visual comparison of their performance across different numbers of selected bounding boxes and difficulty levels.

Figure 3: 3D mAP (%) of AL baselines on the KITTI validation set with PV-RCNN.

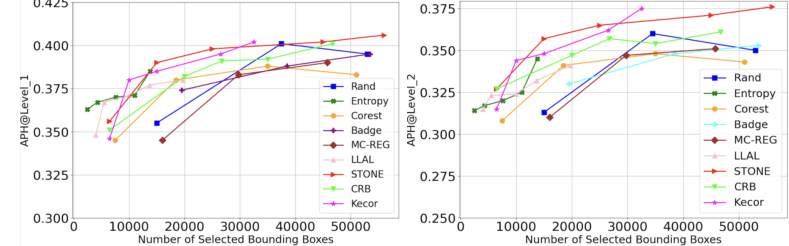

🔼 This figure compares different active learning (AL) baselines on the Waymo Open validation set using PV-RCNN as the backbone detection model. The x-axis represents the number of selected bounding boxes, and the y-axis shows detection performance, reported as APH (average precision weighted by heading accuracy), for the two Waymo difficulty levels: Level 1 and Level 2. The plot compares STONE against the baselines Random, Entropy, Coreset, Badge, MC-REG, LLAL, CRB, and KECOR, and shows STONE outperforming them in active 3D object detection.

Figure 4: 3D mAP (%) of AL baselines on the Waymo Open validation set with PV-RCNN.

More on tables

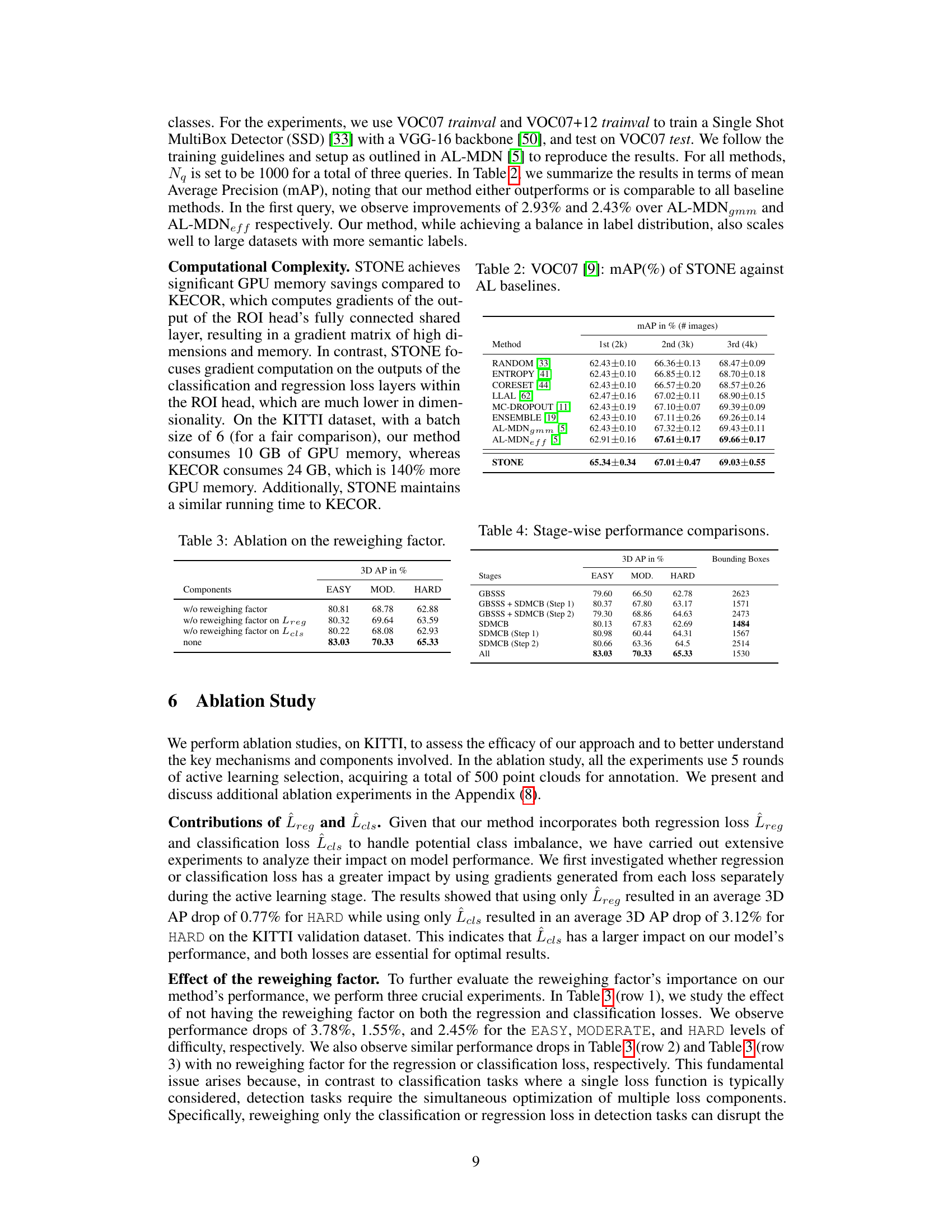

🔼 This table presents the mean Average Precision (mAP) results of the proposed STONE method and several active learning baselines on the PASCAL VOC 2007 dataset for object detection. The results are shown for three query rounds (1st, 2nd and 3rd), each with a different number of labeled images. The table showcases the performance of STONE compared to other state-of-the-art methods in a 2D object detection task, highlighting its competitiveness even on a different benchmark dataset.

Table 2: VOC07 [9]: mAP(%) of STONE against AL baselines.

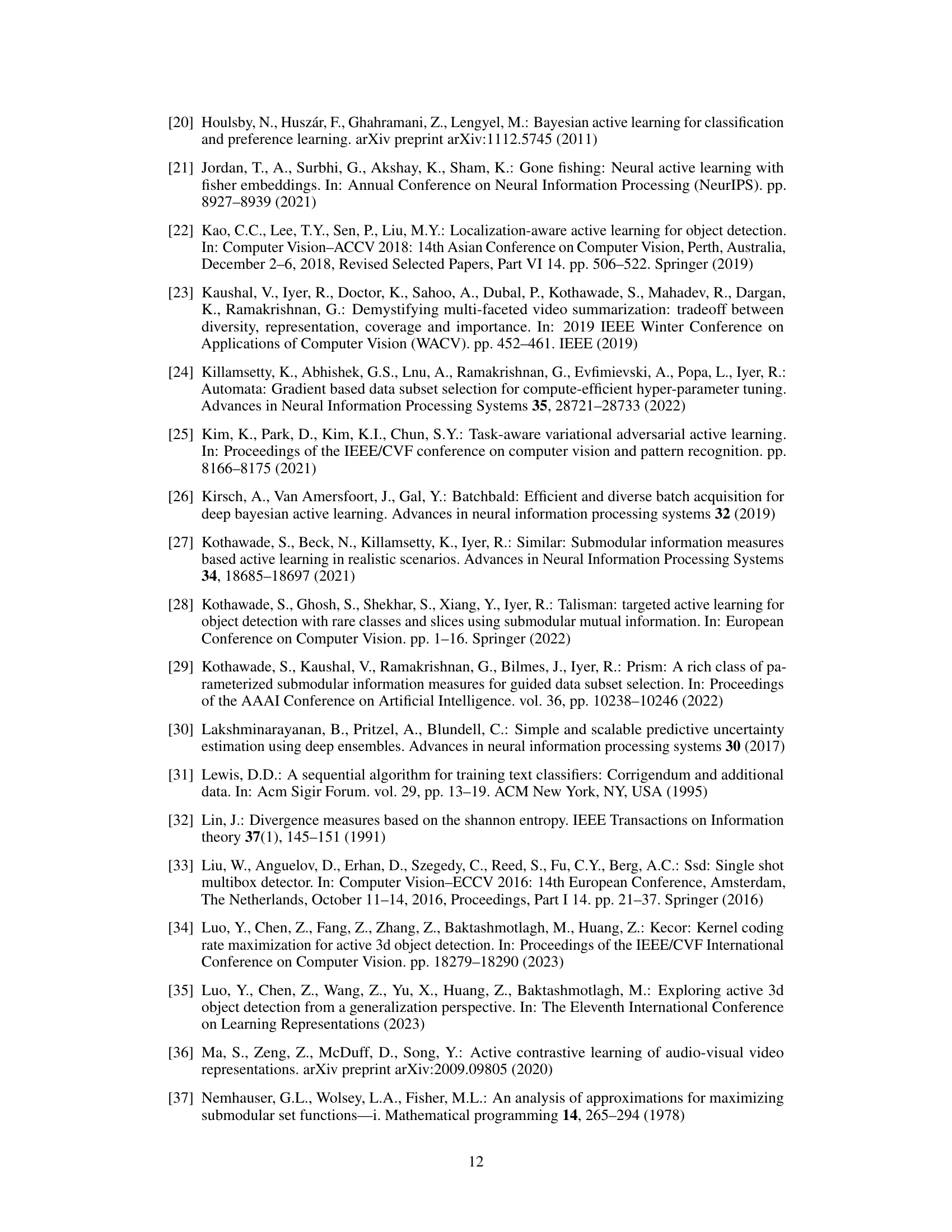

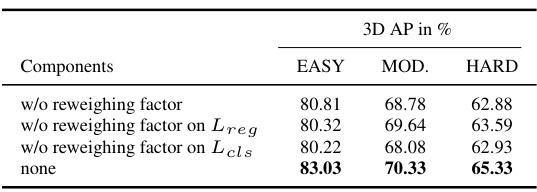

🔼 This table presents the results of ablation experiments conducted to evaluate the impact of the reweighing factors on the performance of the proposed method. It shows the 3D Average Precision (AP) scores obtained across different difficulty levels (EASY, MODERATE, HARD) when using the proposed method with different configurations of reweighing factors. The ’none’ row indicates the performance when no reweighing factors are used. The other rows show the results obtained when removing the reweighing factors for either the regression loss (Lreg) or the classification loss (Lcls) or removing both.

Table 3: Ablation on the reweighing factor.

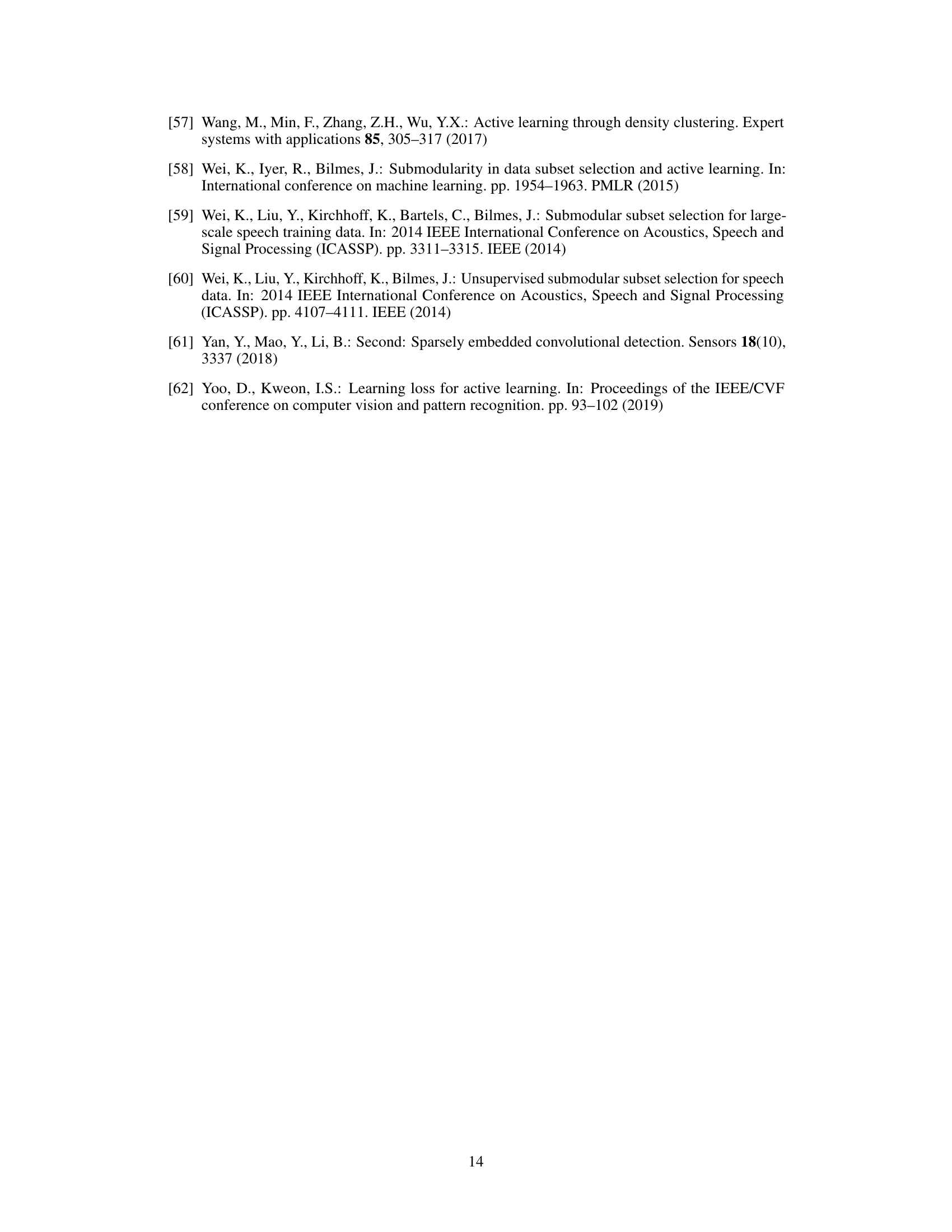

🔼 This table presents a breakdown of the performance of different stages within the STONE active learning framework. It shows the average precision (AP) for each difficulty level (EASY, MOD, HARD) and the total number of bounding boxes annotated at each stage. This allows for a quantitative analysis of the contribution of each stage to the overall performance improvement.

Table 4: Stage-wise performance comparisons.

🔼 This table presents the average precision (AP) scores for 3D object detection on the KITTI validation set. The results are broken down by difficulty level (EASY, MODERATE, HARD) and view (3D, BEV). The table compares the proposed STONE method against several baseline active learning methods, all using the SECOND model as the backbone detector. As in Table 1, 1% of the bounding boxes are queried.

Table 5: 3D mAP(%) scores on KITTI validation set with 1% queried bounding boxes, using SECOND [61] as the backbone detection model.

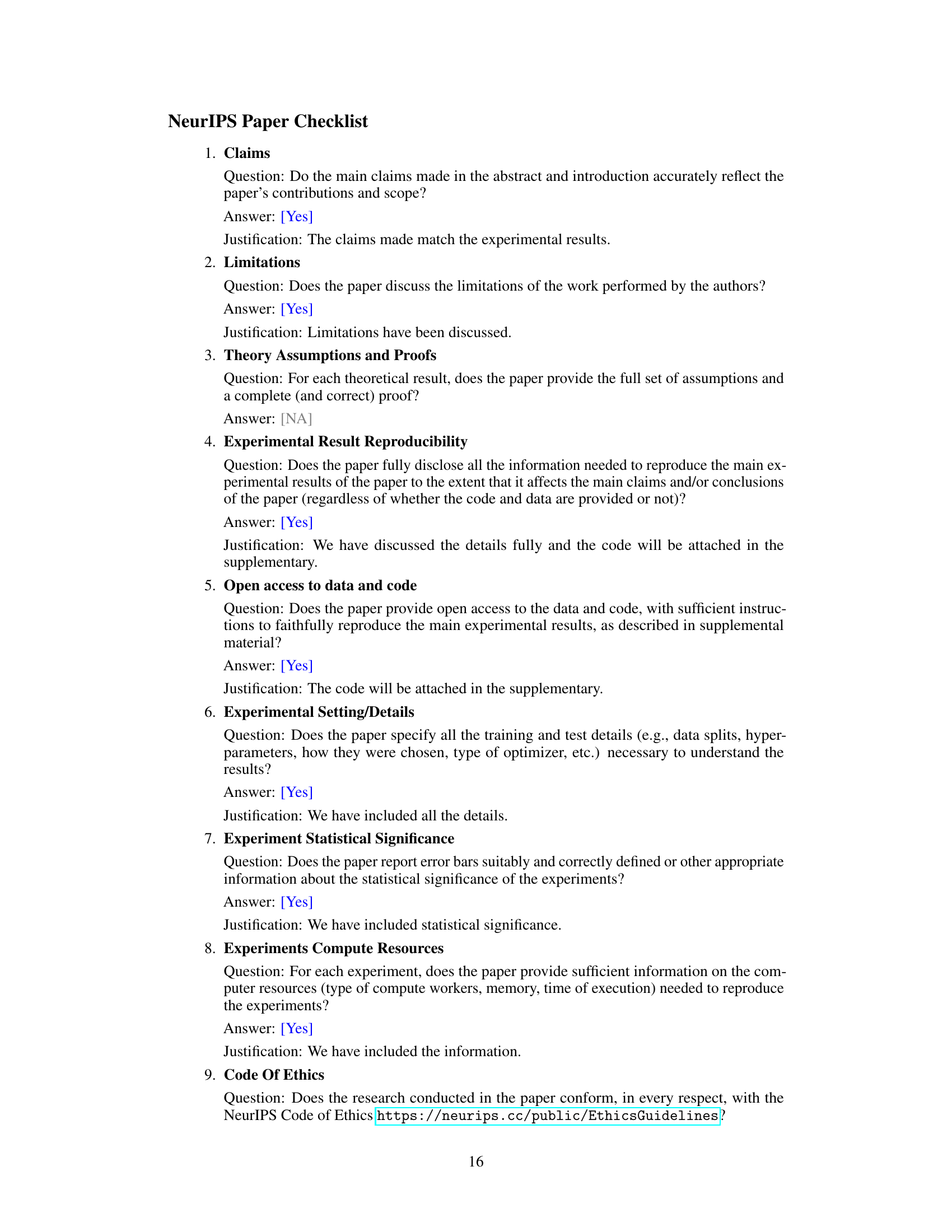

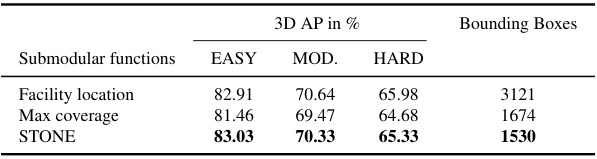

🔼 This table compares the performance of STONE when different submodular functions are used in the Gradient-Based Submodular Subset Selection (GBSSS) stage. It shows that the proposed STONE submodular function yields the highest 3D average precision (AP) while maintaining a low labeling budget, compared with the facility location and max coverage submodular functions.

Table 6: Impact of different submodular functions.

🔼 This table shows the sensitivity analysis of the model’s performance to different values of thresholds Γ₁ and Γ₂. The results are presented for all three difficulty levels (EASY, MODERATE, and HARD) and show that the performance is relatively stable across different combinations of Γ₁ and Γ₂.

Table 7: Sensitivity to thresholds Γ1, Γ2.

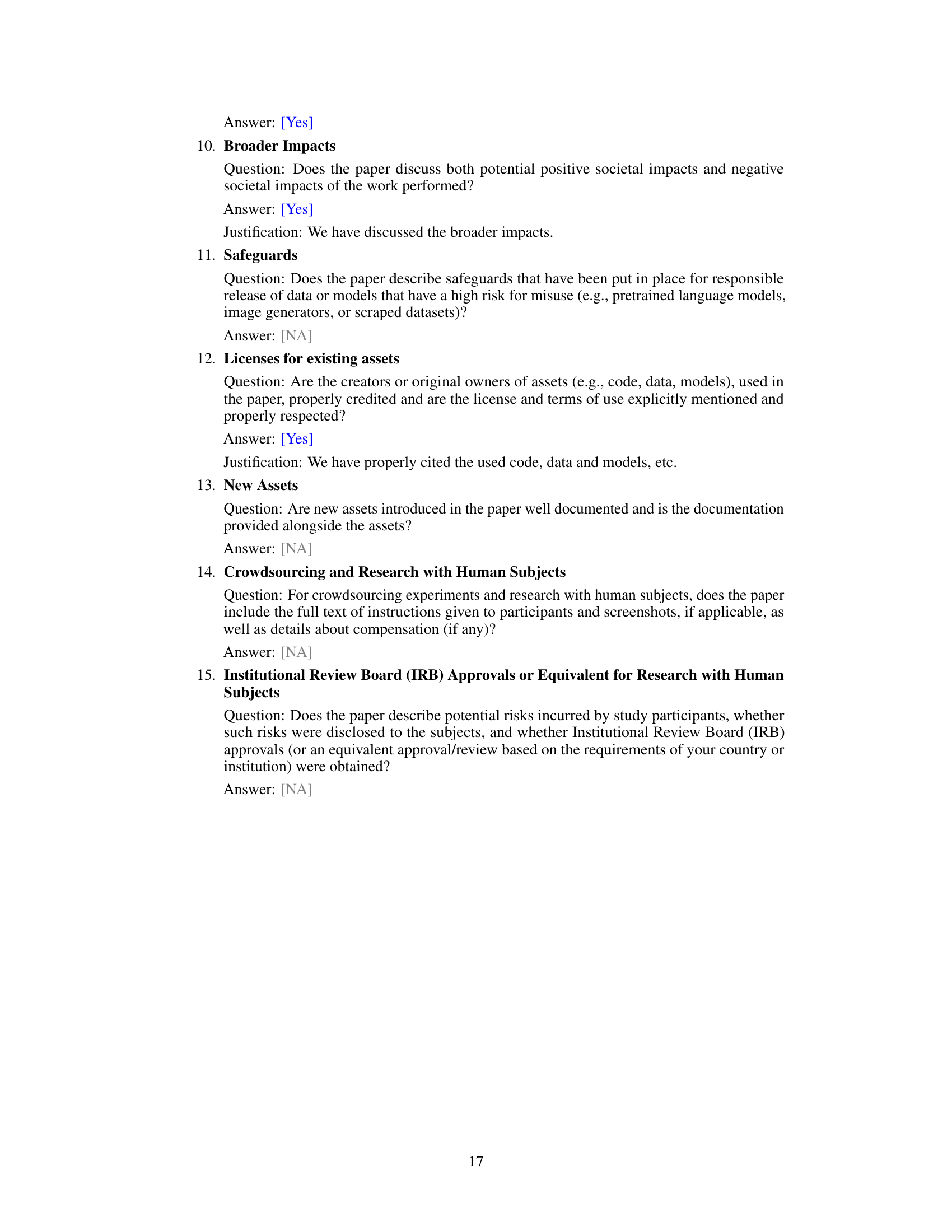

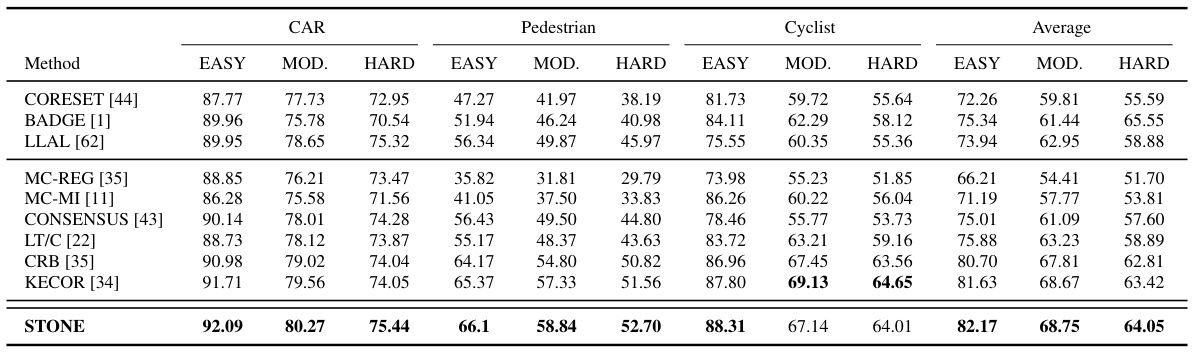

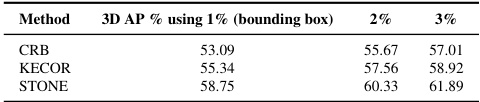

🔼 This table presents a comparison of the performance of the proposed STONE method against other active learning baselines on the KITTI validation dataset, specifically focusing on the ‘HARD’ difficulty level. The comparison is done using the 3D Average Precision (AP) metric, which measures the accuracy of 3D object detection. The results are shown for different percentages of labeled bounding boxes (1%, 2%, and 3%), providing insights into how the methods perform under different labeling budgets.

Table 8: Performance comparisons of STONE and AL baselines using 3D AP(%) scores on the KITTI validation set (HARD level) with PV-RCNN as the backbone architecture.

🔼 This table presents the 3D and BEV average precision (AP) scores achieved by different active learning methods on the KITTI validation set. The results are broken down by object class (car, pedestrian, cyclist) and difficulty level (easy, moderate, hard). The backbone detection model used is SECOND [61], and only 1% of the bounding boxes are queried. The table allows for a comparison of STONE’s performance against other state-of-the-art active learning methods.

Table 5: 3D mAP(%) scores on KITTI validation set with 1% queried bounding boxes, using SECOND [61] as the backbone detection model.

Full paper#