↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

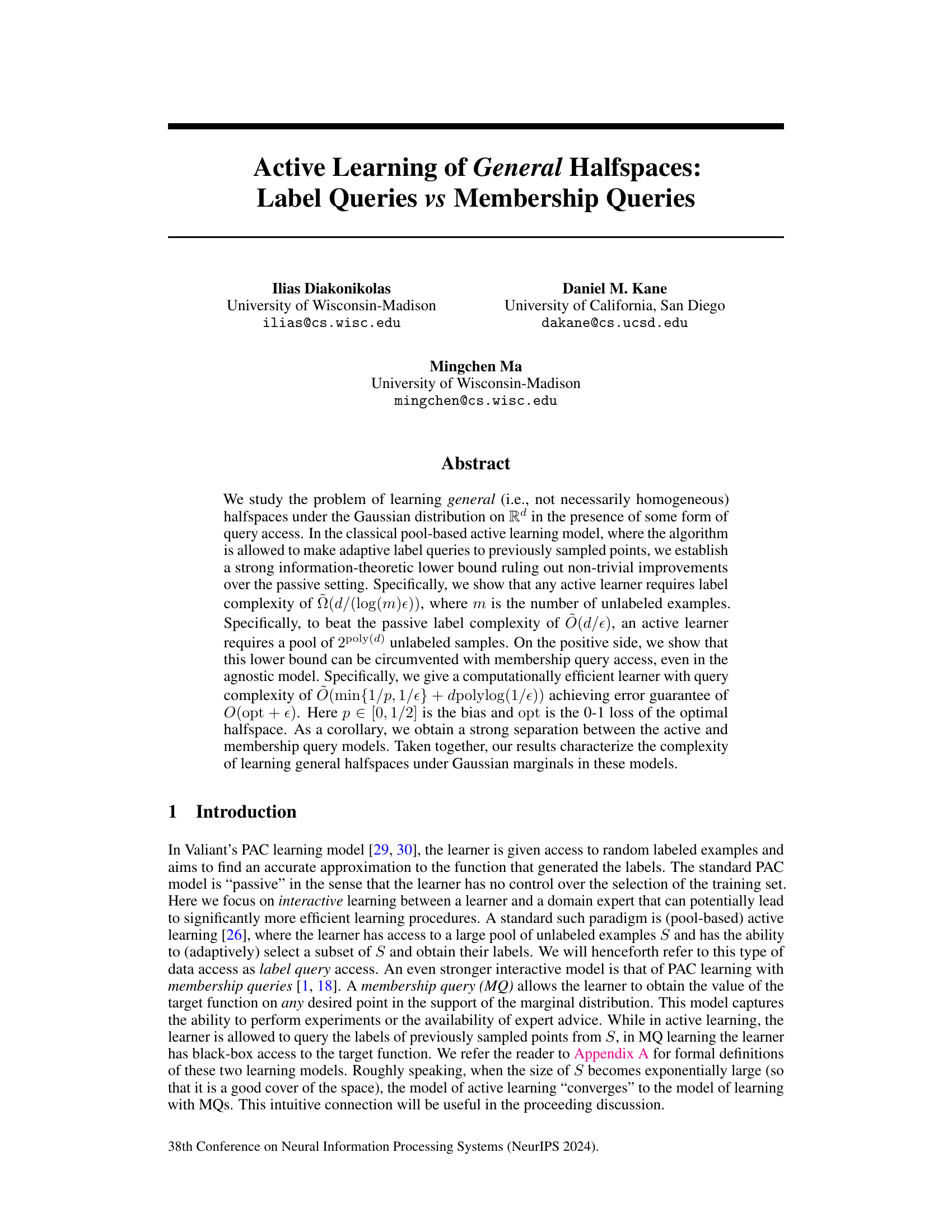

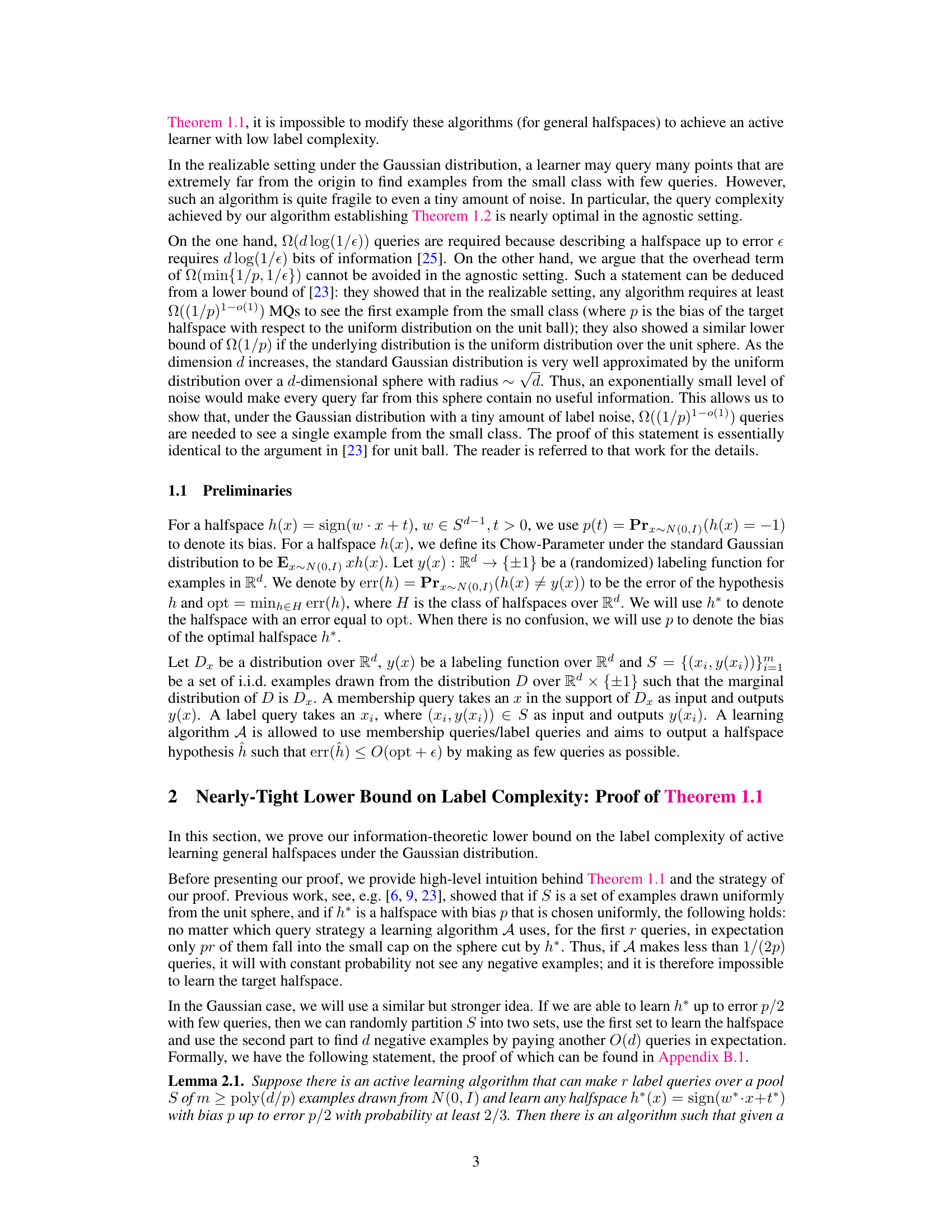

Learning general halfspaces, a fundamental problem in machine learning, is typically explored under the passive learning setting where the algorithm receives randomly labeled examples. Active learning aims to improve efficiency by letting the algorithm adaptively query the labels of selected examples. However, existing research reveals significant challenges in demonstrating substantial improvement from active learning for general halfspaces.

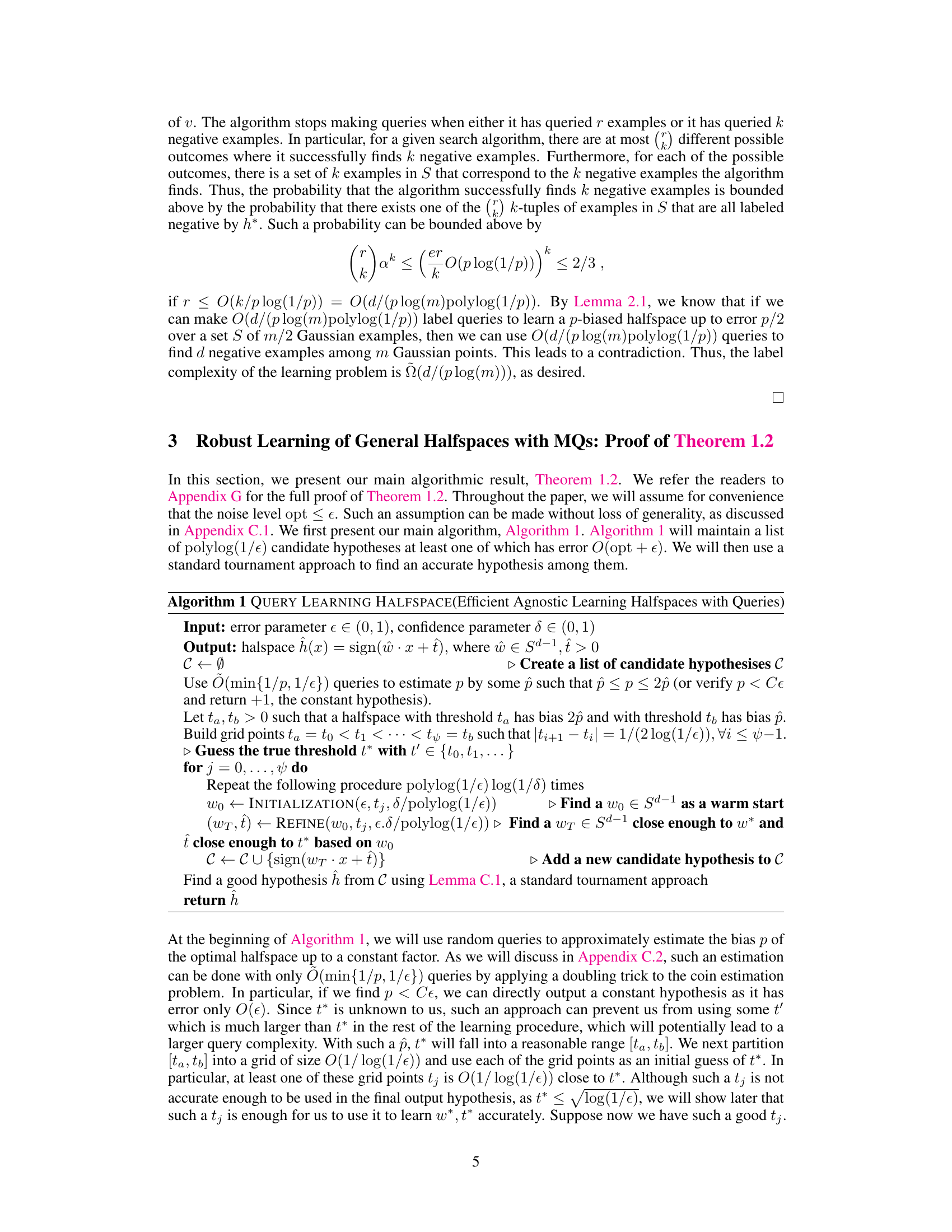

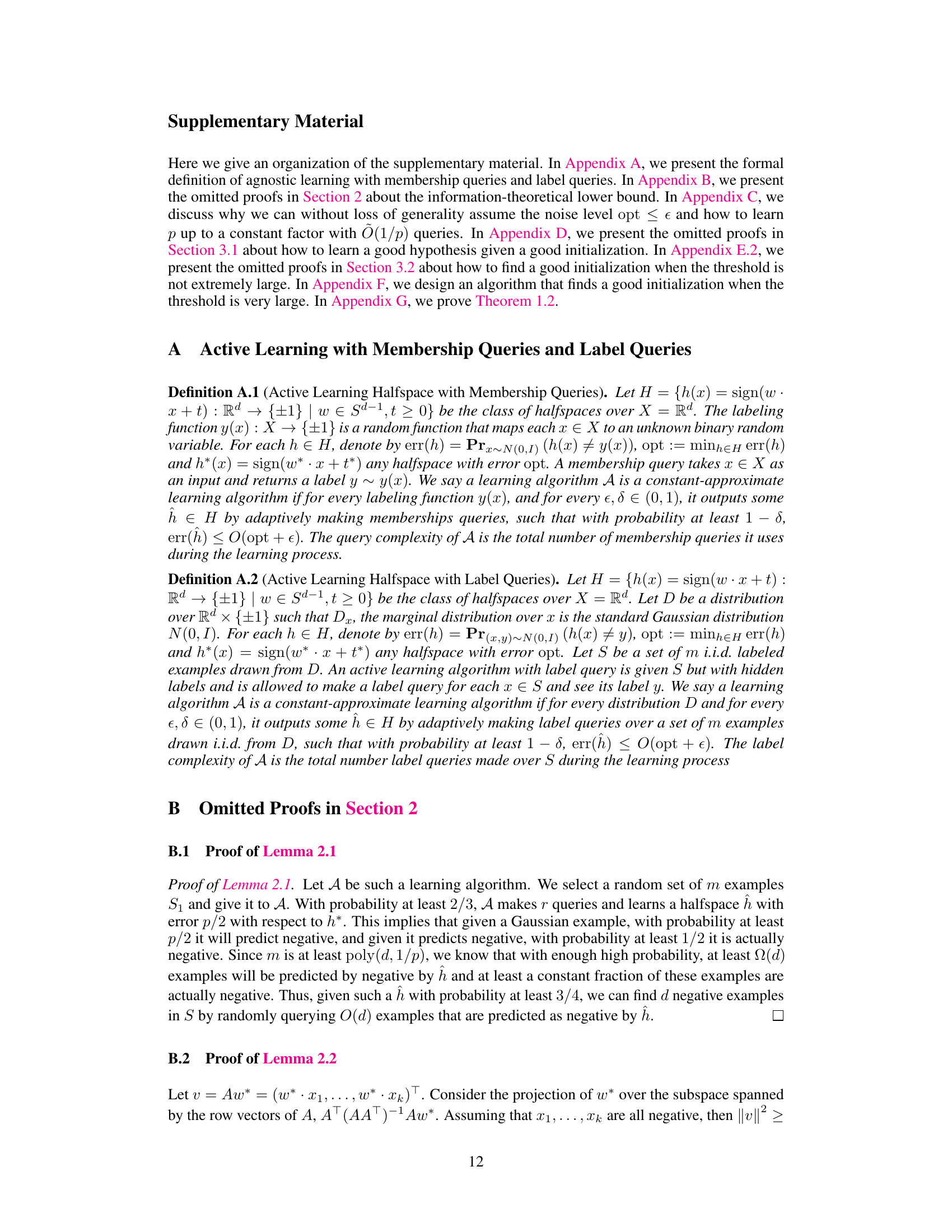

This paper addresses these challenges through a rigorous theoretical analysis. It proves that, under a Gaussian distribution, active learning offers no non-trivial improvement unless exponentially many unlabeled examples are provided. However, by using a stronger query model - membership queries, which allow querying labels for any point - the researchers devise a computationally efficient algorithm achieving a nearly optimal label complexity. This provides a strong separation between active learning and membership query approaches and clarifies the limitations and possibilities of interactive learning in this domain.

Key Takeaways#

Why does it matter?#

This paper is crucial because it resolves a long-standing open question regarding the complexity of active learning for general halfspaces. It provides strong theoretical lower bounds, demonstrating the limitations of active learning in this context. Furthermore, the paper offers a computationally efficient algorithm using membership queries, thereby establishing a strong separation between active and membership query learning models and opening up new avenues for research on interactive learning paradigms.

Visual Insights#

Full paper#