TL;DR#

Out-of-distribution (OoD) detection is crucial for reliable AI systems, ensuring AI models don’t make inaccurate predictions on unseen data. Existing methods, like PCA, often struggle with detecting OoD data because they rely on linear feature separation. This leads to poor performance as real-world data is often non-linear. This research tackles this issue.

The proposed solution utilizes Kernel PCA, a non-linear extension of PCA, to enhance OoD detection. They introduce novel cosine and cosine-Gaussian kernels designed specifically for this task, along with efficient feature mappings to improve computation speed, especially for large datasets. The results demonstrate that this approach outperforms existing methods in terms of both accuracy and efficiency, showcasing its potential for real-world AI applications.

Key Takeaways#

Why does it matter?#

This paper is important because it addresses the limitations of existing out-of-distribution (OoD) detection methods that rely on linear approaches like PCA. By introducing kernel PCA, the researchers provide a more effective way to detect OoD data, which is crucial for the reliability and safety of AI systems. The use of novel kernels and explicit feature mappings also improves the efficiency and scalability of the process. These improvements have a significant impact on the practical deployment of AI models, particularly in security-sensitive applications. The proposed method also opens new avenues for research in kernel methods and non-linear feature learning for various AI tasks.

Visual Insights#

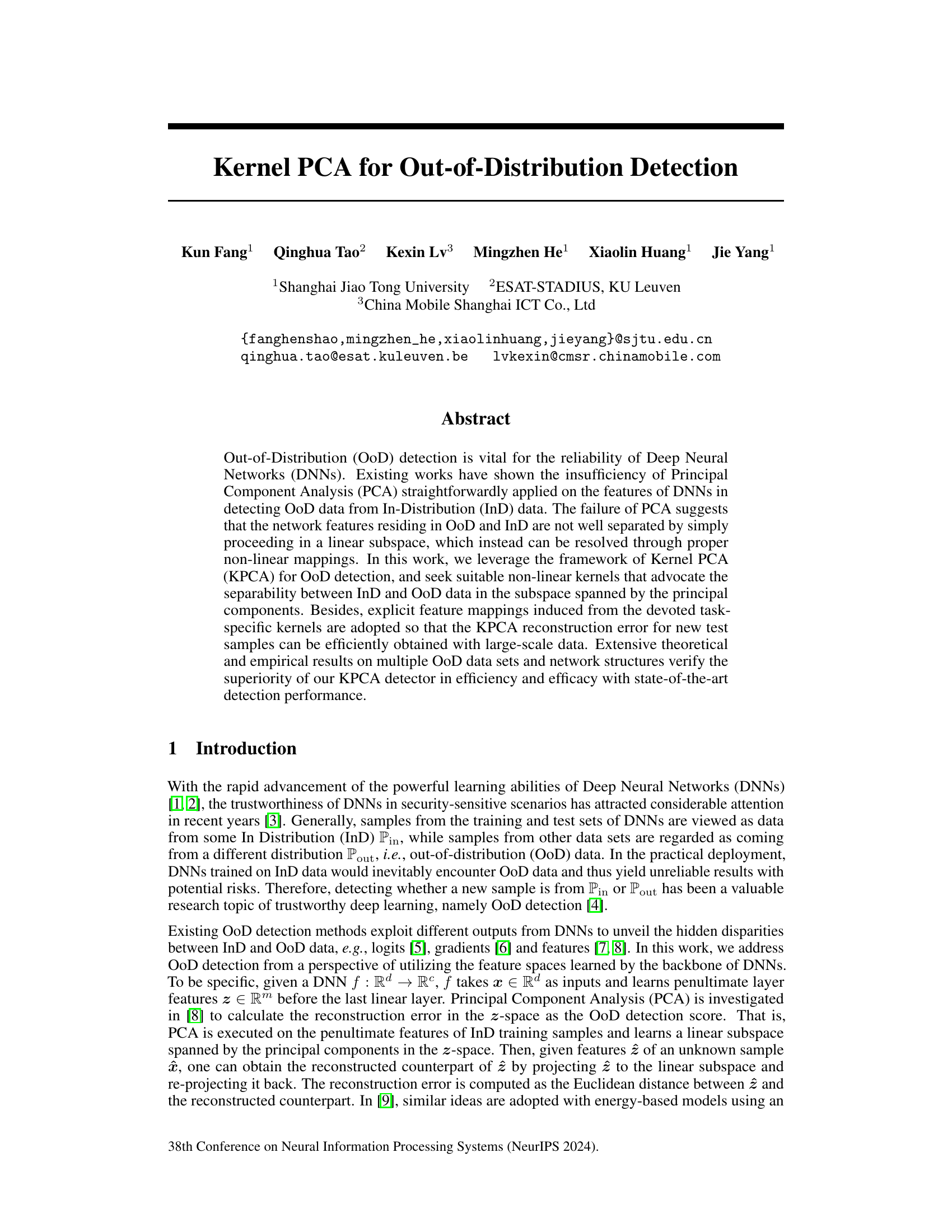

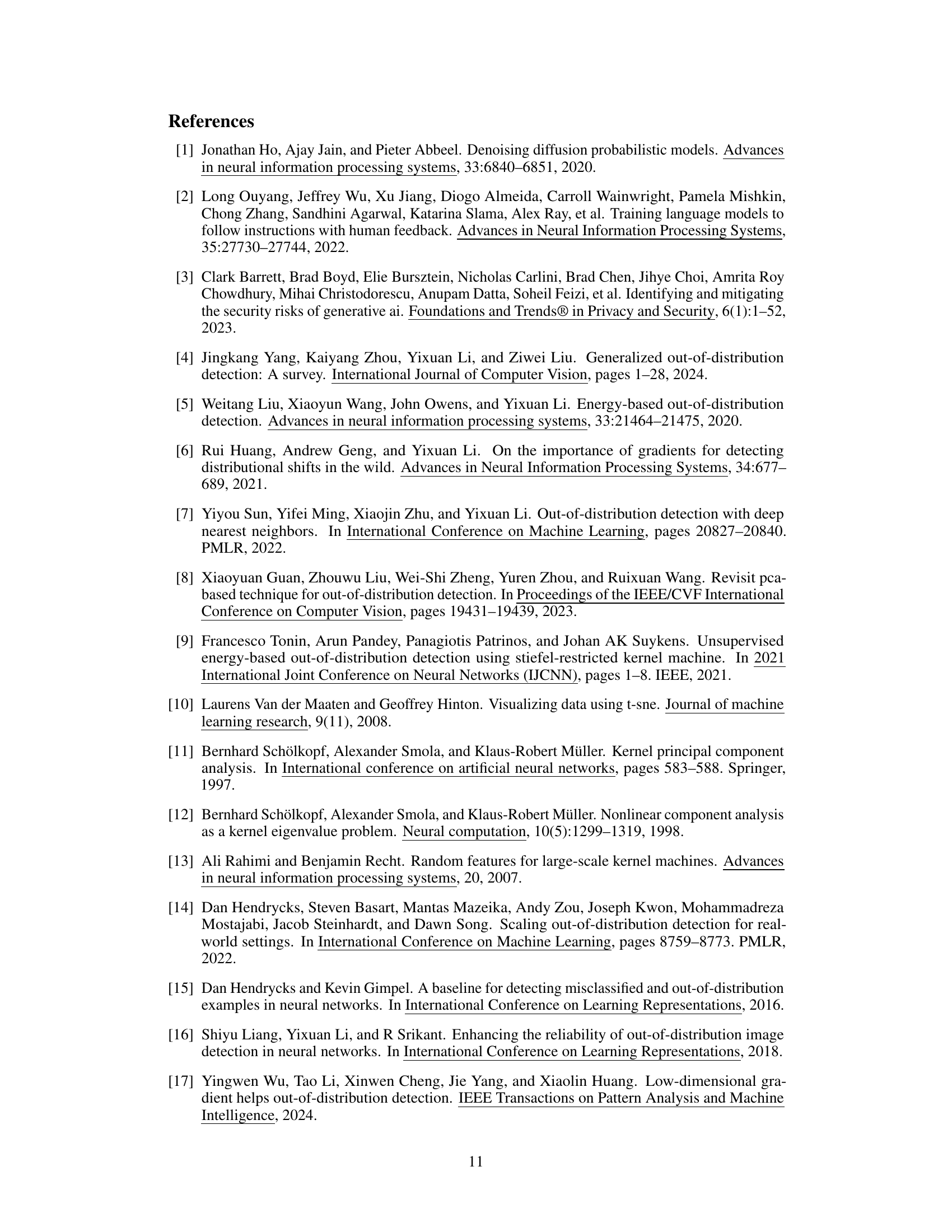

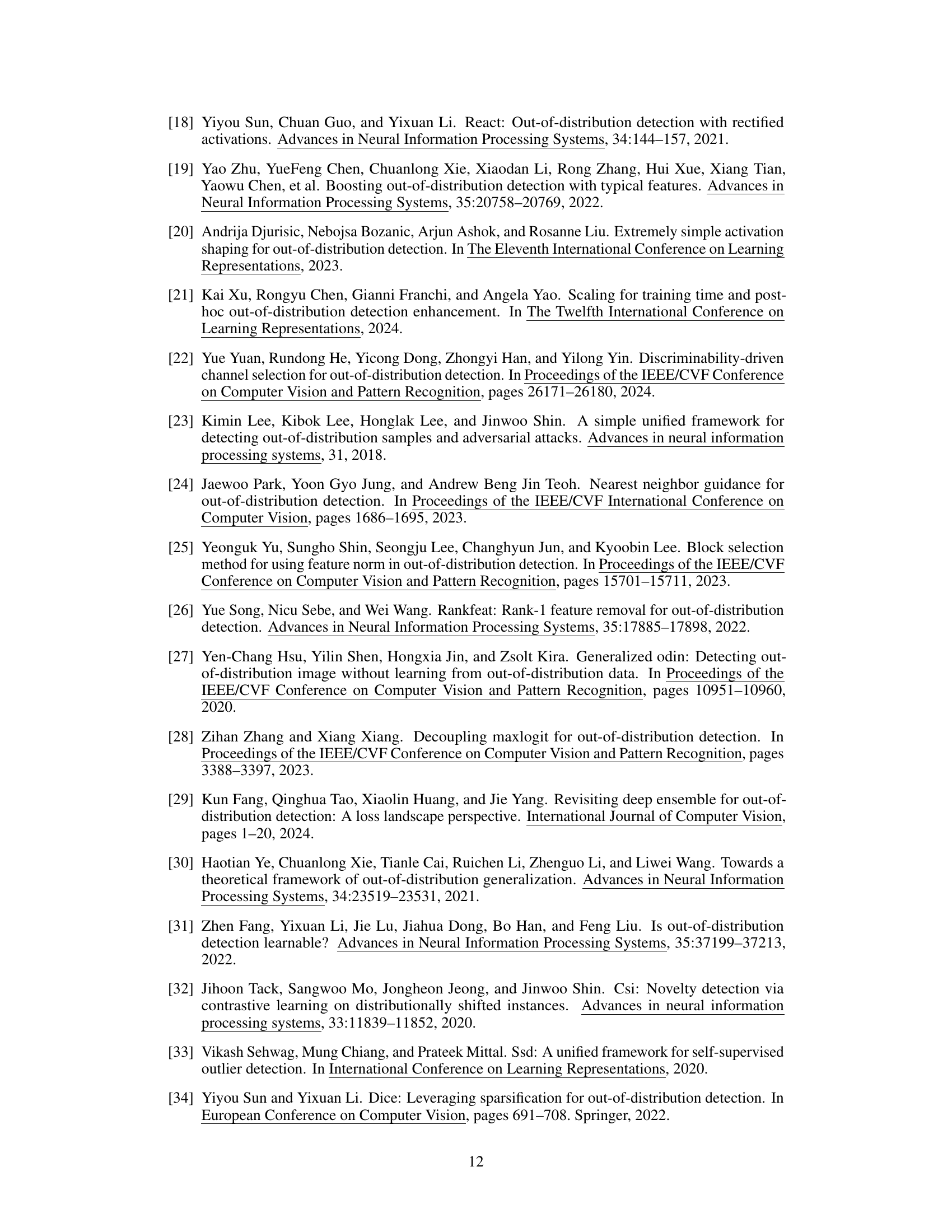

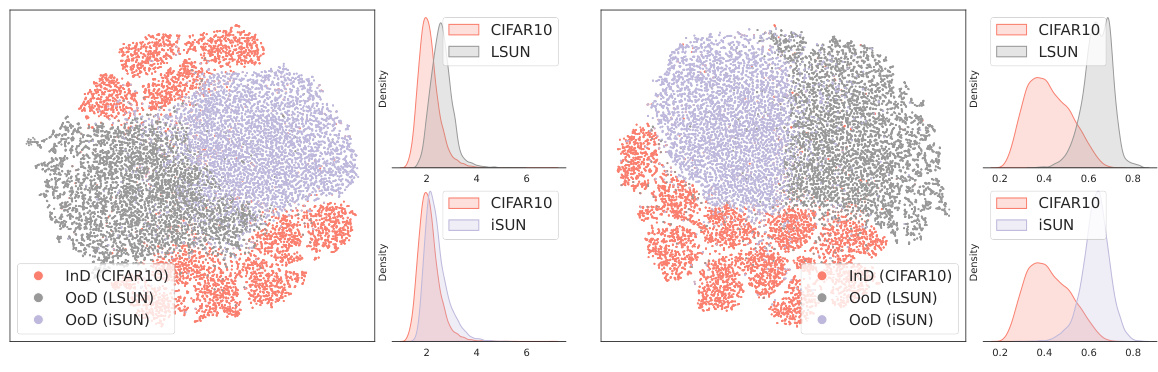

🔼 This figure uses t-SNE to visualize the original features and the features mapped by KPCA. The left panel shows the original features, where InD and OoD data are not well separated. The right panel shows the features after KPCA mapping, where InD and OoD data are clearly separated. The distributions of reconstruction errors for InD and OoD data are also shown, highlighting the improved separability achieved by KPCA.

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

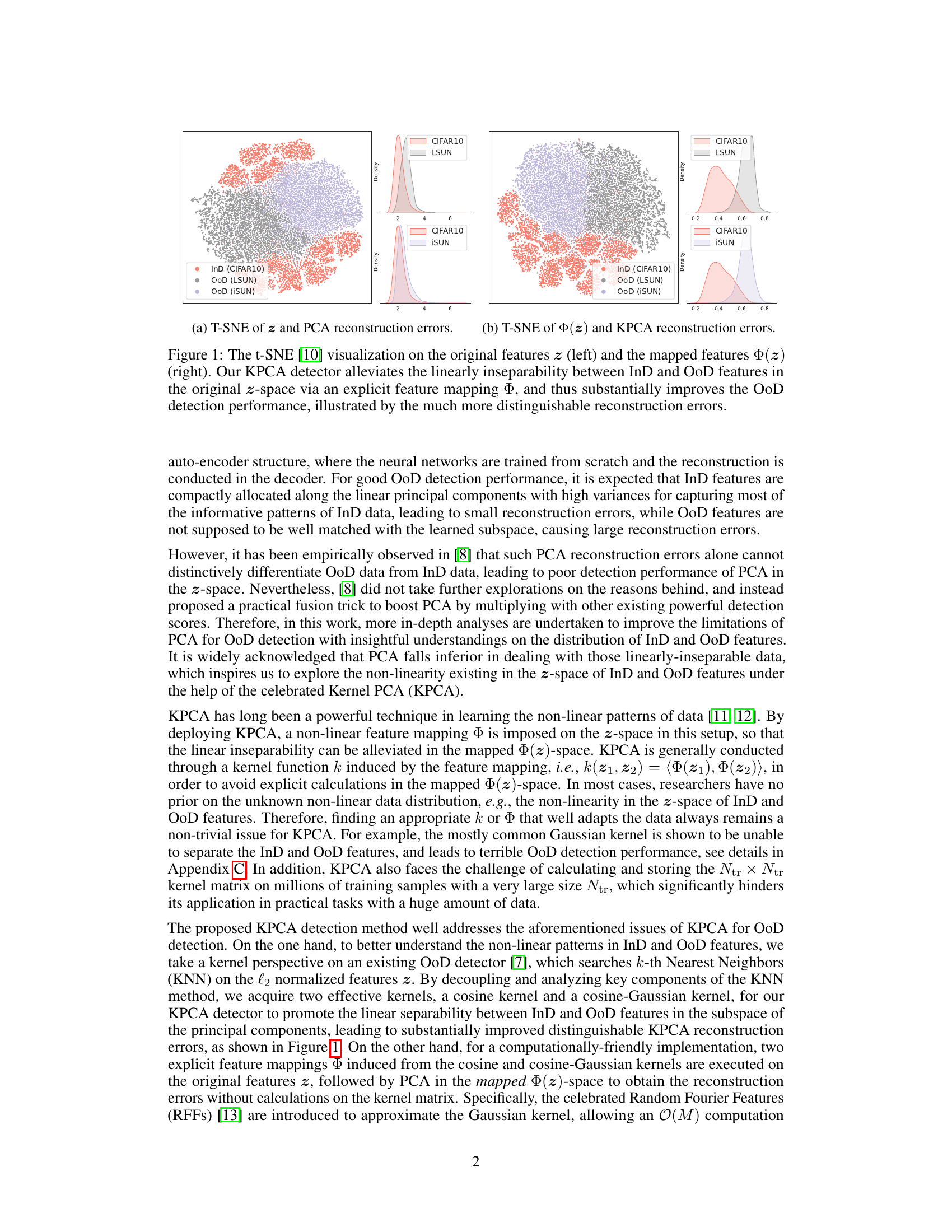

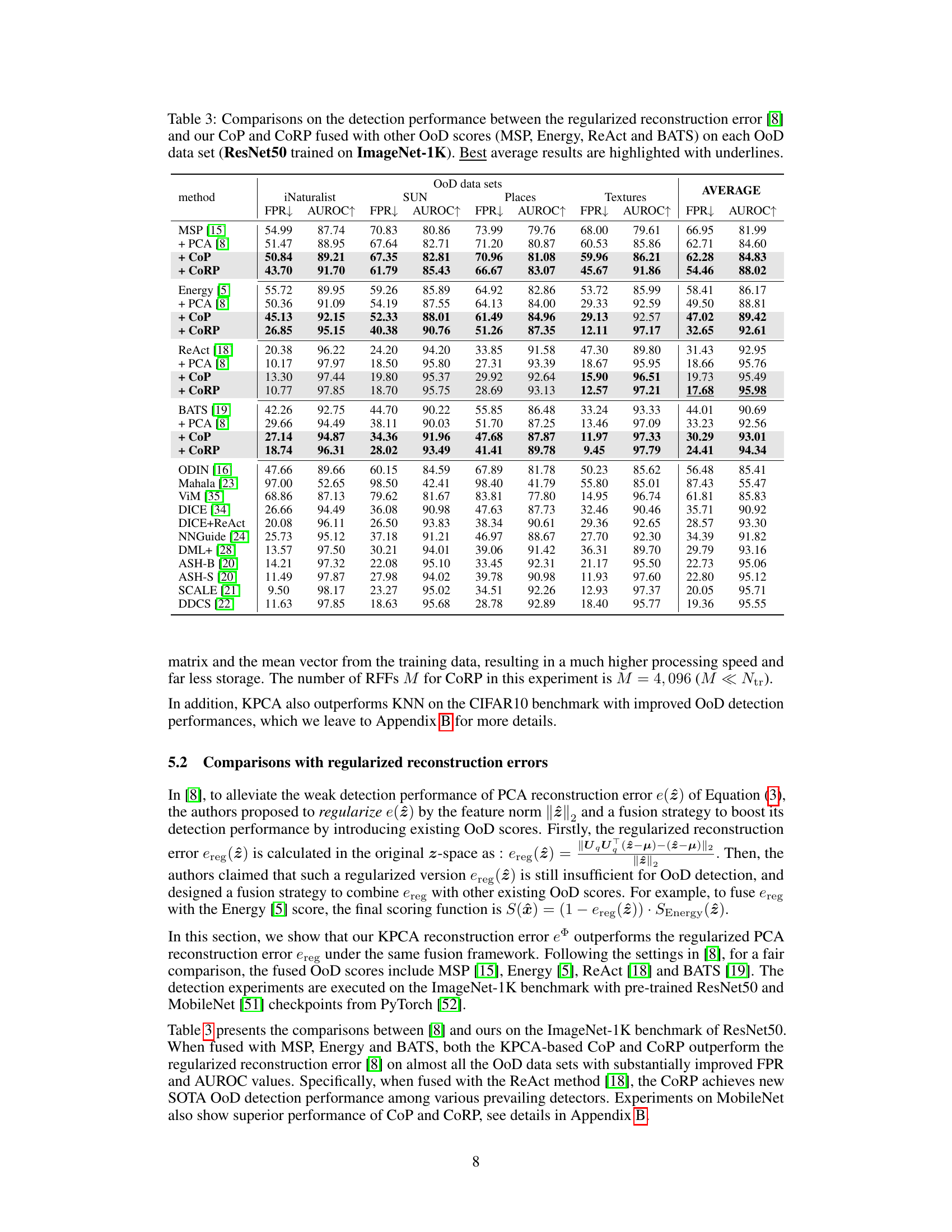

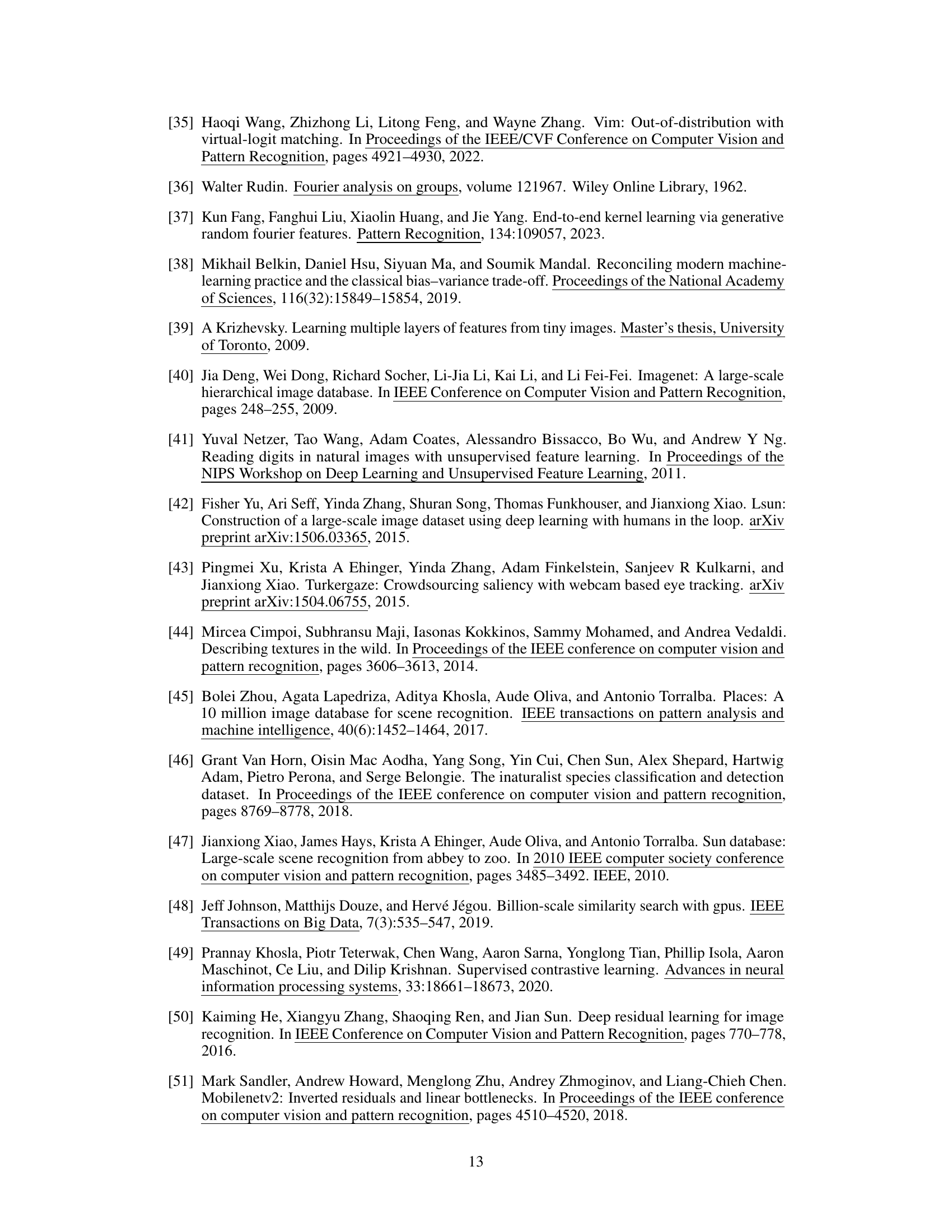

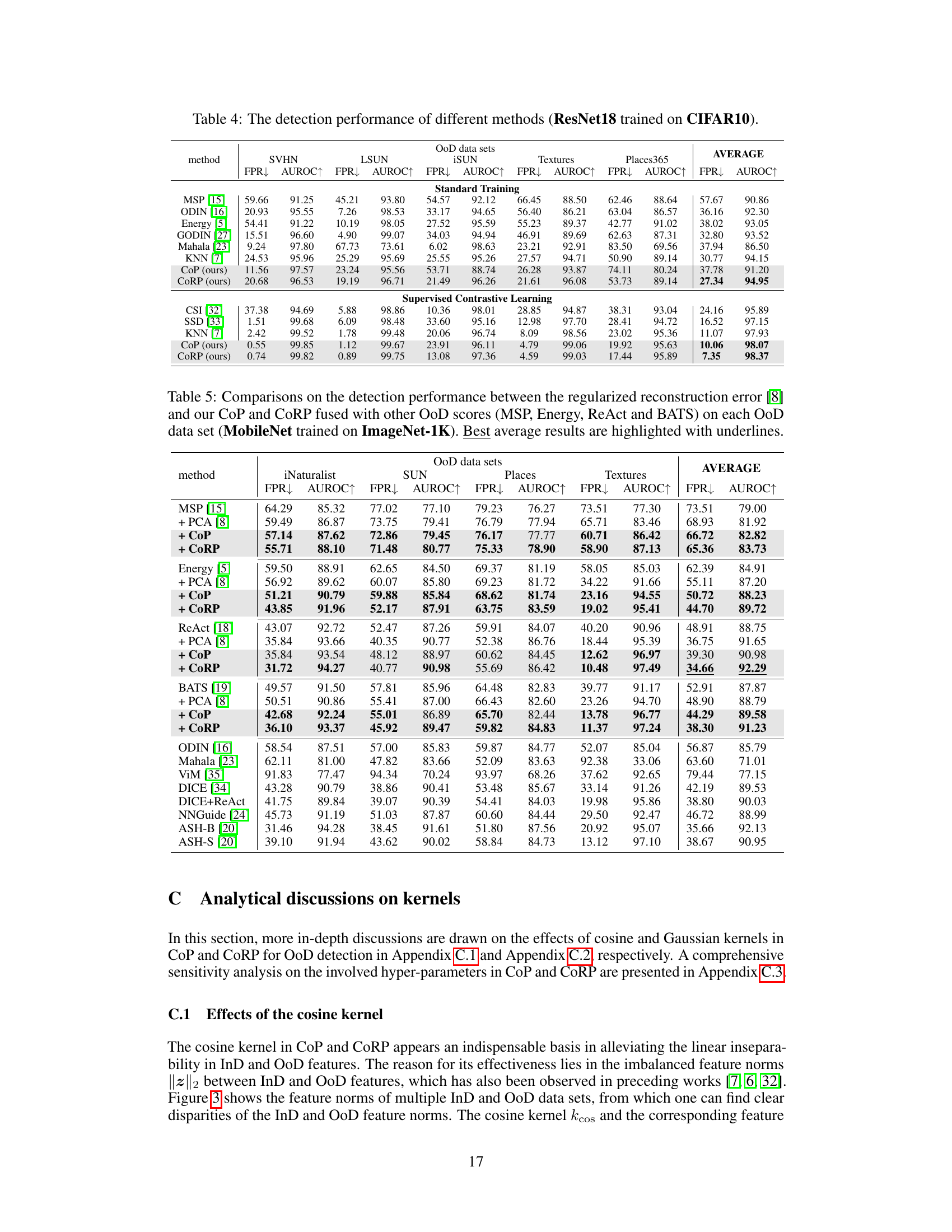

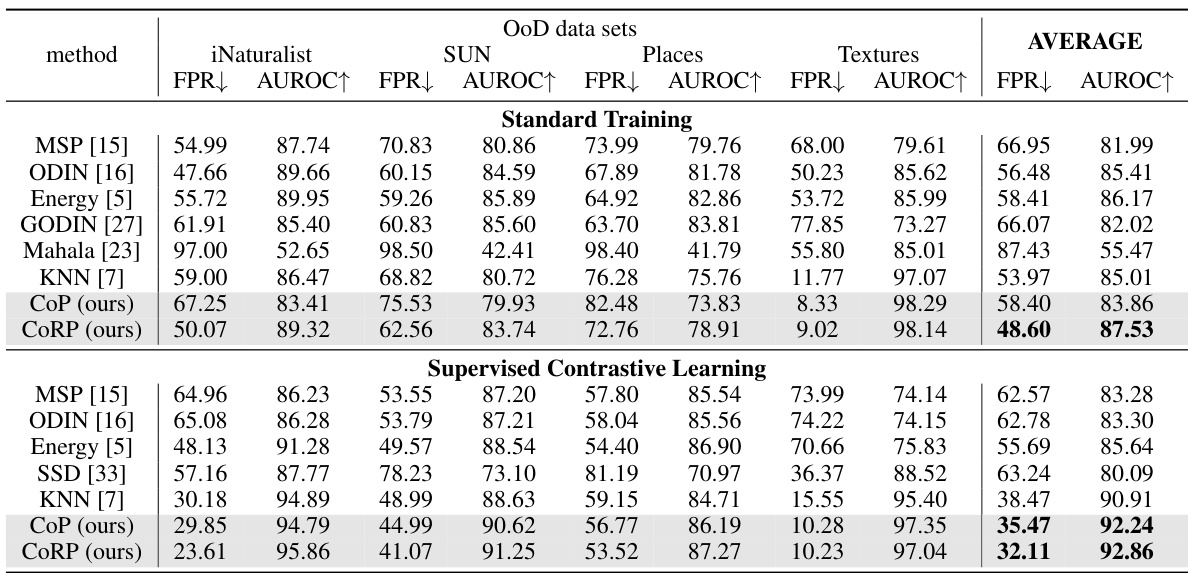

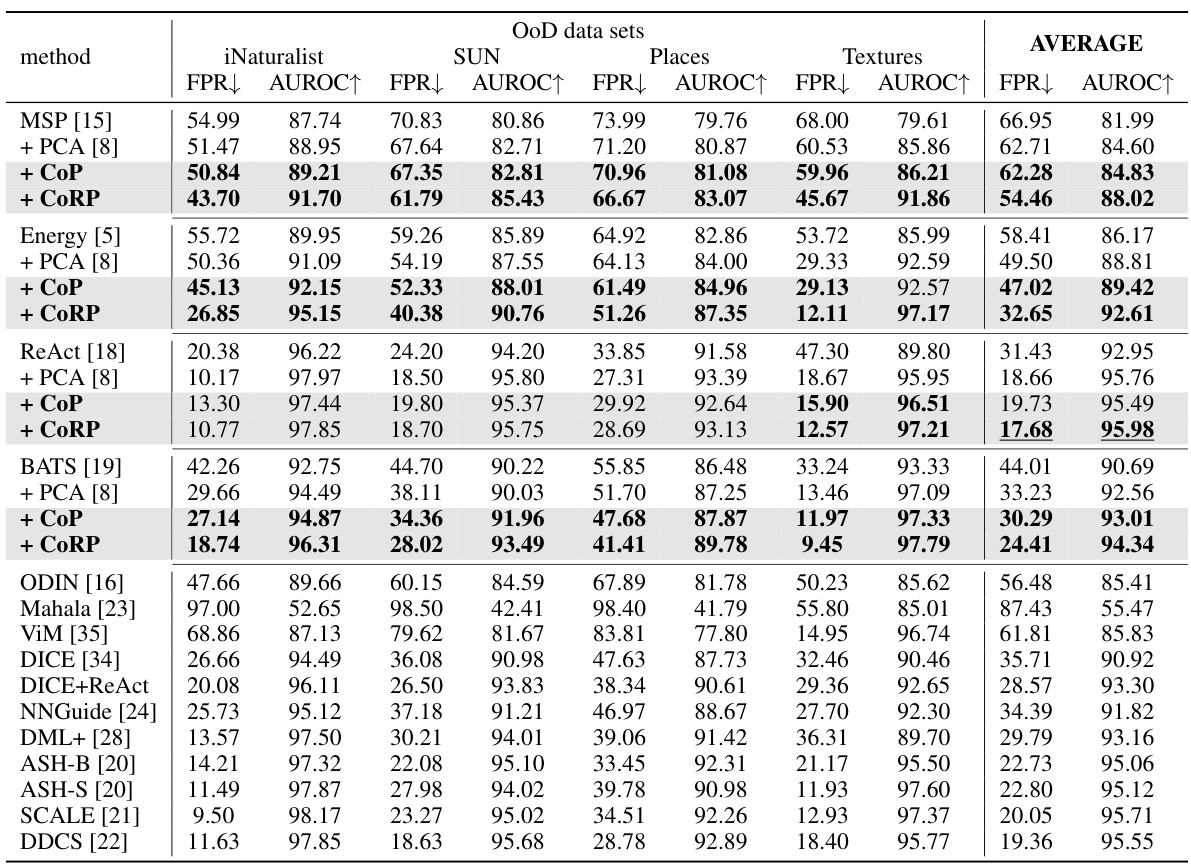

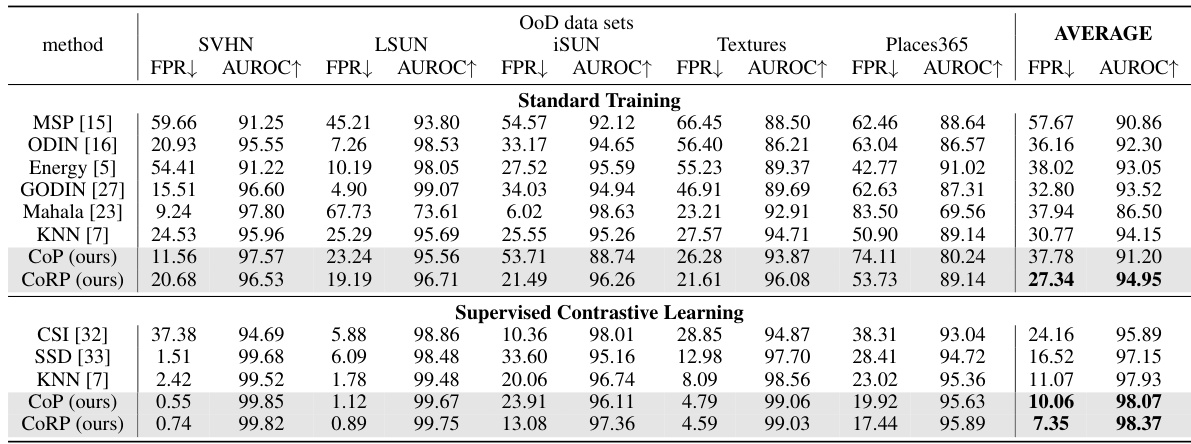

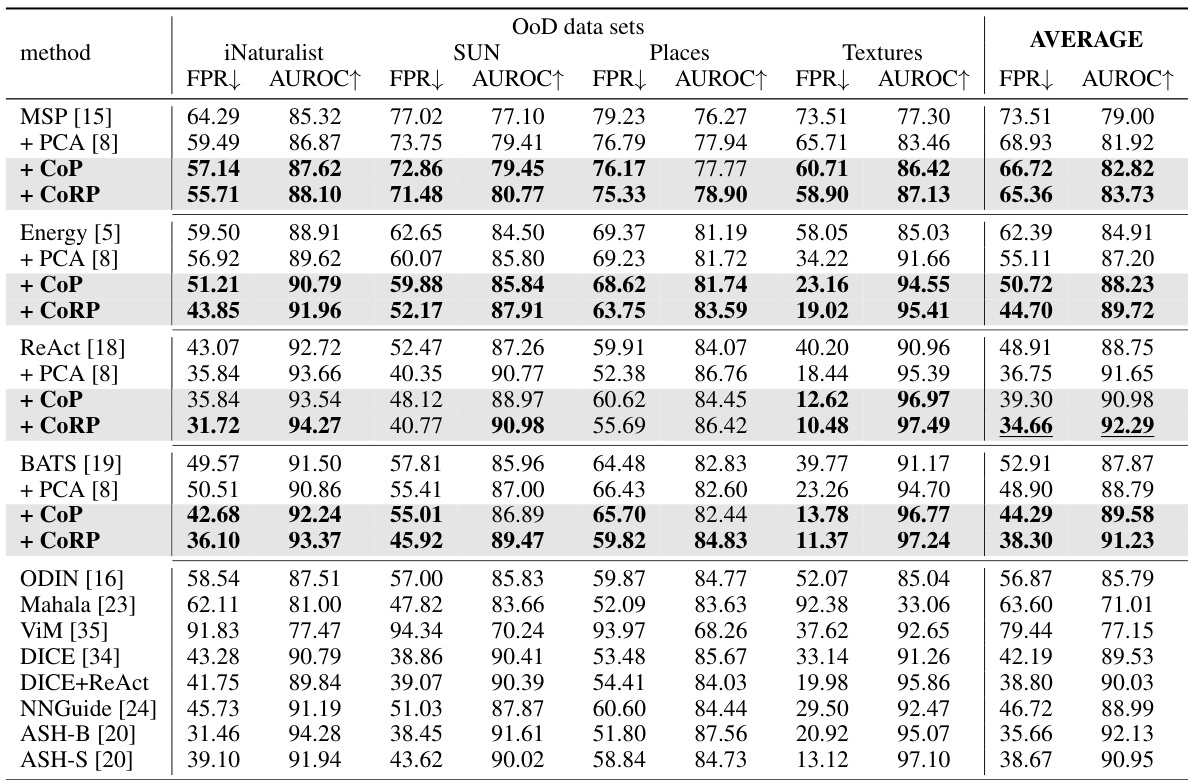

🔼 This table presents a comparison of different out-of-distribution (OoD) detection methods using a ResNet50 model trained on the ImageNet-1K dataset. It shows the performance of each method on various OoD datasets (iNaturalist, SUN, Textures, Places), measured by the False Positive Rate at 95% true positive rate (FPR95) and the Area Under the Receiver Operating Characteristic curve (AUROC). The results are broken down for standard training and supervised contrastive learning approaches, enabling a comprehensive evaluation of the methods’ performance.

read the caption

Table 1: The detection performance of different methods (ResNet50 trained on ImageNet-1K).

In-depth insights#

OoD Detection Methods#

Out-of-distribution (OoD) detection is crucial for reliable deep learning models. Many methods exist, broadly categorized by the type of DNN output they leverage. Logits-based methods analyze the model’s predicted probabilities or logits to identify discrepancies between in-distribution (InD) and OoD data. Gradient-based methods examine the gradients of the loss function, with larger gradients suggesting OoD samples. Feature-based approaches directly analyze the extracted features, seeking patterns that distinguish InD and OoD. These feature methods might involve reconstruction error analysis (e.g., using PCA or KPCA) or feature similarity metrics. The choice of method depends on several factors, including the specific DNN architecture, the characteristics of the datasets, and the computational resources available. There is active research into combining different methods to improve the robustness and accuracy of OoD detection, often involving sophisticated fusion techniques. The ideal method should strike a balance between efficacy, computational efficiency, and explainability.

KPCA for OoD#

The section ‘KPCA for OoD’ likely details the application of Kernel Principal Component Analysis (KPCA) to the problem of Out-of-Distribution (OoD) detection in machine learning models. KPCA’s non-linearity is a key advantage over traditional PCA, addressing PCA’s limitations in separating linearly inseparable in-distribution (InD) and OoD data in feature space. The authors probably explore various kernel functions within the KPCA framework, evaluating their effectiveness in highlighting the differences between InD and OoD data. Specific kernel choices, such as Gaussian or cosine kernels, are likely investigated and compared, focusing on computational efficiency and detection accuracy. The core idea is to use KPCA to learn a non-linear feature subspace where InD and OoD data become more separable, enabling more accurate classification of new samples as InD or OoD. Reconstruction error is probably employed as the key metric for OoD detection. The results likely demonstrate KPCA’s superior performance compared to standard PCA-based methods for OoD detection, showing better separation of InD and OoD data and improved detection accuracy.

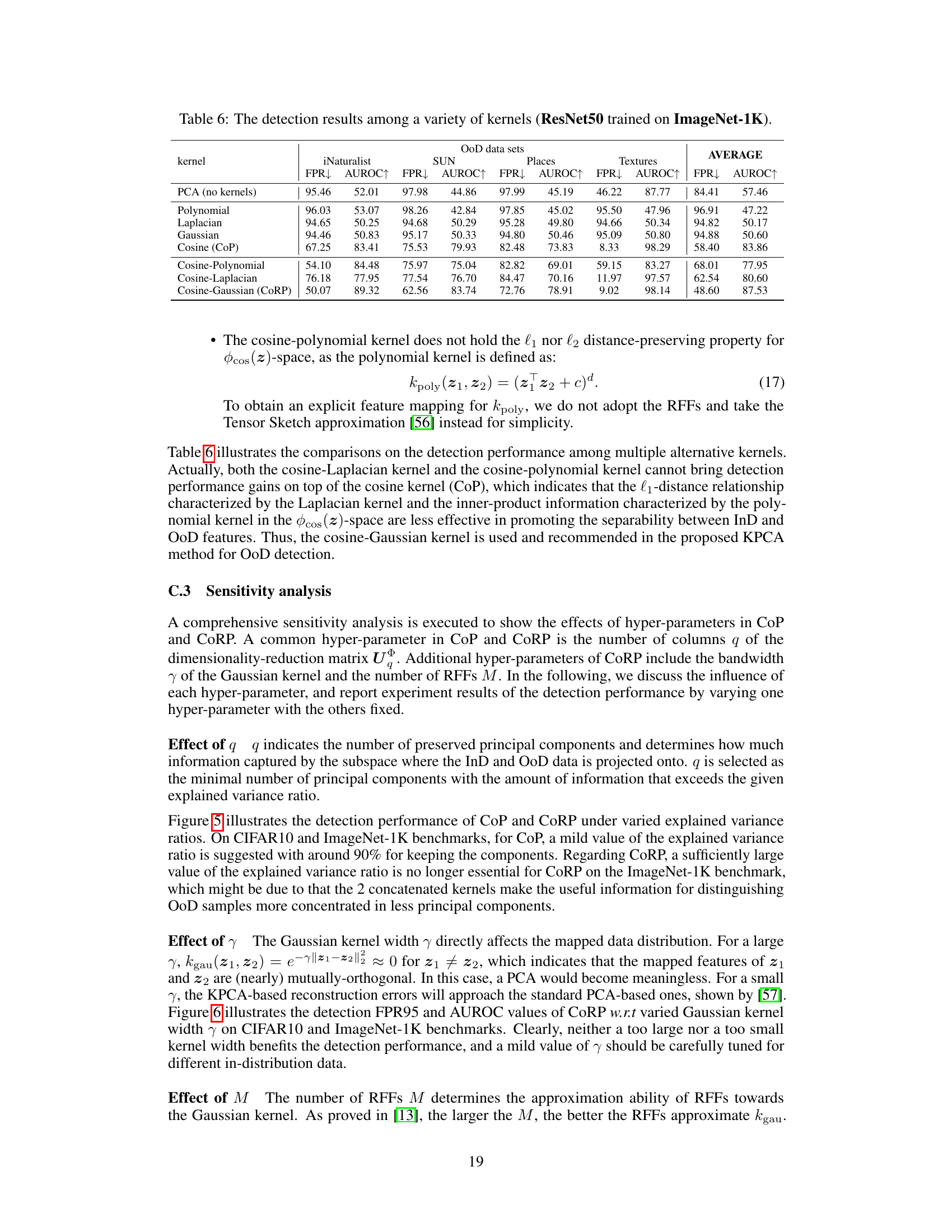

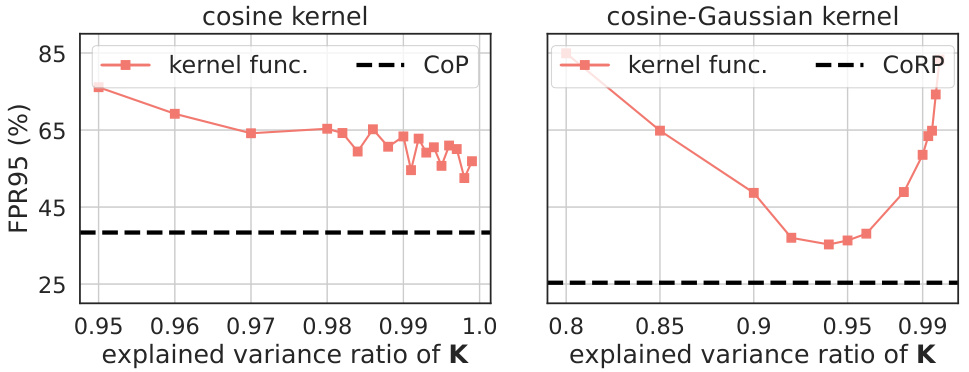

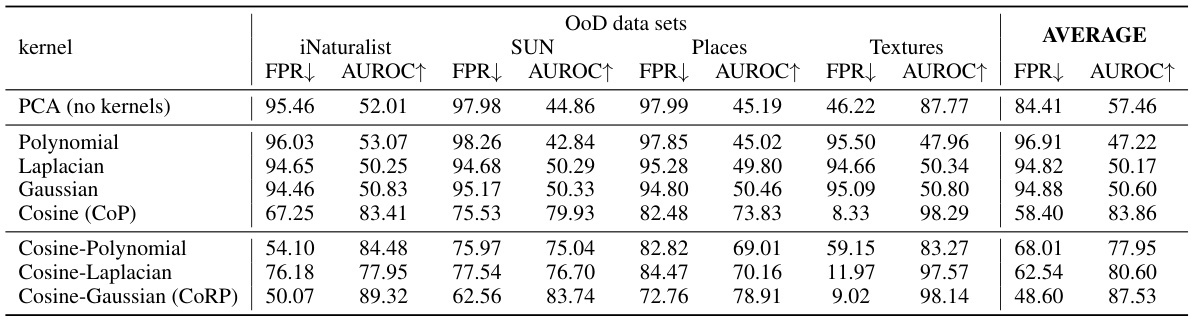

Kernel Function Effects#

A dedicated section analyzing ‘Kernel Function Effects’ within a research paper would delve into how different kernel functions impact the performance of a kernel-based method, such as Kernel PCA for out-of-distribution detection. The analysis would likely involve comparing various kernels (e.g., Gaussian, cosine, Laplacian) in terms of their ability to separate in-distribution and out-of-distribution data. Key aspects would be the computational cost associated with each kernel, their sensitivity to hyperparameters (e.g., bandwidth, degree), and the theoretical properties that influence their performance. The ultimate goal is to determine which kernel yields the most effective and efficient out-of-distribution detection, providing insights into the underlying reasons for any performance differences. A strong analysis would involve both theoretical justifications and empirical evidence using multiple datasets and network architectures. Visualizations such as t-SNE plots would be crucial to illustrate the differences in feature separation achieved by each kernel function. Furthermore, an in-depth exploration of the relationship between kernel selection and the characteristics of the data itself (linear vs. non-linear separability) is essential. The section should conclude with a strong recommendation on optimal kernel choices based on practical performance and theoretical understanding.

Computational Efficiency#

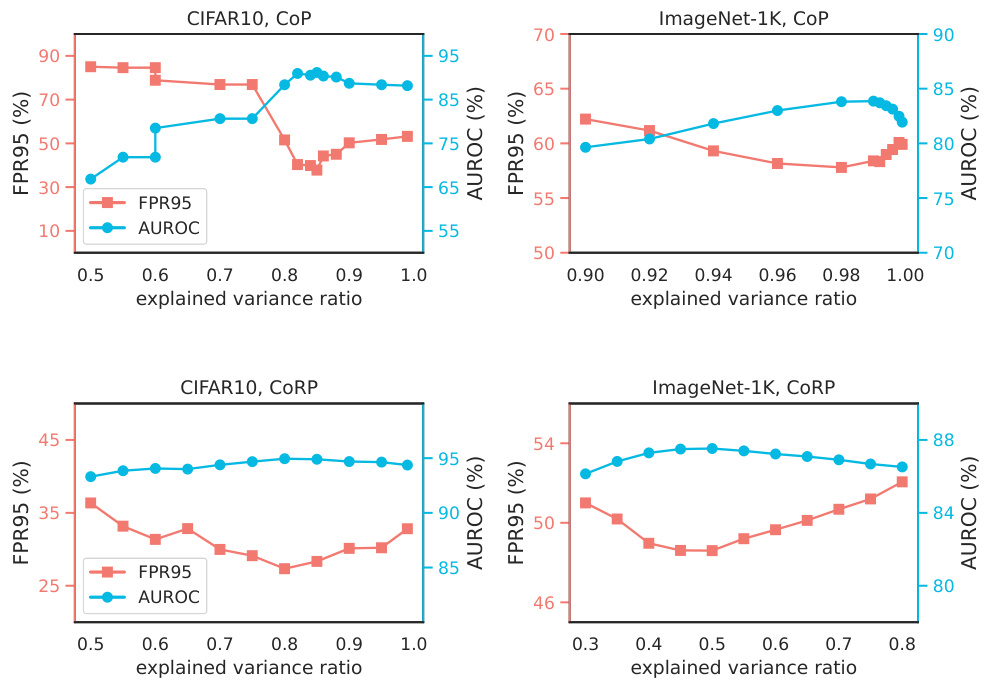

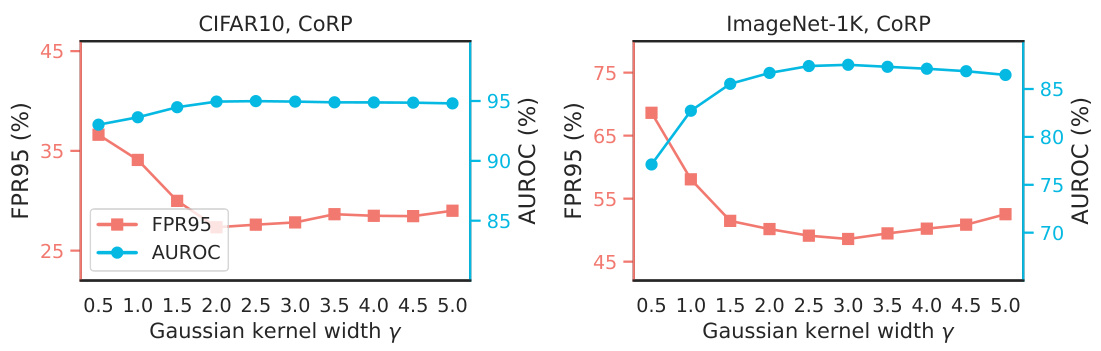

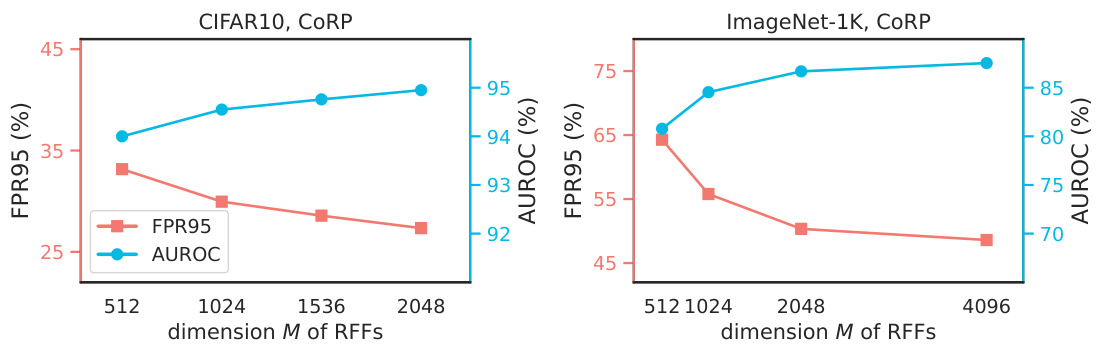

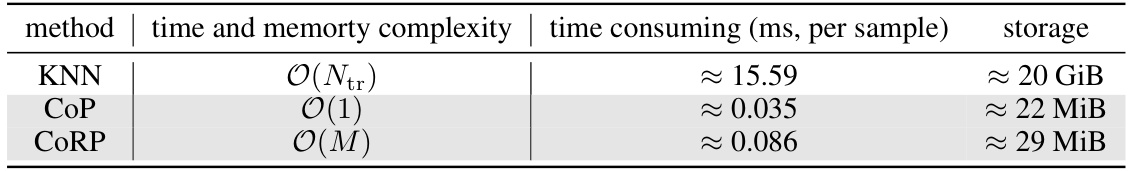

The computational efficiency of the proposed Kernel PCA (KPCA) method for out-of-distribution (OoD) detection is a central theme. The authors address the computational bottleneck of traditional KPCA, which involves calculating and storing a large kernel matrix, by introducing two novel techniques. First, they leverage explicit feature mappings induced from task-specific kernels (cosine and cosine-Gaussian) to avoid the explicit computation in the high-dimensional feature space. Second, they utilize random Fourier features (RFFs) for efficient approximation of the Gaussian kernel, dramatically reducing computational complexity. The result is a KPCA detector with significantly lower time and memory complexity compared to traditional methods like KNN, achieving state-of-the-art OoD detection performance with improved efficiency. The authors explicitly analyze the computational complexity of their approach, highlighting the benefits of this novel design in practical applications involving large-scale datasets. The reduced complexity is a crucial advantage, especially in real-time or resource-constrained settings where efficient OoD detection is vital.

Future Research#

Future research directions stemming from this work could explore several promising avenues. Developing more sophisticated kernel functions specifically tailored for out-of-distribution (OoD) detection is crucial. The current study uses cosine and cosine-Gaussian kernels, but a systematic exploration of other kernel types could significantly improve performance. Furthermore, investigating alternative feature extraction methods beyond penultimate layer features is warranted. Different feature spaces might capture more relevant information to discriminate between InD and OoD data. Incorporating uncertainty estimation into the OoD detection framework would enhance its robustness. Combining the KPCA approach with techniques that quantify the uncertainty associated with predictions would lead to a more reliable OoD detection system. Lastly, and importantly, extending the KPCA method to other types of data such as time-series or text data is a significant next step. The current paper focuses on image data; demonstrating its generalizability and efficacy on various data modalities is key to realizing its wider impact.

More visual insights#

More on figures

🔼 This figure uses t-SNE to visualize the original features and the features mapped by KPCA. The left panel shows the original features, demonstrating poor separation between in-distribution (InD) and out-of-distribution (OoD) data. The right panel shows the KPCA-mapped features, highlighting the improved separability achieved by the KPCA method. This improved separability is further illustrated by the distinct reconstruction error distributions shown below each t-SNE plot. The results showcase KPCA’s effectiveness in enhancing OoD detection performance.

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

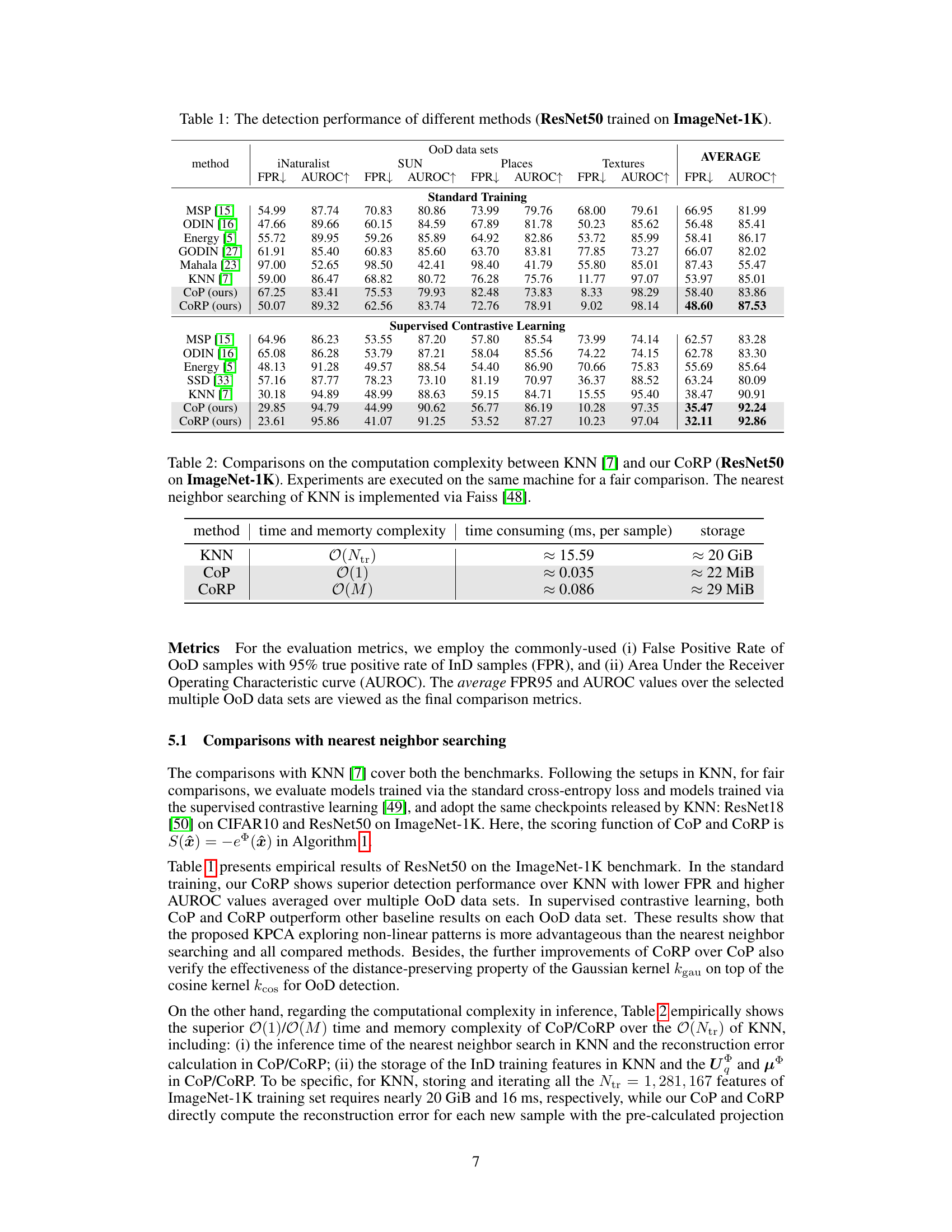

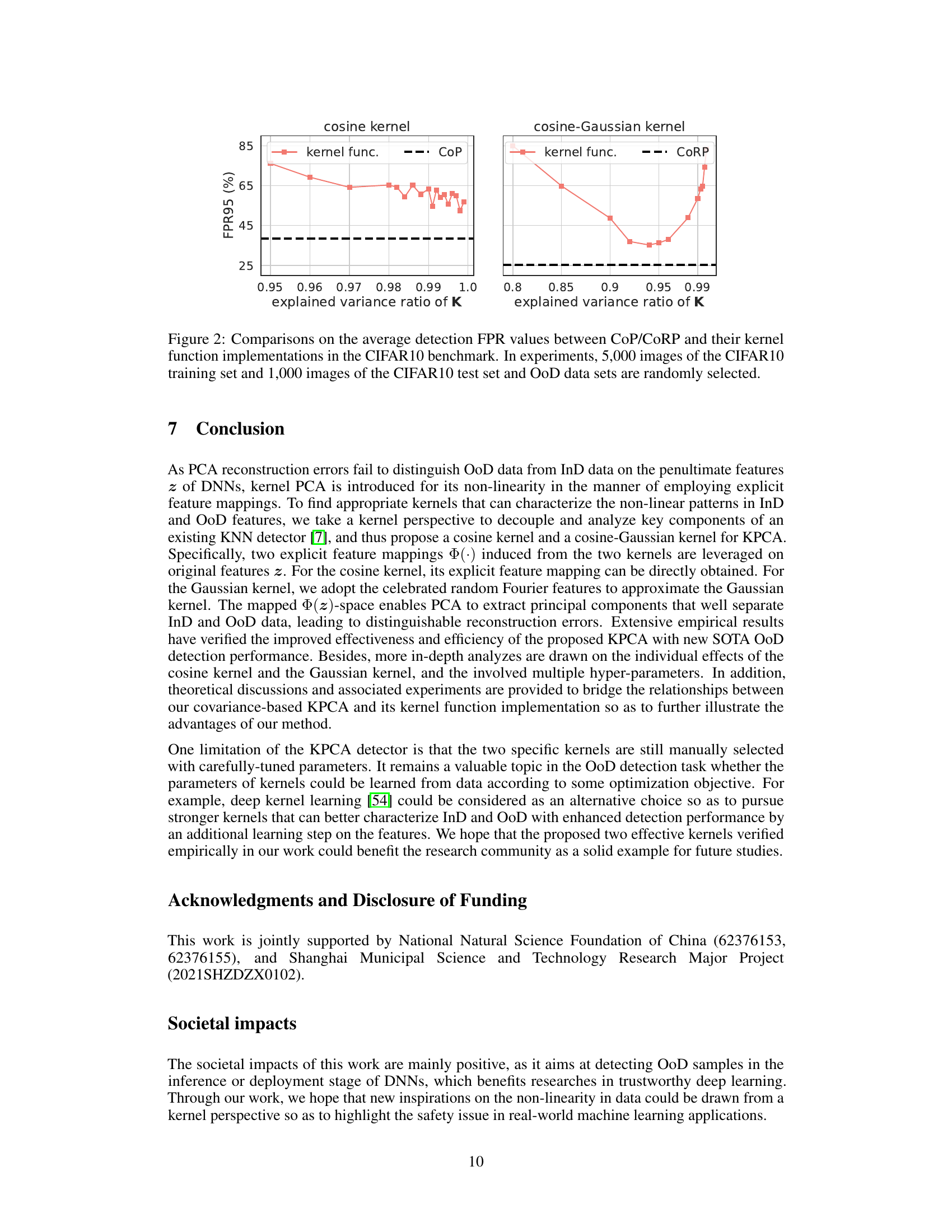

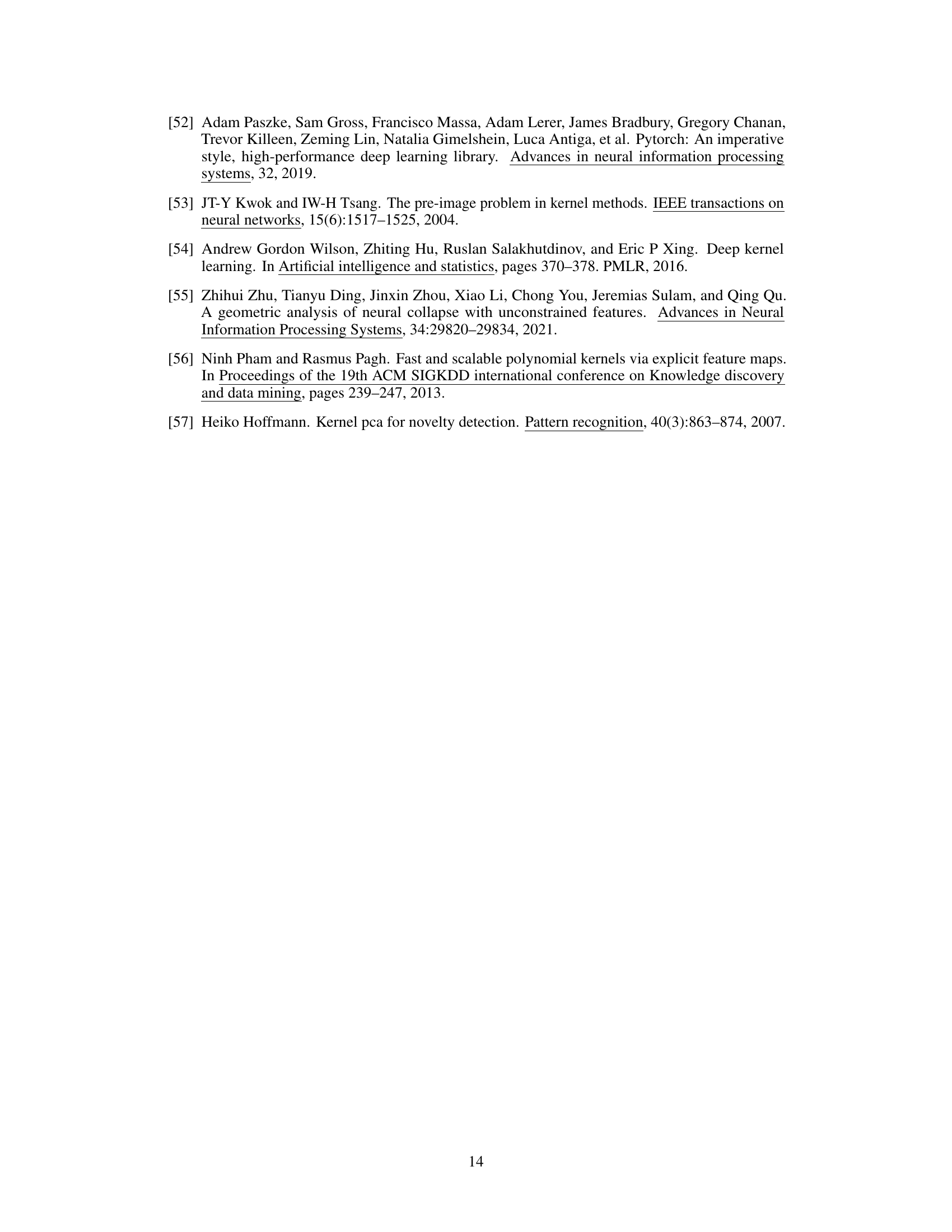

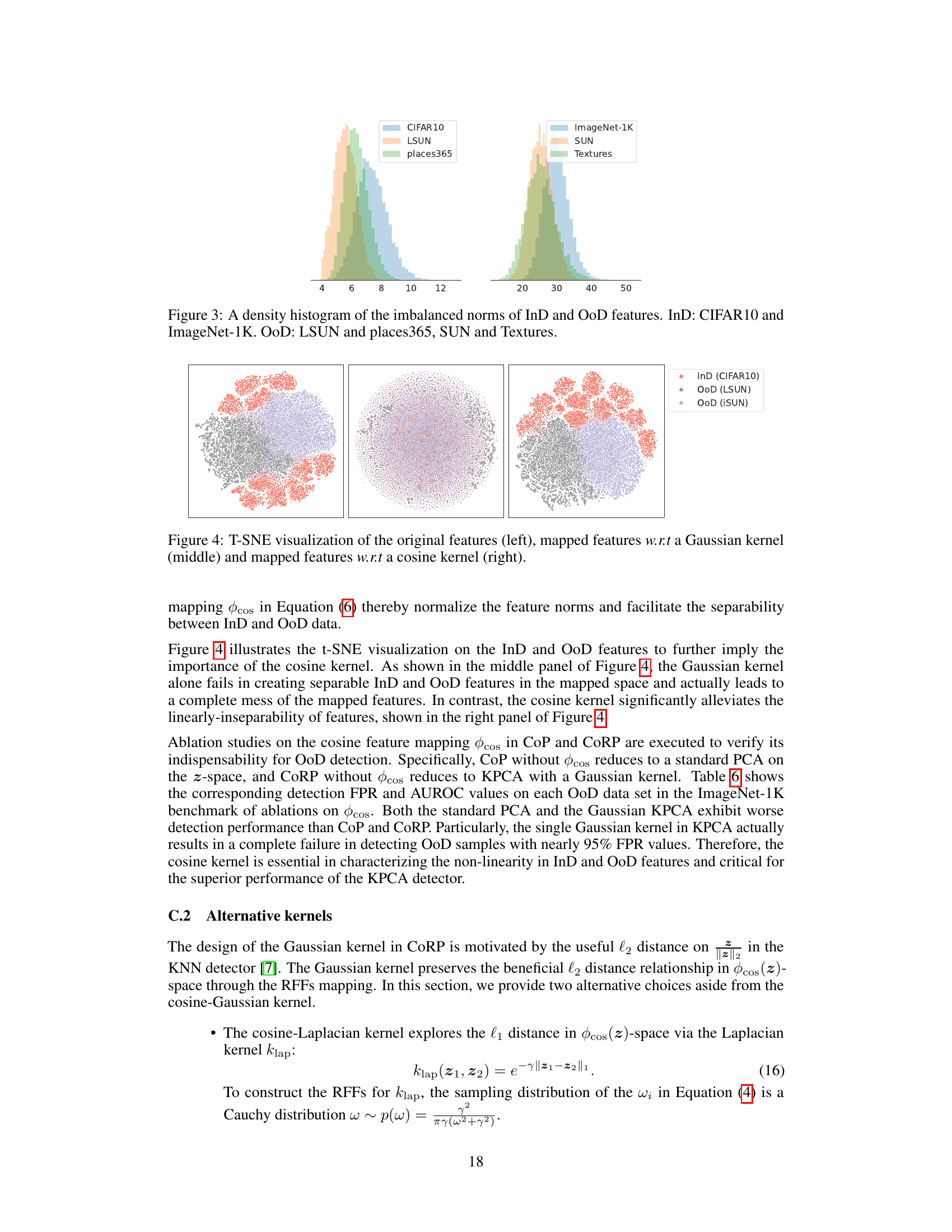

🔼 This figure shows the distribution of feature norms (||z||2) for In-distribution (InD) and out-of-distribution (OoD) datasets. The InD datasets are CIFAR-10 and ImageNet-1K, while the OoD datasets are LSUN, places365, SUN, and Textures. The histograms clearly demonstrate a significant difference in the distribution of feature norms between InD and OoD data, highlighting the imbalanced feature norm property that is exploited by the cosine kernel in the proposed method.

read the caption

Figure 3: A density histogram of the imbalanced norms of InD and OoD features. InD: CIFAR10 and ImageNet-1K. OoD: LSUN and places365, SUN and Textures.

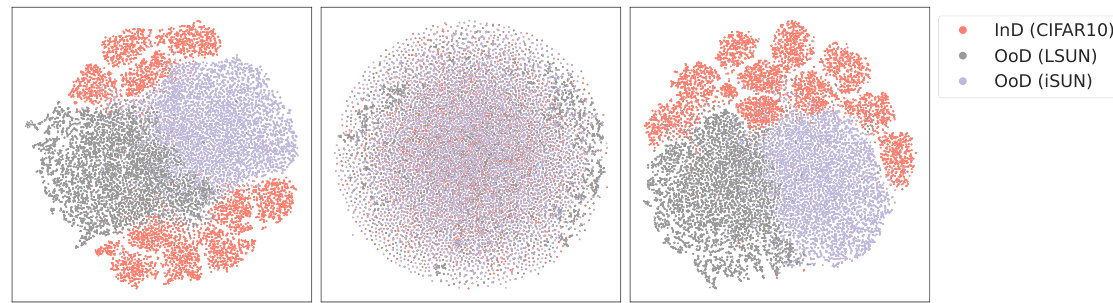

🔼 This figure uses t-SNE to visualize the original and mapped features of in-distribution (InD) and out-of-distribution (OoD) data. The left panel shows the original features, demonstrating a lack of clear separation between InD and OoD data. The right panel displays the features after applying the kernel PCA (KPCA) method with a specific feature mapping (Φ). The KPCA mapping effectively separates InD and OoD data points, making the OoD detection task easier. The color-coding helps to distinguish the three types of data: InD, OoD (LSUN), and OoD (iSUN).

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

🔼 This figure uses t-SNE to visualize the original features (z) and the features mapped by KPCA (Φ(z)). The left panel shows the original features, where InD and OoD data are mixed. The right panel shows the mapped features, where InD and OoD data are clearly separated. This separation is achieved by using KPCA with the proposed cosine and cosine-Gaussian kernels, which effectively maps the data into a subspace where the linear inseparability between InD and OoD features is reduced. The figure demonstrates the effectiveness of the KPCA detector in improving OoD detection performance, as the reconstruction errors are significantly more distinguishable between InD and OoD data after the non-linear mapping.

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

🔼 This figure uses t-SNE to visualize the original features (z) and the mapped features ((z)) from a deep neural network. The left panel shows the original features, illustrating the difficulty in linearly separating in-distribution (InD) and out-of-distribution (OoD) data points. The right panel shows the features after applying the proposed KPCA’s feature mapping (Φ). The improved separation of InD and OoD data in the mapped feature space highlights the effectiveness of the KPCA method in enhancing out-of-distribution detection. The improved separability is reflected in the significantly more distinct reconstruction errors for InD and OoD data, making classification easier.

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

🔼 This figure uses t-SNE to visualize the original features (z) and the mapped features (Φ(z)) of in-distribution (InD) and out-of-distribution (OoD) data. The left panel shows the original features, demonstrating poor separation between InD and OoD data. The right panel shows the mapped features using the KPCA method, clearly showing improved separation between InD and OoD data due to the use of the non-linear mapping Φ. The improved separation leads to more distinguishable reconstruction errors which improves the overall performance of the OoD detector.

read the caption

Figure 1: The t-SNE [10] visualization on the original features z (left) and the mapped features (z) (right). Our KPCA detector alleviates the linearly inseparability between InD and OoD features in the original z-space via an explicit feature mapping Φ, and thus substantially improves the OoD detection performance, illustrated by the much more distinguishable reconstruction errors.

More on tables

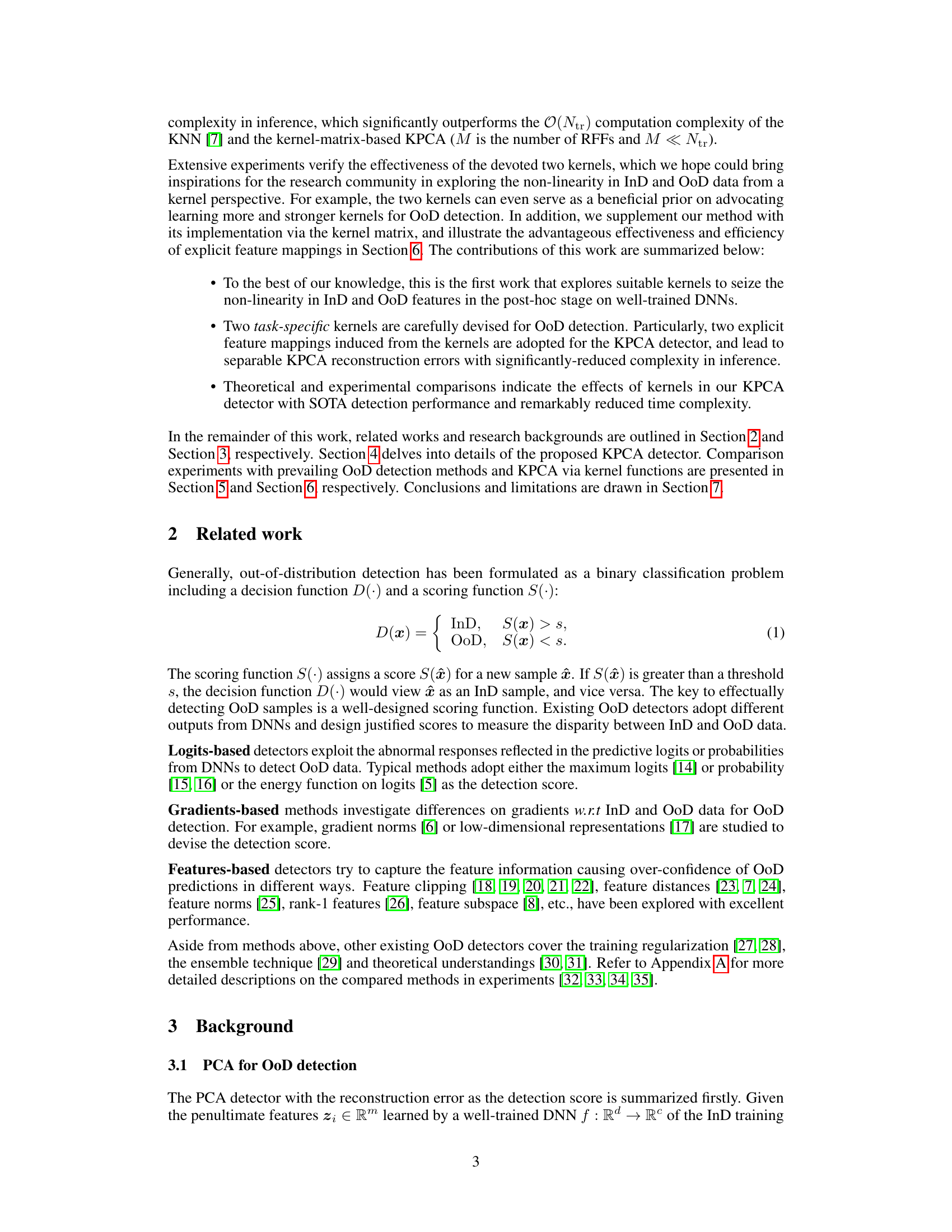

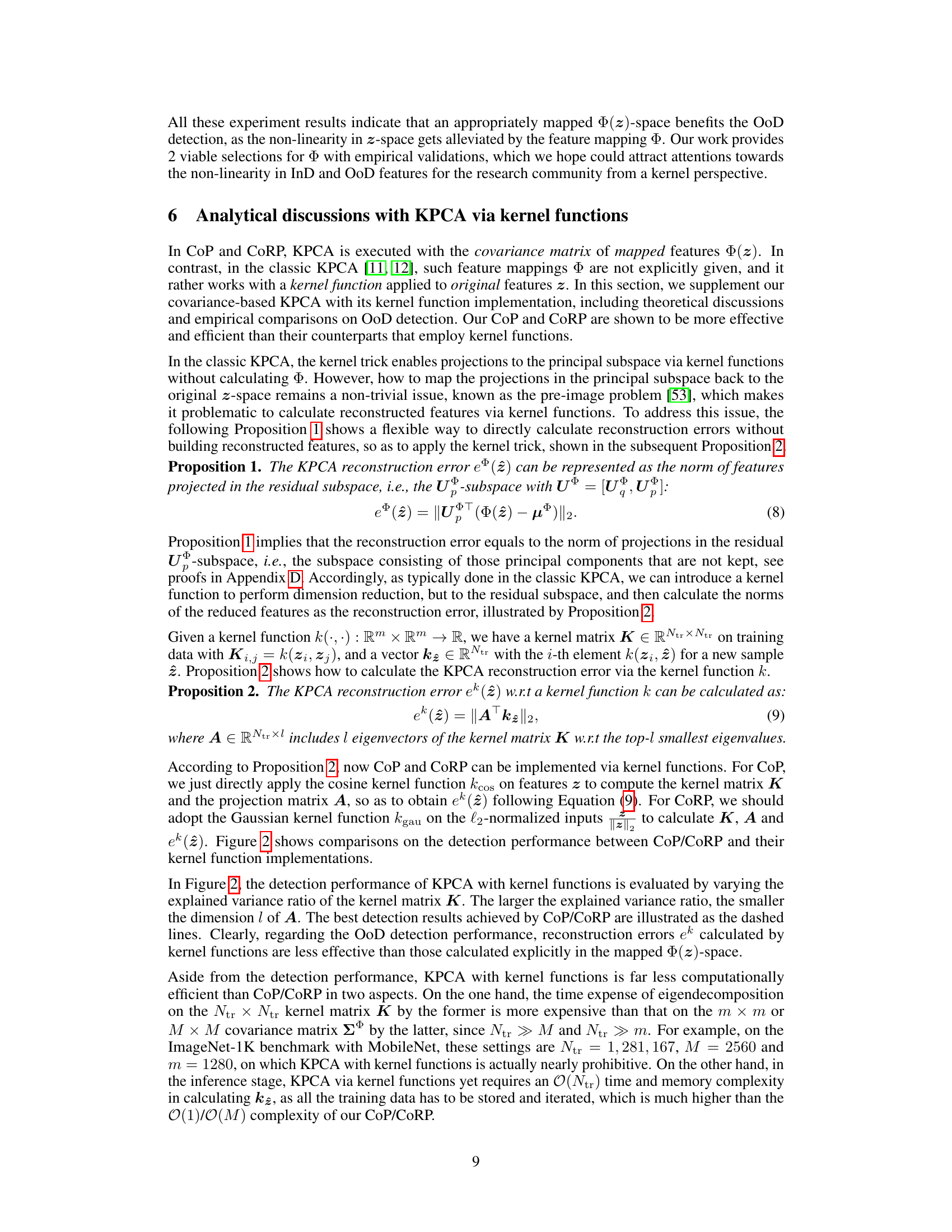

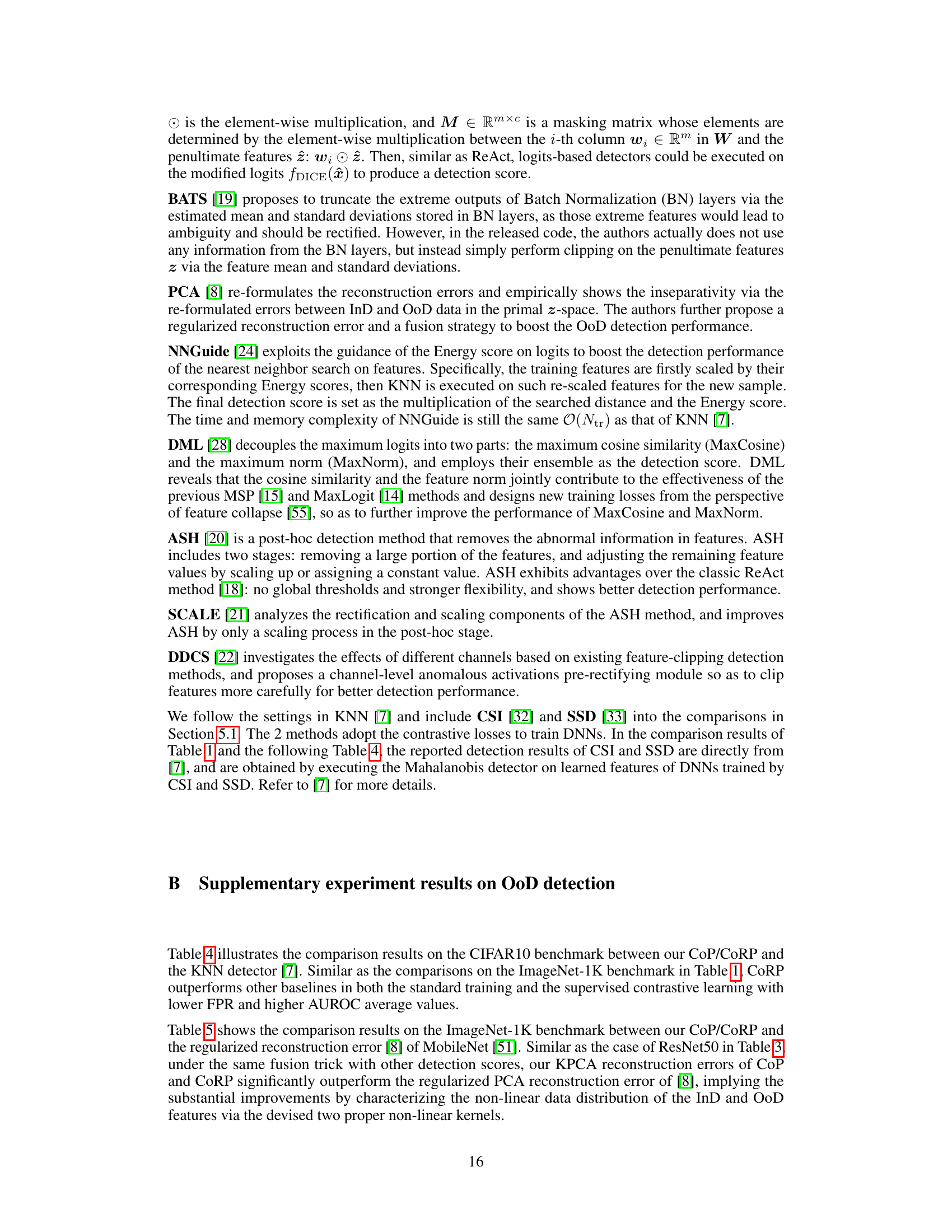

🔼 This table compares the computational complexity (time and memory) of the proposed method CORP and the existing KNN method for out-of-distribution detection using ResNet50 on ImageNet-1k. It highlights the significant reduction in computational cost achieved by CORP compared to KNN.

read the caption

Table 2: Comparisons on the computation complexity between KNN [7] and our CORP (ResNet50 on ImageNet-1K). Experiments are executed on the same machine for a fair comparison. The nearest neighbor searching of KNN is implemented via Faiss [48].

🔼 This table presents a comparison of the out-of-distribution (OoD) detection performance of several methods, including the proposed methods (CoP and CoRP), on the ImageNet-1K dataset using ResNet50. The results are broken down by OoD dataset (iNaturalist, SUN, Textures, Places) and metric (FPR, AUROC). Two training scenarios are shown: standard training and supervised contrastive learning. The table helps illustrate the superiority of the proposed methods.

read the caption

Table 1: The detection performance of different methods (ResNet50 trained on ImageNet-1K).

🔼 This table presents a comparison of the out-of-distribution (OoD) detection performance of several methods, including the proposed CoP and CoRP, using ResNet50 trained on ImageNet-1K. The performance is evaluated on five different OoD datasets (iNaturalist, SUN, Textures, Places) using the metrics of False Positive Rate at 95% true positive rate (FPR95) and Area Under the Receiver Operating Characteristic curve (AUROC). The results are shown for both standard training and supervised contrastive learning.

read the caption

Table 1: The detection performance of different methods (ResNet50 trained on ImageNet-1K).

🔼 This table presents a comparison of the out-of-distribution (OoD) detection performance of various methods using ResNet50, a deep convolutional neural network, trained on the ImageNet-1K dataset. The comparison is made across five different OoD datasets (iNaturalist, SUN, Places, Textures, and AVERAGE). The results are presented in terms of the False Positive Rate (FPR) at 95% true positive rate (AUROC) and the Area Under the Receiver Operating Characteristic curve (AUROC). The table also includes separate results for standard training and supervised contrastive learning methods, to demonstrate the effects of different training methodologies.

read the caption

Table 1: The detection performance of different methods (ResNet50 trained on ImageNet-1K).

🔼 This table presents the results of out-of-distribution (OoD) detection experiments using different methods on the ImageNet-1K dataset. ResNet50 is used as the backbone network. The table compares the performance across several OoD datasets (iNaturalist, SUN, Textures, Places) using both standard and supervised contrastive learning training methods. Metrics used include False Positive Rate (FPR) at 95% true positive rate (FPR95) and Area Under the Receiver Operating Characteristic curve (AUROC). The results showcase the performance of the proposed KPCA method (CoP and CoRP) against existing state-of-the-art methods.

read the caption

Table 1: The detection performance of different methods (ResNet50 trained on ImageNet-1K).

Full paper#