TL;DR#

Verifying the safety and reliability of neural networks is paramount, especially for safety-critical applications. Existing methods often struggle with the computational complexity of large-scale networks, thus limiting their effectiveness. In particular, cutting-plane methods, while effective, often rely on external solvers which do not scale well to larger networks.

This paper introduces BICCOS, a novel approach to neural network verification that addresses these limitations. BICCOS leverages the logical relationships between neurons within a branch-and-bound search tree to generate efficient cutting planes directly, without relying on external solvers. It significantly improves verification efficiency and scalability, especially for large networks, consistently outperforming state-of-the-art verifiers on a range of benchmarks. The improved performance is achieved by using a multi-tree search to generate more effective cuts, strengthening cuts to increase their effectiveness, and applying a breadth-first search strategy.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in neural network verification. It introduces BICCOS, a novel and scalable method significantly improving verification efficiency, especially for large networks previously intractable. This opens new avenues in safety-critical applications by enabling rigorous verification of complex neural network models, a critical need in AI safety and reliability research. The findings are highly relevant to current trends in cutting plane methods and branch-and-bound techniques, potentially influencing the development of new, highly effective verifiers.

Visual Insights#

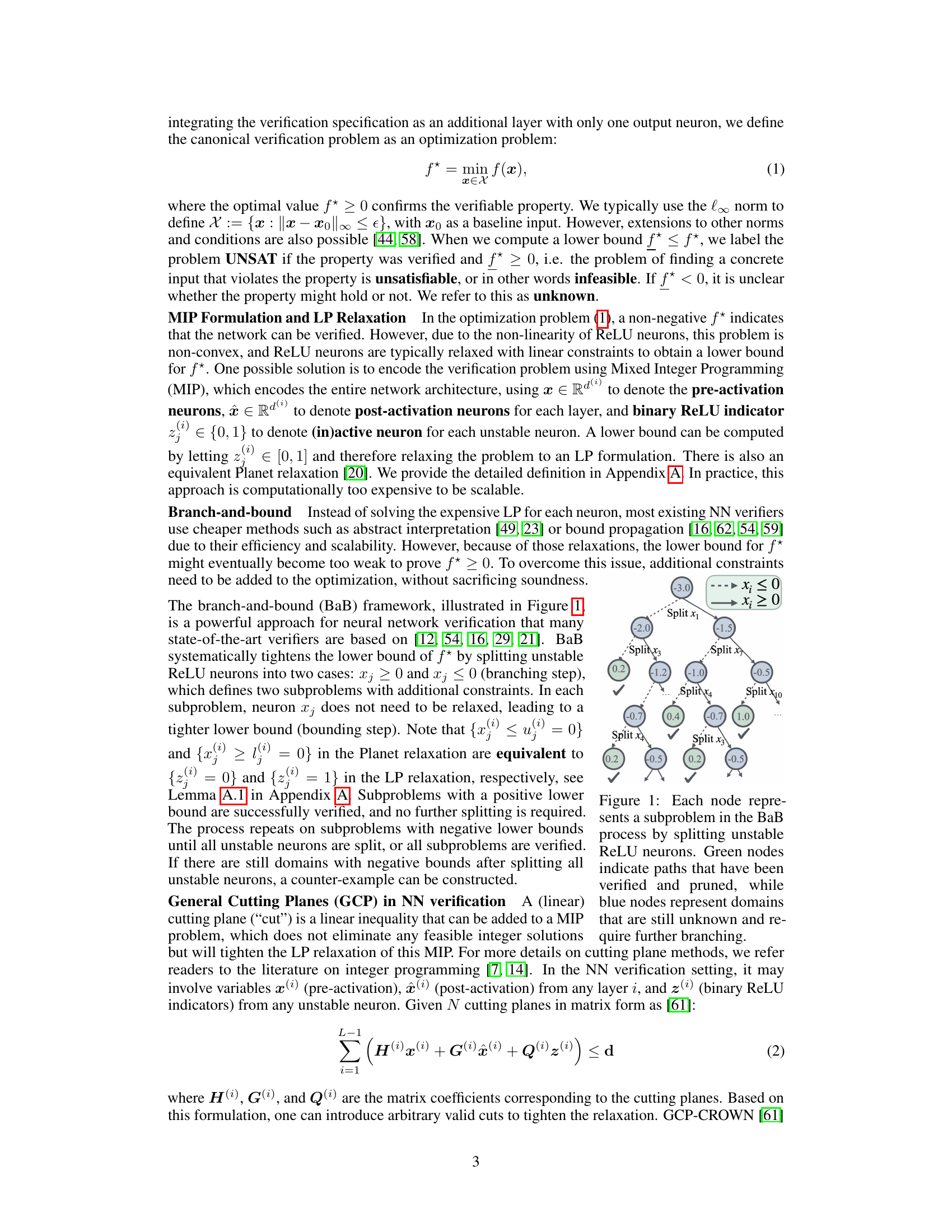

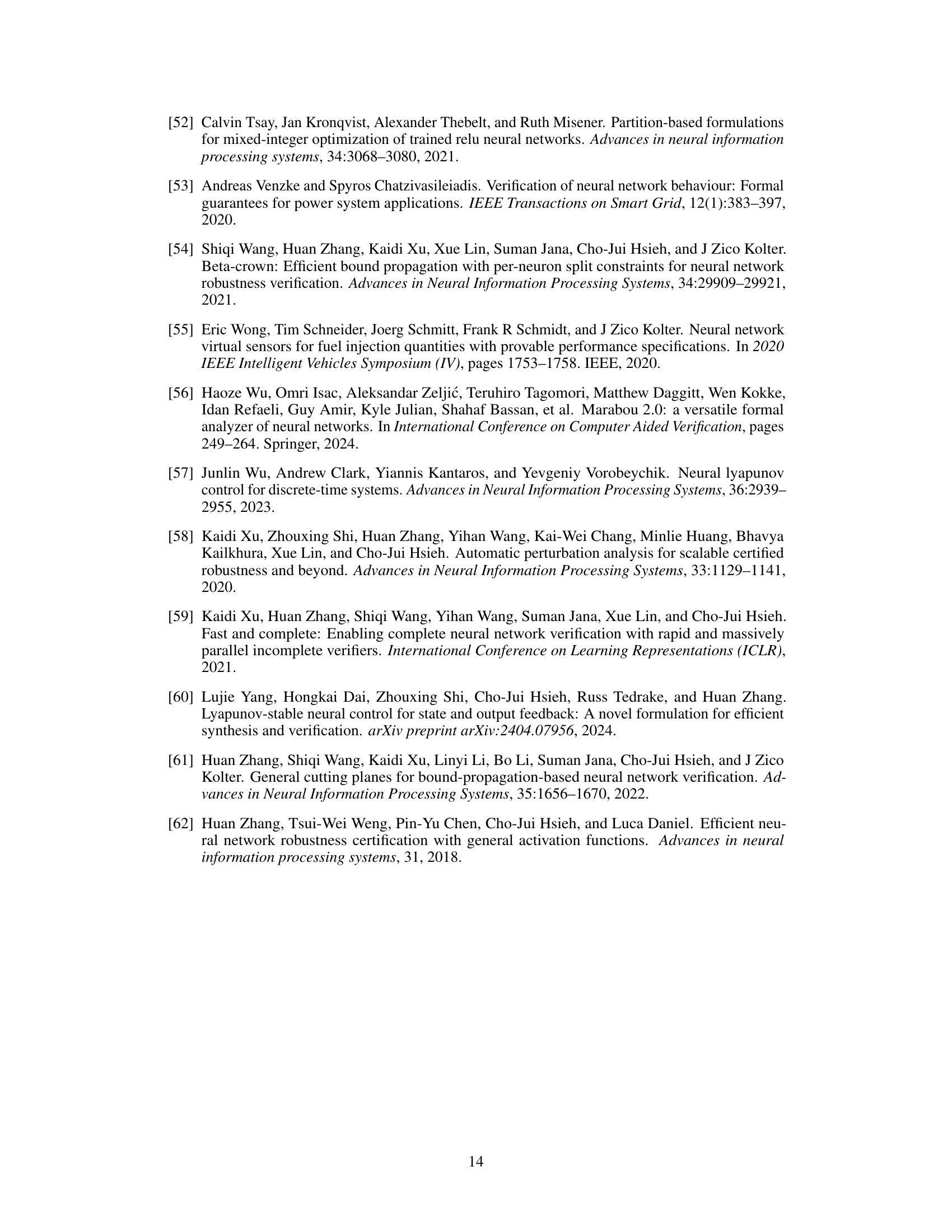

🔼 This figure illustrates the branch and bound (BaB) process for neural network verification. Each node in the tree represents a subproblem, created by splitting an unstable ReLU neuron into two subproblems representing the neuron being active or inactive. Green nodes represent subproblems that have been solved and pruned from the search tree, while blue nodes are still being explored. The process continues until all subproblems are solved or the specified property has been verified.

read the caption

Figure 1: Each node represents a subproblem in the BaB process by splitting unstable ReLU neurons. Green nodes indicate paths that have been verified and pruned, while blue nodes represent domains that are still unknown and require further branching.

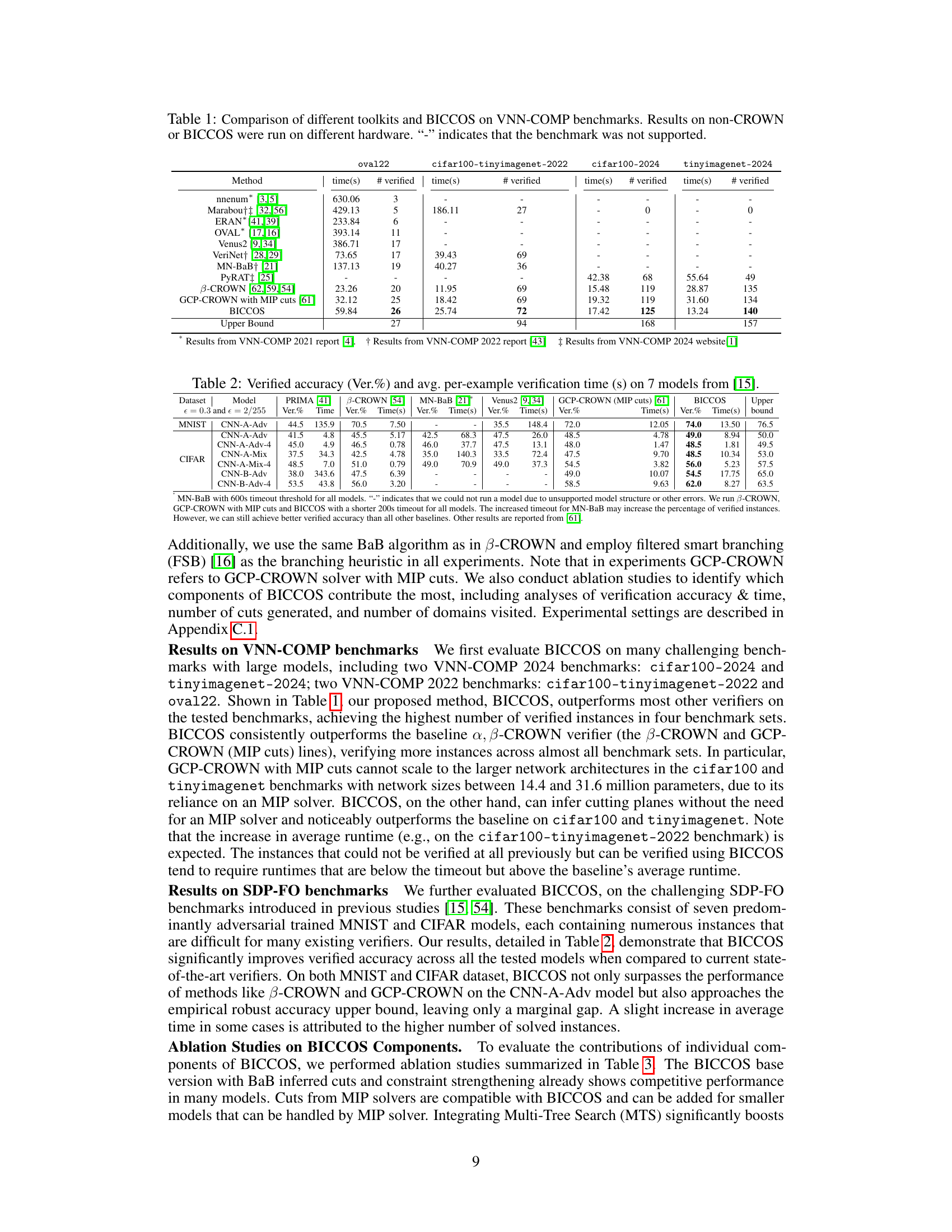

🔼 This table compares the performance of various neural network verification tools on the VNN-COMP benchmarks. The tools include nnenum, Marabou, ERAN, OVAL, Venus2, VeriNet, MN-BaB, and PyRAT, along with the baseline methods B-CROWN and GCP-CROWN (using MIP cuts). The main focus is on the scalability and effectiveness of the proposed BICCOS method, indicated by the number of verified instances and the verification time for each benchmark. The ‘-’ indicates that a specific method did not support a given benchmark.

read the caption

Table 1: Comparison of different toolkits and BICCOS on VNN-COMP benchmarks. Results on non-CROWN or BICCOS were run on different hardware. '-' indicates that the benchmark was not supported.

In-depth insights#

Scalable NN Verify#

Scalable neural network verification (NNV) is a crucial challenge due to the complexity of modern networks. The heading “Scalable NN Verify” suggests a focus on methods that can efficiently handle large-scale neural networks, a significant limitation of many existing techniques. Existing methods often rely on Mixed Integer Programming (MIP) solvers, which struggle with the computational cost of large networks, limiting their applicability. A scalable solution is critical for deploying NNs in safety-critical applications, where verification is essential. The core of a scalable approach likely involves leveraging the structure of the NN and the verification problem, developing more efficient algorithms and data structures, possibly incorporating techniques like abstract interpretation or specialized bound propagation methods to avoid computationally expensive exact solutions. The development of effective cutting plane techniques, tailored to the specifics of NNV, is a promising area, as they can significantly improve the efficiency of branch-and-bound algorithms by reducing the search space. A multi-tree search strategy could also enhance scalability by exploring multiple search paths in parallel, generating cuts which are then shared across all branches, ultimately leading to faster convergence and improved accuracy.

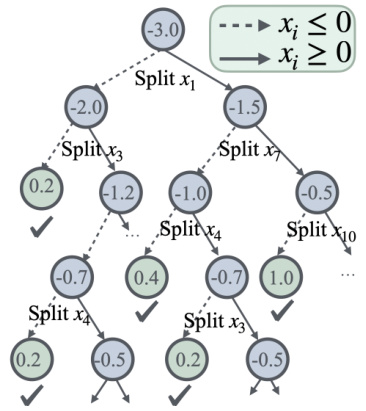

BICCOS: A New Cut#

BICCOS, presented as a novel cutting-plane method for neural network verification, offers a significant advancement by directly leveraging the structure of the branch-and-bound search tree. Unlike previous methods relying on external MIP solvers, BICCOS infers cuts from the logical relationships within already verified subproblems. This approach generates problem-specific cuts, enhancing scalability. The method’s strength stems from its ability to strengthen cuts by identifying and removing less influential neurons, thus refining the constraints and improving the effectiveness of the cuts generated. Furthermore, the introduction of a multi-tree search strategy allows BICCOS to proactively identify more cutting planes before the main BaB phase, further narrowing the search space and accelerating convergence. This combination of inferred cuts, constraint strengthening, and multi-tree search allows BICCOS to achieve improved verification results, particularly with large-scale neural networks previously intractable for existing cutting-plane techniques. The core innovation lies in its ability to learn from past successes, significantly improving efficiency and effectiveness in neural network verification.

Constraint Strengthening#

The constraint strengthening technique significantly enhances the efficiency of the Branch-and-bound Inferred Cuts with Constraint Strengthening (BICCOS) algorithm. It leverages the fact that not all branched neurons in a verified subproblem are necessarily crucial for establishing UNSAT. By identifying and removing less influential neurons, BICCOS generates tighter, more effective cutting planes. This process involves computing influence scores for each neuron based on the improvement in lower bounds after their inclusion in the split, allowing the algorithm to strategically remove neurons with minimal impact. This reduces the dimensionality of the cuts, making them more effective at pruning the search space. The heuristic approach to neuron elimination ensures that the algorithm identifies the optimal neurons to remove, and that the cuts are still helpful. This iterative refinement is a key contribution, demonstrating the practical applicability of BICCOS to large-scale problems that traditional cutting plane methods struggle to handle. The effectiveness of this technique is validated in experimental results, showing a significant improvement in verification performance compared to existing state-of-the-art methods.

Multi-Tree Search#

The proposed “Multi-Tree Search” strategy represents a significant departure from traditional branch-and-bound (BaB) approaches for neural network verification. Instead of exploring a single search tree, it proactively initiates multiple BaB processes in parallel, acting as a pre-solving phase. This parallel exploration allows the algorithm to identify effective cutting planes more rapidly. Each tree explores different branching decisions, and the cutting planes discovered in one tree become universally applicable and strengthen the pruning in others, accelerating convergence towards verification. The algorithm intelligently prioritizes trees for expansion based on their lower bounds, directing computational resources to the most promising search paths, thus improving overall efficiency and scalability. The combination of multi-tree search with constraint strengthening further enhances its effectiveness, making it well-suited for large-scale models where a single tree approach might struggle.

Limitations & Future#

A research paper’s “Limitations & Future” section would critically examine the study’s shortcomings. Scalability is often a key limitation, especially with deep learning models. The computational cost of verification can be prohibitive for large networks. Generalizability is another concern; a method’s effectiveness on a specific dataset doesn’t guarantee its performance across diverse datasets or network architectures. Algorithm specifics may restrict applicability. For example, reliance on specific activation functions limits use cases. The “Future” aspect should propose avenues for improvement. This could include exploring more efficient algorithms, developing techniques for handling larger and more complex networks, investigating alternative verification approaches, and broadening the scope to encompass diverse activation functions and network topologies. Addressing limitations and outlining promising future directions builds credibility and provides valuable guidance for future research in the field.

More visual insights#

More on figures

🔼 This figure illustrates three key aspects of the BICCOS algorithm. (a) shows how a cut inferred from an UNSAT path in a branch-and-bound tree might not improve bounds in other subproblems. (b) demonstrates the constraint strengthening technique, where unnecessary variables are removed from a cut to make it more effective. (c) shows the multi-tree search approach, where multiple search trees are explored simultaneously to identify more cuts.

read the caption

Figure 2: (2a): Inferred cut from UNSAT paths during BaB and why it fails in regular BaB. (2b): Constraint strengthening with Neuron Elimination Heuristic. (2c): Multi-tree search.

🔼 This figure illustrates three key concepts of the BICCOS algorithm. (a) shows how a cut inferred from an UNSAT path in the branch-and-bound tree might not improve bounds if not strengthened. (b) demonstrates constraint strengthening by identifying and removing unnecessary variables from the cut. (c) depicts multi-tree search, where multiple trees explore different branching decisions, allowing cuts to be discovered and shared, improving efficiency.

read the caption

Figure 2: (2a): Inferred cut from UNSAT paths during BaB and why it fails in regular BaB. (2b): Constraint strengthening with Neuron Elimination Heuristic. (2c): Multi-tree search.

🔼 This figure illustrates three key aspects of the BICCOS algorithm. (a) shows how a cut inferred from an UNSAT path in a branch-and-bound tree might not improve bounds in other parts of the tree, highlighting the need for constraint strengthening. (b) demonstrates the constraint strengthening process using a neuron elimination heuristic, where less influential neurons are removed to create a more effective cut. (c) illustrates the multi-tree search strategy, where multiple search trees are explored in parallel to generate more cuts that can then be shared between trees.

read the caption

Figure 2: (2a): Inferred cut from UNSAT paths during BaB and why it fails in regular BaB. (2b): Constraint strengthening with Neuron Elimination Heuristic. (2c): Multi-tree search.

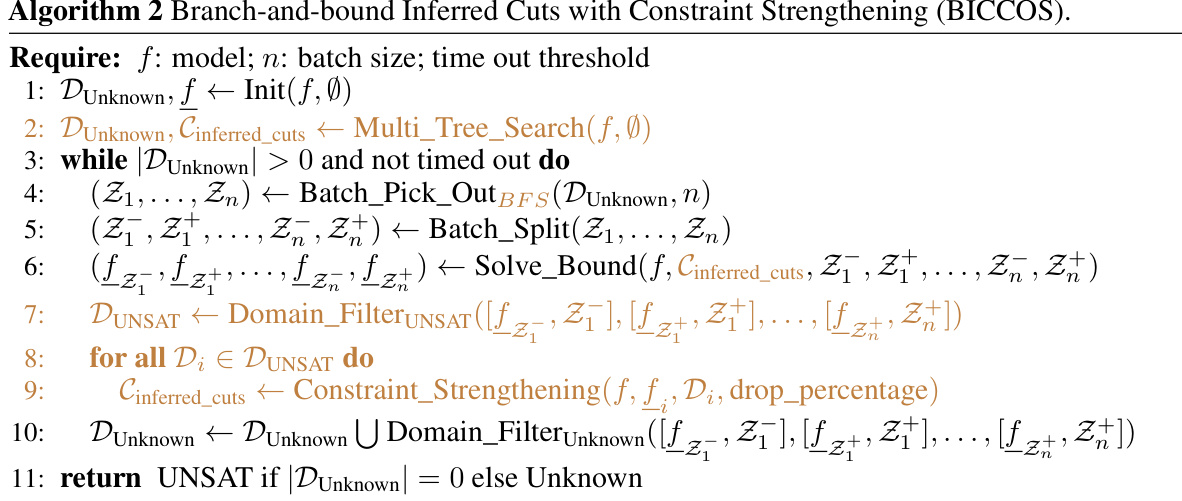

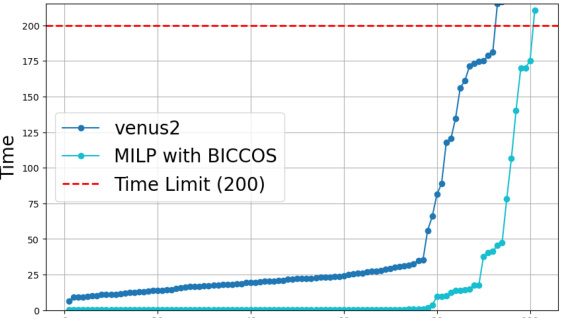

🔼 This figure compares the performance of Venus2 and a Mixed Integer Linear Programming (MILP) solver using BICCOS cuts on four benchmarks. The MILP solver is used for a fair comparison, eliminating the speed advantage of GPU-accelerated bound propagation used in some methods. The results show that the MILP solver with BICCOS cuts consistently outperforms Venus2, demonstrating the efficacy of BICCOS, especially for larger models that Venus2 struggles to handle.

read the caption

Figure 3: Comparison of Venus2 and MILP with BICCOS. For a fair comparison, we do not use GPU-accelerated bound propagation but use a MILP solver (same as in Venus2) to solve the verification problem with our BICCOS cuts. In all 4 benchmarks, MILP with BICCOS cuts is faster than Venus (MILP with their proposed cuts), illustrating the effectiveness. Note that Venus2 can hardly scale to larger models presented in our paper, such as those on cifar100 and tinyimagenet datasets.

🔼 This figure compares the performance of Venus2 and a MILP solver using BICCOS cuts on four benchmarks. A key finding is that the MILP solver with BICCOS cuts is significantly faster than Venus2, highlighting BICCOS’s efficiency. The figure also notes that Venus2 struggles with larger network models, a problem that BICCOS overcomes.

read the caption

Figure 3: Comparison of Venus2 and MILP with BICCOS. For a fair comparison, we do not use GPU-accelerated bound propagation but use a MILP solver (same as in Venus2) to solve the verification problem with our BICCOS cuts. In all 4 benchmarks, MILP with BICCOS cuts is faster than Venus (MILP with their proposed cuts), illustrating the effectiveness. Note that Venus2 can hardly scale to larger models presented in our paper, such as those on cifar100 and tinyimagenet datasets.

🔼 This figure compares the performance of Venus2 and MILP with BICCOS in solving the verification problem. A key difference is that MILP with BICCOS uses a MILP solver (like Venus2), rather than relying on GPU-accelerated bound propagation. The results demonstrate that the MILP solver with BICCOS cuts is faster and more effective than Venus2, especially for larger models. This indicates the scalability and effectiveness of BICCOS.

read the caption

Figure 3: Comparison of Venus2 and MILP with BICCOS. For a fair comparison, we do not use GPU-accelerated bound propagation but use a MILP solver (same as in Venus2) to solve the verification problem with our BICCOS cuts. In all 4 benchmarks, MILP with BICCOS cuts is faster than Venus (MILP with their proposed cuts), illustrating the effectiveness. Note that Venus2 can hardly scale to larger models presented in our paper, such as those on cifar100 and tinyimagenet datasets.

🔼 This figure compares the performance of Venus2 and MILP with BICCOS on four benchmarks. It shows that using a MILP solver with BICCOS cuts results in faster verification than Venus2, which uses a different method for generating cuts. The figure also highlights the scalability limitations of Venus2 when dealing with larger neural network models.

read the caption

Figure 3: Comparison of Venus2 and MILP with BICCOS. For a fair comparison, we do not use GPU-accelerated bound propagation but use a MILP solver (same as in Venus2) to solve the verification problem with our BICCOS cuts. In all 4 benchmarks, MILP with BICCOS cuts is faster than Venus (MILP with their proposed cuts), illustrating the effectiveness. Note that Venus2 can hardly scale to larger models presented in our paper, such as those on cifar100 and tinyimagenet datasets.

🔼 This figure illustrates three key concepts of the BICCOS algorithm. (a) shows how a cut inferred from an UNSAT path in the branch-and-bound tree might not improve bounds because it doesn’t exclude any additional subproblems. (b) demonstrates constraint strengthening, where unnecessary branches are removed from an UNSAT path to create a stronger cut. (c) depicts multi-tree search, where multiple search trees are explored in parallel to generate more cuts, which are then used to improve bounds across all trees.

read the caption

Figure 2: (2a): Inferred cut from UNSAT paths during BaB and why it fails in regular BaB. (2b): Constraint strengthening with Neuron Elimination Heuristic. (2c): Multi-tree search.

More on tables

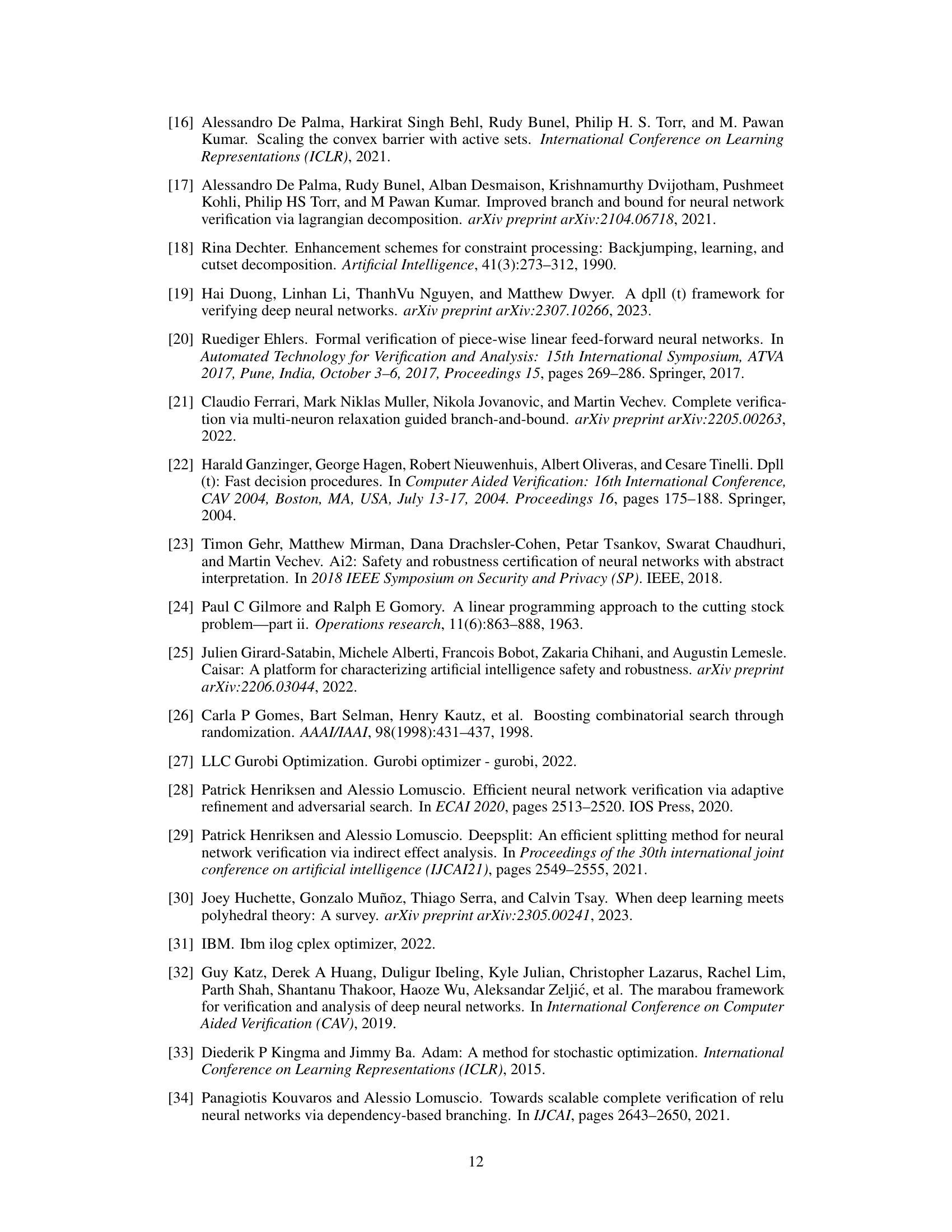

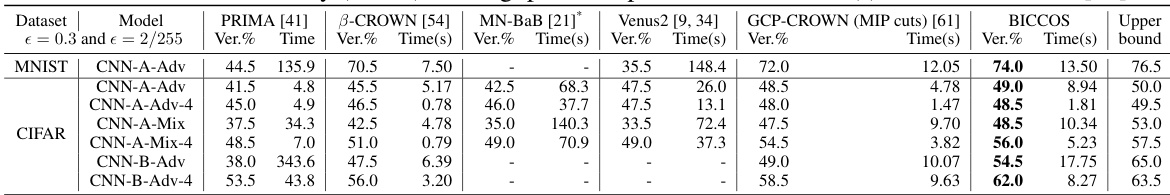

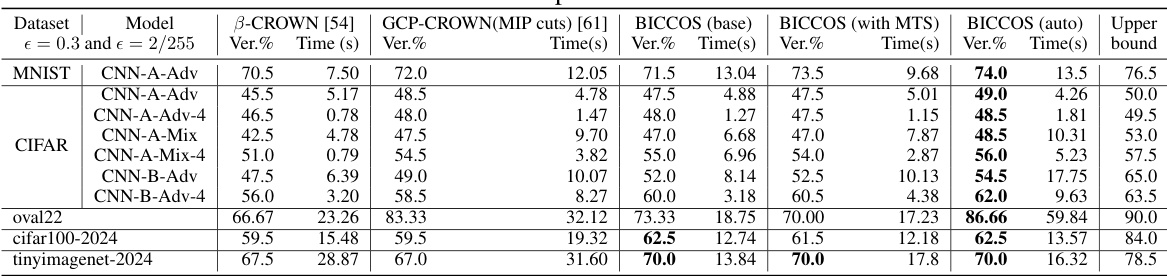

🔼 This table presents the verified accuracy and average per-example verification time for seven different models. The models were tested using various verification methods including PRIMA, B-CROWN, MN-BaB, Venus2, GCP-CROWN (with MIP cuts), and BICCOS. The results show a comparison of the performance of these different methods across various models and highlight the improvement offered by BICCOS.

read the caption

Table 2: Verified accuracy (Ver.%) and avg. per-example verification time (s) on 7 models from [15].

🔼 This table compares the performance of various neural network verification tools, including BICCOS, on several benchmarks from the VNN-COMP competition. The tools are evaluated based on their verification time and the number of instances successfully verified. The table highlights BICCOS’s superior performance, particularly on larger network architectures where other tools struggle.

read the caption

Table 1: Comparison of different toolkits and BICCOS on VNN-COMP benchmarks. Results on non-CROWN or BICCOS were run on different hardware. '-' indicates that the benchmark was not supported.

🔼 This table compares the performance of various neural network verification tools on several VNN-COMP benchmarks. It shows the verification time and the number of verified instances for each tool on different benchmark datasets. The tools compared include several state-of-the-art methods (nnenum, Marabou, ERAN, OVAL, Venus2, VeriNet, MN-BaB, PyRAT, B-CROWN, GCP-CROWN with MIP cuts) and the proposed BICCOS method. The table highlights BICCOS’s superior performance, particularly on larger networks that other cutting-plane methods struggle with.

read the caption

Table 1: Comparison of different toolkits and BICCOS on VNN-COMP benchmarks. Results on non-CROWN or BICCOS were run on different hardware. '-' indicates that the benchmark was not supported.

Full paper#