↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Backdoor attacks in federated learning, where malicious clients manipulate the global model, pose a significant threat. Existing defenses often assume homogeneous data distributions or rely on validation datasets, limiting their applicability to real-world, heterogeneous settings. Furthermore, these methods may struggle with adaptive attacks or cause conflicts with standard federated optimization strategies.

This paper introduces Fisher Discrepancy Cluster and Rescale (FDCR), a novel defense mechanism. FDCR leverages Fisher Information to assess the importance of model parameters in local distributions. By identifying significant discrepancies between benign and malicious clients in parameter importance, FDCR effectively isolates and mitigates the influence of malicious updates. The method also prioritizes the rescaling of important parameters, enhancing model robustness and addressing the limitations of previous methods. The effectiveness of FDCR is demonstrated through comprehensive experiments in various heterogeneous settings under various backdoor attacks.

Key Takeaways#

Why does it matter?#

This paper is important because it proposes a novel defense mechanism against backdoor attacks in heterogeneous federated learning, a critical issue hindering the wider adoption of this technology. The method is effective, efficient, and addresses the limitations of existing defense methods, opening new avenues for research.

Visual Insights#

The figure demonstrates the difference in parameter importance between benign and malicious clients. The left panel shows a similarity matrix representing the pairwise similarity of parameter importance across clients, where benign and malicious clients show distinct patterns. The right panel displays the distribution of parameter importance values, highlighting that benign and malicious clients emphasize different parameters, supporting the core idea of the proposed method (FDCR).

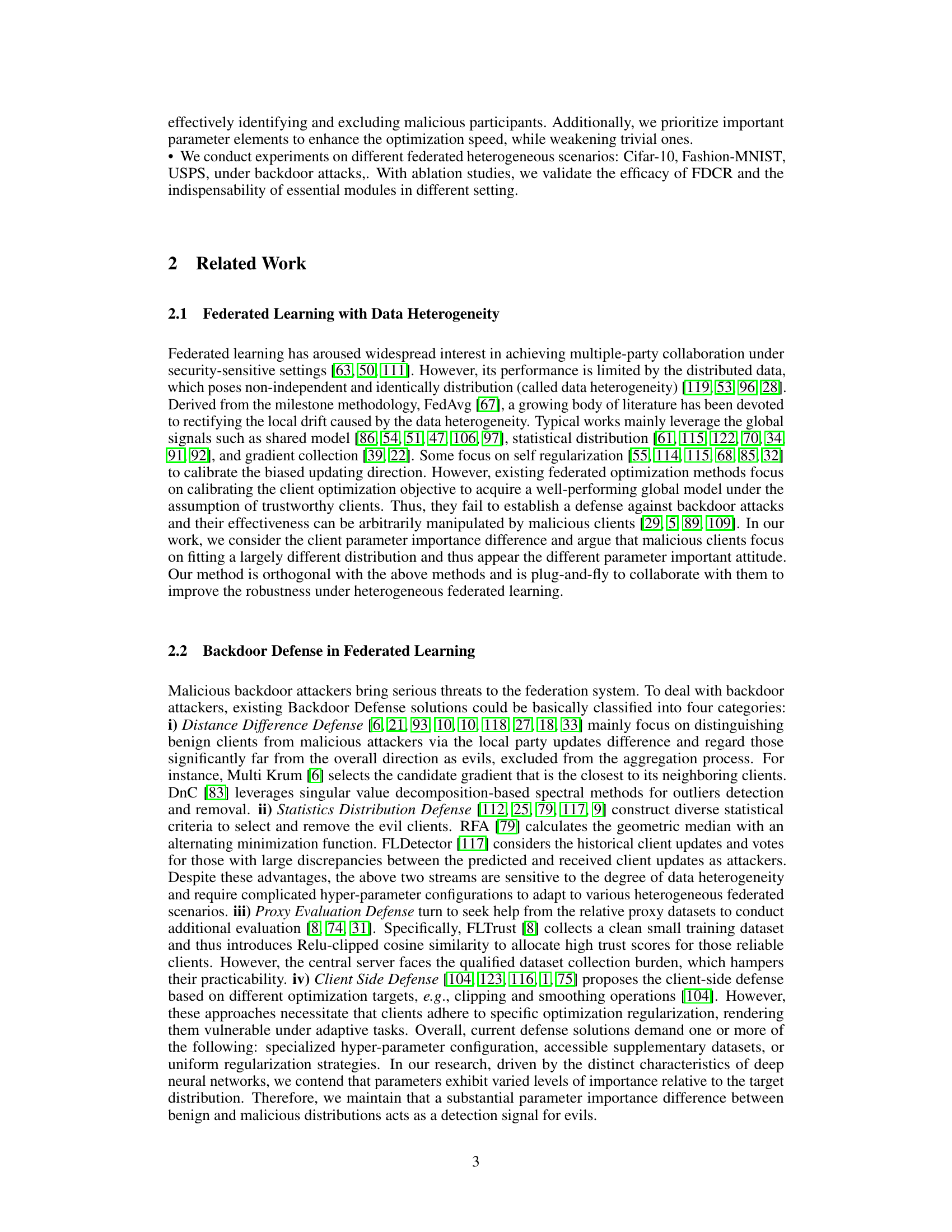

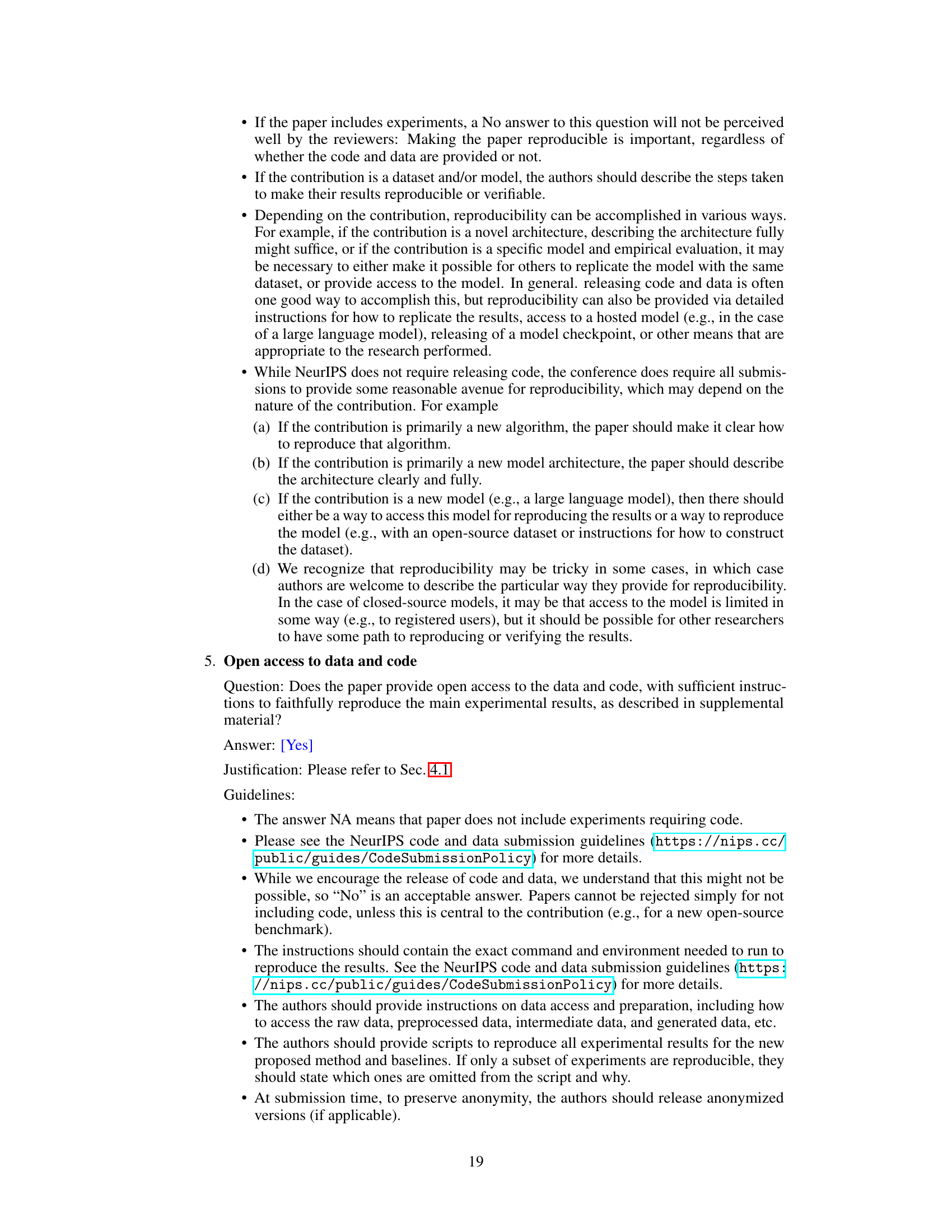

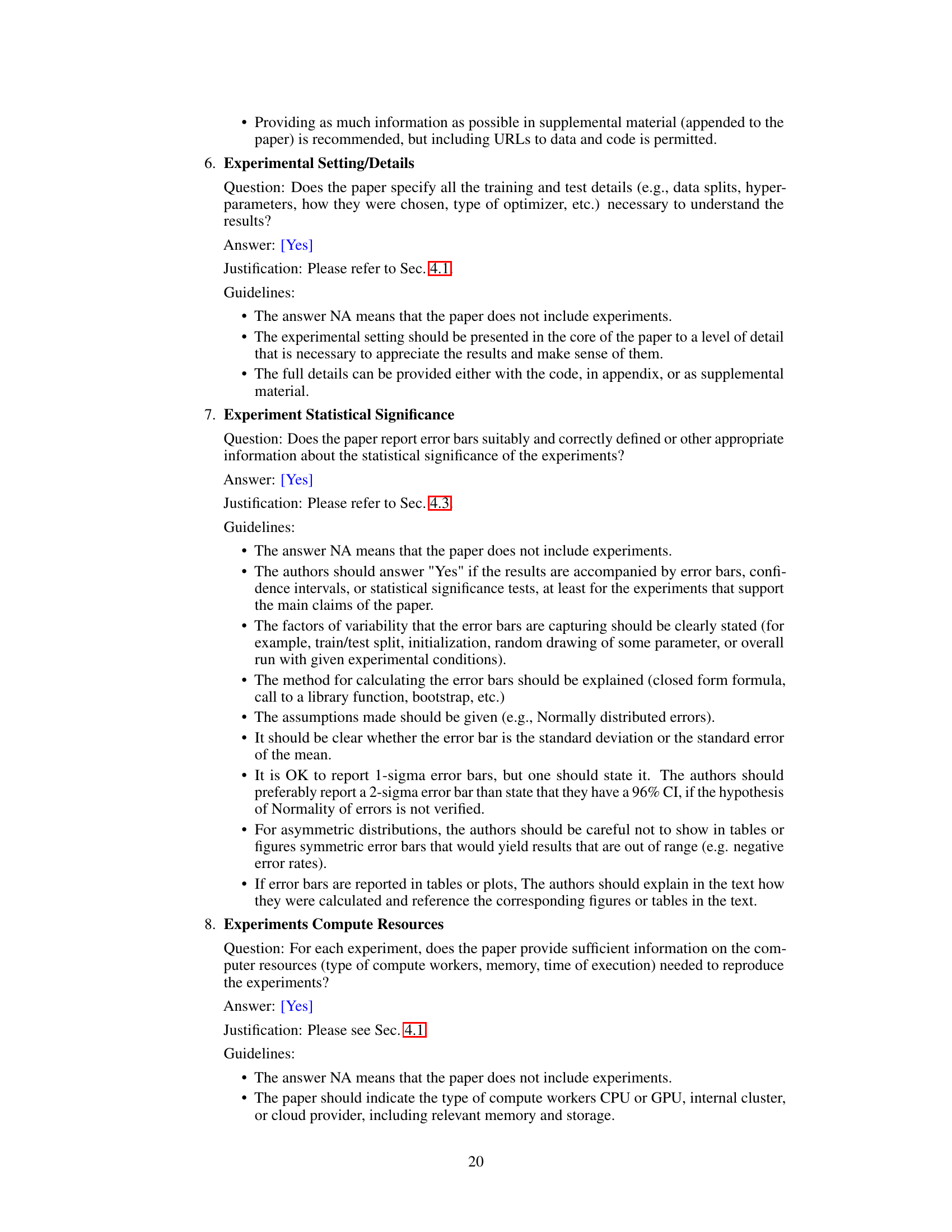

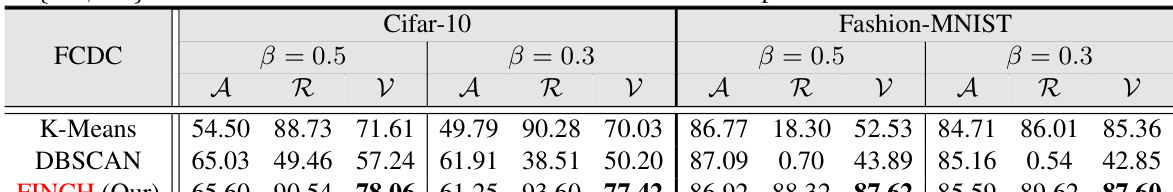

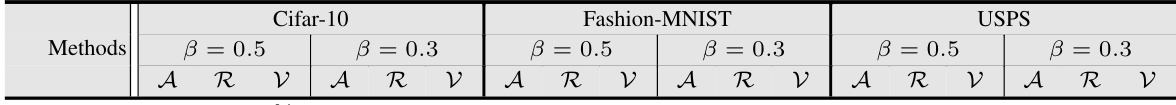

This table compares three different clustering strategies (K-Means, DBSCAN, and FINCH) used in the Fisher Client Discrepancy Cluster (FCDC) method. The comparison is done using two datasets (Cifar-10 and Fashion-MNIST) with two different levels of data heterogeneity (β = 0.5 and β = 0.3), and a malicious client ratio of 30%. The results presented are Accuracy (A), Backdoor Failure Rate (R), and a combined metric (V) that balances these two. The table helps to demonstrate the effectiveness of the chosen clustering method (FINCH) for identifying malicious clients in the context of the FDCR algorithm.

In-depth insights#

Backdoor Attacks#

Backdoor attacks, a severe threat to federated learning, involve malicious actors surreptitiously embedding triggers into a model. These triggers cause the model to behave maliciously only when a specific input pattern is present, otherwise exhibiting normal behavior. The paper focuses on defense mechanisms against these attacks in heterogeneous environments where data is non-identical and independently distributed across clients. Heterogeneity complicates defenses as existing methods often assume homogeneous data. The authors propose a novel approach based on parameter importance analysis to detect and mitigate backdoor attacks. This shows a potential avenue for future research and development of more robust defense strategies. The key is to identify and leverage the inherent differences in parameter sensitivity between benign and malicious client updates to enhance security in federated learning models.

Heterogeneous FL#

Heterogeneous Federated Learning (FL) presents unique challenges compared to its homogeneous counterpart. Data heterogeneity, where clients possess non-identically and independently distributed (non-IID) data, significantly impacts model accuracy and convergence. System heterogeneity, encompassing differences in clients’ computational capabilities and network conditions, further complicates the training process. Effective heterogeneous FL necessitates robust algorithms that can handle skewed data distributions and adapt to varying client resources. This often involves techniques like personalized federated learning, which tailors the model to individual client data, or adaptive aggregation methods that weight client updates based on data quality or resource availability. Addressing these challenges is crucial for realizing the full potential of FL in real-world applications, as it allows participation of diverse and decentralized entities with varying data characteristics and computing resources. Robustness to adversarial attacks is another key concern in heterogeneous FL, requiring strategies to identify and mitigate malicious clients that may intentionally corrupt the model training. Therefore, research in this area focuses on developing robust and efficient algorithms that can ensure accuracy, fairness, and security.

Parameter Importance#

The concept of ‘Parameter Importance’ in the context of a machine learning model, particularly within a federated learning setting, is crucial for understanding model behavior and robustness. Different parameters contribute differently to the model’s overall performance, and identifying these differences is essential. In the paper, the authors likely explore how the importance of parameters varies across different data distributions, specifically highlighting disparities between benign and malicious data. This is a significant area of research because malicious actors may manipulate specific parameters to inject backdoors or otherwise compromise the model’s integrity. By quantifying parameter importance, a defense mechanism can be developed that selectively re-weights or filters updates from unreliable clients, focusing on the most critical parameters. Understanding the interplay of parameter importance and data heterogeneity is key to building robust and secure federated learning systems that are resilient to adversarial attacks. The paper likely presents a novel method for assessing and utilizing parameter importance, leading to enhanced backdoor defense capabilities.

FDCR Method#

The FDCR (Fisher Discrepancy Cluster and Rescale) method is a novel defense mechanism against backdoor attacks in federated learning. It leverages Fisher Information to quantify the importance of parameters within local models, identifying discrepancies between benign and malicious client updates. By clustering clients based on these discrepancies, FDCR effectively isolates and mitigates the influence of malicious actors. Furthermore, a rescaling mechanism prioritizes updates to important parameters, accelerating adaptation and reducing the impact of trivial elements. This two-pronged approach of client identification and parameter re-weighting makes FDCR particularly effective in heterogeneous federated learning environments where data distributions vary significantly across clients, a scenario commonly exploited by backdoor attacks. The effectiveness of FDCR is demonstrated through experiments on diverse scenarios, showcasing its ability to enhance robustness against backdoor attacks.

Future Work#

Future research directions stemming from this work could explore extending FDCR’s applicability to more complex attack scenarios, such as those involving multiple backdoor triggers or adaptive attackers. Investigating the robustness of FDCR under various data heterogeneity levels and network structures is crucial. Additionally, a deeper theoretical analysis examining the relationship between Fisher Information and parameter importance in heterogeneous federated learning could provide valuable insights. Developing more efficient and scalable methods for computing Fisher Information would be beneficial for large-scale deployments. Finally, exploring the generalizability of FDCR to other federated learning tasks beyond backdoor defense, and comparing its performance against other defense mechanisms is a promising avenue for future research.

More visual insights#

More on figures

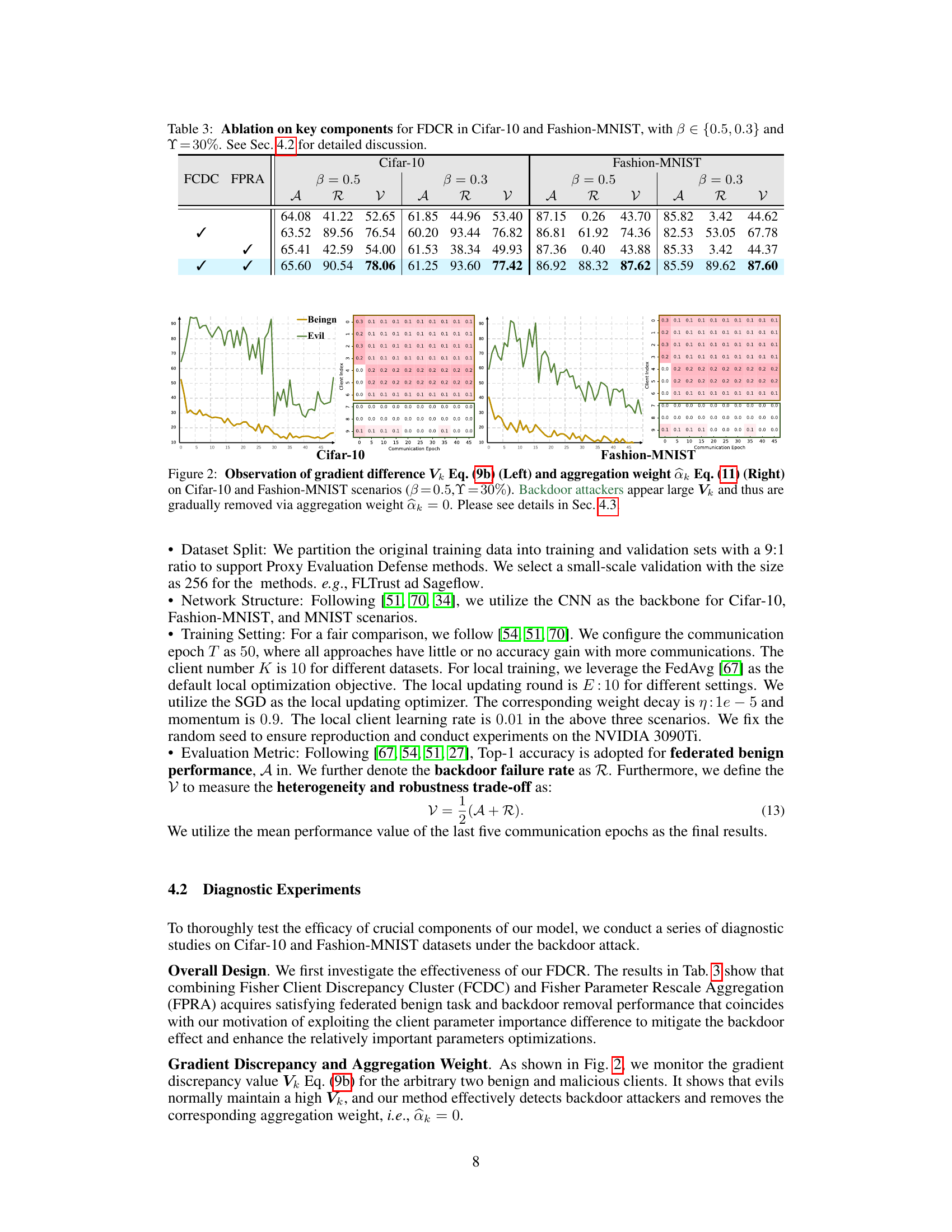

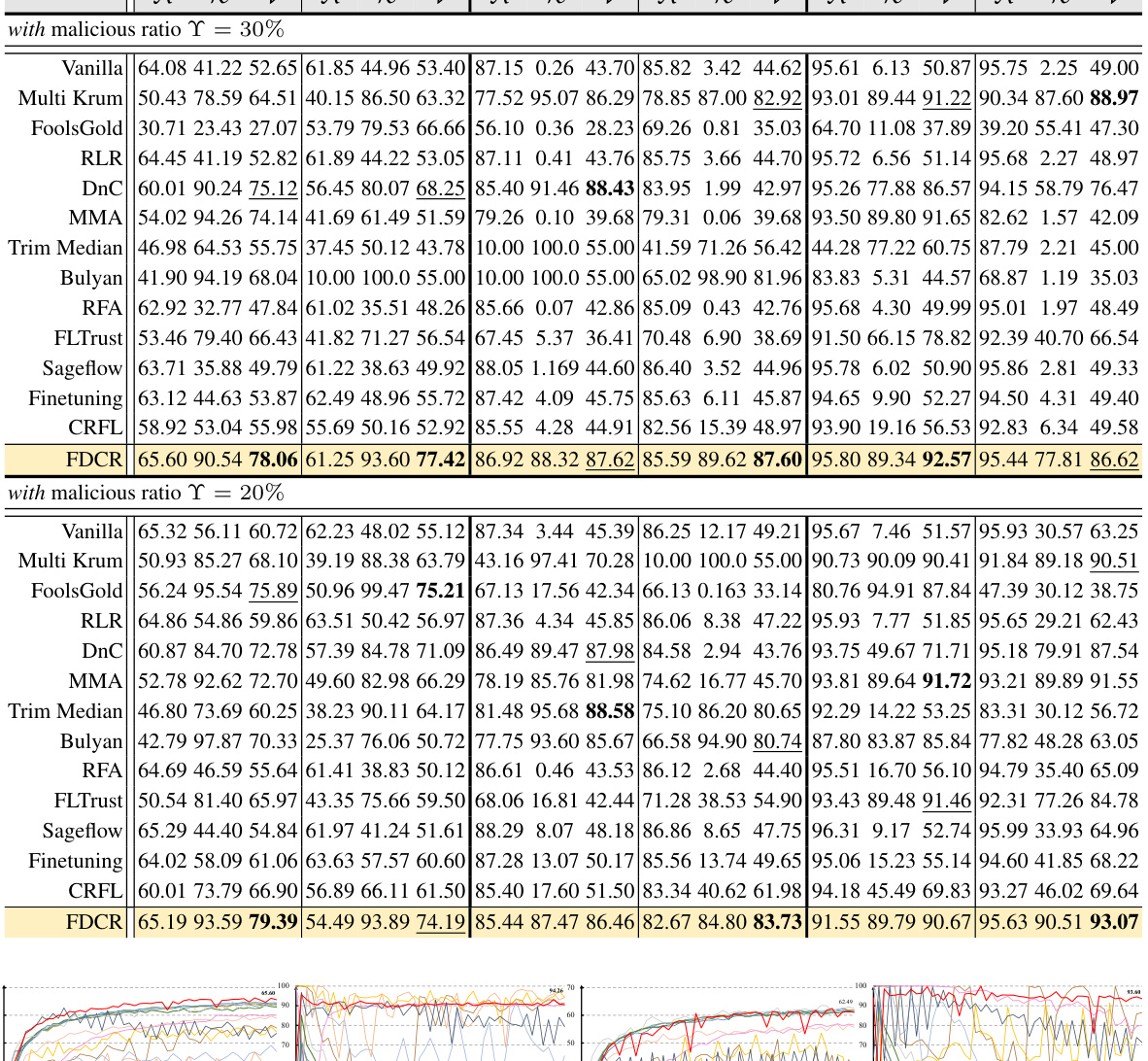

This figure shows the gradient difference (Vk) and aggregation weight (ak) over communication rounds for both Cifar-10 and Fashion-MNIST datasets. It illustrates how the proposed FDCR method identifies and mitigates backdoor attacks. The left plots show that malicious clients (labeled as ‘Evil’) exhibit significantly larger gradient differences (Vk) compared to benign clients (‘Benign’). The right plots demonstrate how the aggregation weights (ak) for malicious clients are reduced to zero over time, effectively removing their influence on the global model update. This visualization supports the effectiveness of the FDCR method in identifying and excluding malicious clients from the federated learning process.

This figure displays the gradient difference (Vk) and aggregation weight (ak) across communication rounds for Cifar-10 and Fashion-MNIST datasets. It visually demonstrates how the proposed FDCR method identifies and mitigates backdoor attacks by showing that malicious clients exhibit a significantly larger gradient difference (Vk) compared to benign clients. Consequently, their aggregation weights (ak) are reduced to near zero, effectively removing their influence on the global model updates.

More on tables

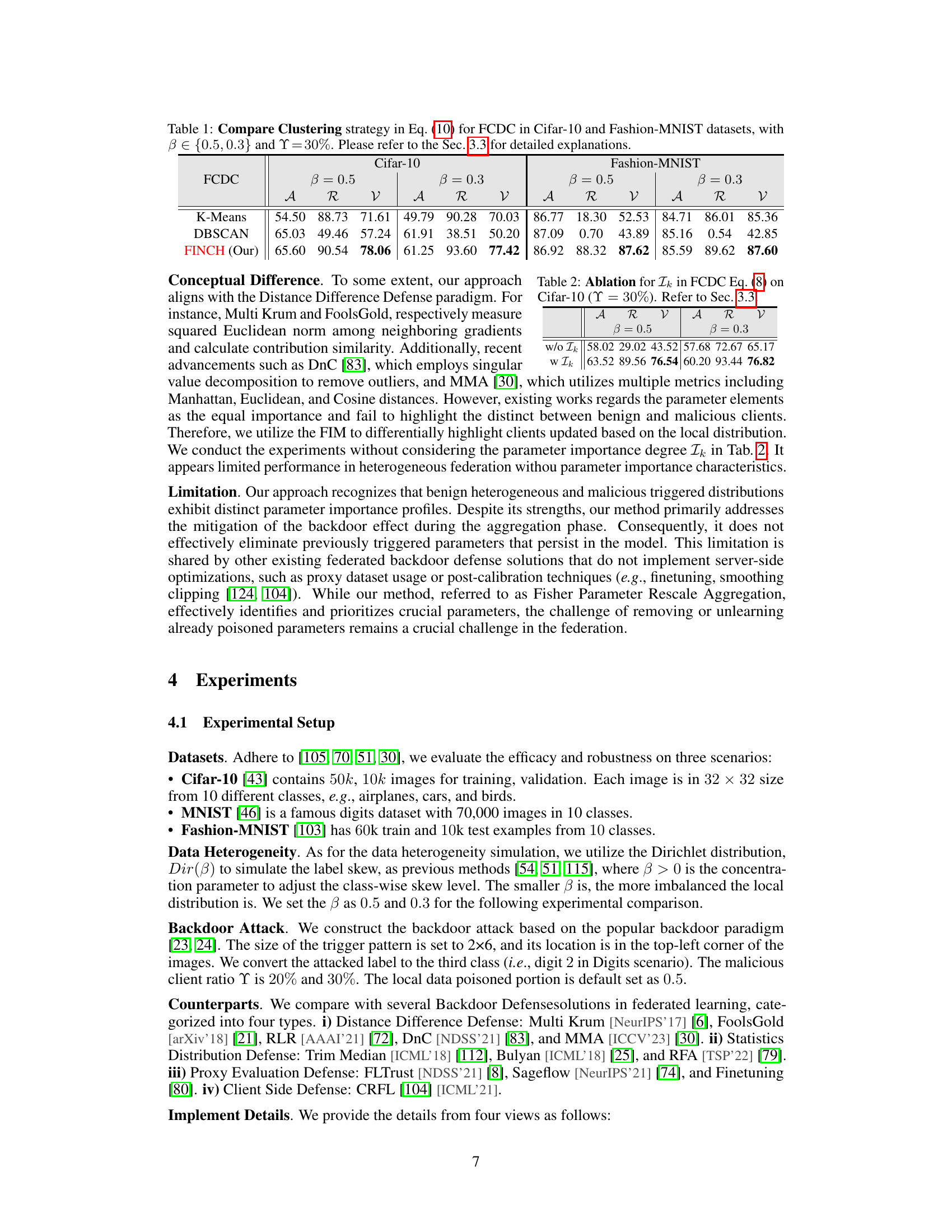

This table presents an ablation study on the impact of parameter importance (Ik) in the Fisher Client Discrepancy Cluster (FCDC) method. It compares the performance (accuracy (A), recall (R), and F1-score (V)) of the FCDC method with and without considering parameter importance (Ik) on the Cifar-10 dataset under backdoor attack conditions where 30% of the clients are malicious (Υ = 30%). The results show the improvement by incorporating Ik into the model.

This table compares the performance of three clustering strategies (K-Means, DBSCAN, and FINCH) used in the Fisher Client Discrepancy Cluster (FCDC) method. The comparison is done across two datasets (Cifar-10 and Fashion-MNIST) with varying degrees of data heterogeneity (β = 0.5 and β = 0.3) and a fixed malicious client ratio (Y = 30%). The results are presented in terms of accuracy (A), backdoor failure rate (R), and a combined metric (ν) that balances accuracy and robustness. Section 3.3 provides more details on the experimental setup and interpretation of these results.

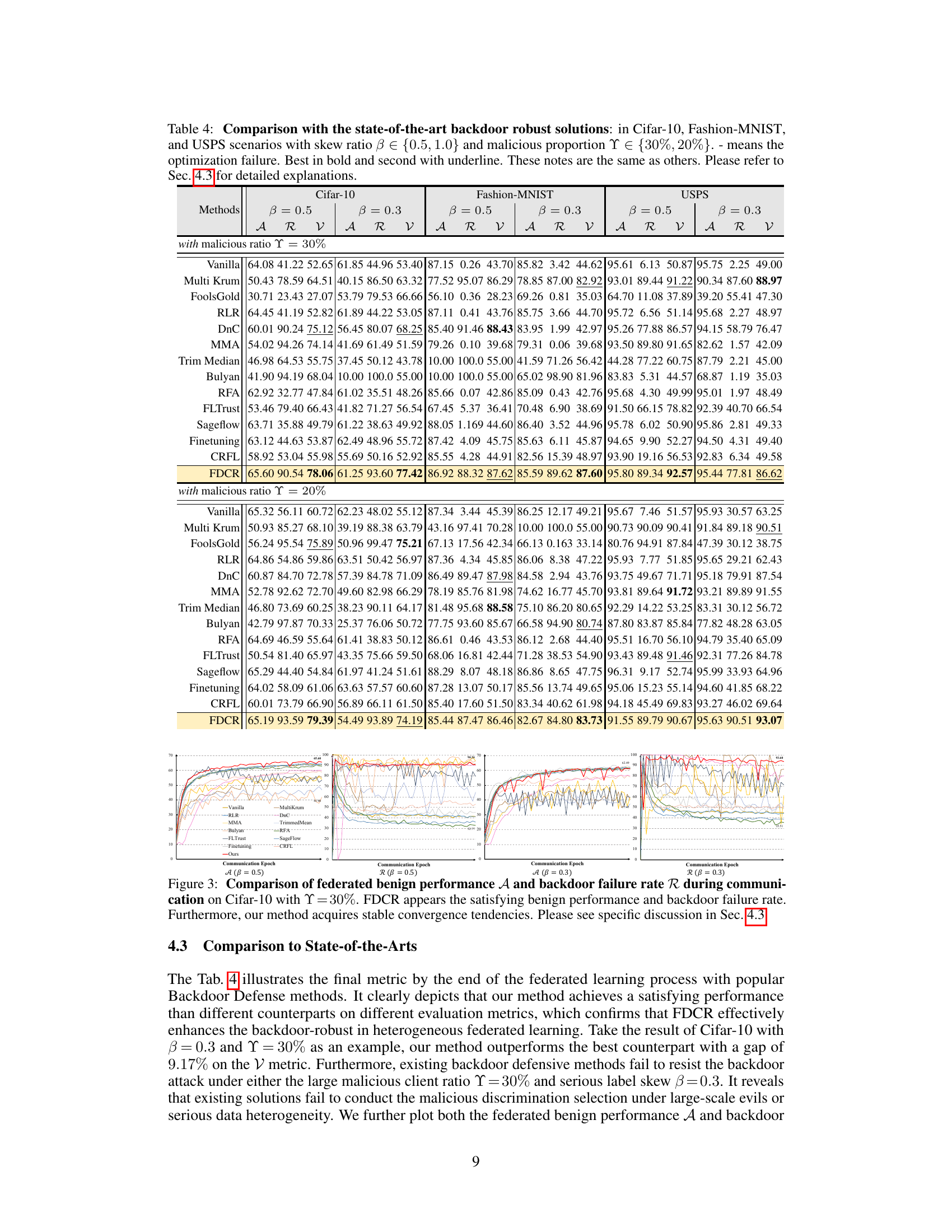

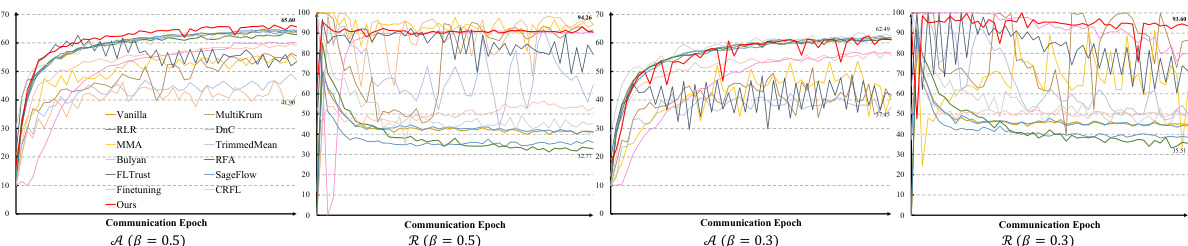

This table compares the performance of the proposed FDCR method against various state-of-the-art backdoor defense solutions on three datasets (Cifar-10, Fashion-MNIST, USPS) under different data heterogeneity (beta = 0.5, 1.0) and malicious client ratios (20%, 30%). The metrics used are Accuracy (A), Backdoor Failure Rate (R), and a combined metric (nu). The table highlights the superior performance of FDCR in mitigating backdoor attacks.

Full paper#