↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Domain adaptation in object detection struggles with the lack of labeled data in new domains. Existing methods often rely on selecting the best model from a validation or test set, which isn’t practical for real-world use. This makes unsupervised model selection crucial for the wider adoption of domain adaptive object detection.

This paper introduces a novel approach called Detection Adaptation Score (DAS). DAS leverages the concept of flat minima—models in these regions tend to generalize better—to estimate model performance without target labels. It combines two scores: Flatness Index Score (FIS) measuring model variance, and Prototypical Distance Ratio (PDR) evaluating the model’s transferability and discriminability. Experiments show that DAS strongly correlates with actual model performance, offering an effective tool for unsupervised model selection in domain adaptive object detection.

Key Takeaways#

Why does it matter?#

This paper is crucial because it proposes a novel unsupervised model selection approach for domain adaptive object detection, a significant challenge in real-world applications where labeled target domain data is scarce. Its method offers a practical solution and opens new avenues for research on model generalization and domain adaptation.

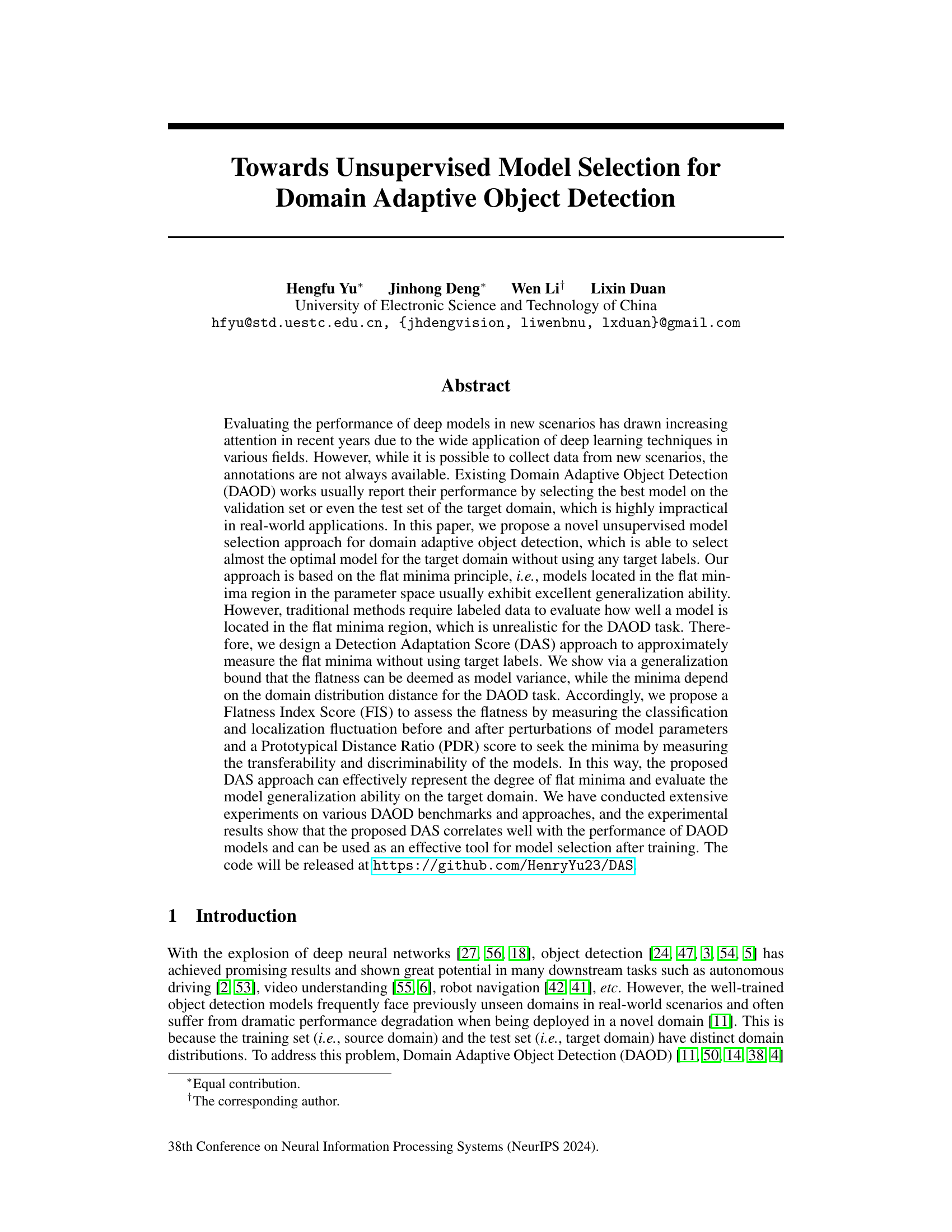

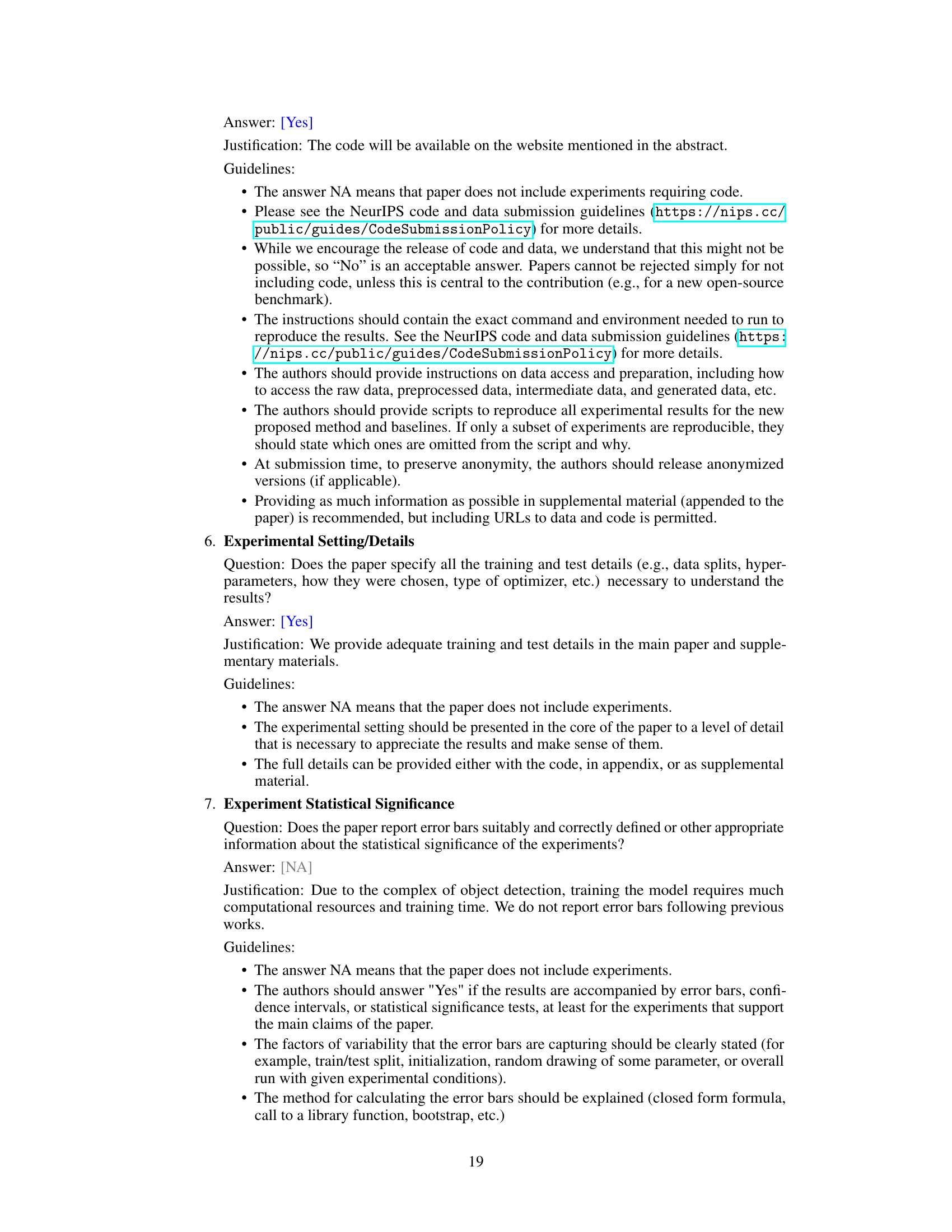

Visual Insights#

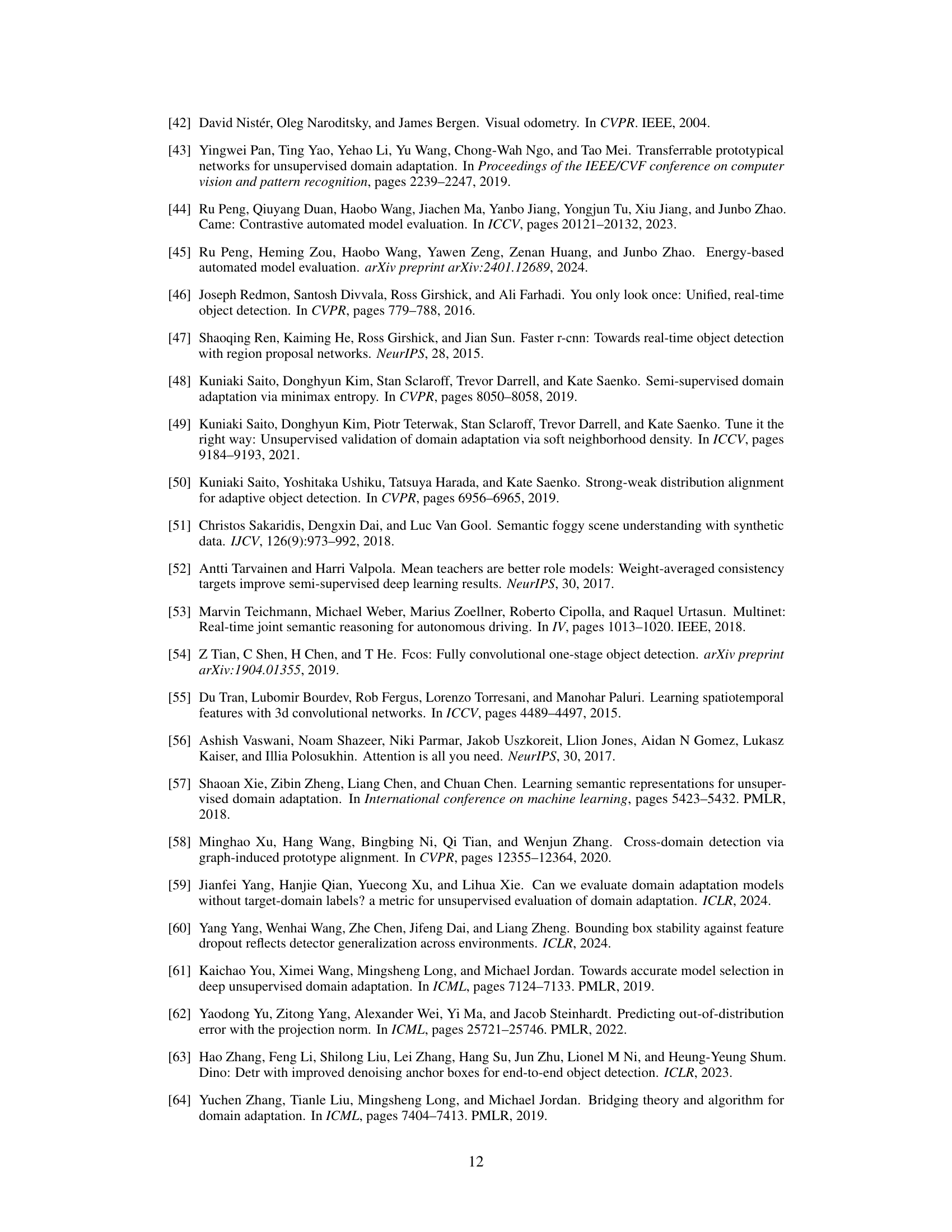

This figure shows two subfigures. Subfigure (a) illustrates the performance degradation of the classic Domain Adaptive Object Detection (DAOD) method, AT [38], during training on the Real-to-Art (P2C) adaptation task, highlighting the issue of performance drop as the training progresses. It also demonstrates the superiority of the proposed Detection Adaptation Score (DAS) in selecting checkpoints without using any target domain labels. Subfigure (b) explains the motivation behind the proposed work, illustrating the architecture of the proposed DAS method, which combines a Prototypical Distance Ratio (PDR) score and a Flatness Index Score (FIS) to evaluate model performance in an unsupervised manner, effectively replacing the need for annotations in DAOD model evaluation.

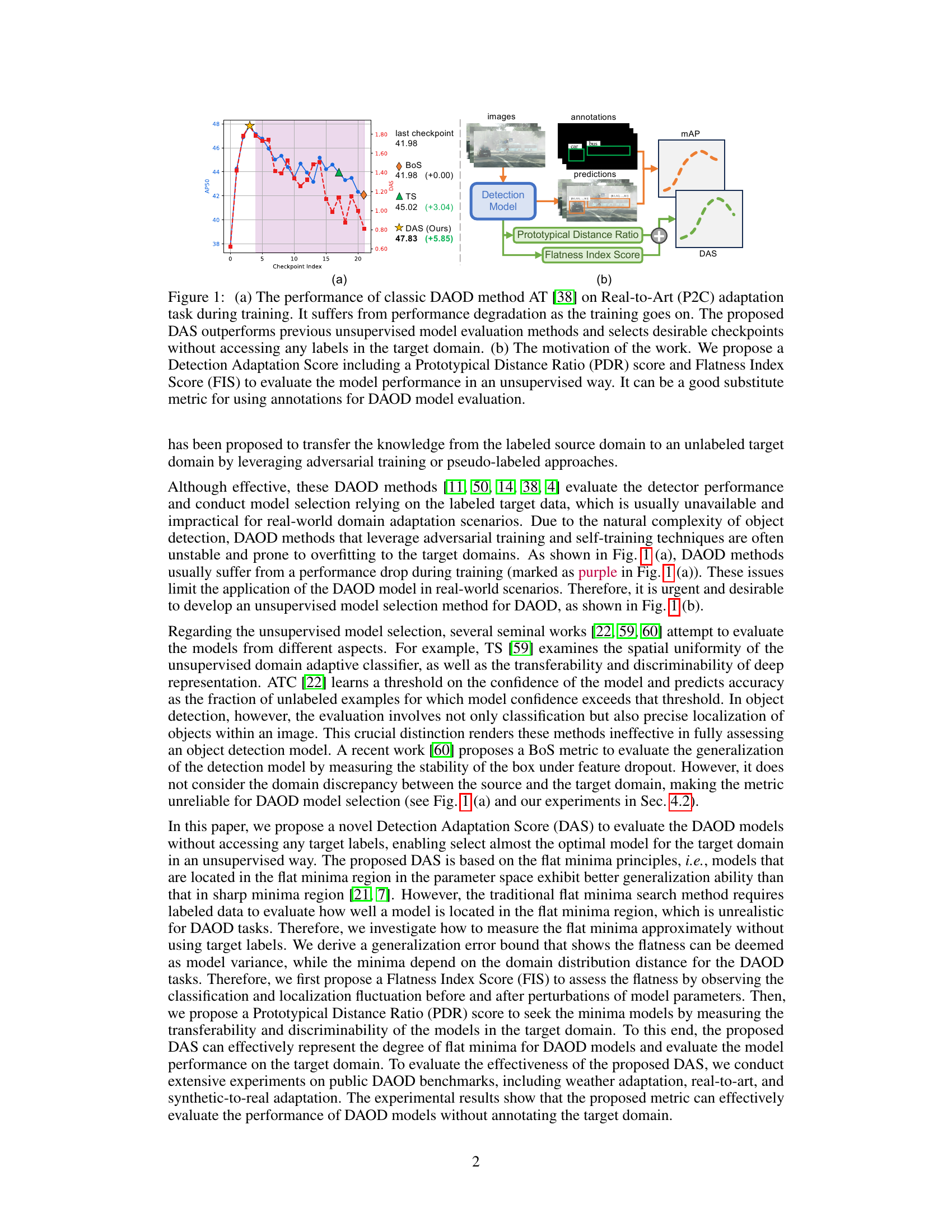

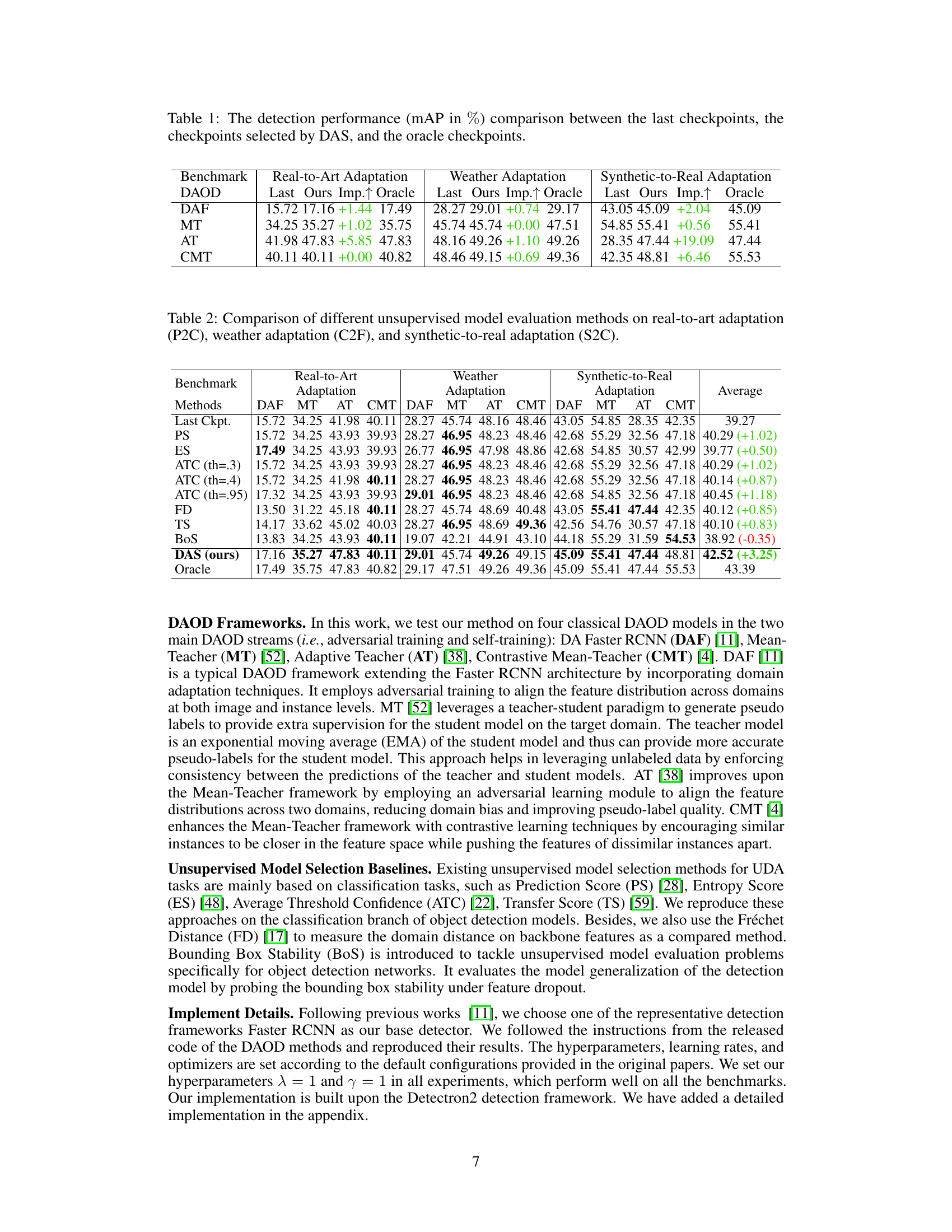

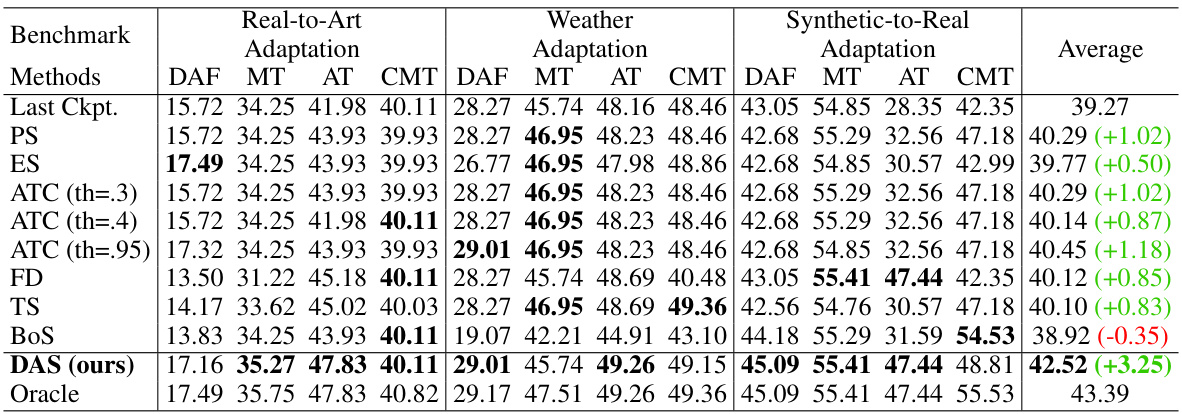

This table presents a comparison of the mean Average Precision (mAP) achieved by different Domain Adaptive Object Detection (DAOD) methods. Three key checkpoints are compared for each method: the last checkpoint reached during training, the checkpoint selected using the proposed Detection Adaptation Score (DAS) method, and an oracle checkpoint (representing optimal performance, obtained using target domain labels). The table shows the mAP for each checkpoint and the improvement gained by using the DAS-selected checkpoint compared to the final training checkpoint. This demonstrates the effectiveness of the DAS in selecting high-performing models without relying on target domain labels.

In-depth insights#

Unsupervised DAOD#

Unsupervised Domain Adaptive Object Detection (DAOD) presents a significant challenge in machine learning. Traditional DAOD methods heavily rely on labeled data from the target domain, which is often unavailable or expensive to obtain. Unsupervised DAOD aims to address this limitation by developing techniques that can adapt models to new domains without requiring target domain annotations. This requires innovative approaches to model selection and evaluation, possibly leveraging techniques like measuring model flatness, transferability, and discriminability. A key focus is on developing robust metrics that can accurately estimate a model’s performance on the target domain using only source domain data and unlabeled target data. This could involve analyzing the model’s behavior under perturbations or comparing its predictions to prototypes generated from the unlabeled target data. Successfully tackling unsupervised DAOD would greatly broaden the applicability of DAOD to real-world scenarios where labeled target data is scarce, leading to more robust and adaptable object detection systems. The development of effective unsupervised model selection methods is crucial to identifying the optimal model without relying on potentially unavailable target domain labels.

Flat Minima Focus#

The concept of “Flat Minima Focus” in a research paper likely centers on the idea that deep learning models with parameters residing in flat minima of the loss landscape tend to generalize better. Flat minima are characterized by a relatively wide region of parameter space around the minimum loss value, meaning that small perturbations to the model’s parameters do not significantly affect its performance. This contrasts with sharp minima, where even slight changes can result in substantial performance degradation. The focus on flat minima, therefore, suggests a methodology or analysis designed to identify or promote models with this desirable property. The paper likely explores techniques to either directly find such models or to indirectly encourage their emergence during training, which could involve techniques like regularization or specific optimization strategies. Identifying and promoting flat minima often translates to enhanced robustness and generalization ability in unseen data or domains, mitigating issues of overfitting and improving model stability in real-world applications. The research likely presents empirical evidence supporting the benefits of the “Flat Minima Focus,” demonstrating improved performance metrics compared to models optimized for sharp minima.

DAS: Novel Metric#

The proposed Detection Adaptation Score (DAS) presents a novel approach to unsupervised model selection in domain adaptive object detection (DAOD). It cleverly leverages the principle of flat minima, suggesting that models residing in flatter regions of the parameter space tend to generalize better. Instead of relying on unavailable target domain labels, DAS ingeniously employs a Flatness Index Score (FIS) to assess model robustness against perturbations and a Prototypical Distance Ratio (PDR) to measure transferability and discriminability. The combination of FIS and PDR effectively estimates the model’s generalization ability without target annotations, making it a highly practical tool for real-world DAOD applications. The effectiveness of DAS is thoroughly validated through experiments across several benchmark datasets and DAOD methods, showcasing its strong correlation with actual DAOD performance and highlighting its potential to significantly improve model selection in this challenging field.

Benchmark Results#

A dedicated ‘Benchmark Results’ section in a research paper provides crucial validation for the proposed methods. It should present a comprehensive comparison against established state-of-the-art techniques. Clear metrics are vital; these should be consistently applied across all methods, highlighting both the strengths and weaknesses of each approach. The selection of benchmarks is also critical; they should be relevant, sufficiently challenging, and representative of the problem domain. Statistical significance should be demonstrated (e.g., confidence intervals or p-values). The discussion should go beyond a simple table of numbers, providing insightful analysis of the results and explaining any unexpected or particularly noteworthy findings. Visualizations such as graphs or charts can significantly enhance understanding and help uncover trends. Finally, the results section should acknowledge any limitations of the benchmark process itself and suggest potential avenues for future work.

Future Work: DAOD#

Future research in Domain Adaptive Object Detection (DAOD) could significantly benefit from exploring more sophisticated unsupervised model selection techniques. Improving the robustness and generalization ability of existing methods is crucial, potentially through advancements in flat minima detection or the development of novel metrics that better capture the nuances of domain transfer. Investigating how to effectively leverage limited labeled target data to improve model selection accuracy is also essential for real-world applicability. Furthermore, future research should focus on developing more efficient and scalable approaches to address the computational challenges associated with training and evaluating DAOD models, potentially employing techniques like active learning or transfer learning. Finally, exploring the application of DAOD to more diverse and challenging scenarios, such as those involving significant variations in viewpoint or illumination, will be critical for extending the practical impact of DAOD to a broader range of applications.

More visual insights#

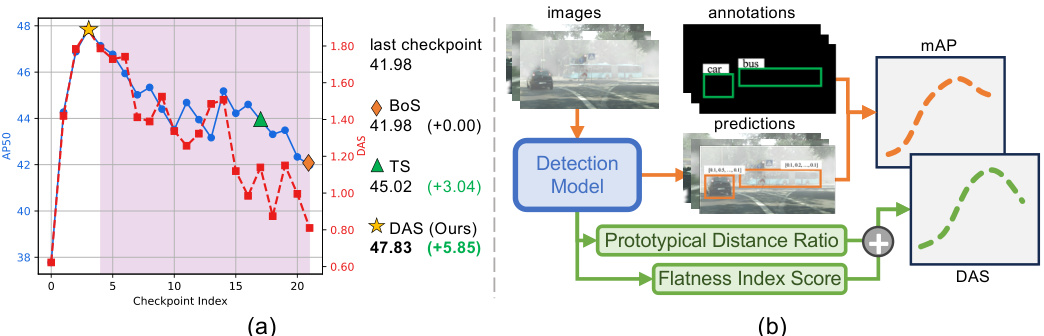

More on figures

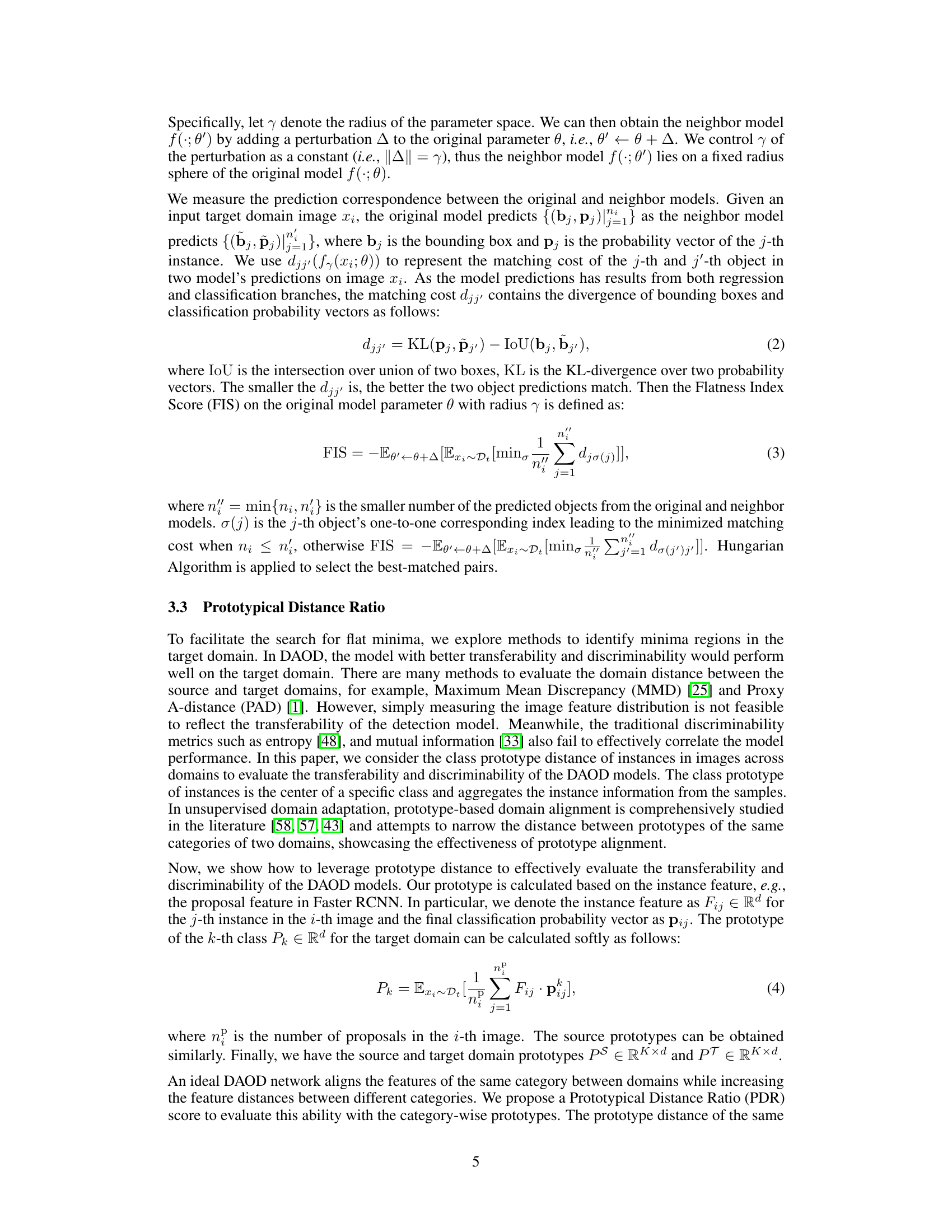

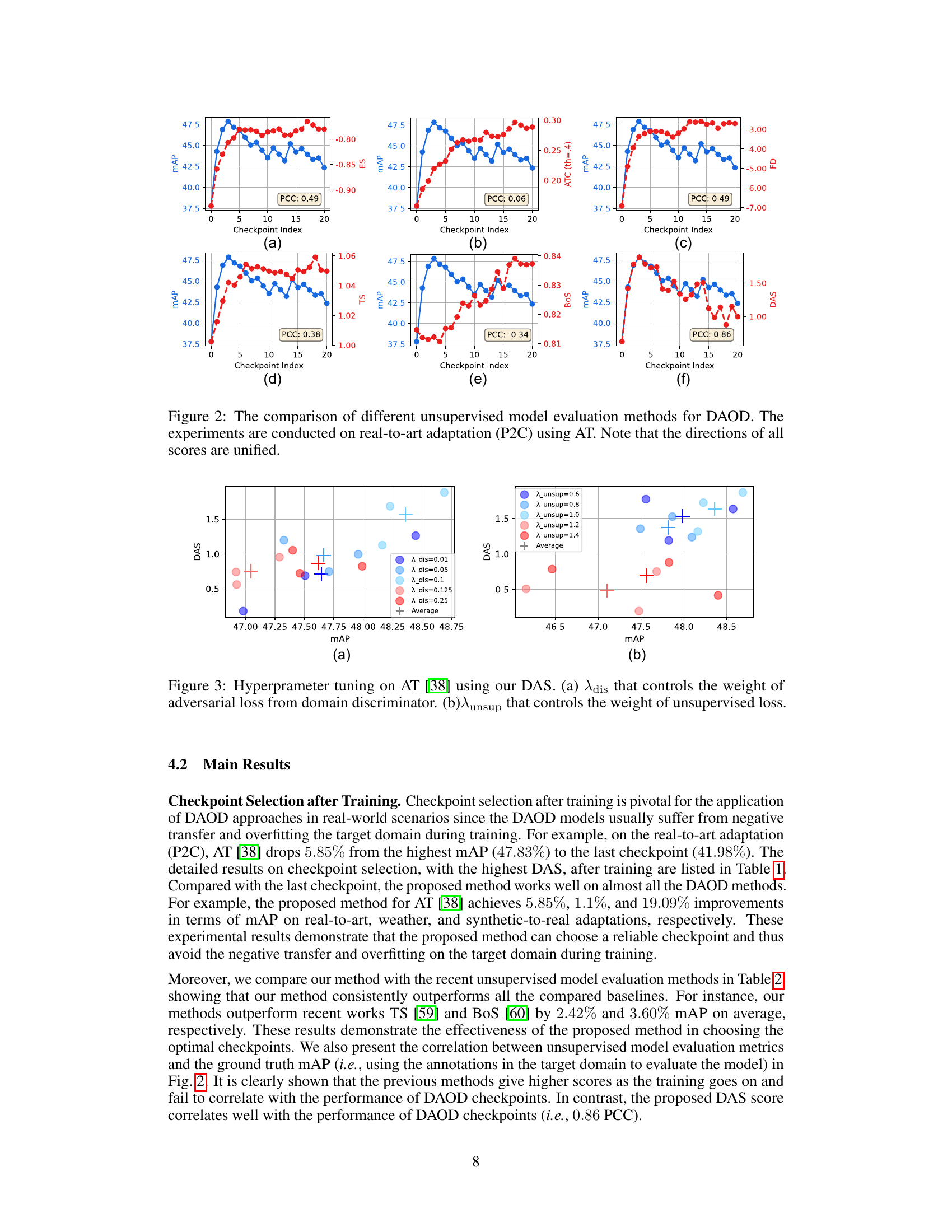

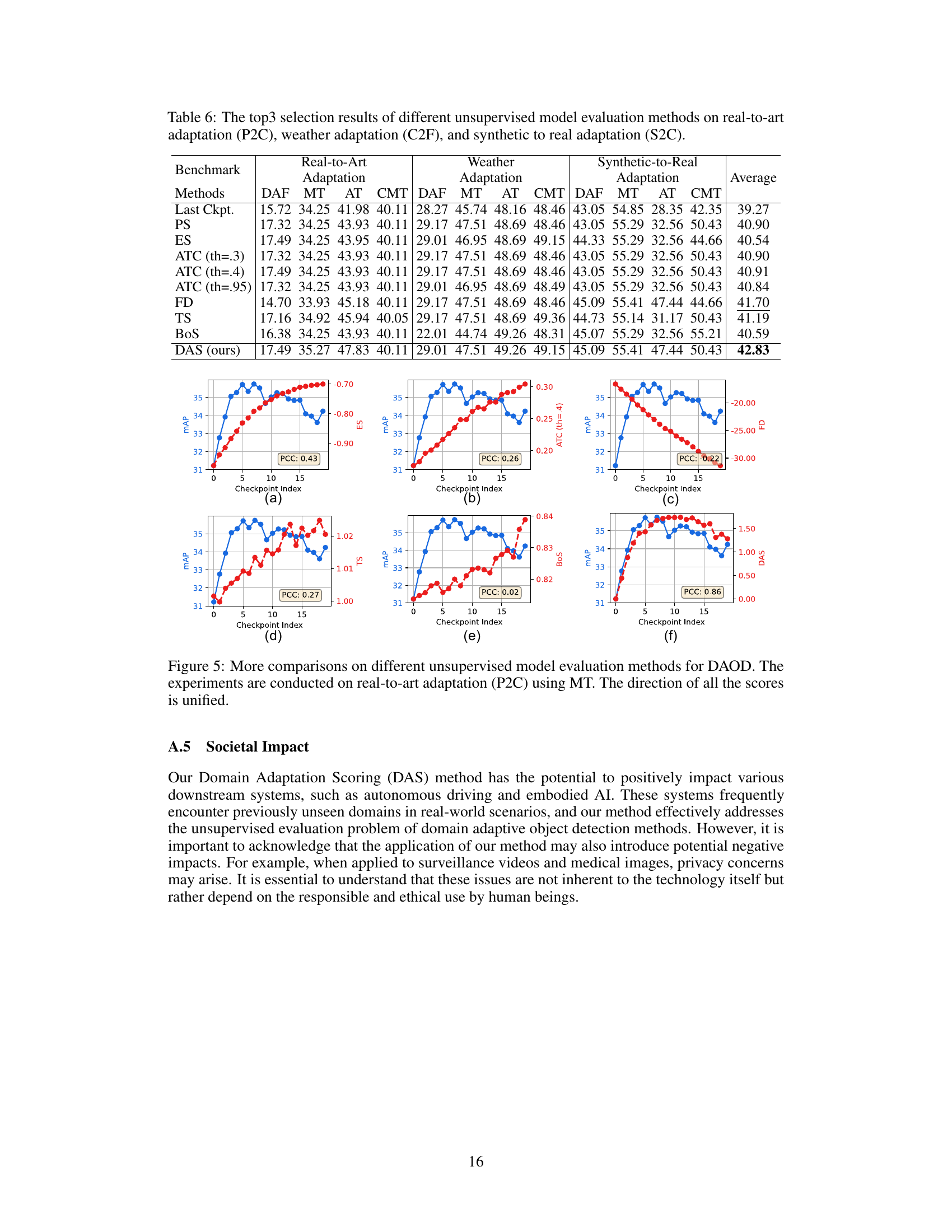

This figure compares different unsupervised model evaluation methods for Domain Adaptive Object Detection (DAOD) on the Real-to-Art adaptation task using the Adaptive Teacher (AT) method. It shows the performance (mAP) of the AT model at various checkpoints during training, along with the scores of different evaluation methods (PS, ES, ATC, BoS, DAS). The goal is to find an effective method that accurately predicts the model’s performance on the target domain without using any target domain labels. The plot shows that the proposed Detection Adaptation Score (DAS) correlates best with the actual mAP, indicating it’s superior to other methods for unsupervised model evaluation.

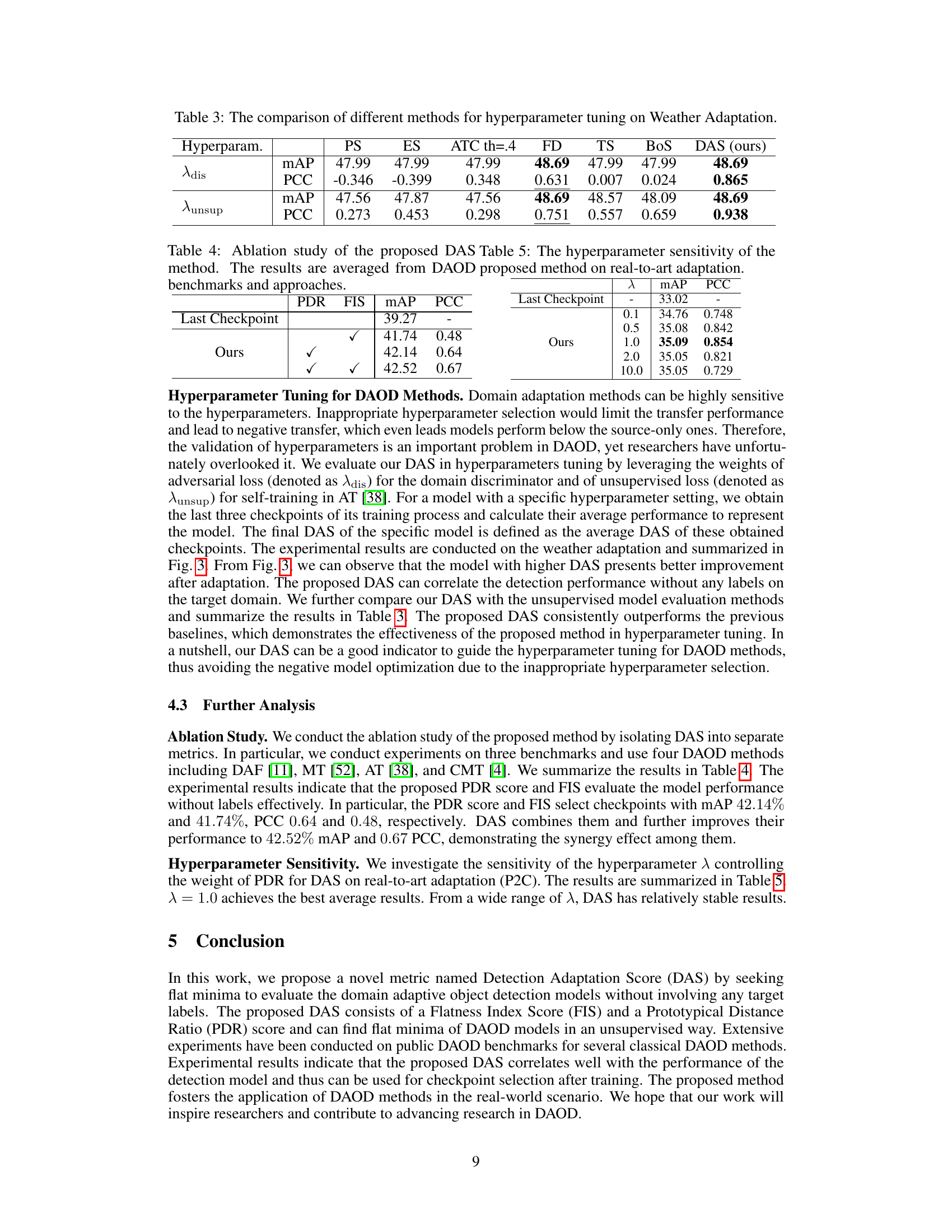

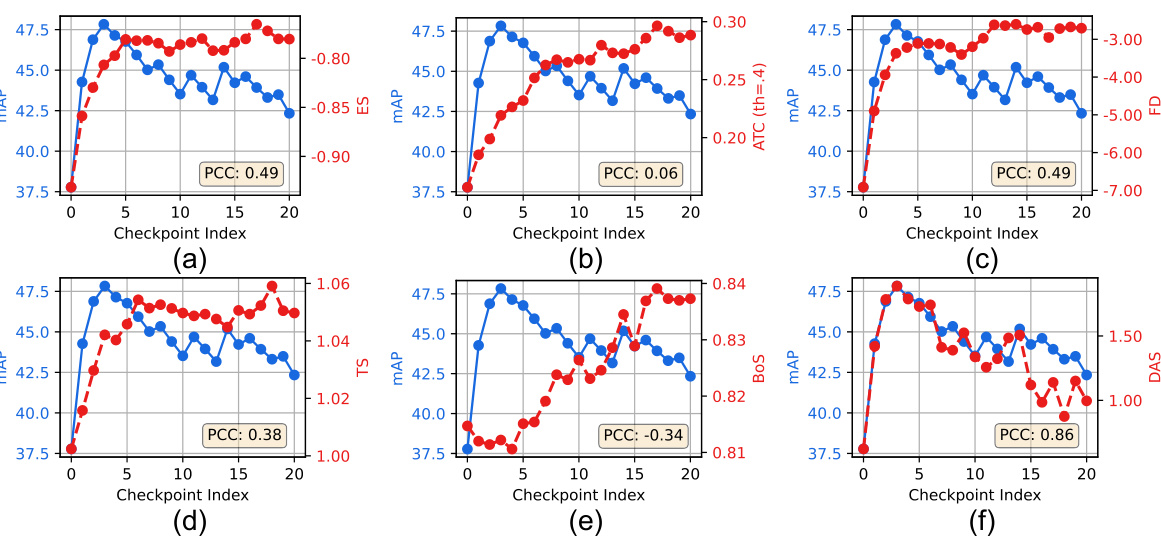

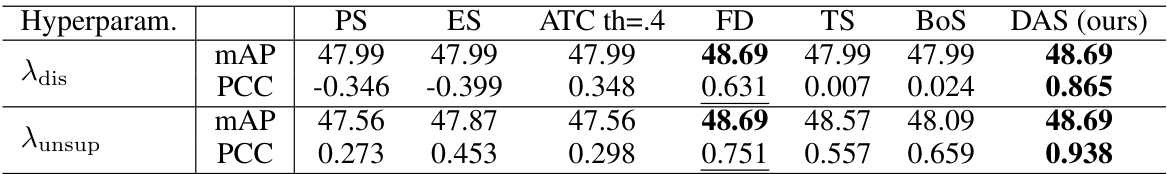

This figure shows the results of hyperparameter tuning experiments conducted using the proposed Detection Adaptation Score (DAS) method on the Adaptive Teacher (AT) object detection model. Two hyperparameters are tuned: λdis, which controls the weight of the adversarial loss from the domain discriminator; and λunsup, which controls the weight of the unsupervised loss. The plots illustrate the impact of varying these hyperparameters on the DAS score and the mean average precision (mAP) achieved, demonstrating how DAS can be used for effective hyperparameter tuning in domain adaptation.

This figure compares the performance gap between the last checkpoint of the training process and the oracle checkpoint (using ground truth labels) for three different domain adaptation scenarios: real-to-art, weather, and synthetic-to-real. The bars represent the difference in mAP between the last checkpoint and the oracle checkpoint for each of four different DAOD frameworks. The figure demonstrates the significant performance gains achievable by using the proposed DAS method to select optimal checkpoints.

More on tables

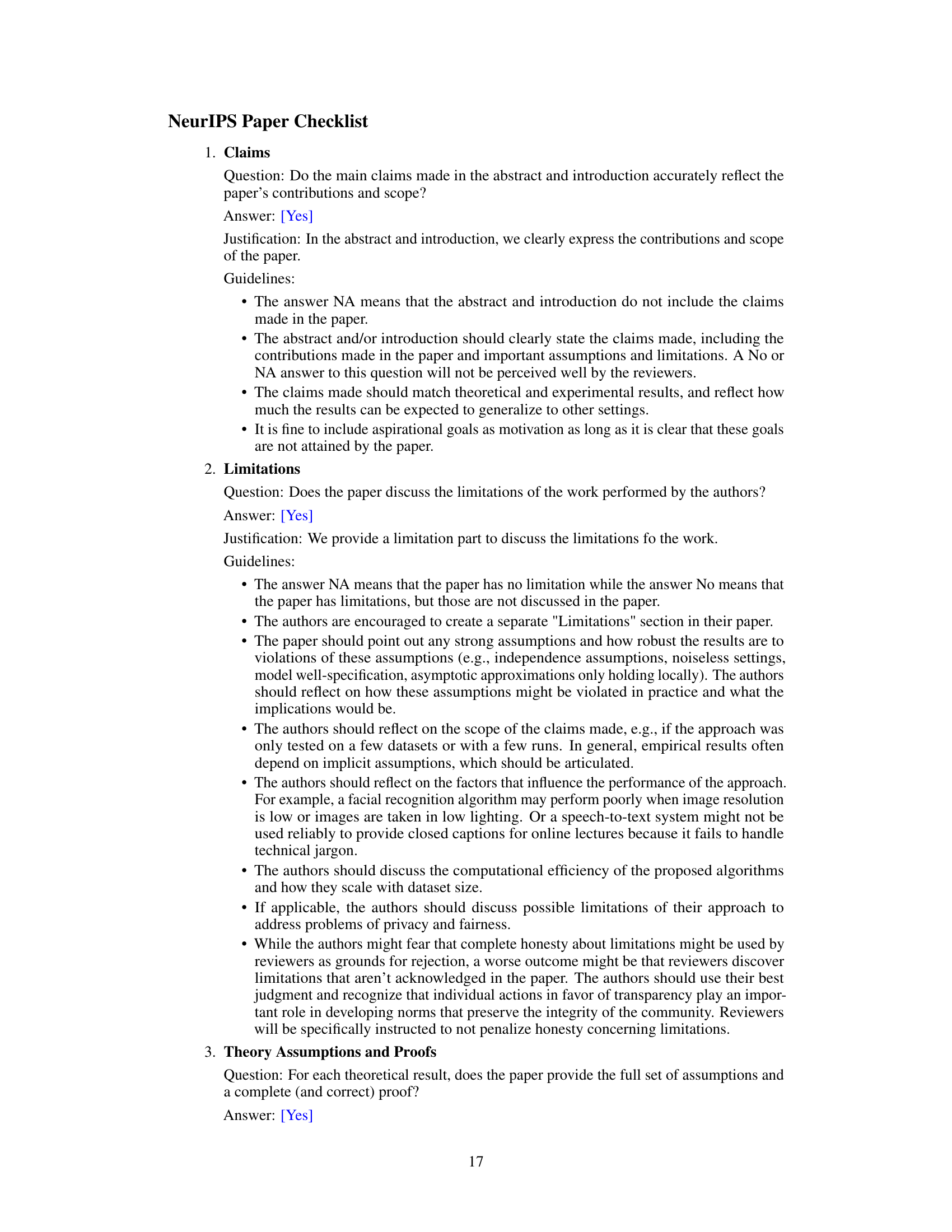

This table compares the mean Average Precision (mAP) of object detection models across three domain adaptation scenarios: Real-to-Art, Weather, and Synthetic-to-Real. It shows the performance of the last checkpoint of model training, the checkpoint selected using the proposed Detection Adaptation Score (DAS) method, and an ‘oracle’ checkpoint (the best performing checkpoint identified using target domain labels, which is usually unavailable in real-world scenarios). The improvement achieved by DAS over the last checkpoint is also indicated. This comparison highlights the effectiveness of DAS in selecting high-performing models without the need for target domain annotations.

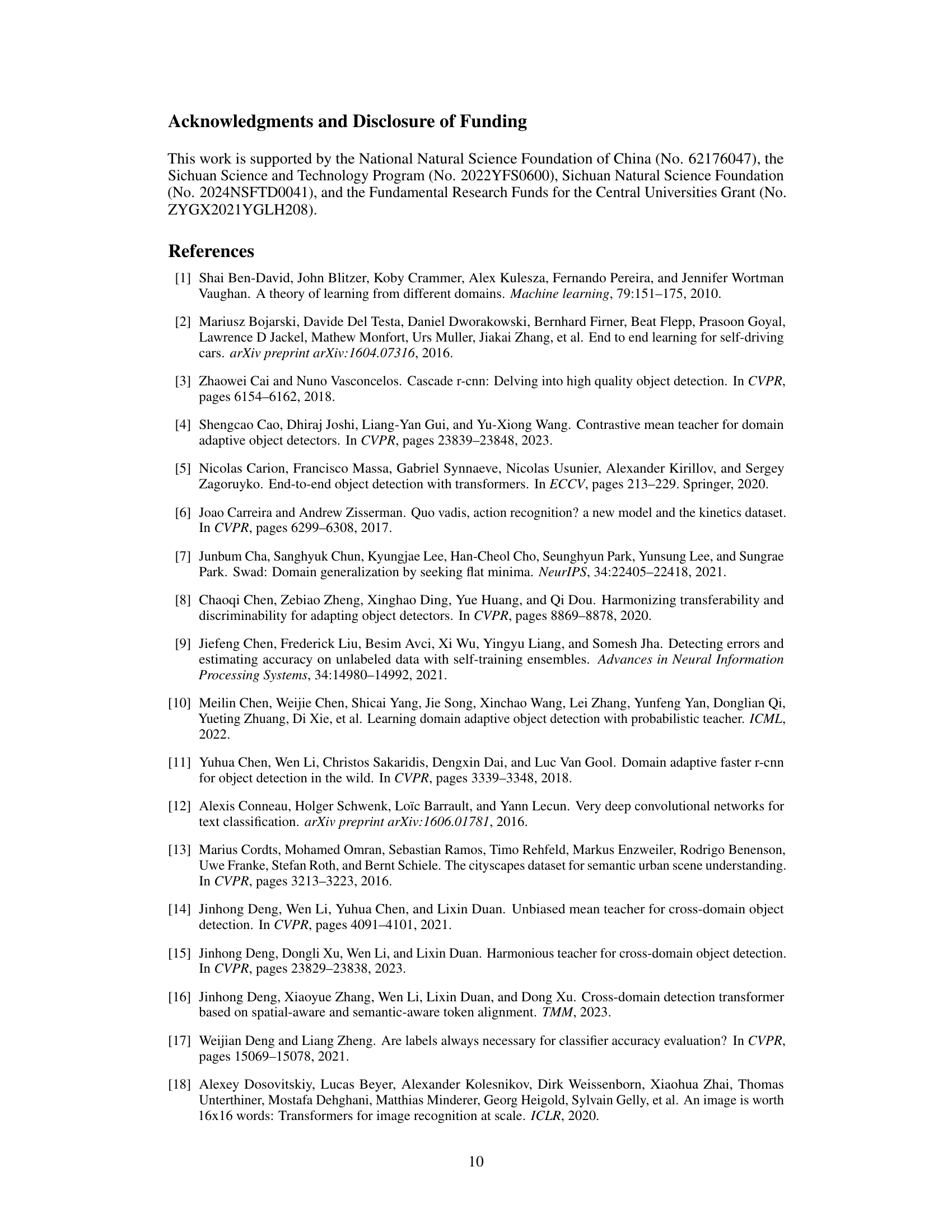

This table compares the performance of different methods for hyperparameter tuning on the Weather Adaptation task. It shows the mean Average Precision (mAP) and Pearson Correlation Coefficient (PCC) for each method across different hyperparameters (λ_dis and λ_unsup). The results highlight that the proposed DAS method outperforms other methods in terms of both mAP and PCC, indicating its superior performance in hyperparameter tuning for this specific domain adaptation task.

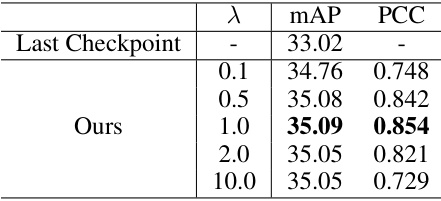

This table shows the impact of the hyperparameter λ (lambda) on the performance of the proposed Detection Adaptation Score (DAS) method on the real-to-art adaptation task. The mAP (mean Average Precision) and PCC (Pearson Correlation Coefficient) values are reported for different values of λ, ranging from 0.1 to 10.0. The results highlight the sensitivity of the method to the hyperparameter and demonstrate that a value of λ = 1.0 yields the best overall performance.

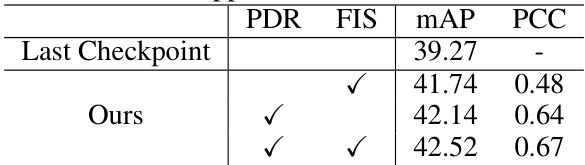

This table presents the ablation study of the proposed Detection Adaptation Score (DAS) method. The results are averaged across multiple DAOD (Domain Adaptive Object Detection) benchmarks and approaches, showing the impact of different components of DAS on the overall performance. It demonstrates the effectiveness of combining the Flatness Index Score (FIS) and the Prototypical Distance Ratio (PDR) to improve the model selection.

This table compares the mean Average Precision (mAP) of object detection models on three different domain adaptation tasks (Real-to-Art, Weather, and Synthetic-to-Real). It shows the performance of the last checkpoint during training, the checkpoint selected by the proposed Detection Adaptation Score (DAS) method, and the optimal checkpoint (oracle) as determined by using annotations from the target domain. The ‘Imp.↑’ column indicates the improvement in mAP achieved by DAS compared to the last checkpoint. This table demonstrates the effectiveness of the DAS in selecting high-performing checkpoints without relying on target domain annotations.

Full paper#