TL;DR#

Existing sEMG-based gesture recognition systems suffer from high latency, high energy consumption, and sensitivity to variations in real-world conditions. The inherent instability of sEMG signals and the distribution shifts across different forearm postures make it hard to build robust models. Together, these problems limit the use of sEMG in real-world applications.

SpGesture, a novel framework based on Spiking Neural Networks (SNNs), addresses these challenges. It introduces a Spiking Jaccard Attention mechanism to enhance feature representation and a Source-Free Domain Adaptation technique to improve model robustness. Experimental results on a new sEMG dataset show that SpGesture achieves high accuracy and real-time performance (latency below 100 ms), outperforming existing methods. The code for SpGesture is publicly available.

Why does it matter?#

This paper is relevant to researchers working on sEMG-based gesture recognition and neuromorphic computing. It targets the high latency and energy consumption of existing sEMG systems with an SNN-based design. It also brings source-free domain adaptation to spiking neural networks, which opens a path toward models that stay robust under distribution shift without access to the source data. Its real-time performance and high accuracy on a new sEMG dataset make it relevant to current work in human-computer interaction.

Visual Insights#

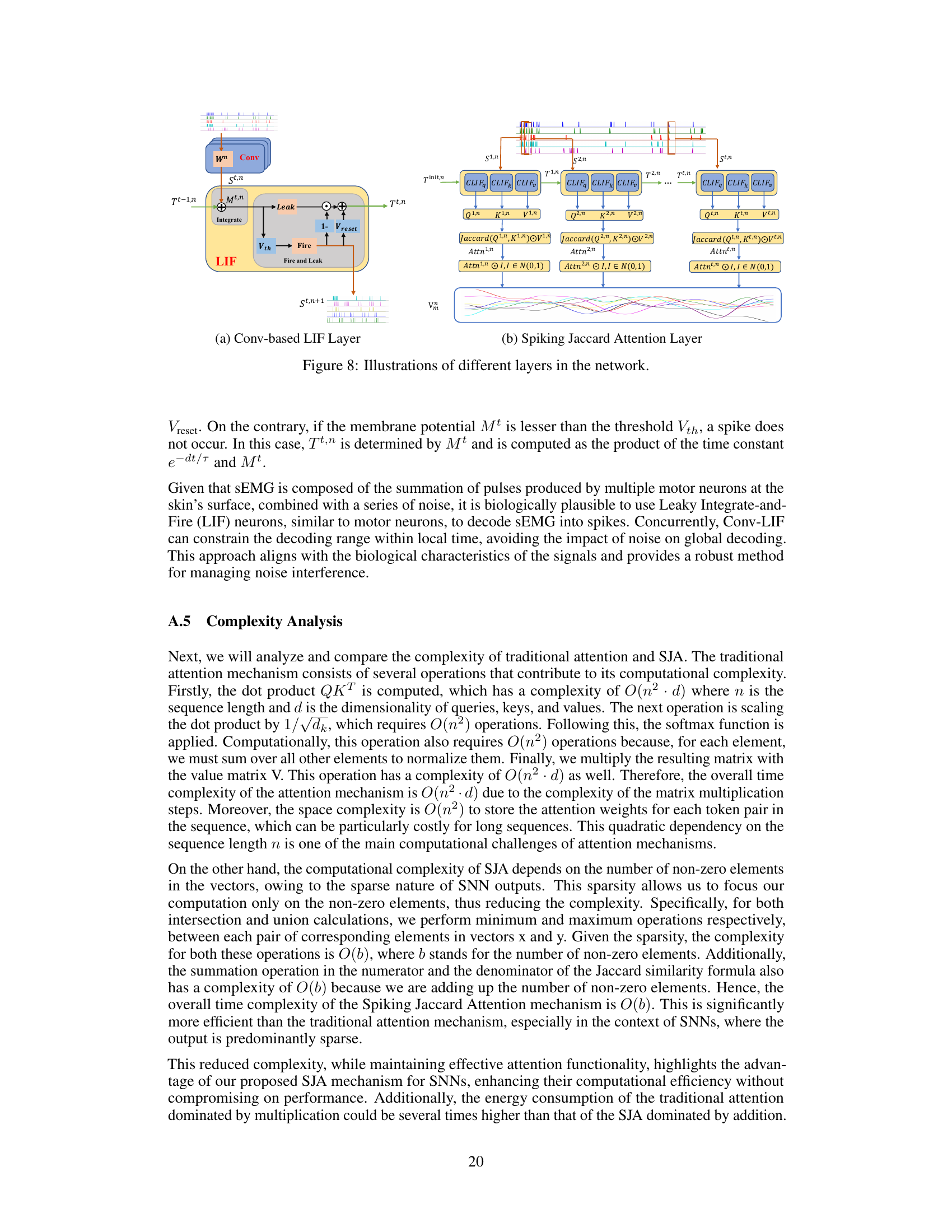

🔼 This figure illustrates the architecture of the proposed Spiking Jaccard Attention Neural Network. Raw surface electromyography (sEMG) data is initially converted into spike trains using a convolutional leaky integrate-and-fire (ConvLIF) layer. These spike trains then pass through multiple ConvLIF layers with varying numbers of channels (N and 2N) to extract features. The extracted features then undergo a Spiking Jaccard Attention mechanism before classification by a multi-layer perceptron (MLP) and finally a LIF classifier layer. A membrane potential memory component is also shown, highlighting a key element of the Source-Free Domain Adaptation strategy.

Figure 1: The pipeline of Jaccard Attention Spike Neural Network: Raw sEMG Data is first encoded into Spike Signals using ConvLIF. These signals pass through ConvLIF layers with N and 2N channels. The processed data then goes through the Spiking Jaccard Attention mechanism.
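The spike-encoding step in Figure 1 relies on leaky integrate-and-fire (LIF) dynamics. The following is a minimal sketch of a single LIF neuron turning a real-valued signal into a binary spike train. It omits the convolution that a ConvLIF layer would apply first, and the `threshold`, `decay`, and hard-reset choices are illustrative assumptions, not values taken from the paper.

```python
def lif_encode(signal, threshold=1.0, decay=0.9):
    """Convert real-valued inputs into a binary spike train with one LIF neuron.

    threshold and decay are hypothetical values chosen for illustration.
    """
    membrane = 0.0
    spikes = []
    for x in signal:
        # Leak the previous potential, then integrate the new input.
        membrane = decay * membrane + x
        if membrane >= threshold:
            spikes.append(1)
            membrane = 0.0  # hard reset after a spike (an assumption)
        else:
            spikes.append(0)
    return spikes


spike_train = lif_encode([0.6, 0.6, 0.1, 0.0, 1.2])
print(spike_train)
```

Weak inputs accumulate over several steps before the neuron fires, while a single strong input fires immediately, which is why the output stays sparse.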

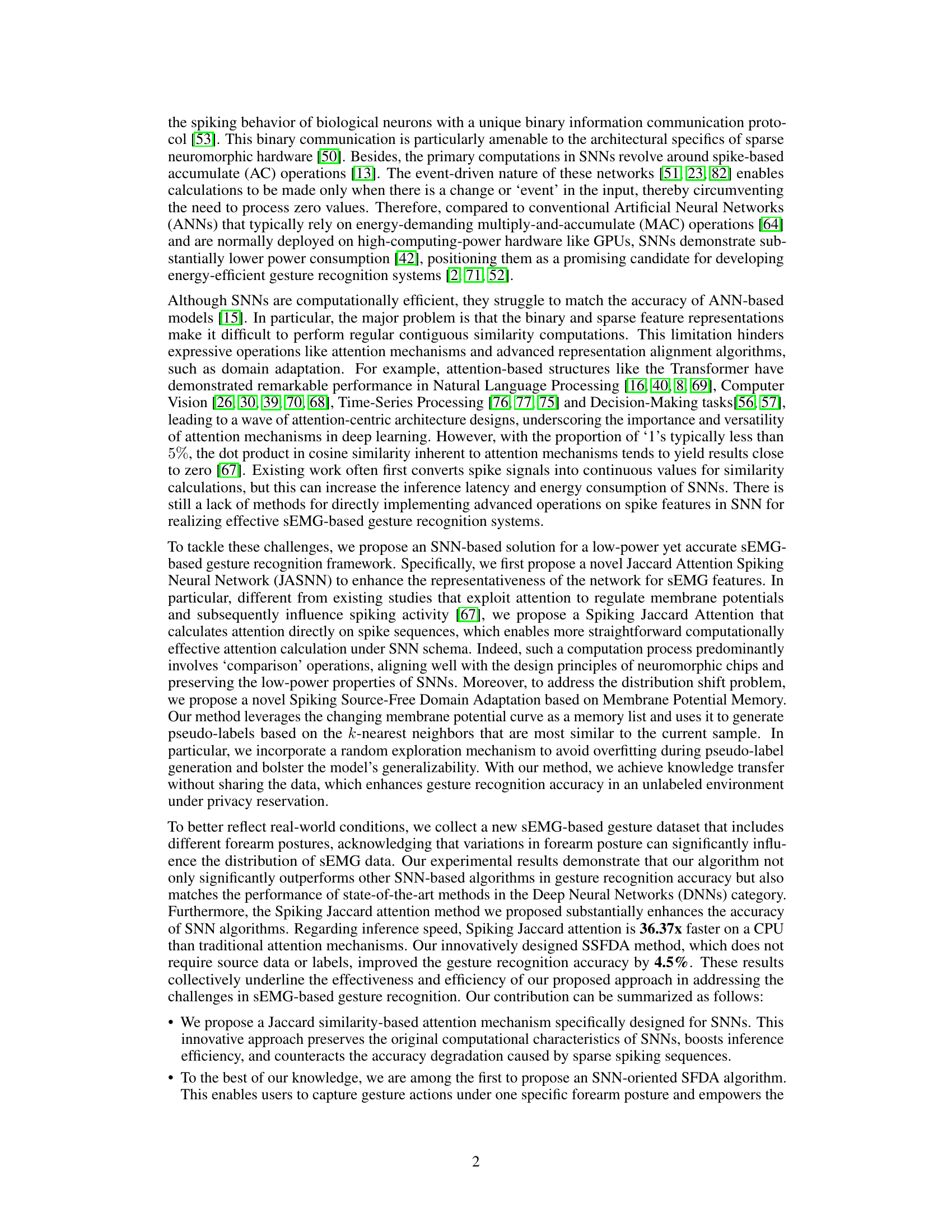

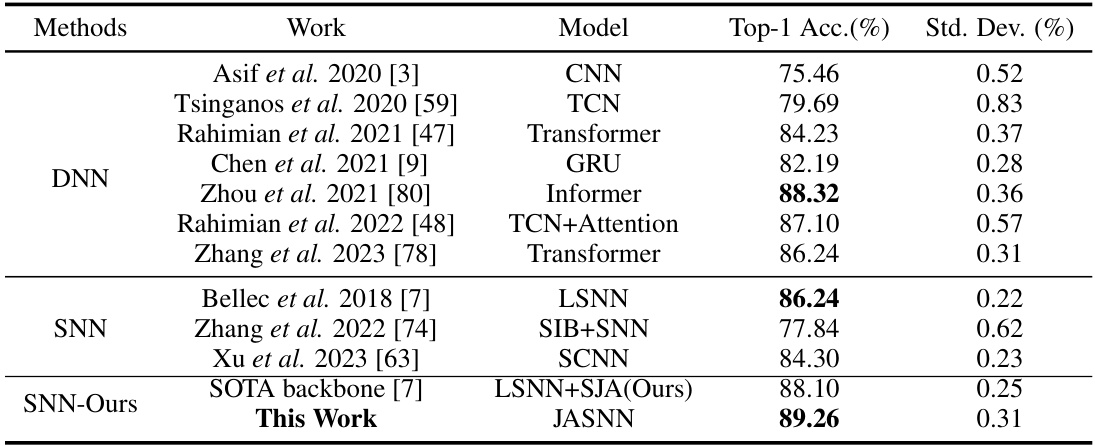

🔼 This table compares the performance of the proposed SpGesture method against various state-of-the-art methods for sEMG-based gesture recognition. It shows the top-1 accuracy and standard deviation achieved by different models, including Convolutional Neural Networks (CNNs), Temporal Convolutional Networks (TCNs), Transformers, GRUs, and Informers, as well as various Spiking Neural Networks (SNNs). The table highlights SpGesture’s superior performance compared to other DNN and SNN baselines.

Table 1: Comparison with previous works on sEMG-based gesture estimation.

In-depth insights#

sEMG Gesture recog#

Surface electromyography (sEMG)-based gesture recognition uses electrical signals from muscles to control devices. sEMG signals offer a natural and intuitive interaction modality, but challenges remain. Existing methods often suffer from high computational latency and energy consumption. In addition, sEMG signals are inherently unstable and sensitive to distribution shifts, which hurts model robustness. New approaches are exploring Spiking Neural Networks (SNNs) because of their energy efficiency and potential for real-time processing. However, SNNs still struggle to match the accuracy of traditional Artificial Neural Networks (ANNs). Current research therefore focuses on improving SNN performance through techniques such as attention mechanisms and domain adaptation, with the goal of robust and efficient sEMG-based gesture recognition systems suitable for real-world applications. The field continues to seek algorithms and hardware implementations that improve accuracy while reducing latency and energy use.

Spiking Jaccard Attn#

The proposed Spiking Jaccard Attention mechanism offers a novel approach to attention in Spiking Neural Networks (SNNs). Traditional attention mechanisms struggle with the sparsity inherent in SNNs, often requiring conversion to continuous representations which negates the energy efficiency benefits of SNNs. Spiking Jaccard Attention directly computes attention using the Jaccard similarity measure on spike trains, avoiding this costly conversion. This not only preserves the energy efficiency of SNNs but also aligns well with the binary operations found in neuromorphic hardware. The Jaccard similarity is particularly well-suited for binary data like spike trains. Furthermore, it offers improved computational efficiency compared to traditional attention methods because it leverages the sparsity of spike trains, directly calculating similarity between pairs of spike sequences. The use of a querying mechanism further refines the focus on relevant temporal aspects of the data. Overall, the Spiking Jaccard Attention represents a significant advancement in applying attention mechanisms effectively to SNNs, enhancing accuracy and maintaining the energy efficiency that is a primary advantage of this type of neural network.
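For two binary spike trains, the Jaccard similarity is the number of time steps where both fire divided by the number of time steps where at least one fires. The sketch below shows only that similarity computation on plain Python lists. It is not the paper's full attention module, and treating two all-zero trains as having similarity 0 is an assumption made here to avoid division by zero.

```python
def jaccard(a, b):
    """Jaccard similarity between two equal-length binary spike trains."""
    # Bitwise AND counts steps where both trains spike.
    intersection = sum(x & y for x, y in zip(a, b))
    # Bitwise OR counts steps where at least one train spikes.
    union = sum(x | y for x, y in zip(a, b))
    # Assumption: two silent trains are given similarity 0.
    return intersection / union if union else 0.0


query = [1, 0, 1, 1, 0]
keys = [[1, 0, 0, 1, 0], [0, 1, 0, 0, 1]]
scores = [jaccard(query, key) for key in keys]
print(scores)
```

Because the computation uses only AND, OR, and counting, it never leaves the binary domain, which is the property the paragraph above credits for preserving SNN efficiency.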

SFDA in SNNs#

The application of Source-Free Domain Adaptation (SFDA) to Spiking Neural Networks (SNNs) presents a unique challenge and opportunity. SFDA’s core strength is adapting a model to a new domain without access to the original source data, crucial for privacy-sensitive applications. However, SNNs, with their binary and sparse nature, pose difficulties for traditional SFDA methods which often rely on continuous representations and complex similarity calculations. This paper innovatively tackles this by using membrane potential as a memory list for pseudo-labeling, enabling knowledge transfer without source data sharing, and introducing a novel Spiking Jaccard Attention mechanism. This attention mechanism is designed to work effectively with the sparse nature of SNNs, enhancing feature representation and significantly improving accuracy. The combined approach of Spiking Jaccard Attention and the memory-based SFDA is particularly effective in scenarios with distribution shifts, such as those caused by varying forearm postures in sEMG gesture recognition. The effectiveness is demonstrated by achieving state-of-the-art accuracy, showcasing the potential of this approach to improve the practicality and robustness of SNNs for real-world applications.

Forearm Posture Var#

Forearm posture changes muscle activation patterns, so the sEMG recorded for the same gesture shifts in distribution from one posture to another. A model trained on a single posture can therefore lose accuracy when the arm is held differently. The paper's dataset addresses this by recording ten gestures in three forearm postures (horizontal, diagonal, and elevated). The cross-posture experiments train a model on posture P1 and test it on postures P2 and P3, comparing accuracy before and after Spiking Source-Free Domain Adaptation (SSFDA). These results indicate that posture variability should be part of both data collection and evaluation when building sEMG-based gesture recognition systems intended for real-world use.

Future Research#

Future research directions stemming from this work could explore extending the Spiking Source-Free Domain Adaptation (SSFDA) method to handle diverse distribution shifts beyond forearm postures. Investigating the robustness of the SSFDA and Spiking Jaccard Attention (SJA) across a broader range of SNN architectures would further validate their generalizability and highlight potential limitations. Evaluating performance on neuromorphic hardware is crucial for realizing the energy efficiency benefits of SNNs. Furthermore, exploring alternative attention mechanisms and incorporating other advanced techniques from deep learning could potentially enhance accuracy and robustness. Finally, expanding the dataset to include a wider variety of gestures and subjects, alongside a more comprehensive analysis of the impact of noise and electrode placement, would make the model more practical and reliable for real-world applications.

More visual insights#

More on figures

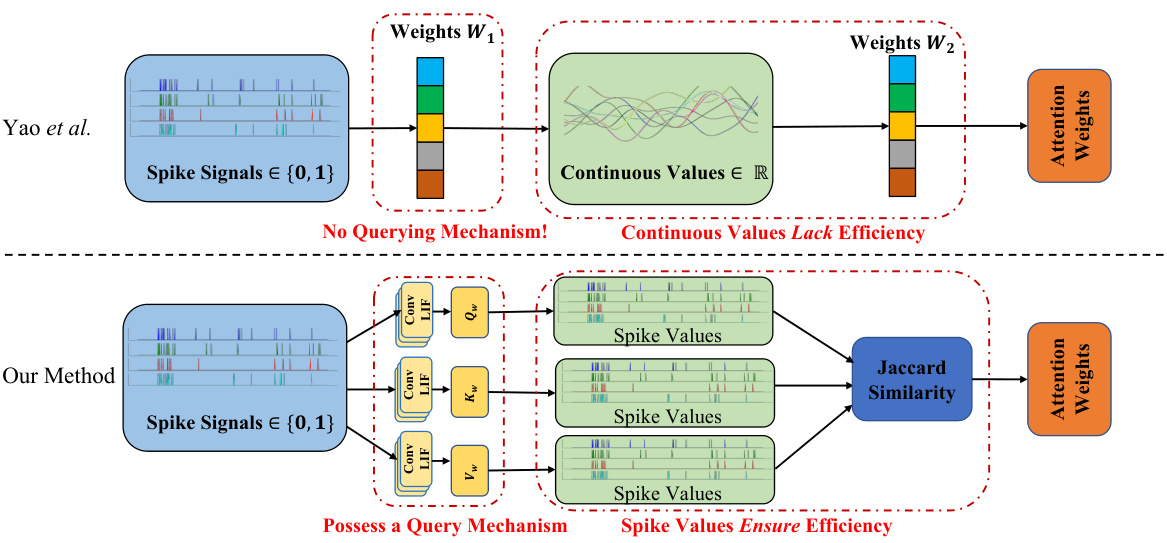

🔼 This figure compares the proposed Spiking Jaccard Attention (SJA) module with the existing MA-SNN attention module. MA-SNN uses fully connected layers and pooling, resulting in continuous intermediate values and reduced efficiency. In contrast, SJA utilizes spike values, which improves efficiency and accuracy by enabling direct computation of Jaccard similarity on the sparse spike trains.

Figure 2: Comparison of MA-SNN and Spiking Jaccard Attention Modules. MA-SNN [67] uses fully connected layers with pooling but lacks a querying mechanism, leading to continuous intermediate values and lower efficiency. Our Spiking Jaccard Attention uses spike values for intermediate representations, enhancing efficiency and accuracy.

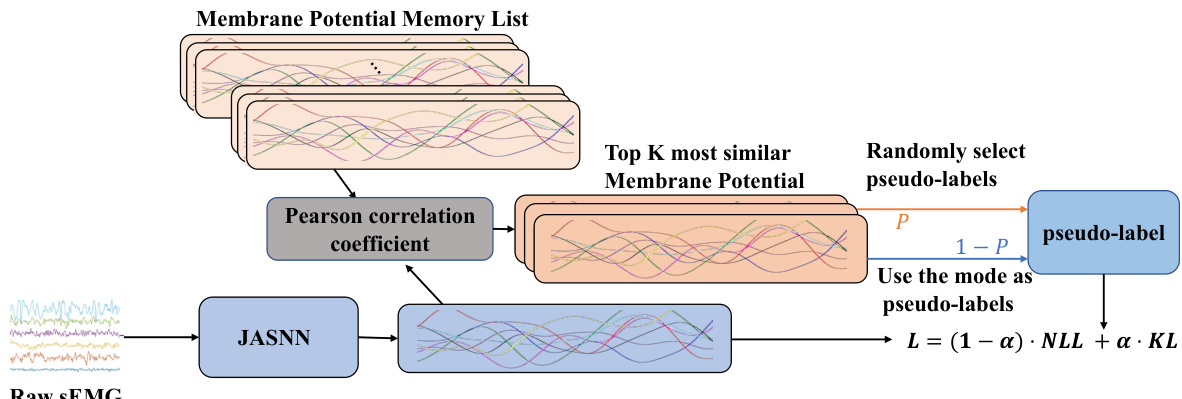

🔼 This figure illustrates the Spiking Source-Free Domain Adaptation (SSFDA) process. Raw sEMG data is fed into the JASNN model. The model’s membrane potential is recorded and stored in a ‘Membrane Potential Memory List’. For each new unlabeled sample, the k-nearest neighbors from this memory are found using Pearson correlation. A probabilistic approach generates pseudo-labels based on these neighbors (using the mode or random selection). The model is then trained using a loss function combining Smooth Negative Log-Likelihood (SNLL) and Kullback-Leibler (KL) divergence. The memory list is updated after each training epoch.

Figure 3: Computation flow of Spiking Source-Free Domain Adaptation. The process starts with selecting the k-nearest samples from the membrane potential memory using the Pearson correlation coefficient. Probabilistic Label Generation then produces pseudo-labels based on these k samples. Gradients are computed with Smooth NLL and KL divergence loss. The membrane potential memory list is updated at each epoch's end.
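The pseudo-labeling step in Figure 3 can be sketched as follows: rank the stored membrane potential vectors by Pearson correlation with the new sample, keep the top k, then return either the most common neighbor label or a randomly chosen one. The function names, the `(vector, label)` memory layout, and the `k` and `p_mode` parameters are hypothetical. The summary does not state the probabilities the paper uses, so this is an illustration under those assumptions rather than the authors' implementation.

```python
import math
import random
from collections import Counter


def pearson(u, v):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    std_u = math.sqrt(sum((a - mean_u) ** 2 for a in u))
    std_v = math.sqrt(sum((b - mean_v) ** 2 for b in v))
    # Assumption: a constant vector is treated as uncorrelated.
    return cov / (std_u * std_v) if std_u and std_v else 0.0


def pseudo_label(query, memory, k=3, p_mode=0.8, rng=random):
    """Pick a pseudo-label from the k most correlated memory entries.

    memory is a list of (membrane_potential_vector, label) pairs.
    With probability p_mode the most common neighbor label is returned,
    otherwise a label is drawn at random from the k neighbors.
    """
    ranked = sorted(memory, key=lambda item: pearson(query, item[0]), reverse=True)
    labels = [label for _, label in ranked[:k]]
    if rng.random() < p_mode:
        return Counter(labels).most_common(1)[0][0]
    return rng.choice(labels)


memory = [([1, 2, 3], 0), ([2, 4, 6.5], 0), ([3, 2, 1], 1), ([1, 3, 2], 1)]
print(pseudo_label([1, 2, 3.1], memory, k=3, p_mode=1.0))
```

Setting `p_mode=1.0` reduces the procedure to plain majority voting, which makes the behavior deterministic for inspection.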

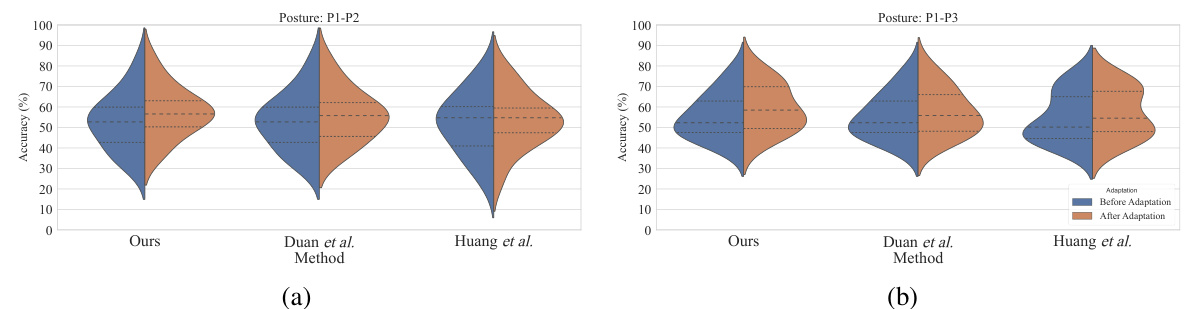

🔼 This figure uses violin plots to show the accuracy of three methods (Ours, Duan et al., Huang et al.) before and after applying Spiking Source-Free Domain Adaptation (SSFDA) in two scenarios. In both, the model is trained on forearm posture P1; it is then tested on posture P2 (4a) or posture P3 (4b). The results illustrate the impact of SSFDA in improving robustness and generalizability across different forearm postures.

Figure 4: Comparison of performance before and after applying SSFDA for various methodologies: Figures 4a and 4b are Violin Plots demonstrating this disparity.

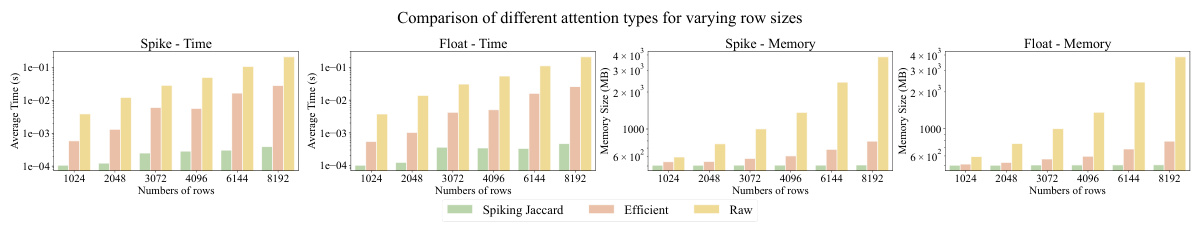

🔼 This figure compares the inference speed and RAM usage of three different attention mechanisms (Raw Attention, Efficient Attention, and Spiking Jaccard Attention) using both spike and float data types. The results demonstrate that the Spiking Jaccard Attention method is significantly more efficient than the other two methods in terms of both speed and memory usage, especially as the amount of data increases.

Figure 5: Inference speed and RAM usage comparison between spike and float data for Raw Attention [60], Efficient Attention [54], and our Spiking Jaccard Attention: The first column shows inference time for float data, and the second for spike data. The third and fourth columns show RAM usage for these data types. The x-axis represents different data row counts, and the y-axis is logarithmic to highlight performance differences. Each experiment was conducted 100 times, with averaged results.

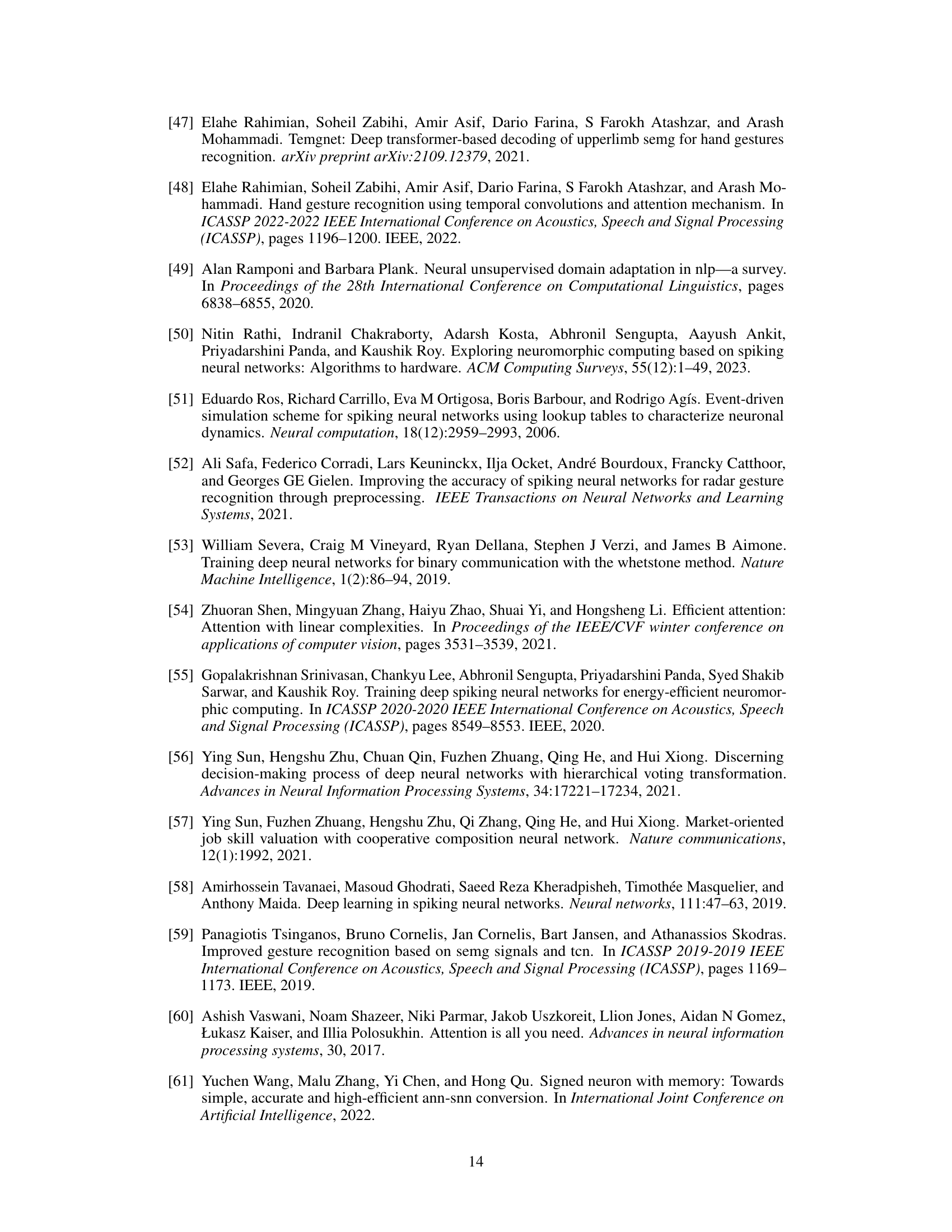

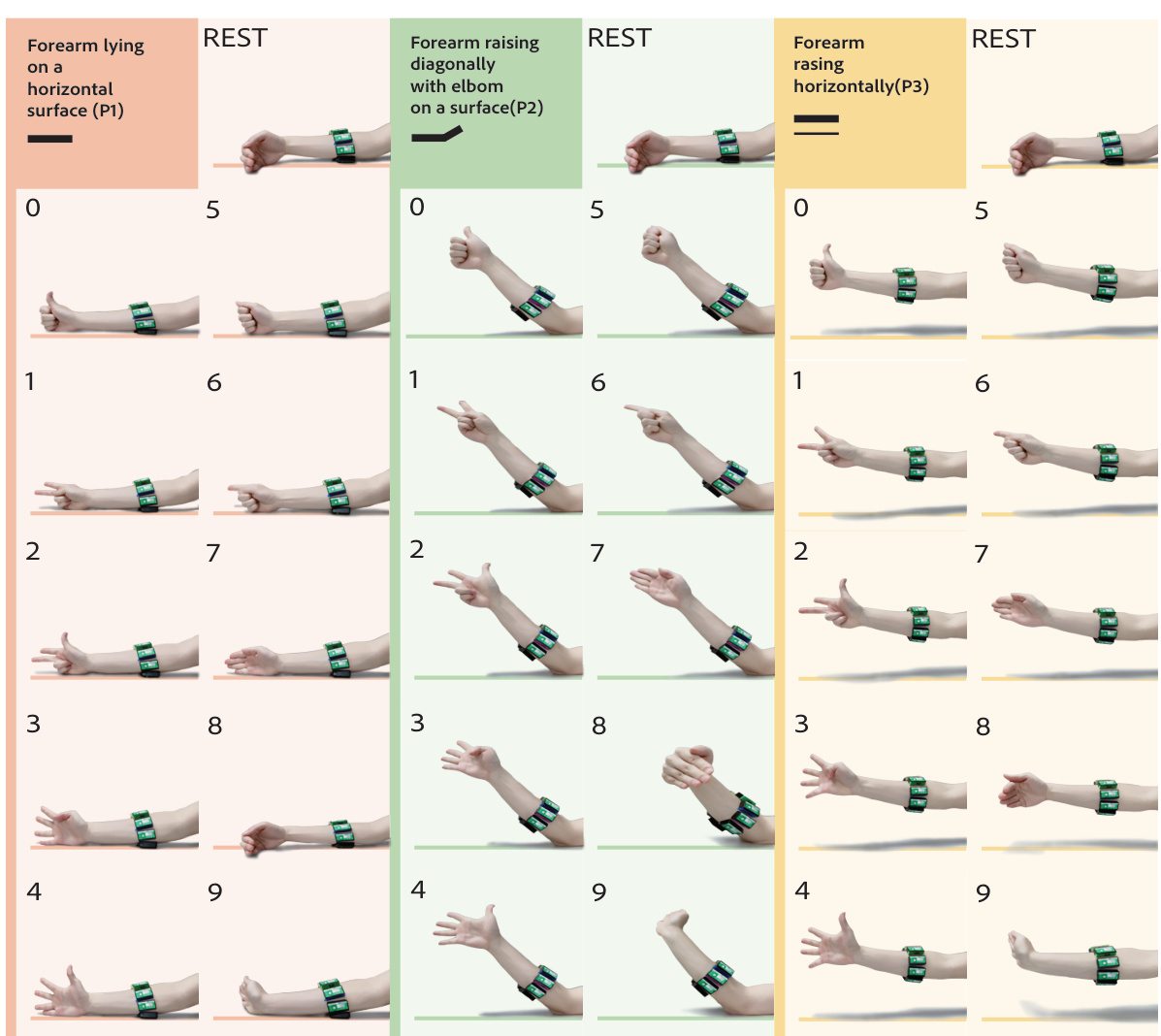

🔼 This figure shows an overview of the dataset used in the SpGesture research. It displays ten different hand gestures performed across three different forearm postures (horizontal, diagonal, and elevated). Each gesture is represented by a number (0-9), and each forearm posture has a unique color-coded background. The ‘REST’ column shows images of the hand in a relaxed state before and after performing each gesture.

Figure 6: Overview of our dataset: the compilation contains sEMG data for ten distinct actions, each across three postures. Varied background colors represent distinct forearm postures, while the digits ranging from 0 to 9 correspond to specific gesture actions. The 'Rest' label at the top denotes a static hand gesture when no action is being performed.

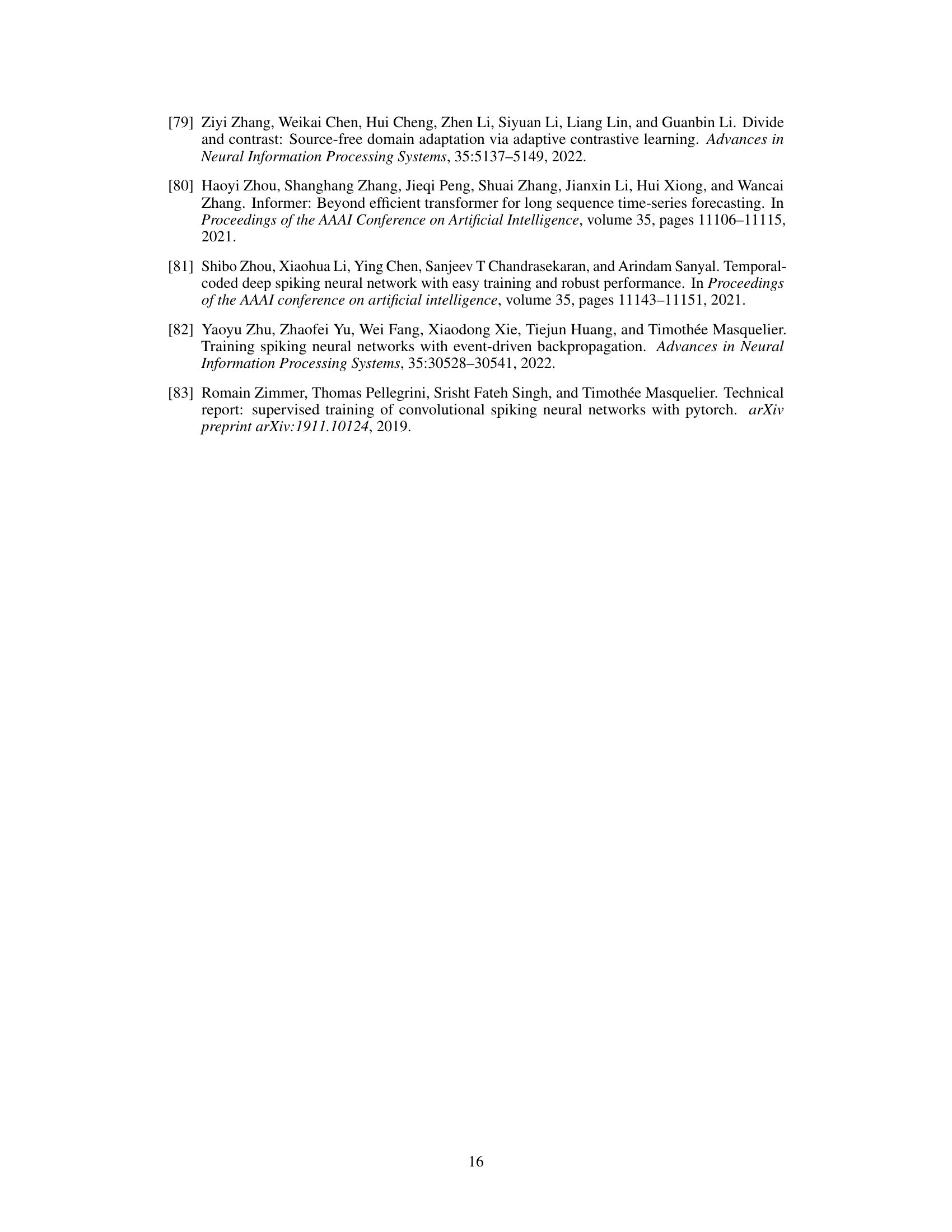

🔼 This figure summarizes the collected sEMG data. The left side shows the three forearm postures, the middle shows raw sEMG data from one subject in each posture, and the right shows the RMS features extracted from that data.

Figure 7: Summary of our collected data: The three postures on the left illustrate distinct preparatory arm actions. The central data graph represents the sEMG data captured from a subject under the three postures, with unique colors assigned to different channels. The data graph on the right showcases the acquired data after Root Mean Square (RMS) processing.
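The Root Mean Square (RMS) processing mentioned in Figure 7 can be illustrated with a sliding-window computation over one channel. This is a minimal sketch: the `window` and `step` sizes are hypothetical, since the summary does not state the values used for the dataset.

```python
import math


def rms_windows(samples, window, step):
    """Return the RMS of each full sliding window over a 1-D signal.

    window and step are counted in samples; both are illustrative choices.
    """
    features = []
    for start in range(0, len(samples) - window + 1, step):
        chunk = samples[start:start + window]
        # Square, average, then take the root.
        features.append(math.sqrt(sum(x * x for x in chunk) / window))
    return features


print(rms_windows([3, -3, 3, -3], window=2, step=2))
```

RMS discards the sign of the raw signal and tracks its amplitude envelope, which is why the processed traces in the figure look smoother than the raw ones.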

🔼 Figure 1 illustrates the architecture of the proposed Jaccard Attentive Spiking Neural Network (JASNN). Raw surface electromyography (sEMG) data is first converted into spike signals by a convolutional leaky integrate-and-fire (ConvLIF) encoder. These spike signals then pass through multiple ConvLIF layers with N and 2N channels, which extract features and increase dimensionality. Finally, a Spiking Jaccard Attention mechanism focuses on the most relevant features before classification.

🔼 Figure 4 compares model performance before and after applying Spiking Source-Free Domain Adaptation (SSFDA) for three different methodologies. The violin plots show the distribution of accuracy across subjects in two scenarios: (a) a model trained on posture 1 and tested on posture 2, and (b) a model trained on posture 1 and tested on posture 3. The results demonstrate the effectiveness of SSFDA in improving model robustness by reducing the performance gap between different forearm postures. The proposed method shows significantly improved accuracy and reduced variance compared to the other methods.

🔼 This figure compares the performance of three different pseudo-label generation methods (Ours, Duan et al., and Huang et al.) for Spiking Source-Free Domain Adaptation (SSFDA) before and after applying the adaptation technique. Violin plots are used to show the distribution of accuracy across 14 subjects for two scenarios: when transferring models trained on forearm posture P1 to postures P2 (Figure 4a) and P3 (Figure 4b). The plots demonstrate that the proposed method (Ours) shows a significant improvement in accuracy and reduced variability across subjects compared to the other methods, after applying SSFDA.

Full paper#