↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world problems involve dynamically changing reward structures, such as online advertising or dynamic pricing. The ‘bandits with knapsacks’ (BwK) problem models such scenarios, but existing solutions often assume stationary reward distributions or impose strict constraints on how the rewards can change over time. These assumptions limit the applicability of these methods to real-world problems, where reward structures often shift gradually or in sudden changes. This paper tackles these issues by considering piecewise-stationary environments where the reward structure changes between periods of stability.

The authors introduce a novel algorithm called IRES-CM that cleverly reserves resources based on an estimated reward-consumption ratio. This approach cleverly balances exploration and exploitation across different phases of the reward distribution. The key contribution is a theoretical guarantee showing that this algorithm achieves a near-optimal competitive ratio (a measure of performance relative to an ideal algorithm) that depends only on the ratio between the minimum and maximum reward earned per unit resource, without strong assumptions on how often the distribution changes. This result significantly advances the understanding and handling of non-stationary BwK problems.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on bandit algorithms and online resource allocation because it introduces a novel algorithm for handling piecewise-stationary environments. It addresses the limitations of existing methods by providing a provably near-optimal competitive ratio without requiring strong assumptions about the non-stationarity, opening up new avenues for research in dynamic environments.

Visual Insights#

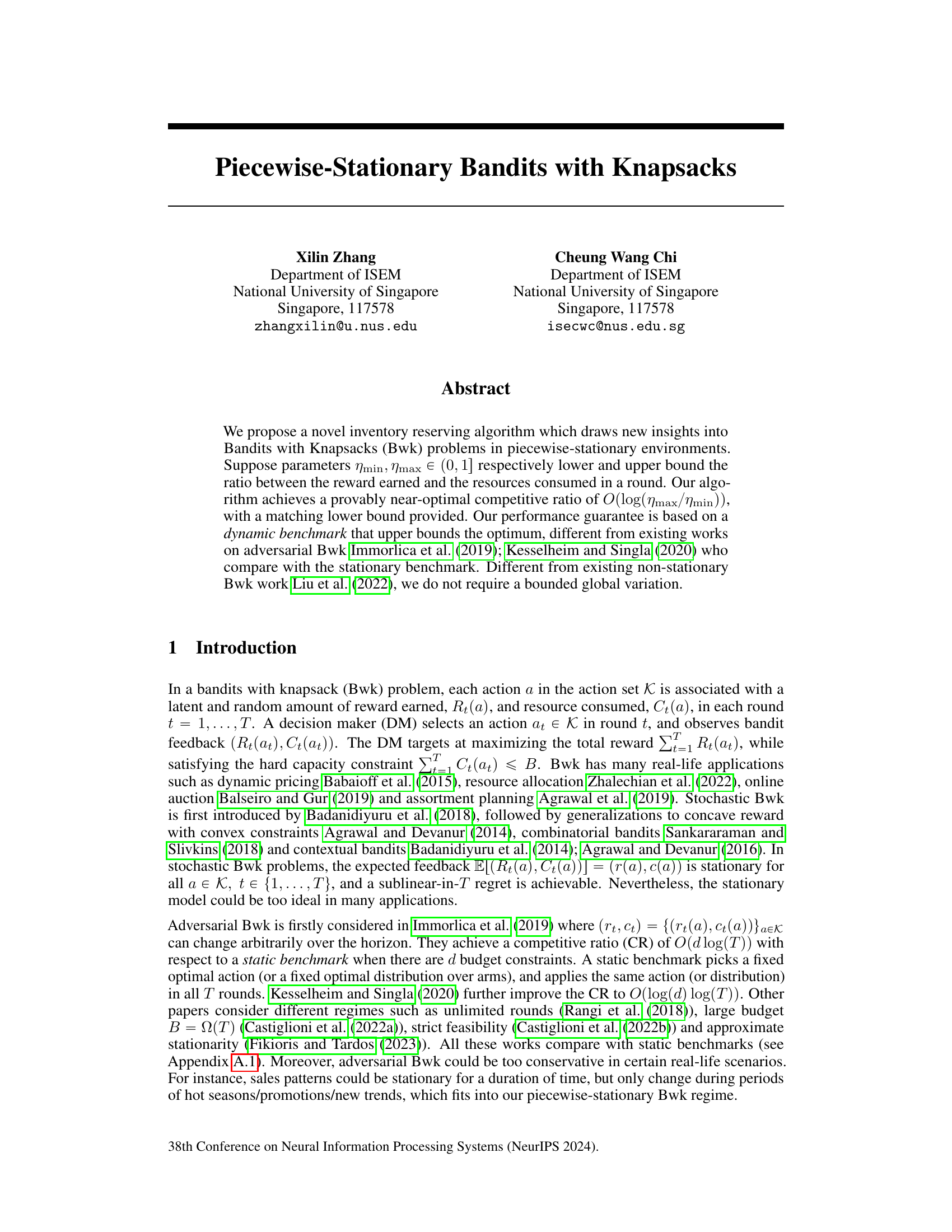

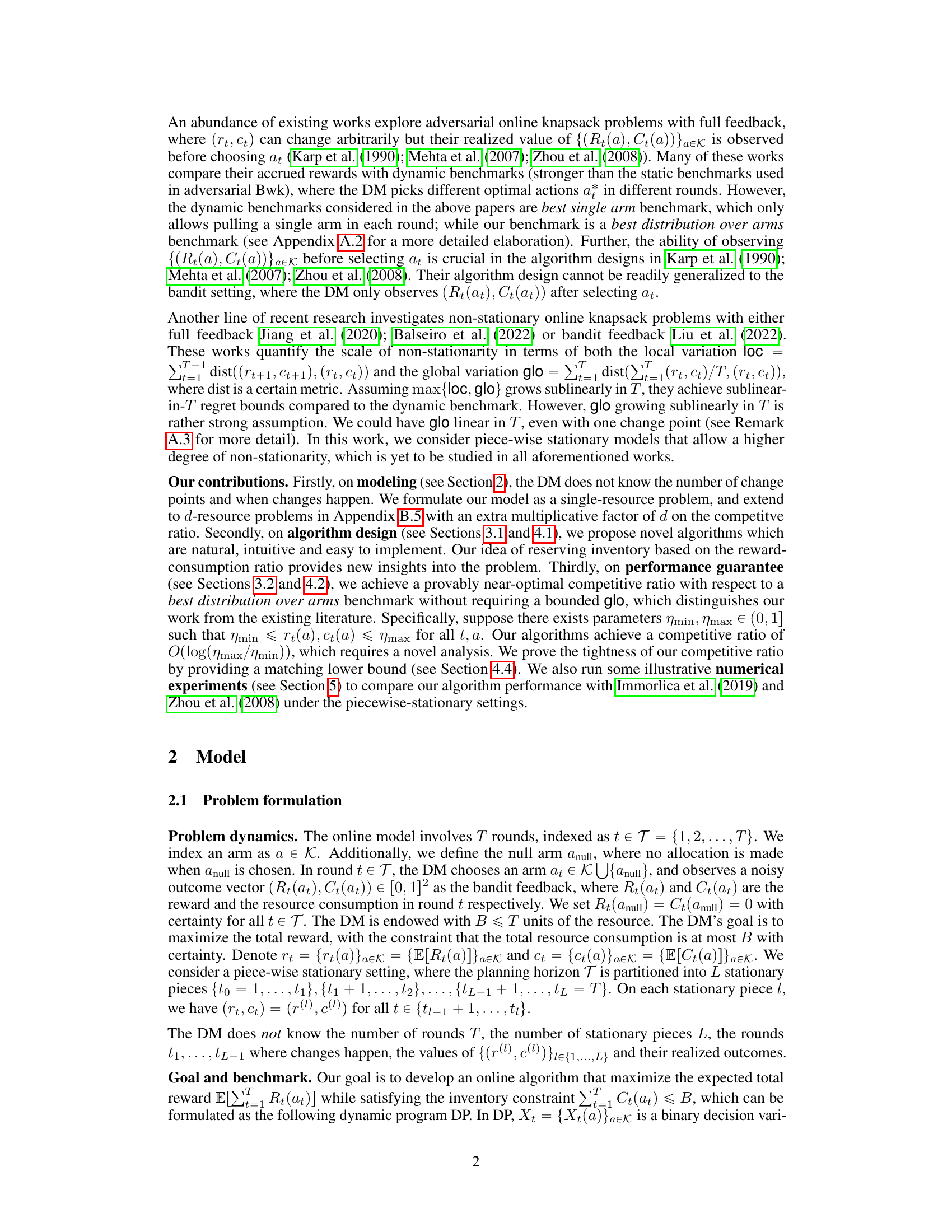

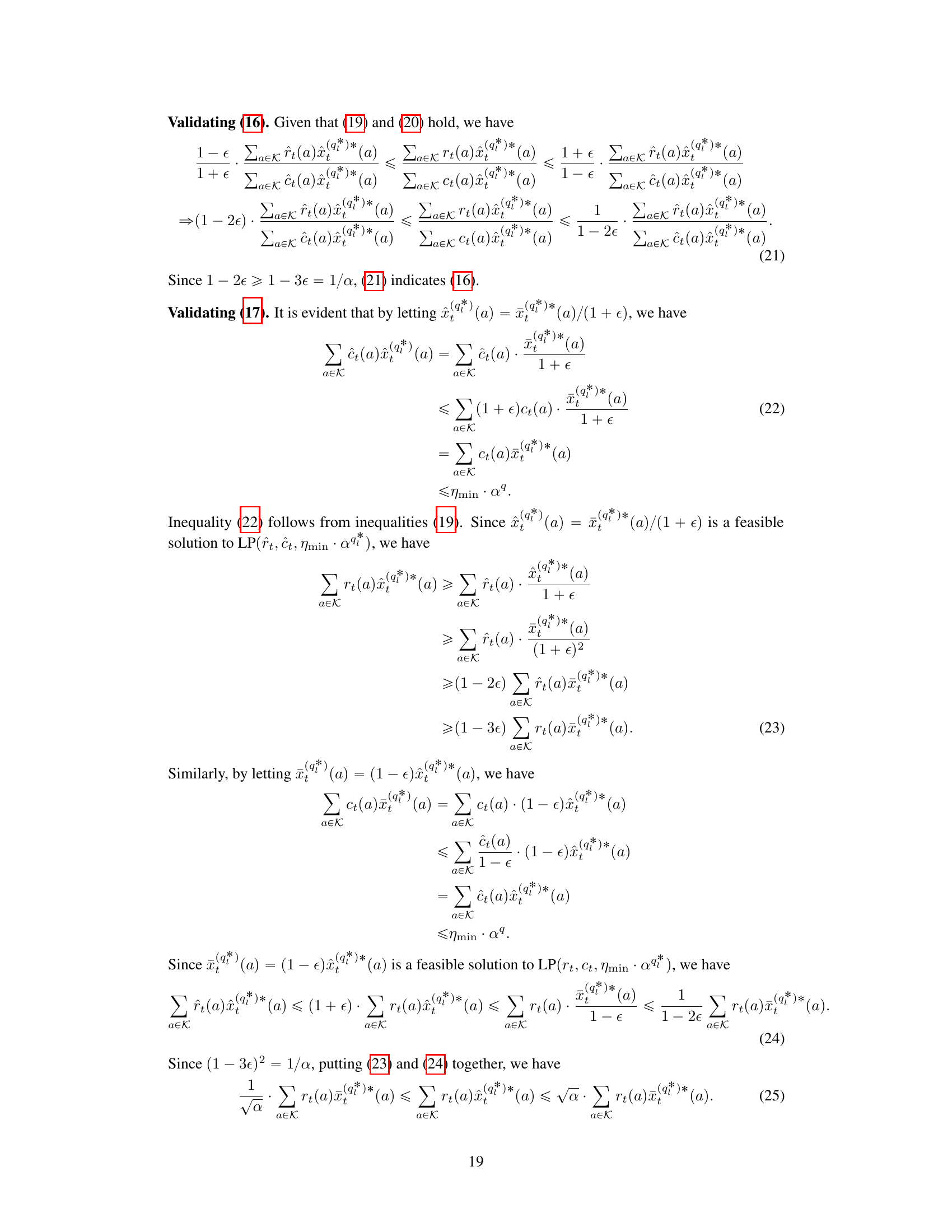

This figure compares the performance of three algorithms (IRES-CM, Immorlica et al. 2019, and Zhou et al. 2008) on a piecewise-stationary bandit with knapsack problem. Two scenarios are shown: one where the optimal solution uses a single arm, and one where it uses a mixture of arms. Each line represents the average cumulative reward over 10 simulations, with shaded areas indicating the variance. The dotted lines represent the linear program (LP) benchmark (FA). The results show that IRES-CM generally outperforms the other two algorithms, particularly in the mixed arms scenario, suggesting its effectiveness in this more complex setting.

Full paper#