TL;DR#

Online convex optimization (OCO) aims to minimize an algorithm’s cumulative loss compared to a benchmark. Dynamic regret, a more challenging problem, considers a sequence of benchmarks. Current research lacks a unifying framework for analyzing and designing dynamic regret algorithms; existing algorithms often lead to pessimistic bounds. This paper addresses these shortcomings.

The paper introduces a novel reduction that shows dynamic regret minimization is equivalent to static regret in a higher-dimensional space. This equivalence is exploited to create a framework for obtaining improved guarantees and to prove the impossibility of certain types of regret guarantees. The framework provides a clear way to quantify and reason about the trade-offs between loss variance and comparator sequence variability, and thus leads to a new algorithm with improved guarantees.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in online convex optimization because it establishes an equivalence between static and dynamic regret minimization, providing a novel framework to design and analyze algorithms. This simplifies the study of dynamic regret and opens avenues for developing algorithms with improved guarantees by leveraging existing results in static regret. The research also highlights fundamental trade-offs between penalties due to comparator variability and loss variance, which informs future research directions.

Visual Insights#

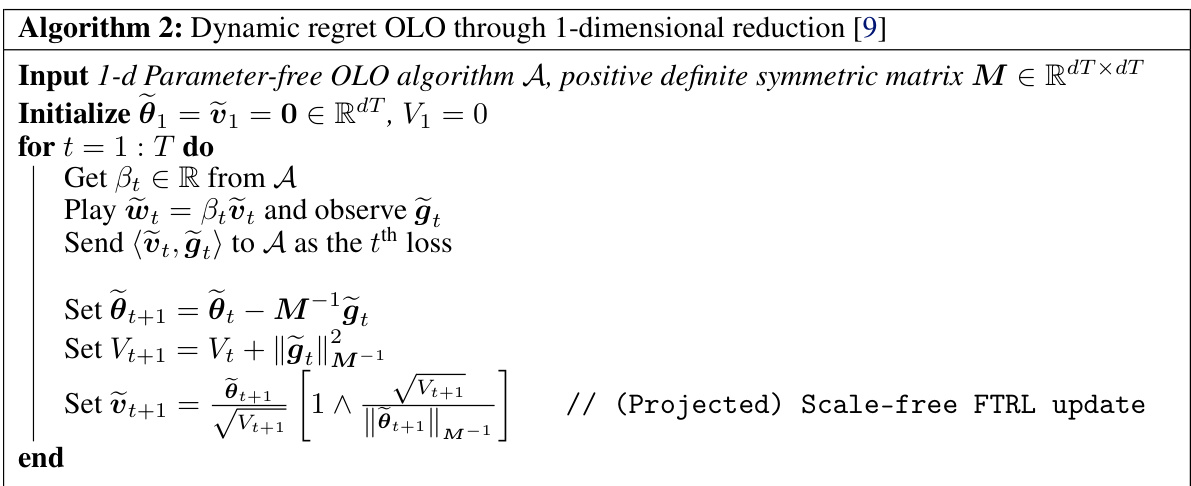

🔼 This algorithm uses a 1-dimensional parameter-free online learning algorithm and embeds the comparator sequence into a higher-dimensional space to achieve dynamic regret guarantees. It iteratively updates its prediction by considering the current loss, a positive definite symmetric matrix M, and a scale-free FTRL update. The algorithm dynamically adjusts its step size and prediction based on the accumulated loss and variance.

read the caption

Algorithm 2: Dynamic regret OLO through 1-dimensional reduction [9]

Full paper#