↗ OpenReview ↗ NeurIPS Homepage ↗ Hugging Face ↗ Chat

TL;DR#

Current HDR novel view synthesis (NVS) methods struggle with slow inference and long training times, limiting real-time applications. Existing approaches, primarily based on Neural Radiance Fields (NeRF), are computationally expensive due to their ray-tracing process. The high computational cost hinders their use in applications demanding real-time performance, such as AR/VR, gaming, and filmmaking. This necessitates the development of more efficient HDR NVS methods capable of generating high-quality HDR images rapidly.

To tackle these challenges, the researchers introduce HDR-GS (High Dynamic Range Gaussian Splatting), a novel framework for HDR NVS. HDR-GS leverages the speed advantages of Gaussian splatting while employing a novel Dual Dynamic Range (DDR) Gaussian point cloud model. This model uses spherical harmonics to represent HDR colors and an MLP-based tone-mapper for LDR color rendering. The HDR and LDR outputs are fed into Parallel Differentiable Rasterization (PDR) processes to construct HDR and LDR images. Experiments demonstrate that HDR-GS surpasses existing techniques with a 1000x speed improvement in inference while maintaining comparable, or even better, image quality. This makes HDR-GS a significant advancement in the field of HDR NVS.

Key Takeaways#

Why does it matter?#

This paper is crucial because it significantly advances high dynamic range (HDR) novel view synthesis (NVS), a field currently limited by slow speeds and lengthy training times. By achieving a 1000x speedup in inference while maintaining high image quality, HDR-GS opens new possibilities for real-time applications in AR/VR, gaming, and film. Its innovative approach using Gaussian splatting offers a promising alternative to traditional NeRF-based methods, sparking further research into efficient HDR rendering techniques.

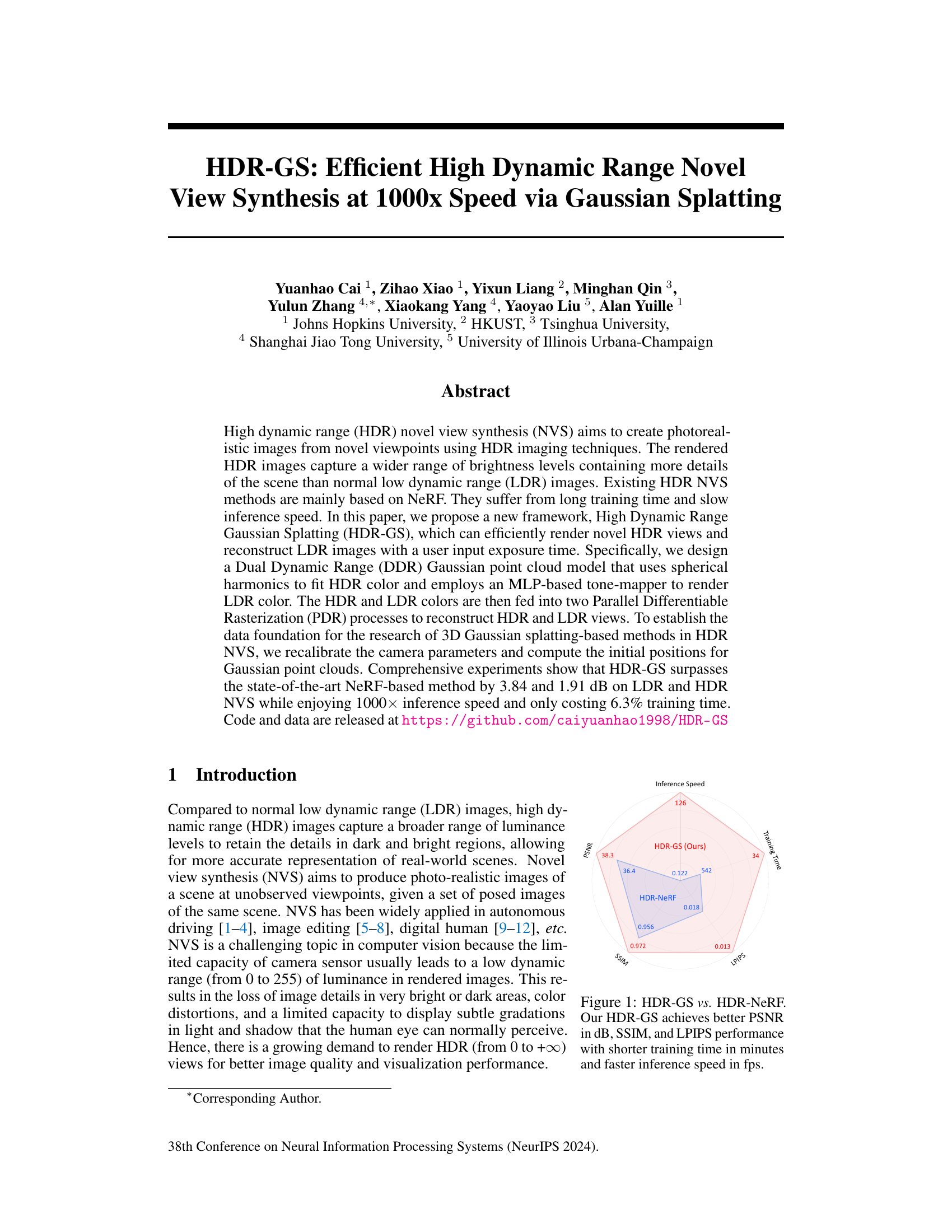

Visual Insights#

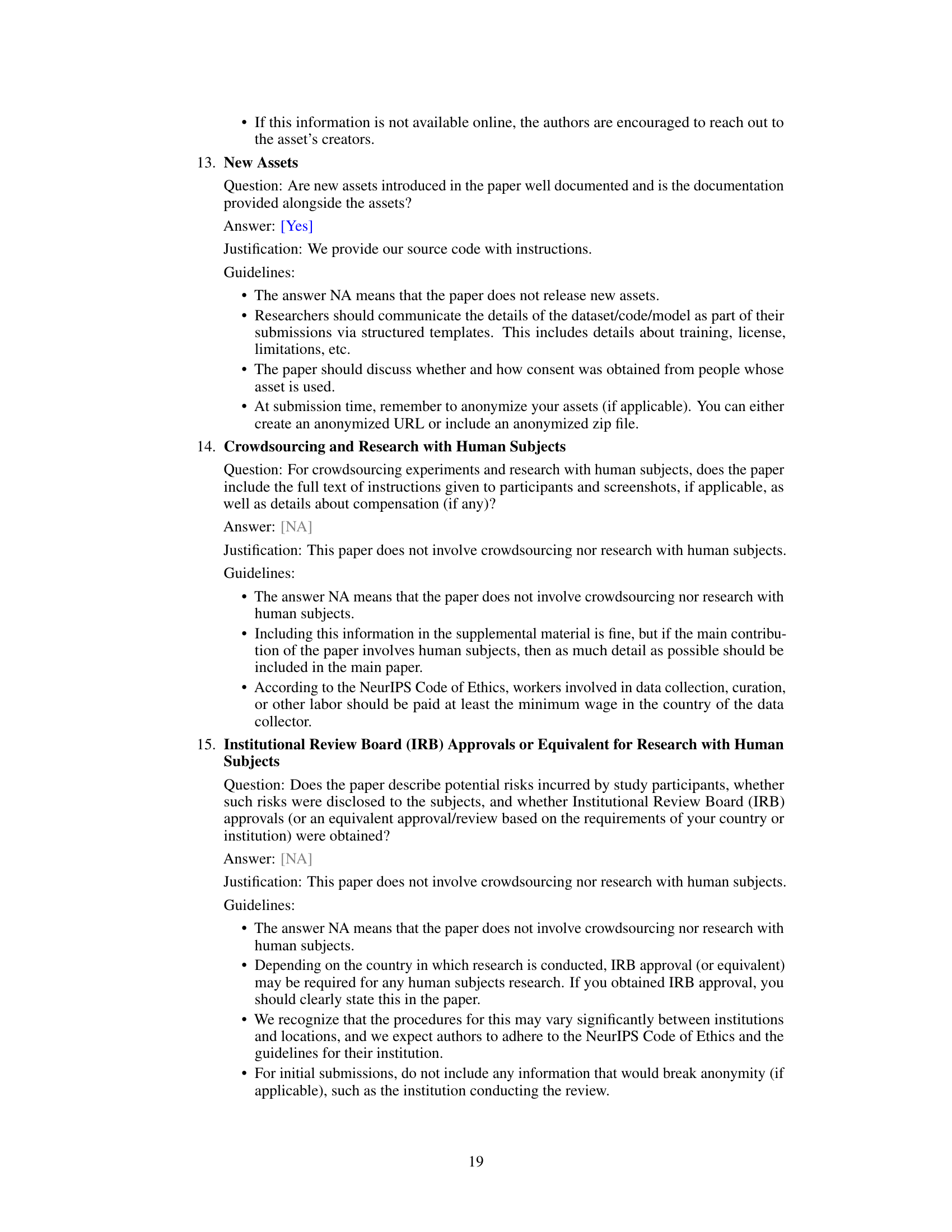

This figure compares the performance of HDR-GS and HDR-NeRF across multiple metrics: PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index), LPIPS (Learned Perceptual Image Patch Similarity), training time, and inference speed. HDR-GS shows significant improvements in all metrics compared to HDR-NeRF. Specifically, HDR-GS achieves a higher PSNR, SSIM, and lower LPIPS, indicating better image quality. Furthermore, HDR-GS boasts much faster inference speed (frames per second) and shorter training time (minutes), making it significantly more efficient.

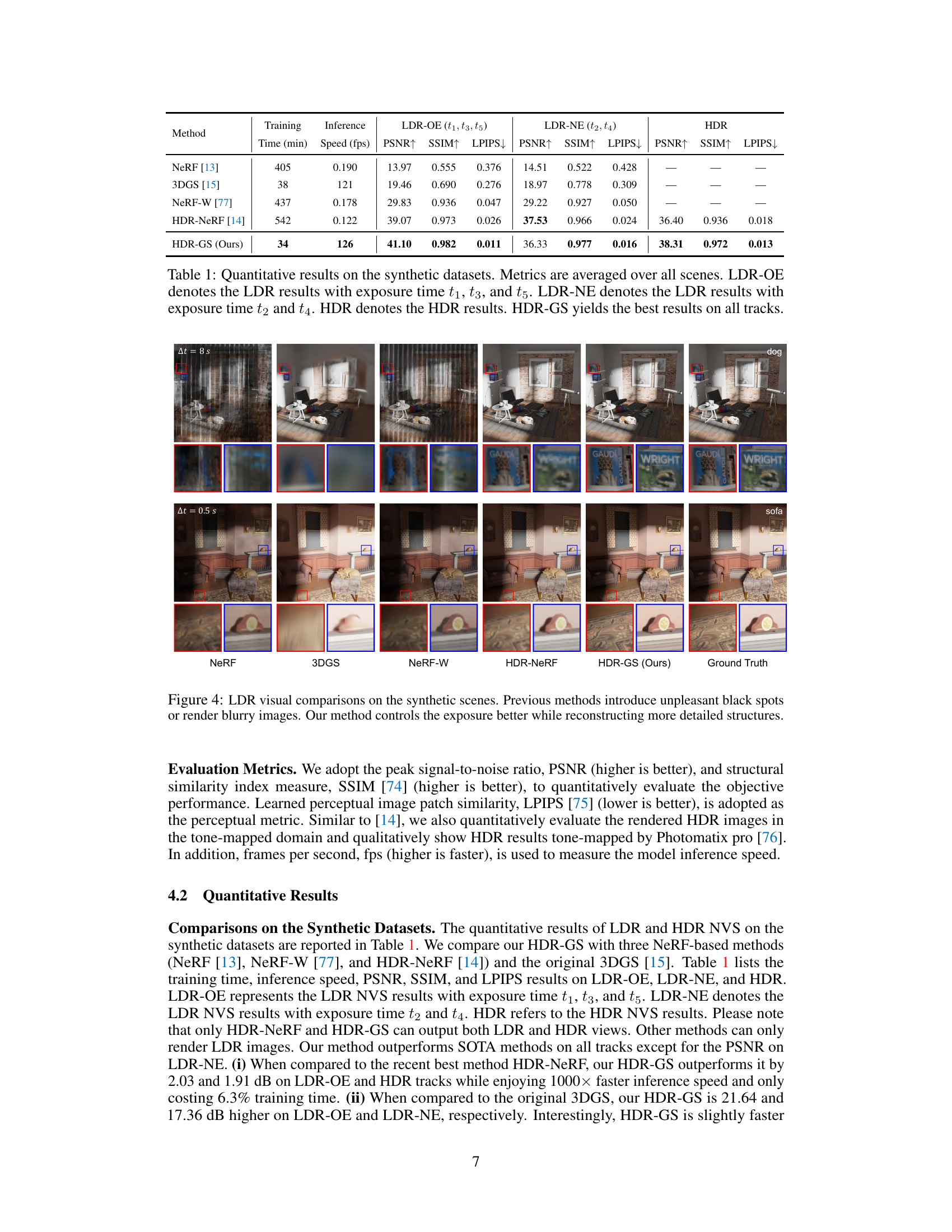

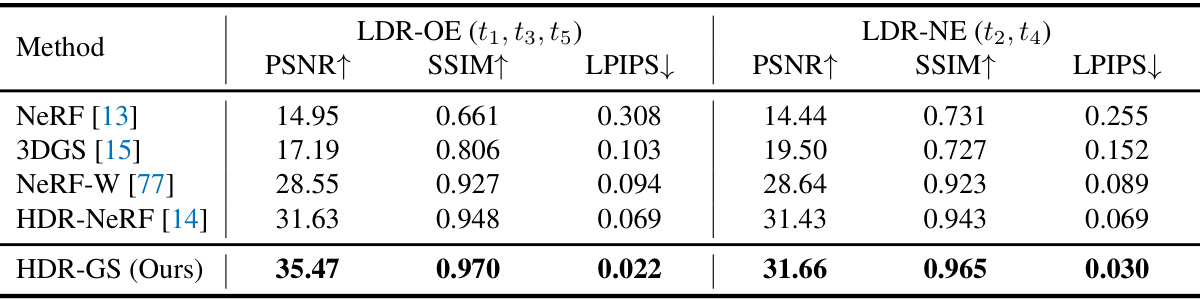

This table presents a quantitative comparison of different novel view synthesis (NVS) methods on synthetic datasets. Metrics include training time, inference speed (frames per second), Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), and Learned Perceptual Image Patch Similarity (LPIPS). The results are broken down into Low Dynamic Range (LDR) results obtained with exposure times t1, t3, t5 (LDR-OE) and t2, t4 (LDR-NE), and High Dynamic Range (HDR) results. The table highlights the superior performance of HDR-GS across all metrics.

In-depth insights#

HDR-GS Overview#

HDR-GS, or High Dynamic Range Gaussian Splatting, presents a novel approach to novel view synthesis (NVS) by leveraging the efficiency of Gaussian splatting. Its core innovation lies in a Dual Dynamic Range (DDR) Gaussian point cloud model, capable of representing both HDR and LDR information simultaneously. This allows for the generation of photorealistic HDR images and controllable LDR views with varying exposure times. The method addresses limitations of previous NeRF-based HDR NVS techniques, achieving significantly faster inference speed (1000x) while maintaining state-of-the-art quality. The DDR model, combined with parallel differentiable rasterization (PDR), is key to HDR-GS’s performance, enabling high-quality HDR reconstruction and exposure control. Furthermore, HDR-GS tackles data limitations by recalibrating camera parameters and employing Structure from Motion (SfM) to establish a robust initialization for Gaussian point clouds. This comprehensive approach makes HDR-GS a significant advancement in efficient, high-quality HDR NVS.

DDR Point Clouds#

The concept of “DDR Point Clouds” suggests a novel approach to representing 3D scenes, likely in the context of high dynamic range (HDR) imaging. It implies a data structure capable of simultaneously encoding both high and low dynamic range information within individual points. This dual representation likely allows for flexible tone mapping and rendering, catering to various display capabilities and desired visual effects. The use of spherical harmonics to fit HDR color within each point is a likely method employed, enhancing the accuracy of HDR color representation. This system likely uses a tone-mapping MLP to convert from the HDR to LDR color spaces. Efficiency is probably key here, aiming to surpass limitations of traditional ray tracing methods commonly used in HDR novel view synthesis. In essence, DDR Point Clouds provide a unified representation that handles the challenges of HDR rendering effectively and with improved speed.

PDR Processes#

Parallel Differentiable Rasterization (PDR) processes are a core component of the HDR-GS framework, enabling the simultaneous rendering of High Dynamic Range (HDR) and Low Dynamic Range (LDR) images. The parallelism in PDR is crucial for efficiency, allowing HDR and LDR views to be generated concurrently, significantly improving the overall speed. The differentiability of the rasterization process is key for training, allowing gradients to flow back through the rendering pipeline, which is essential for optimizing the Gaussian point cloud model. The PDR processes integrate tightly with the Dual Dynamic Range (DDR) Gaussian point cloud model, taking HDR and LDR color outputs as inputs to render realistic images with controllable exposure. This approach is more efficient than traditional methods, which typically handle HDR and LDR rendering separately. The integration of HDR and LDR in a parallel framework enhances flexibility, allowing HDR-GS to accommodate diverse applications, ranging from AR/VR to film production, by providing both HDR quality and flexible LDR outputs.

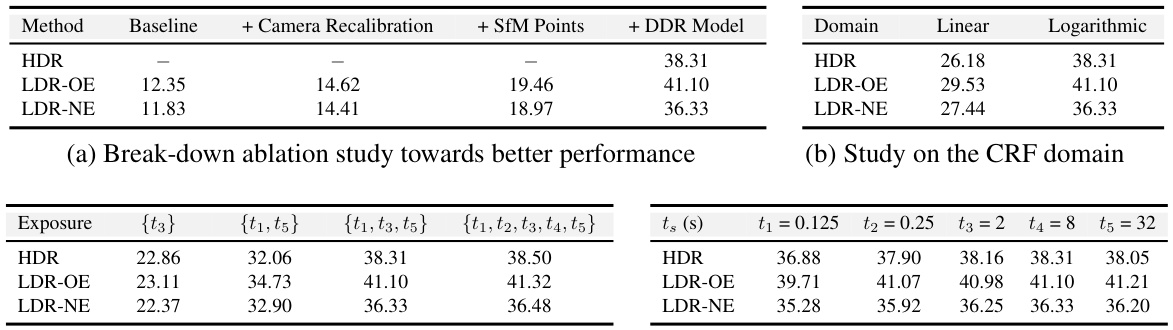

Ablation Studies#

Ablation studies systematically remove components of a model to assess their individual contributions. In the context of a research paper, this section would typically detail experiments where specific modules, features, or hyperparameters are disabled or altered. The results illustrate the impact of each component on the overall performance, helping to isolate the effects and validate design choices. A strong ablation study demonstrates a deep understanding of the model architecture. It can reveal unexpected interactions, identify redundant parts, and pinpoint crucial elements that significantly affect the output quality. Furthermore, carefully designed ablation experiments offer strong evidence supporting the proposed methods’ effectiveness. This is achieved by showing a clear performance degradation when key components are removed, thereby highlighting their necessity. The transparency and thoroughness of ablation studies directly contribute to the reproducibility and reliability of the research findings.

Future of HDR-GS#

The future of HDR-GS (High Dynamic Range Gaussian Splatting) looks promising, particularly in real-time applications where speed is paramount. Further research could focus on enhancing the model’s ability to handle complex scenes with diverse lighting conditions and dynamic objects. Improving the robustness to noisy or incomplete data is also crucial for wider applicability. Exploring alternative neural network architectures and optimization techniques could lead to improved performance. The development of efficient tone mapping strategies for various display devices will enhance the realism of rendered images. Integrating HDR-GS with other computer vision tasks, such as depth estimation and semantic segmentation, offers exciting opportunities for creating more immersive and interactive experiences. Finally, investigation into the potential of HDR-GS in different modalities beyond visible light, such as infrared or multispectral imaging, could lead to groundbreaking advancements.

More visual insights#

More on figures

This figure compares the point clouds and rendered views of 3DGS and HDR-GS. The top row shows the results from the original 3DGS method, illustrating blurry LDR views and color distortions in the point cloud, stemming from training on images with varying exposures. The inability of 3DGS to control exposure is also highlighted. The bottom row presents the HDR-GS results, demonstrating clear HDR images with 3D consistency and the ability to render LDR views with user-specified exposure times.

This figure illustrates the pipeline of the HDR-GS method. It shows three stages: (a) recalibration and initialization using SfM to obtain camera parameters and initialize 3D Gaussian point clouds; (b) a dual dynamic range Gaussian point cloud model that uses spherical harmonics for HDR color and MLPs for tone mapping LDR colors from HDR colors with a controllable exposure time; and (c) parallel differentiable rasterization processes for rendering both HDR and LDR views from the model.

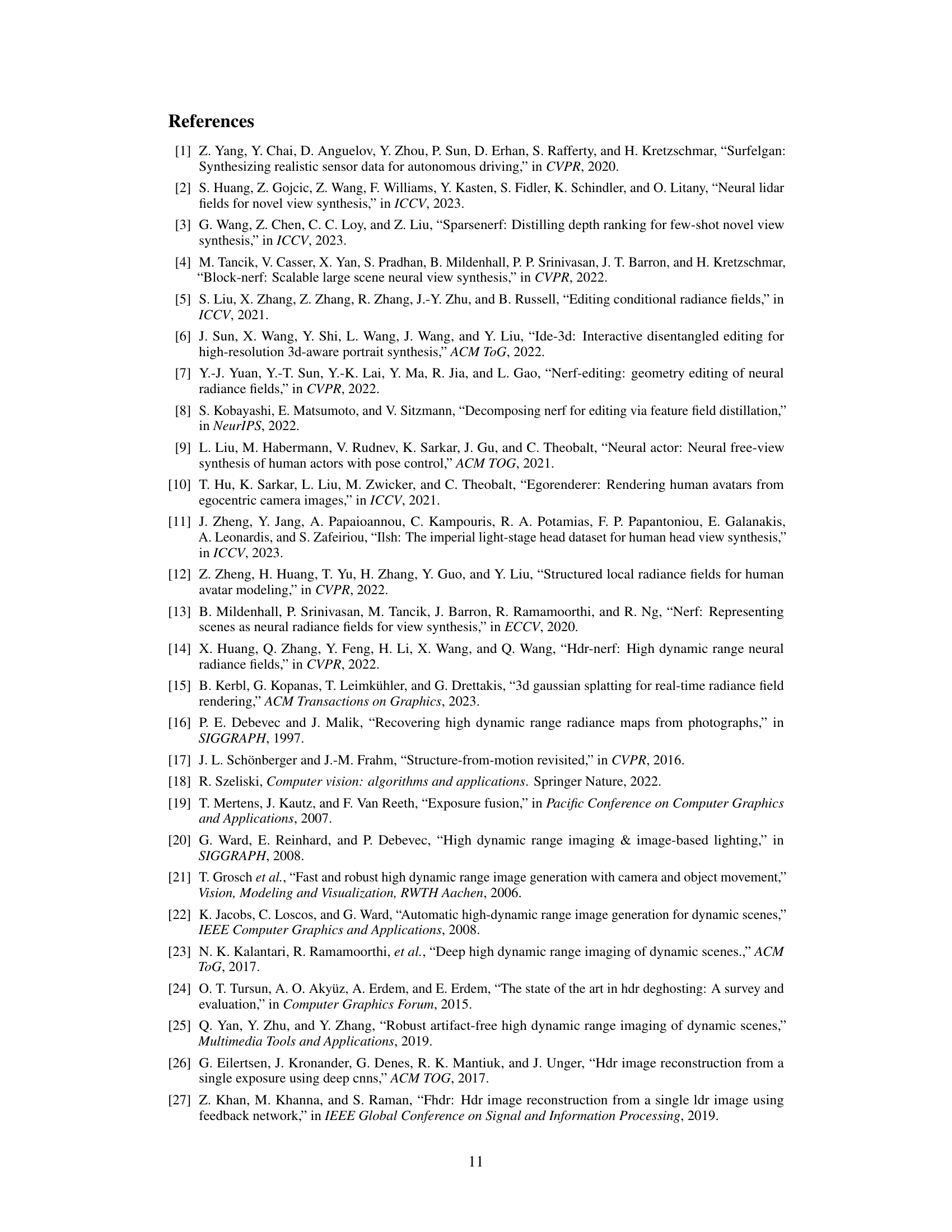

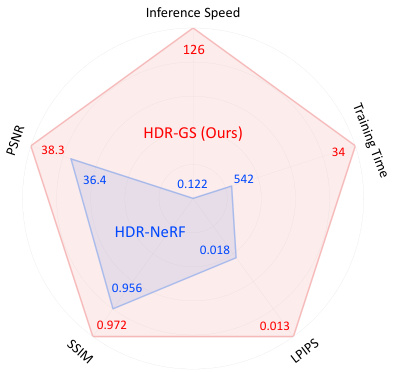

This figure compares the LDR visual results of different novel view synthesis methods (NeRF, 3DGS, NeRF-W, HDR-NeRF, and HDR-GS) on two synthetic scenes (‘dog’ and ‘sofa’) with two different exposure times (Δt = 8s and Δt = 0.5s). The figure highlights how HDR-GS (the authors’ method) produces significantly clearer images with better exposure control and detail preservation, compared to existing methods that suffer from artifacts like blurry regions, black spots, and inaccurate exposure rendering. The ground truth images are also shown for comparison.

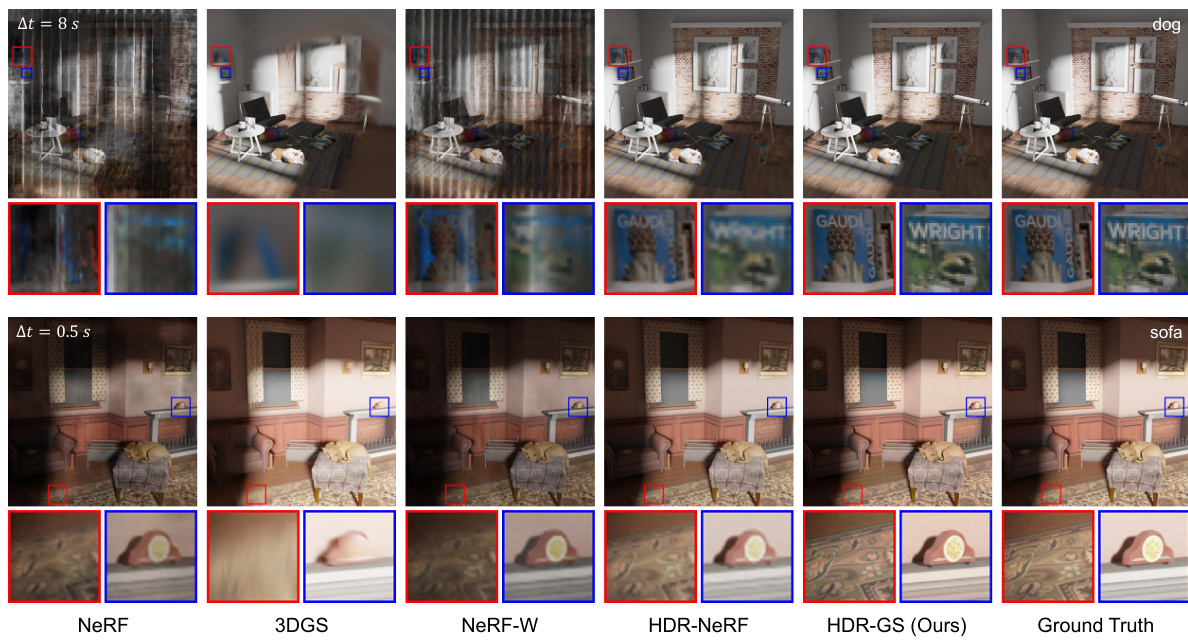

This figure compares the LDR visual results of different novel view synthesis methods (NeRF, 3DGS, NeRF-W, HDR-NeRF, and HDR-GS) on real-world scenes with two different exposure times (Δt = 0.17s and Δt = 0.1s). It showcases how previous methods like NeRF, 3DGS, and NeRF-W often introduce artifacts such as black spots or blurry images, especially when dealing with challenging lighting conditions. In contrast, HDR-NeRF shows improvement but still suffers from blurriness in some areas. The proposed HDR-GS method demonstrates significantly better performance, producing cleaner and more detailed images with better exposure control, closely resembling the ground truth images.

This figure compares the LDR visual results of different novel view synthesis methods on synthetic scenes. The top row shows the ground truth images. The middle rows show the results from HDR-NeRF and the proposed HDR-GS method. The bottom row shows zoomed-in regions highlighting the differences in detail and clarity. HDR-NeRF produces some black spots and blurry areas, while HDR-GS is able to reconstruct clearer images and better control the exposure.

More on tables

This table presents a quantitative comparison of different novel view synthesis (NVS) methods on real-world datasets. It shows the performance of several methods, including NeRF, 3DGS, NeRF-W, and HDR-NeRF, against the proposed HDR-GS method. The metrics used for comparison are PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index), and LPIPS (Learned Perceptual Image Patch Similarity). The table is divided into two main sections: LDR-OE (Low Dynamic Range - Optimized Exposure), which uses images with exposure times t1, t3, and t5; and LDR-NE (Low Dynamic Range - Non-Optimized Exposure), which uses images with exposure times t2 and t4. HDR-GS demonstrates superior performance across all metrics and exposure scenarios.

This table presents a quantitative comparison of the proposed HDR-GS model against other state-of-the-art models on synthetic datasets. It evaluates performance using Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), Learned Perceptual Image Patch Similarity (LPIPS), training time (in minutes), and inference speed (frames per second). The results are broken down for Low Dynamic Range (LDR) images using exposure times t1, t3, t5 (LDR-OE) and exposure times t2, t4 (LDR-NE), and for High Dynamic Range (HDR) images. HDR-GS demonstrates superior results across all metrics.

Full paper#