↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world interactive decision-making applications (bandits, contextual bandits, reinforcement learning) involve delays in receiving rewards. This creates difficulties in attributing rewards to specific decisions, making it challenging to design effective algorithms. A recent study found that a ‘Graves-Lai constant’ of zero is crucial for achieving the theoretically desirable ‘bounded regret’ (meaning consistent, near-optimal performance). However, this condition is very restrictive.

This paper investigates the relationship between delay robustness and bounded regret. The authors demonstrate a near-equivalence between these two properties. Specifically, they show that a zero Graves-Lai constant is not only necessary but also sufficient for linear models to achieve both bounded regret and robustness to unknown reward delays. This significantly broadens the applicability of bounded-regret algorithms to practical scenarios.

Key Takeaways#

Why does it matter?#

This paper is crucial because it bridges the gap between theoretical efficiency and practical robustness in online decision-making by establishing a near-equivalence between delay robustness and bounded regret. This directly addresses the limitations of pursuing bounded regret in real-world applications where delays are inevitable. The findings are particularly important for linear models and open new avenues for designing more efficient and robust algorithms.

Visual Insights#

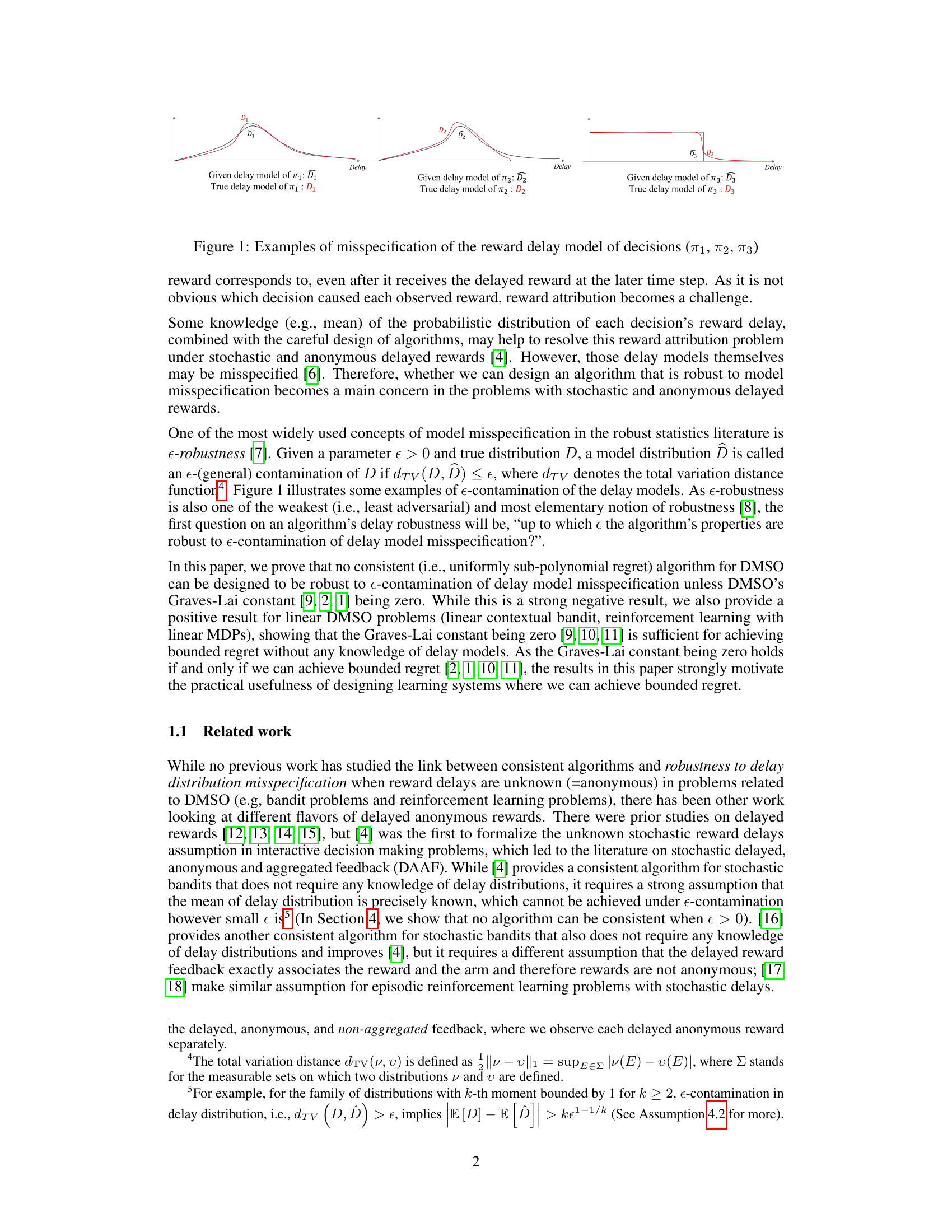

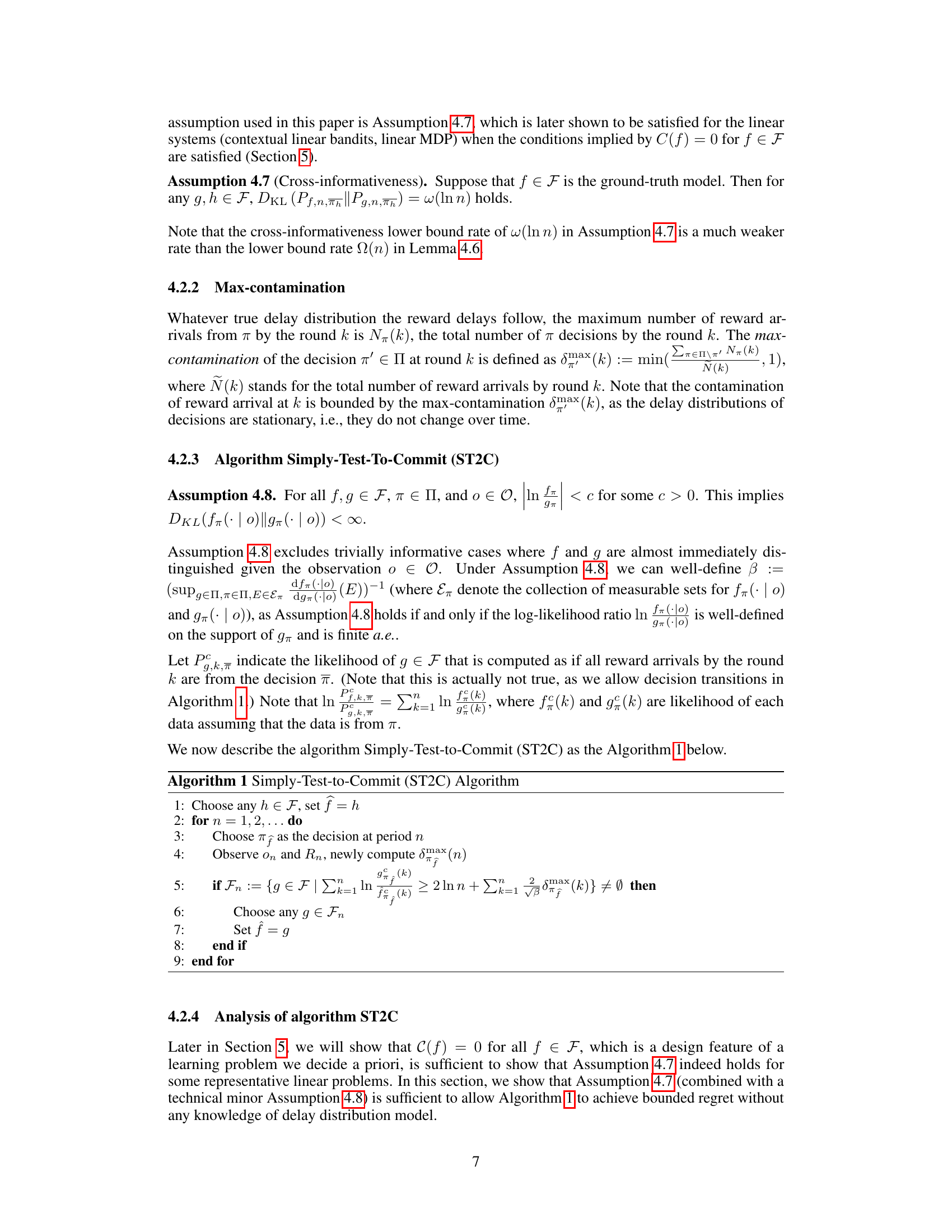

This figure shows three examples of how the assumed (given) reward delay model can be misspecified compared to the actual (true) delay model. Each example represents a different decision (π1, π2, π3), with the blue line representing the given delay model and the red line representing the true delay model. The difference between the given and true delay models illustrates the concept of model misspecification (epsilon-contamination) discussed in the paper. The total variation distance between the true and given models is used as a measure of misspecification.

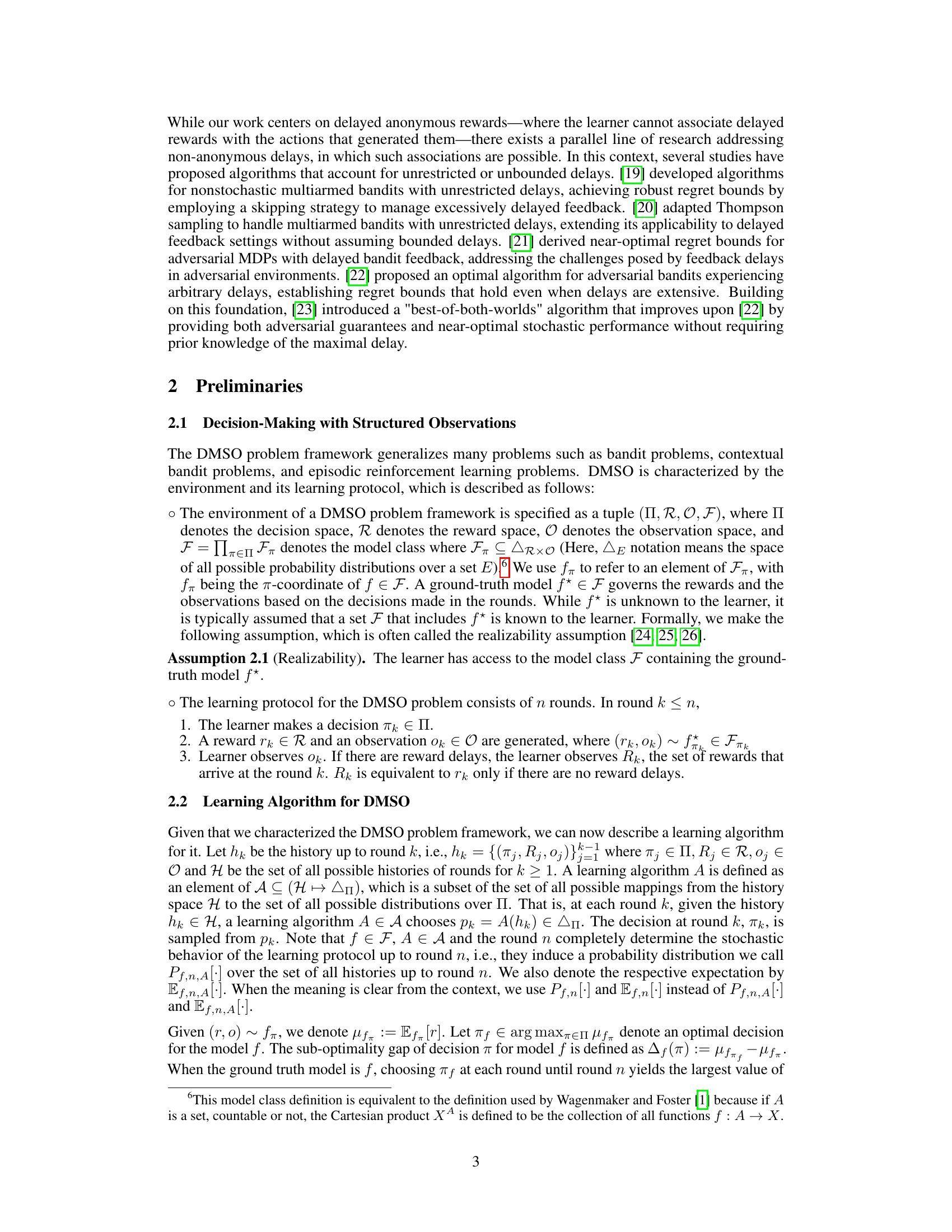

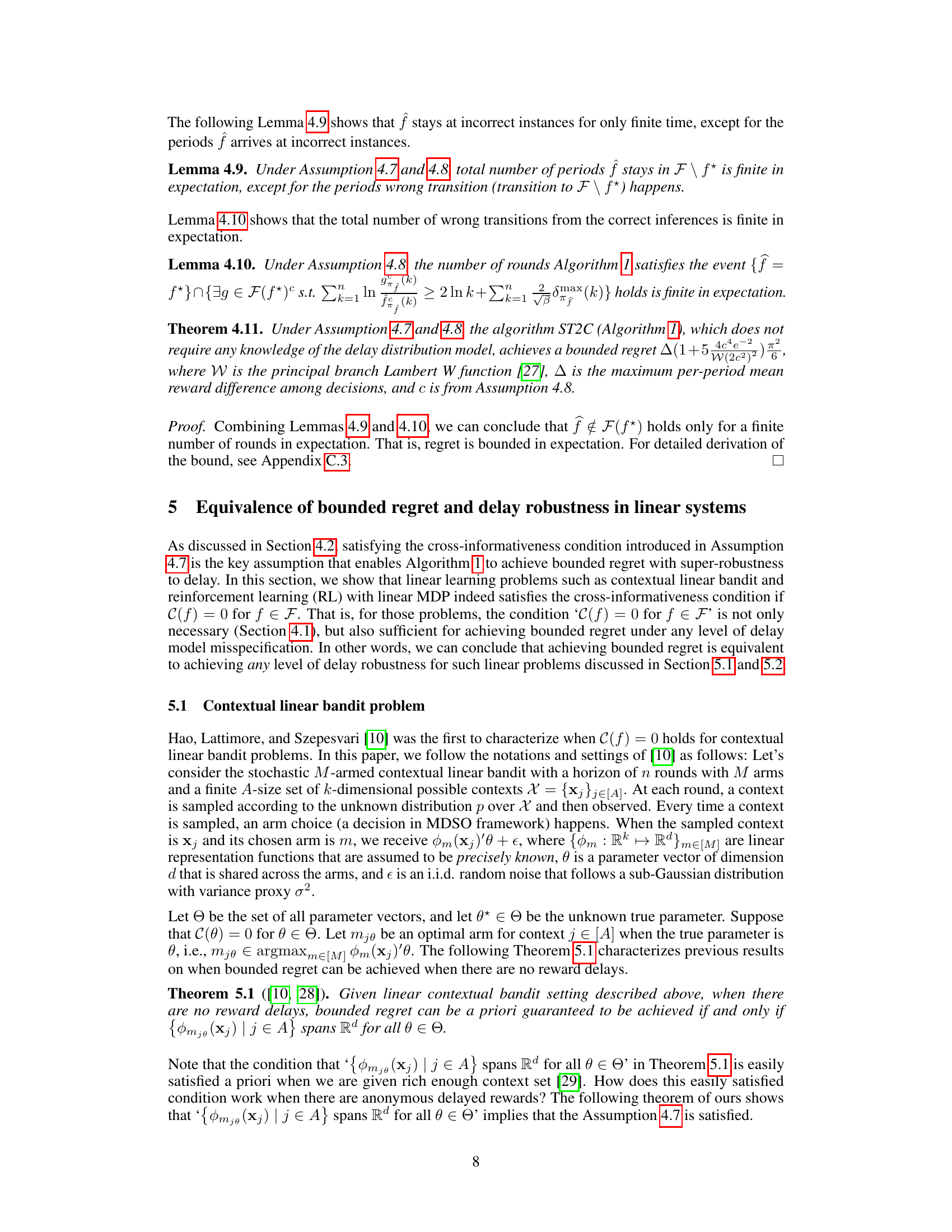

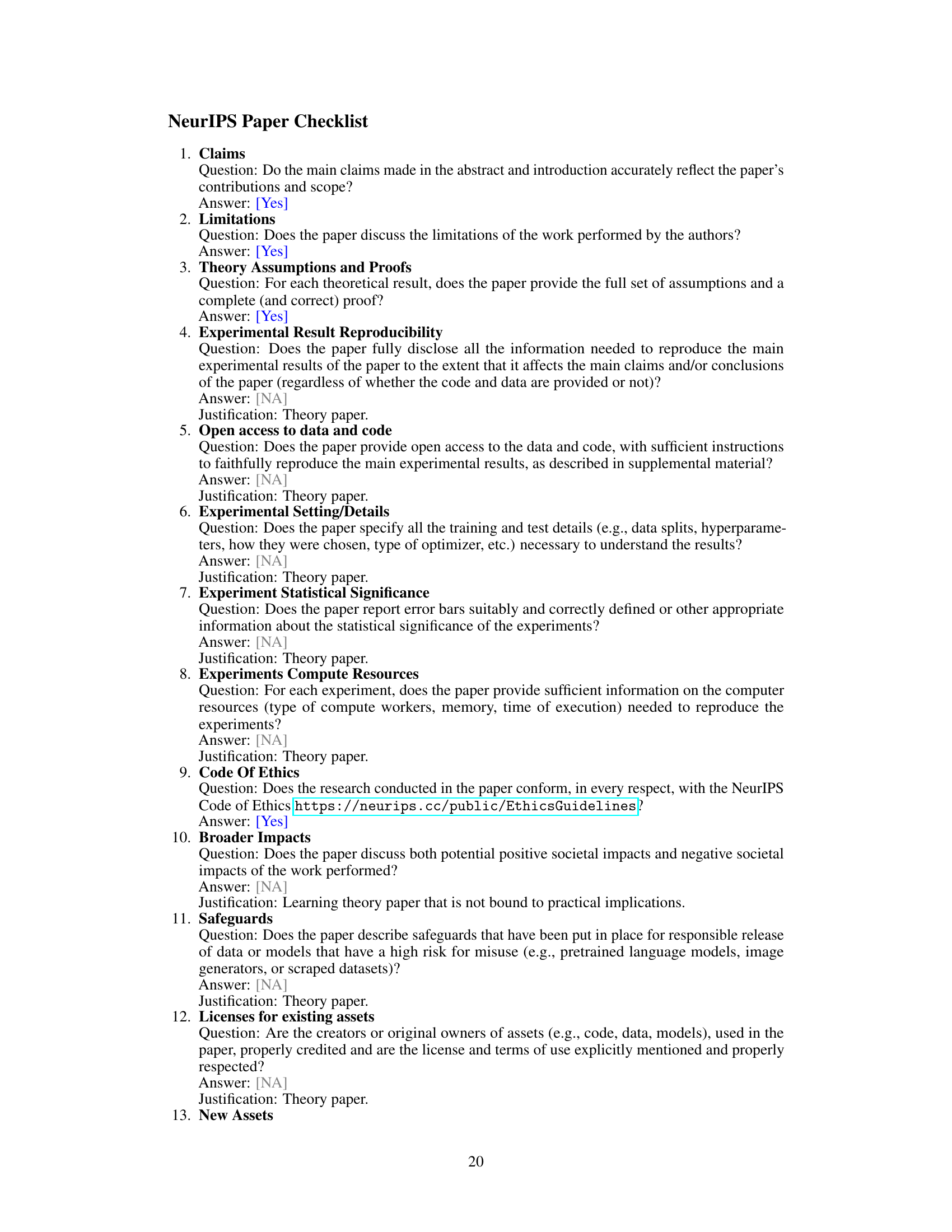

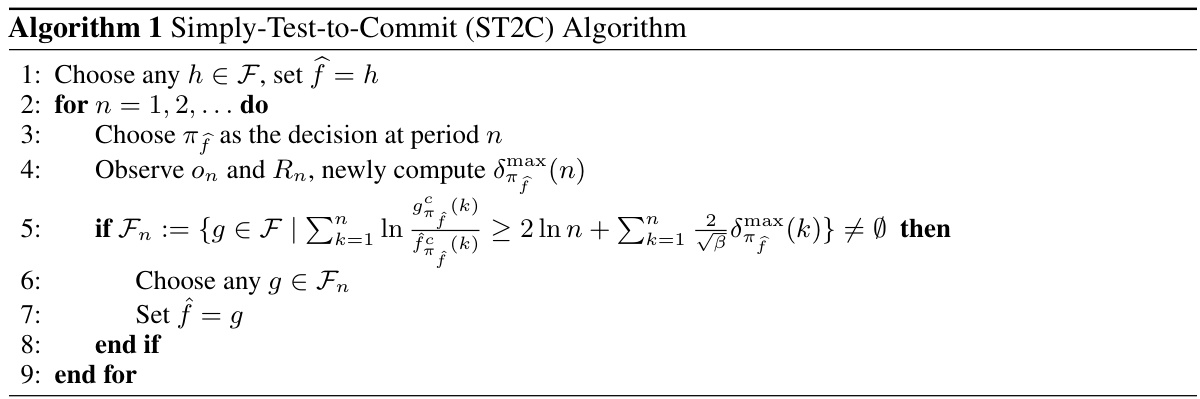

This algorithm is designed for decision-making problems with structured observations (DMSO) and delayed, anonymous rewards. It iteratively chooses decisions, observes outcomes and rewards, and updates its model. The core logic involves testing whether a new model g is significantly better than the current model f based on a log-likelihood ratio test, incorporating a penalty term involving the maximum contamination. If a better model is found, the algorithm switches to it. This procedure is repeated until the end of the time horizon.

Full paper#