↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Federated graph learning (FGL) faces challenges with non-IID data across clients and structural heterogeneity in cross-domain scenarios. Existing methods struggle to share knowledge effectively or tailor solutions to local data distributions. This leads to suboptimal global collaboration and unsuitable personalized models.

The proposed FedSSP framework uses spectral knowledge to address the knowledge conflict caused by domain shifts, and adds personalized preference modules to accommodate heterogeneous graph structures across clients. By sharing only generic spectral knowledge, FedSSP enables effective global collaboration, while its Personalized Graph Preference Adjustment (PGPA) module tailors the resulting features to each client’s local data. Experimental results on various cross-dataset and cross-domain tasks show that FedSSP significantly outperforms existing FGL approaches.

Key Takeaways#

Why does it matter?#

This paper is relevant to researchers in federated learning and graph neural networks because it targets two problems that arise together in cross-domain scenarios: non-IID data and structural heterogeneity. It proposes a new framework that reports significant improvements over existing methods and opens avenues for further research in personalized federated graph learning.

Visual Insights#

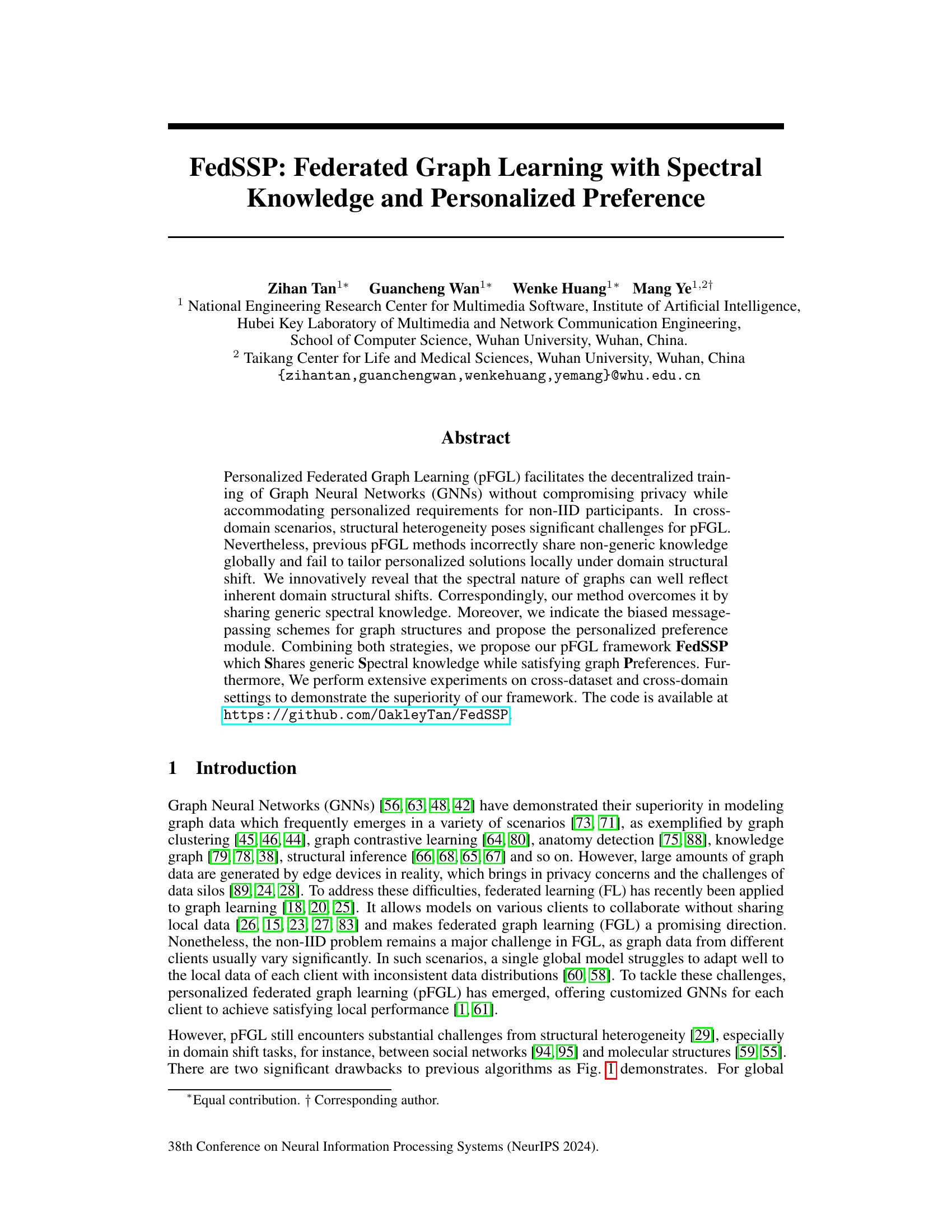

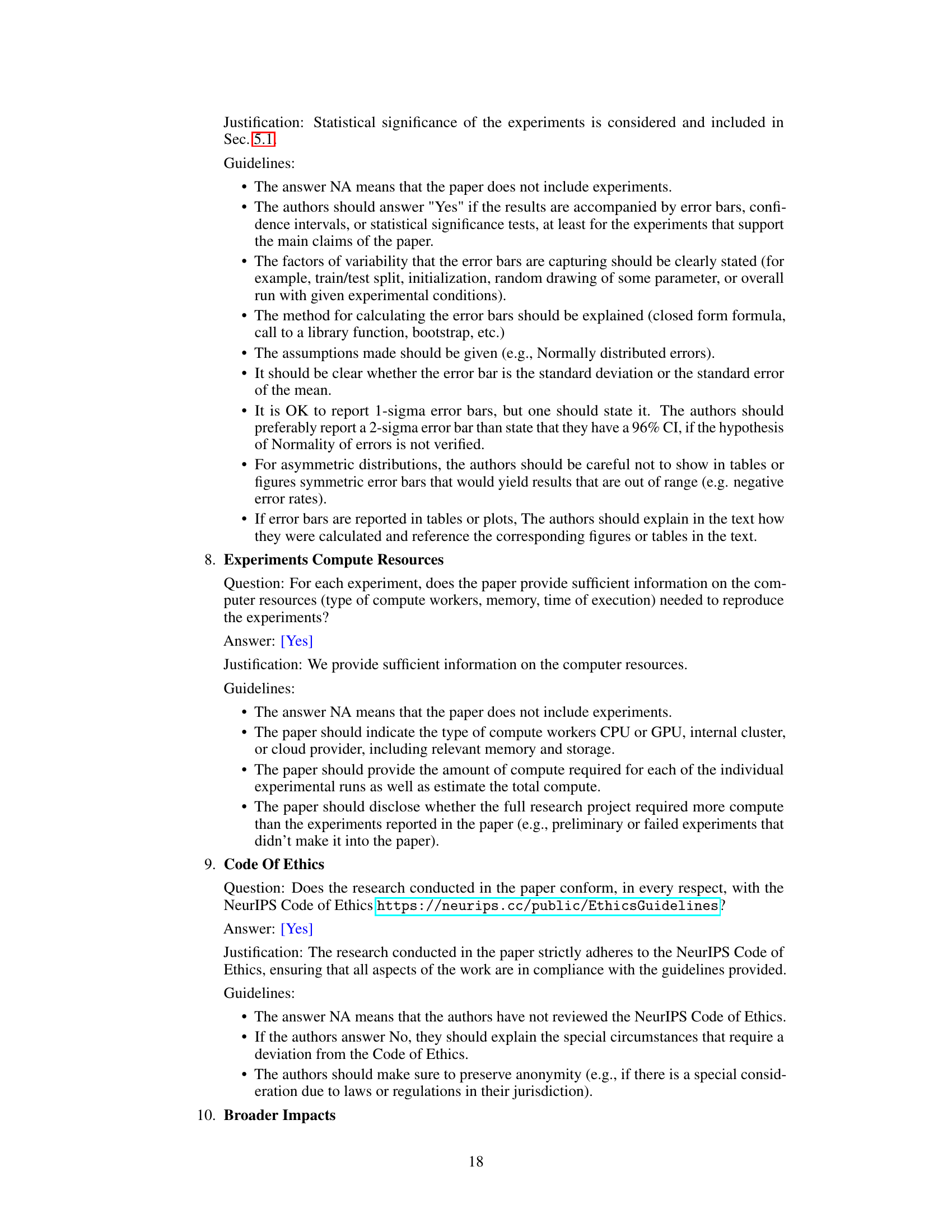

This figure illustrates the challenges of cross-domain personalized federated graph learning (pFGL). Panel (a) shows how structural heterogeneity across domains (e.g., molecule and social network graphs) leads to knowledge conflict when non-generic knowledge is shared globally. Panel (b) demonstrates how a single aggregated model fails to satisfy the diverse preferences of individual clients, leading to unsuitable features for local applications. Panel (c) provides a heatmap visualizing the Jensen-Shannon divergence of algebraic connectivity and eigenvalue distributions across six datasets from three domains, highlighting the spectral biases reflecting these structural shifts.
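The quantities named in panel (c) can be illustrated on toy graphs. The following is a minimal sketch, not the paper's measurement code: it assumes the symmetric normalized Laplacian (so eigenvalues fall in [0, 2]), takes the second-smallest eigenvalue as the algebraic connectivity, and uses a 5-bin histogram with a base-2 Jensen-Shannon divergence. The ring and star graphs and all function names are hypothetical stand-ins for real datasets.

```python
import numpy as np

def normalized_laplacian_eigenvalues(adj):
    """Ascending eigenvalues of I - D^{-1/2} A D^{-1/2} (assumes no isolated nodes)."""
    adj = np.asarray(adj, dtype=float)
    inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    lap = np.eye(len(adj)) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]
    # Clip tiny numerical overshoot so every value lands in [0, 2].
    return np.clip(np.linalg.eigvalsh(lap), 0.0, 2.0)

def eigenvalue_histogram(eigs, bins=5):
    """Empirical eigenvalue distribution over [0, 2] (bin count is an assumption)."""
    counts, _ = np.histogram(eigs, bins=bins, range=(0.0, 2.0))
    return counts / counts.sum()

def js_divergence(p, q):
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = 0.5 * (p + q)

    def kl(a, b):
        mask = a > 0  # terms with a == 0 contribute nothing
        return float(np.sum(a[mask] * np.log2(a[mask] / b[mask])))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def ring_graph(n):
    adj = np.zeros((n, n))
    idx = np.arange(n)
    adj[idx, (idx + 1) % n] = 1.0
    adj[(idx + 1) % n, idx] = 1.0
    return adj

def star_graph(n):
    adj = np.zeros((n, n))
    adj[0, 1:] = 1.0
    adj[1:, 0] = 1.0
    return adj

ring_eigs = normalized_laplacian_eigenvalues(ring_graph(8))
star_eigs = normalized_laplacian_eigenvalues(star_graph(8))

# Second-smallest eigenvalue as the algebraic connectivity (assumed convention).
connectivity_ring = ring_eigs[1]
connectivity_star = star_eigs[1]

divergence = js_divergence(eigenvalue_histogram(ring_eigs),
                           eigenvalue_histogram(star_eigs))
print(connectivity_ring, connectivity_star, divergence)
```

Two graphs with the same number of nodes already produce different connectivity values and a non-zero divergence, which is the kind of structural shift the heatmap summarizes across datasets.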

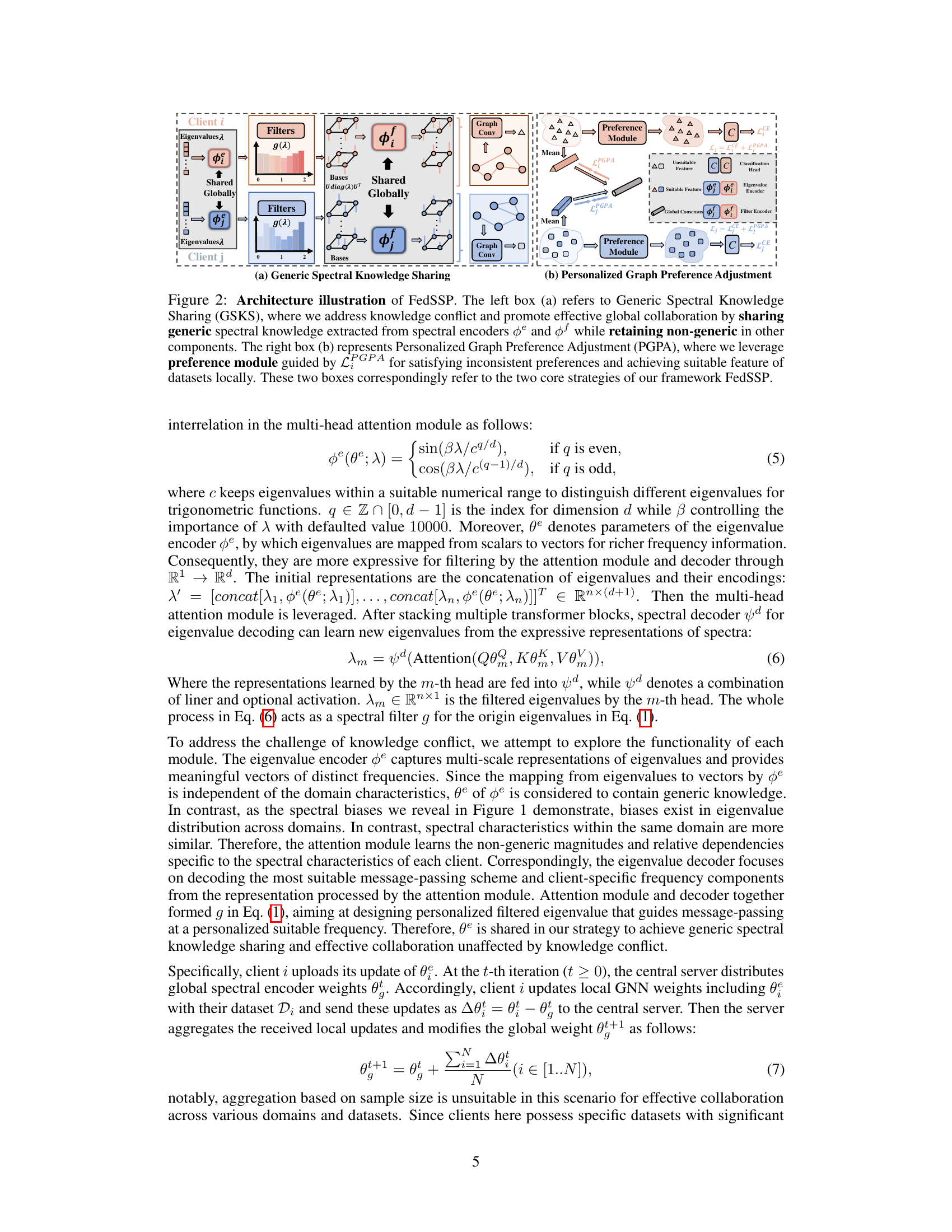

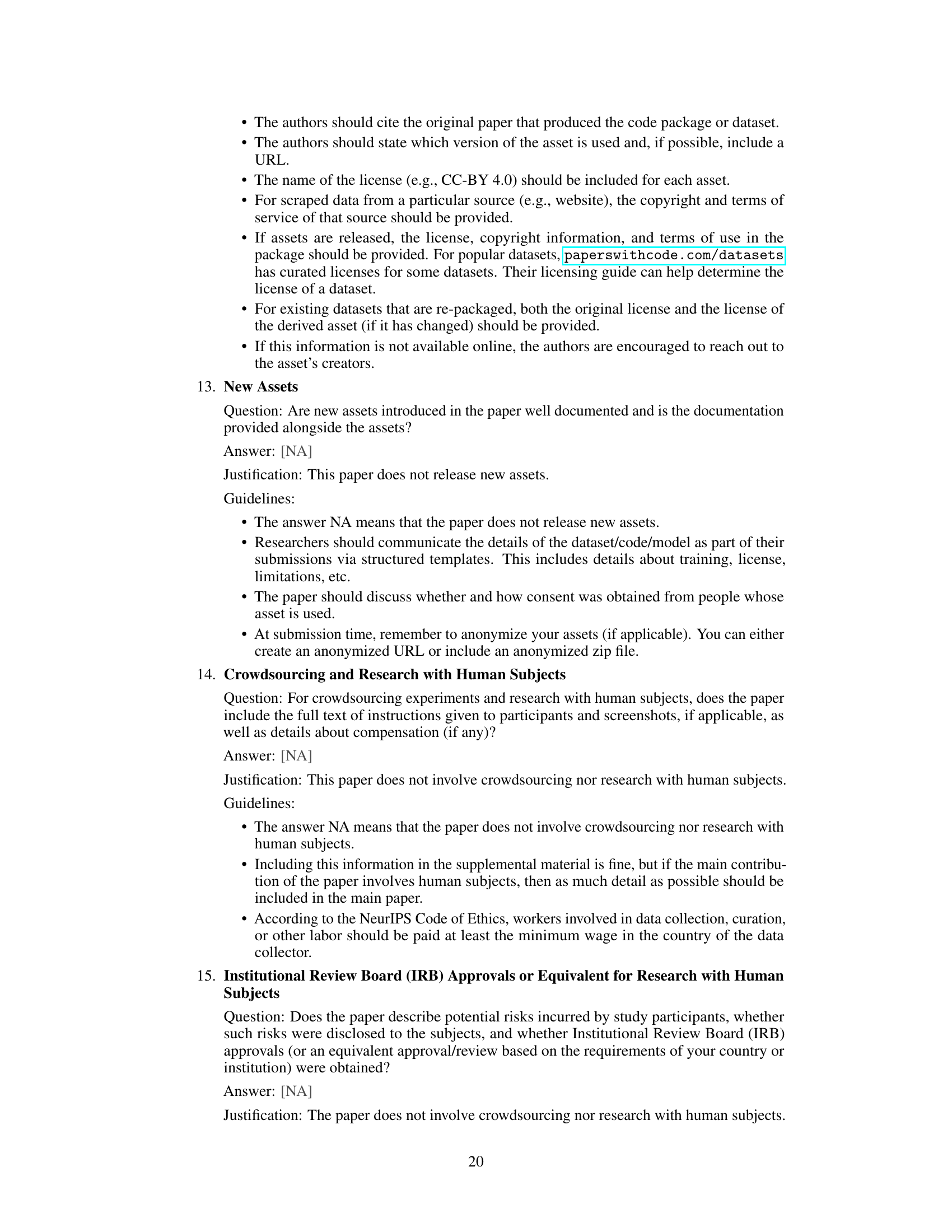

This table compares the performance of FedSSP against nine other state-of-the-art methods across six different experimental settings. The settings vary in the number of datasets and domains involved, assessing the model’s robustness in handling non-IID data distribution across clients in federated graph learning. The results show the average test accuracy and standard deviation for each method across the settings, highlighting FedSSP’s superior performance in most cases.

In-depth insights#

Spectral Bias in pFGL#

In personalized federated graph learning (pFGL), spectral bias emerges as a critical challenge because graph structures differ across decentralized clients. In conventional federated learning, data distribution discrepancies primarily affect feature spaces; pFGL faces the added complexity of structural variations that change how graph neural networks (GNNs) process information. These structural differences manifest as spectral discrepancies: they alter the eigenvalues and eigenvectors of the graph Laplacian matrices, which fundamentally shape GNNs’ message-passing mechanisms. The result is biased model aggregation, which hinders effective global model training. The issue is further exacerbated in cross-domain scenarios, where structural heterogeneity is more pronounced. Addressing it requires strategies such as generic spectral knowledge sharing to mitigate conflicts and personalized preference adjustments to accommodate local structural idiosyncrasies. Effective solutions must disentangle generic spectral information from client-specific, non-generic features, so that the global model benefits from shared knowledge without being overly influenced by any single client’s structure.
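The link between the Laplacian spectrum and message passing can be made concrete with a small sketch. It assumes the symmetric normalized Laplacian and a single propagation step with the normalized adjacency, which corresponds to the frequency response h(λ) = 1 − λ; this is a textbook illustration rather than the encoder used in FedSSP, and the path and complete graphs are hypothetical examples.

```python
import numpy as np

def normalized_laplacian(adj):
    """I - D^{-1/2} A D^{-1/2}; assumes every node has at least one edge."""
    adj = np.asarray(adj, dtype=float)
    inv_sqrt = 1.0 / np.sqrt(adj.sum(axis=1))
    return np.eye(len(adj)) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]

def spectral_filter(adj, x, response):
    """Apply U h(Lambda) U^T to the signal x."""
    lam, U = np.linalg.eigh(normalized_laplacian(adj))
    return U @ (response(lam) * (U.T @ x))

def path_graph(n):
    adj = np.zeros((n, n))
    idx = np.arange(n - 1)
    adj[idx, idx + 1] = 1.0
    adj[idx + 1, idx] = 1.0
    return adj

def complete_graph(n):
    return np.ones((n, n)) - np.eye(n)

def one_step_response(lam):
    # One propagation step with the normalized adjacency: h(lambda) = 1 - lambda.
    return 1.0 - lam

rng = np.random.default_rng(0)
x = rng.normal(size=6)  # the same node signal on both graphs

matches = {}
spectra = {}
for name, adj in {"path": path_graph(6), "complete": complete_graph(6)}.items():
    lap = normalized_laplacian(adj)
    spectra[name] = np.linalg.eigvalsh(lap)
    y_spectral = spectral_filter(adj, x, one_step_response)
    y_spatial = (np.eye(6) - lap) @ x  # neighbor aggregation in the node domain
    matches[name] = bool(np.allclose(y_spectral, y_spatial))
    print(name, np.round(spectra[name], 3), matches[name])
```

The same filter is evaluated at different eigenvalues on the two graphs, so identical weights yield different effective behavior per structure; averaging such weights across clients is where the bias described above can enter.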

GSKS: Spectral Sharing#

The proposed GSKS (Generic Spectral Knowledge Sharing) method tackles challenges in federated graph learning that arise from structural heterogeneity across domains. Sharing all structural knowledge globally often leads to conflicts due to domain-specific variations, so GSKS instead leverages the spectral properties of graphs. It extracts generic spectral knowledge, which is less sensitive to structural differences, and shares only that. This is achieved by processing graph signals in the spectral domain, sharing the generic components, and retaining the non-generic components locally, thereby mitigating domain-specific biases. The core idea is that spectral characteristics, though influenced by structure, often reveal underlying patterns shared across domains, allowing effective collaboration without overriding client-specific knowledge. The approach moves beyond global knowledge sharing that ignores structural shifts and addresses structural heterogeneity directly through spectral analysis and selective knowledge transfer. According to the paper, the resulting model learns more robustly and generalizes better across diverse datasets.
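The sharing pattern described here, where generic components are aggregated and non-generic components stay on the client, can be sketched as a partial parameter average. This is only an illustration under stated assumptions: the `generic.` and `local.` key prefixes, the function names, and the sample-size weighting (borrowed from FedAvg) are all hypothetical, and the paper's actual criterion for what counts as generic is not reproduced.

```python
import numpy as np

def aggregate_shared(client_params, client_sizes, shared_prefix="generic."):
    """Weighted average of the parameters whose names start with shared_prefix.

    client_params: list of dicts mapping parameter name -> np.ndarray
    client_sizes:  list of local sample counts used as weights (assumption)
    """
    total = float(sum(client_sizes))
    shared_keys = [k for k in client_params[0] if k.startswith(shared_prefix)]
    return {
        k: sum((n / total) * p[k] for p, n in zip(client_params, client_sizes))
        for k in shared_keys
    }

def apply_global(local_params, global_shared):
    """Overwrite only the shared entries; everything else stays client-specific."""
    updated = dict(local_params)
    updated.update(global_shared)
    return updated

# Two hypothetical clients with one shared and one private parameter each.
clients = [
    {"generic.w": np.array([1.0, 1.0]), "local.w": np.array([10.0])},
    {"generic.w": np.array([3.0, 3.0]), "local.w": np.array([-5.0])},
]
sizes = [1, 3]

global_shared = aggregate_shared(clients, sizes)
clients = [apply_global(p, global_shared) for p in clients]
print(global_shared["generic.w"], clients[0]["local.w"], clients[1]["local.w"])
```

After the round, both clients hold the same `generic.w` while their `local.w` values are untouched, so domain-specific knowledge never enters the average.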

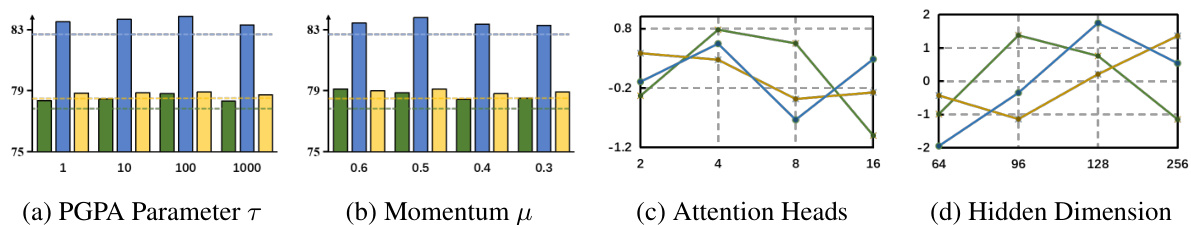

PGPA: Preference Tuning#

The proposed Personalized Graph Preference Adjustment (PGPA) module adapts the model to the characteristics of each client’s graph data. It addresses the non-IID nature of federated graph learning by letting each client tune its preference to its local data distribution. This is achieved through learnable parameters that adjust the features extracted by the global model, so that the learned features better suit the graph structures present in each client’s data. A further contribution is the handling of over-reliance on preference adjustment: a regularization term, based on the Mean Squared Error (MSE) between local and global graph features, prevents the feature extractor from depending excessively on PGPA. This keeps personalized adjustments in balance with the benefits of global collaboration. The effectiveness of PGPA is validated through experiments and ablation studies, which show improved performance in cross-dataset and cross-domain scenarios.
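The two ingredients described here, a learnable adjustment and an MSE regularizer, can be sketched in a few lines. The exact form in the paper is not given in this summary, so the following makes several assumptions: the adjustment is an additive shift, the "local graph feature" is the batch mean of the extractor's output before adjustment, and the regularizer is added to the task loss with a hypothetical weight of 0.1. All names are illustrative.

```python
import numpy as np

def adjust_features(features, preference):
    """Shift extracted features by a learnable per-client vector (assumed additive)."""
    return features + preference  # preference broadcasts over the batch dimension

def alignment_penalty(features, global_feature):
    """MSE between the mean local feature (pre-adjustment) and the global feature."""
    local_mean = features.mean(axis=0)
    return float(np.mean((local_mean - global_feature) ** 2))

def total_loss(task_loss, features, global_feature, weight=0.1):
    """Task loss plus the weighted penalty; weight is a hypothetical hyperparameter."""
    return task_loss + weight * alignment_penalty(features, global_feature)

# Two graphs in a batch, each with a 2-dimensional extracted feature.
features = np.array([[1.0, 2.0],
                     [3.0, 4.0]])
preference = np.array([0.5, -0.5])      # would be learned locally
global_feature = np.array([2.0, 1.0])   # would be provided by the server

adjusted = adjust_features(features, preference)
penalty = alignment_penalty(features, global_feature)
loss = total_loss(0.5, features, global_feature)
print(adjusted, penalty, loss)
```

Because the penalty is computed on the unadjusted features, the extractor itself is pulled toward the global feature and cannot leave all of the adaptation to the preference vector.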

FedSSP Framework#

The FedSSP framework represents a novel approach to personalized federated graph learning (pFGL), aiming to overcome the limitations of existing methods in handling cross-domain scenarios with structural heterogeneity. It tackles the challenge of non-IID data by innovatively sharing generic spectral knowledge, leveraging the spectral nature of graphs to reflect inherent domain shifts. This strategy minimizes knowledge conflicts often present in global collaborations, facilitating more effective sharing of information. Furthermore, FedSSP incorporates a personalized preference module, addressing the biased message-passing schemes associated with diverse graph structures. By customizing these schemes, FedSSP ensures that the global model effectively adapts to the unique characteristics of each client’s local data. This dual approach, combining generic spectral knowledge sharing with personalized preference adjustments, significantly enhances both global collaboration efficiency and the effectiveness of local application of the model. FedSSP thus provides a more robust and accurate solution for pFGL, particularly in complex, cross-domain settings where traditional methods often struggle.

Future: Dynamic Models#

Extending graph neural networks (GNNs) to dynamic graph structures is a promising research direction. Static GNNs cannot adapt when a graph changes over time, which is common in real-world applications. A key question is how to update model parameters efficiently as the graph evolves, for example through incremental or online learning algorithms. Dynamic GNNs would better capture evolving relationships and provide more accurate predictions in settings with temporal aspects. Research in this direction must address the computational complexity and memory requirements of continuously updating models on large graphs. It would also be beneficial to explore different approaches to modeling temporal dynamics, such as combining GNNs with recurrent neural networks (RNNs) or with attention mechanisms like those in graph attention networks (GATs). This could pave the way for more robust and accurate predictive models in domains like social networks, recommendation systems, and traffic flow prediction, where dynamics are critical.

More visual insights#

More on figures

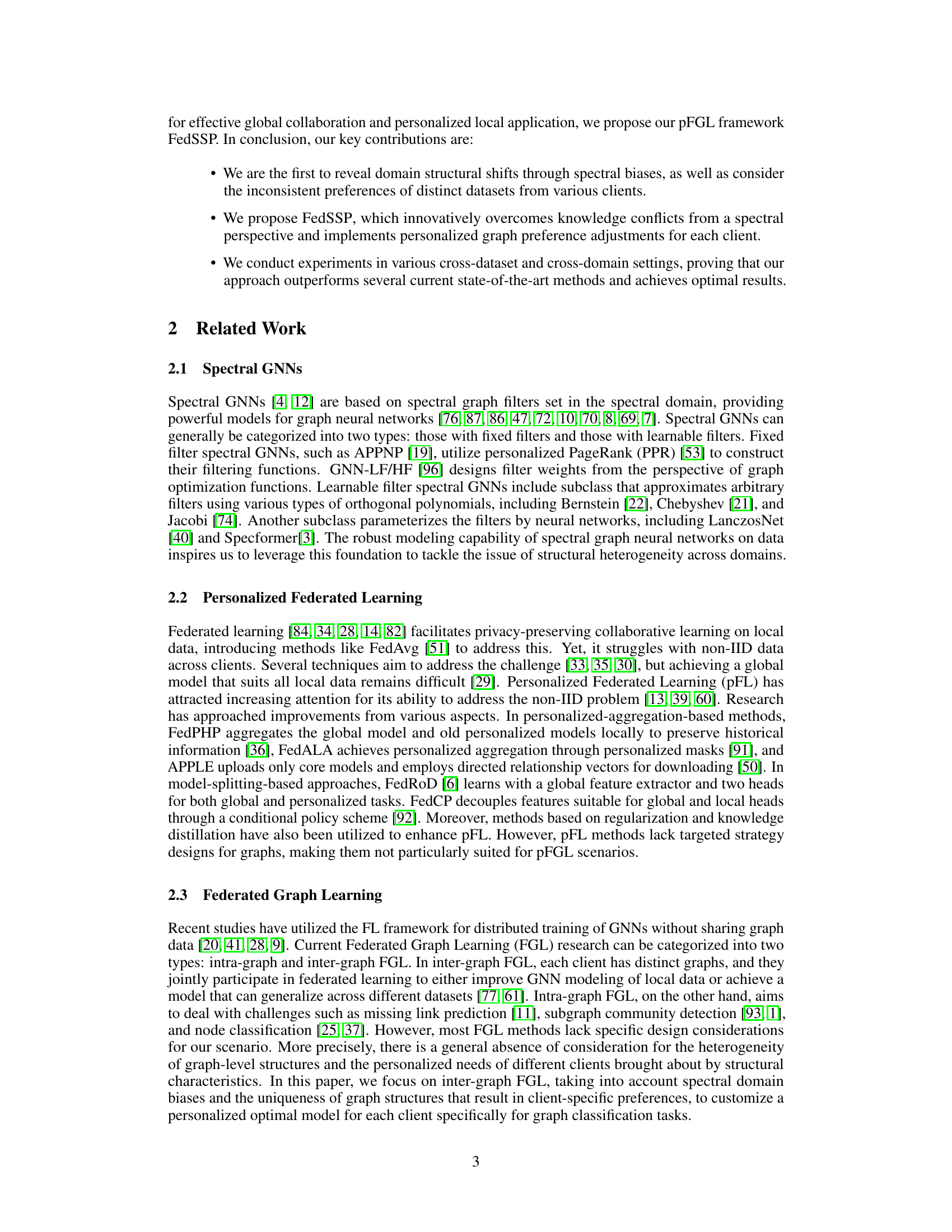

This figure illustrates the architecture of the FedSSP framework, highlighting its two core strategies: Generic Spectral Knowledge Sharing (GSKS) and Personalized Graph Preference Adjustment (PGPA). GSKS addresses knowledge conflicts by sharing generic spectral knowledge, extracted from spectral encoders, while retaining non-generic components locally to facilitate effective global collaboration. PGPA addresses inconsistent dataset preferences by using a preference module (L_PGPA in the figure) to customize features locally for optimal performance. The figure shows the data flow and processing steps within each strategy.

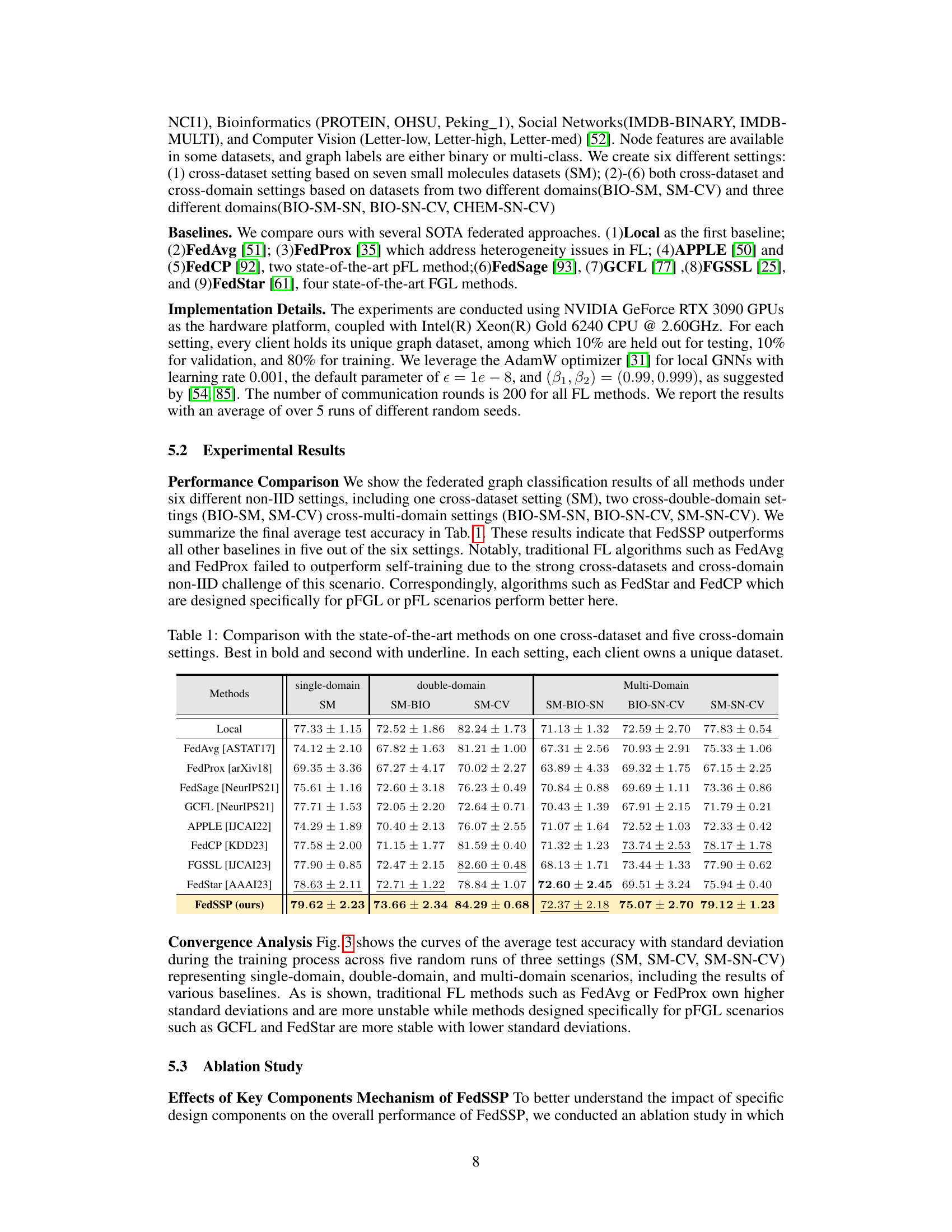

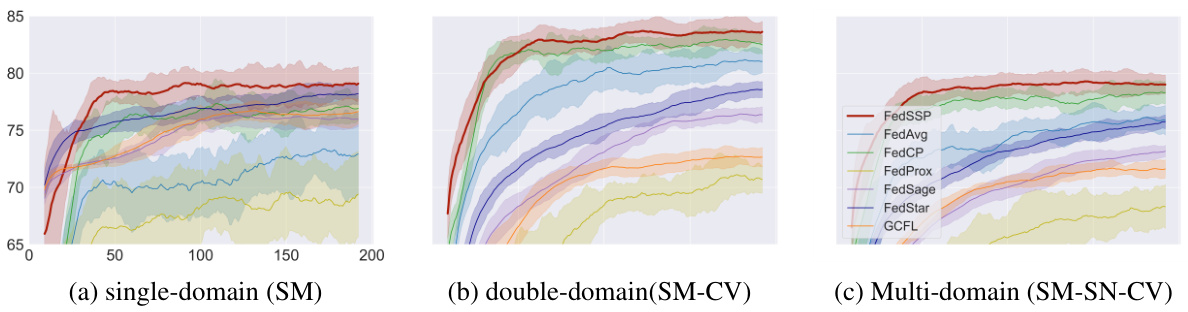

This figure displays the test accuracy curves across different federated learning methods (FedSSP, FedAvg, FedCP, FedProx, FedSage, FedStar, and GCFL) over 200 communication rounds. Three distinct experimental settings are compared: single-domain (SM), double-domain (SM-CV), and multi-domain (SM-SN-CV). The y-axis represents the test accuracy, ranging from 65% to 85%, and the x-axis denotes the number of communication rounds.


Full paper#