↗ OpenReview ↗ NeurIPS Homepage

TL;DR

Contrastive learning (CL) is empirically successful, but it lacks a strong theoretical foundation and struggles with noisy data and domain shifts. Existing CL methods focus primarily on pulling positive pairs together and ignore the broader distribution of latent representations, which limits their adaptability and robustness.

The paper introduces Generalized Contrastive Alignment (GCA), a framework that addresses these issues by reformulating CL as a distribution alignment problem and solving it with optimal transport (OT). This formulation gives flexible control over how samples are aligned. In particular, GCA-UOT, a variant based on unbalanced OT, performs strongly in noisy scenarios and on domain generalization tasks, surpassing existing methods.
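To make the "CL as distribution alignment" idea concrete, here is a minimal sketch, not the authors' implementation. It assumes cosine similarities between two augmented views, a balanced entropy-regularized OT problem with uniform marginals solved by Sinkhorn iterations, and a loss that compares the resulting plan against an identity target. The names `sinkhorn_plan`, `alignment_loss`, `eps`, and `n_iters` are illustrative choices, and the unbalanced variant (GCA-UOT) is not shown.

```python
import numpy as np

def sinkhorn_plan(sim, eps=0.5, n_iters=200):
    """Entropy-regularized OT plan with uniform marginals (illustrative)."""
    n = sim.shape[0]
    K = np.exp(sim / eps)          # Gibbs kernel built from similarities
    mu = np.full(n, 1.0 / n)       # assumed uniform row marginal
    nu = np.full(n, 1.0 / n)       # assumed uniform column marginal
    u = np.ones(n)
    v = np.ones(n)
    for _ in range(n_iters):
        u = mu / (K @ v)           # rescale rows to match mu
        v = nu / (K.T @ u)         # rescale columns to match nu
    return u[:, None] * K * v[None, :]

def alignment_loss(P):
    """KL divergence from the identity target (I / n) to the plan P."""
    n = P.shape[0]
    return -np.mean(np.log(n * np.diag(P)))

# Toy data: the second view is a noisy copy of the first (assumption).
rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))
z2 = z1 + 0.1 * rng.normal(size=(8, 16))
z1n = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
z2n = z2 / np.linalg.norm(z2, axis=1, keepdims=True)

sim = z1n @ z2n.T                  # cosine similarity matrix
P = sinkhorn_plan(sim)
loss = alignment_loss(P)
print(P.sum(axis=0), loss)
```

The loss shrinks as more of each row's mass concentrates on the diagonal, that is, as each sample is transported mainly to its own positive pair.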

Key Takeaways

Why does it matter?

This paper matters because it offers new theoretical insight into contrastive learning, connects it to the well-established field of optimal transport, and provides tools that can improve existing methods. It also opens avenues for incorporating domain knowledge and handling noisy data, which makes it relevant to current research in self-supervised learning and domain adaptation.

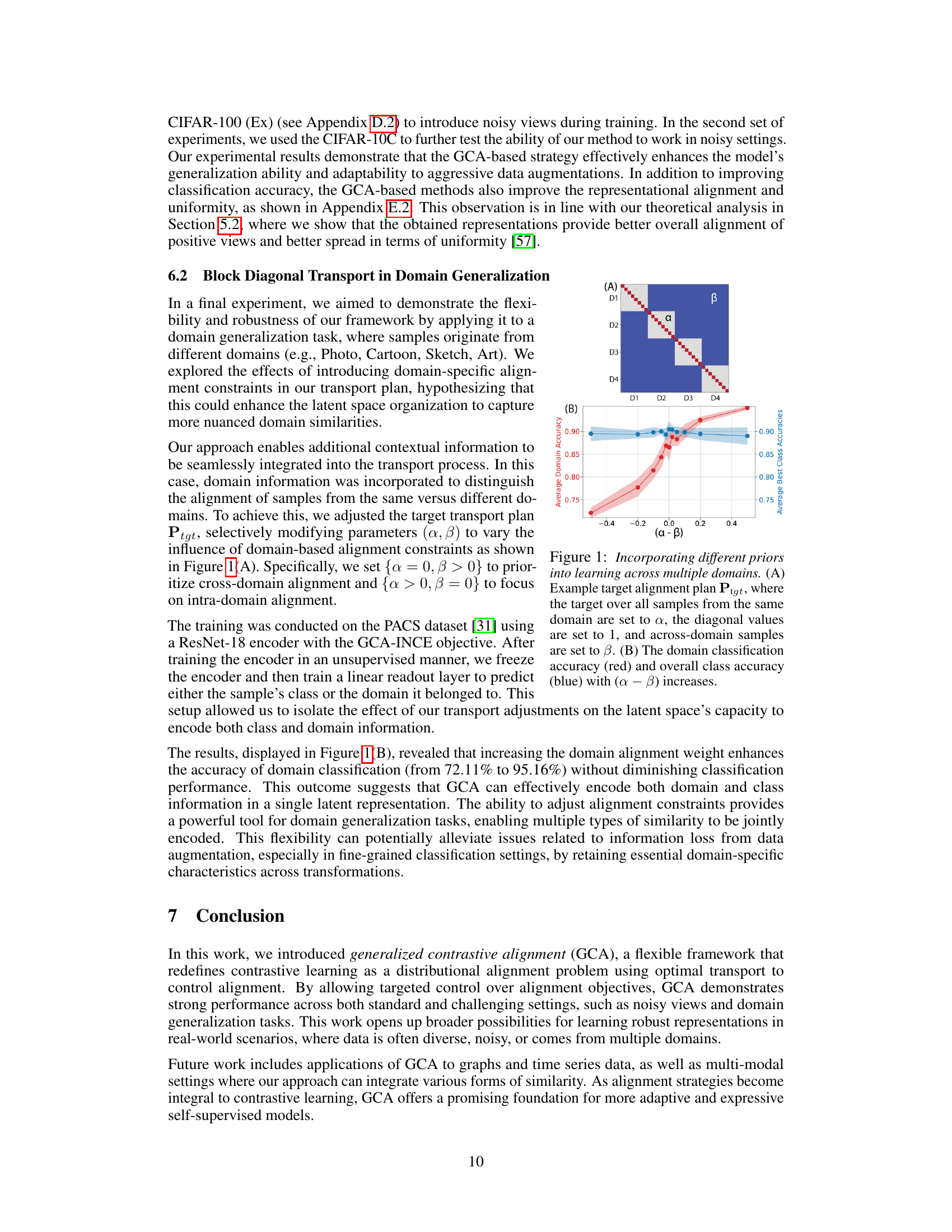

Visual Insights

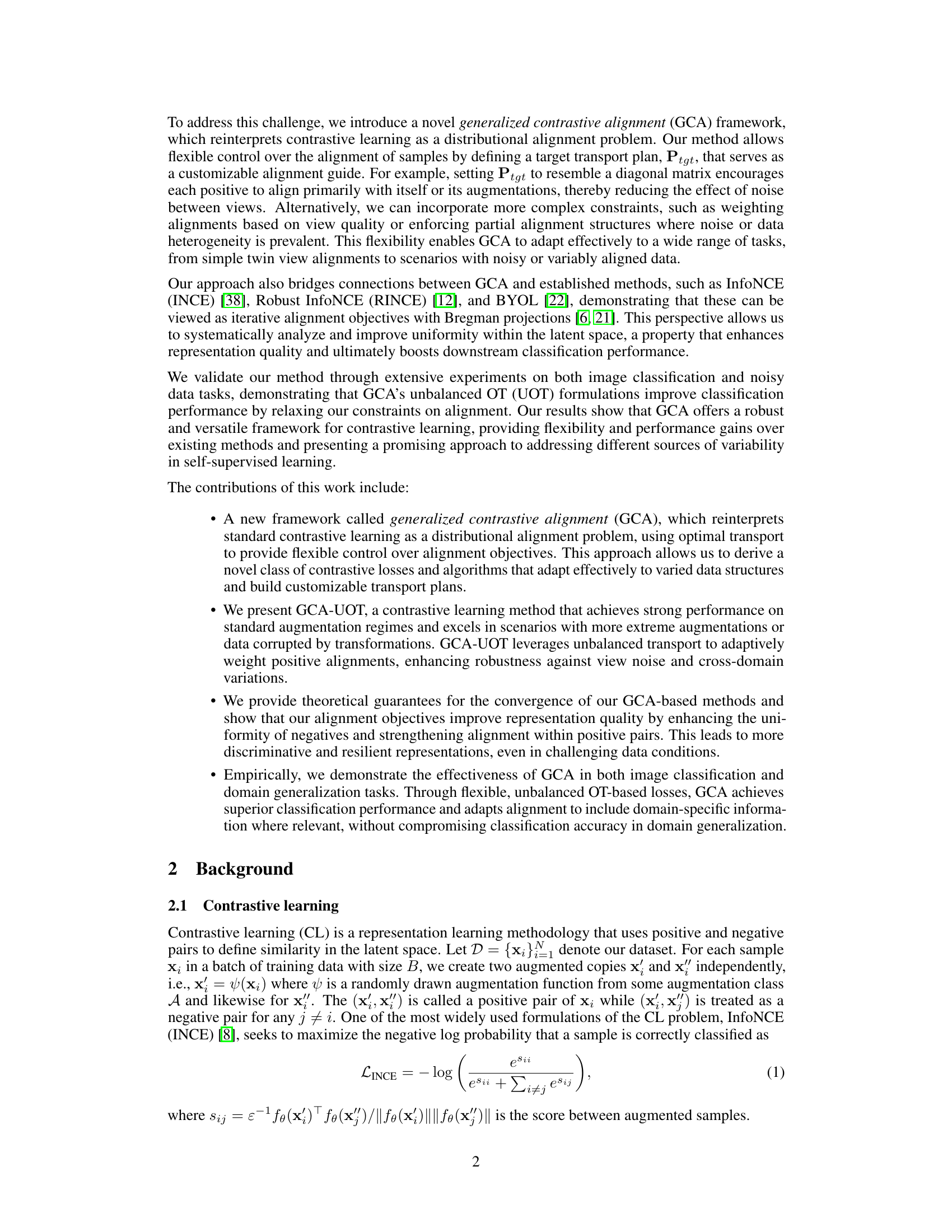

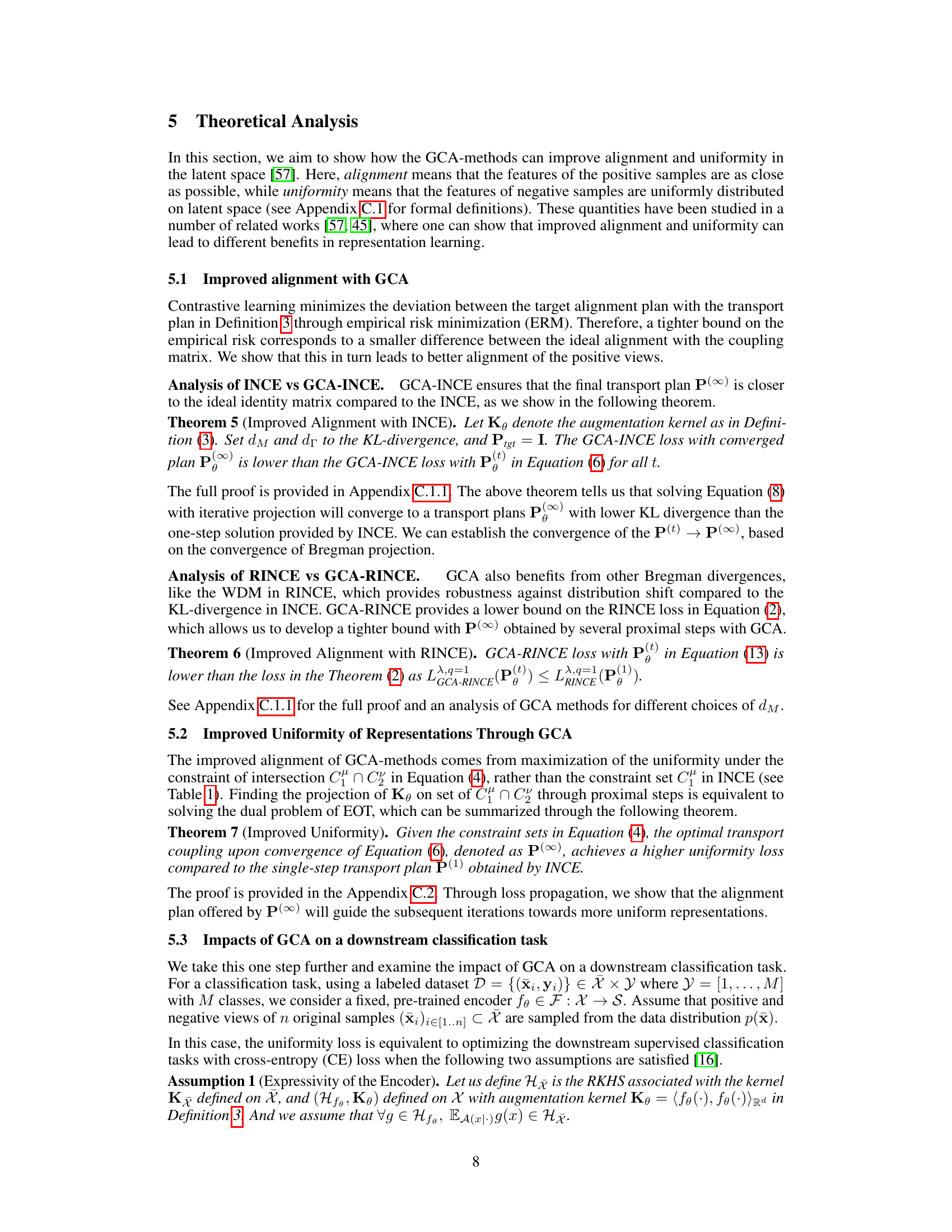

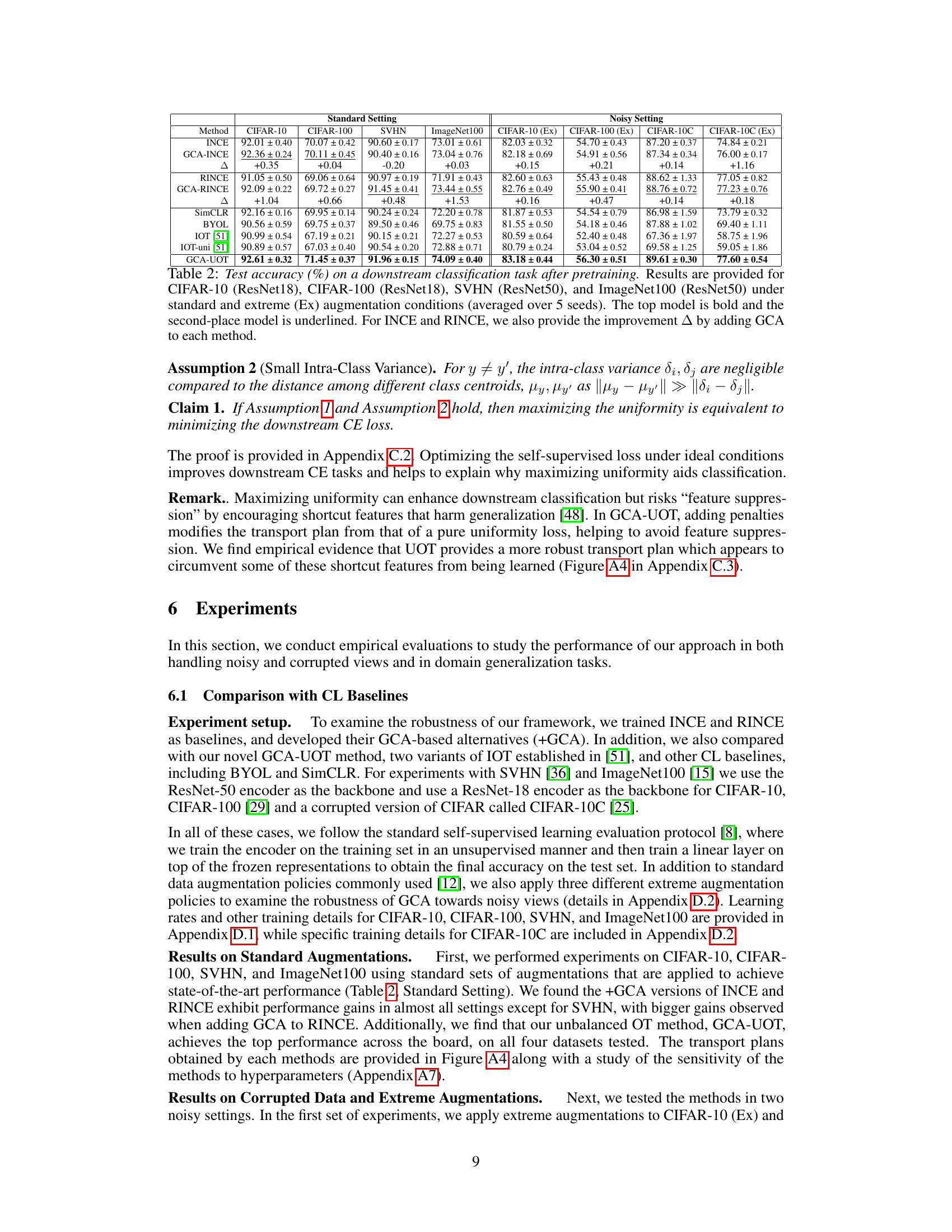

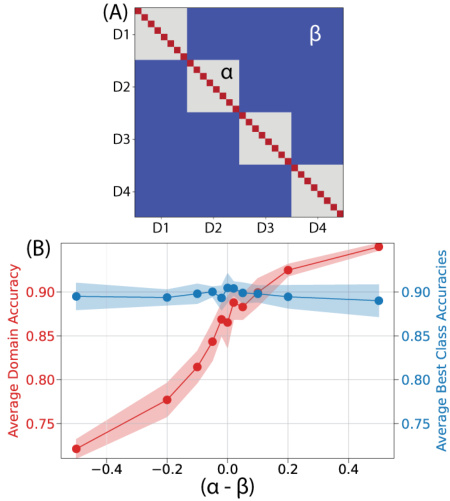

This figure shows how the proposed method (GCA) can improve domain generalization by incorporating domain-specific alignment constraints. Panel (A) illustrates a target transport plan (Ptgt) that encodes domain information by weighting the alignment between samples from the same domain (α) differently from the alignment between samples from different domains (β). Panel (B) shows the effect of this weighting on domain classification accuracy (red) and overall classification accuracy (blue) as the difference (α - β) increases. The results indicate that incorporating domain information into the alignment process improves the overall performance of the model.
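A domain-aware target plan of the kind described for panel (A) could be built roughly as follows. This is a sketch under assumptions: the diagonal weight of 1 for each sample's own positive pair, the normalization to unit total mass, and the helper name `domain_target_plan` are illustrative choices, not taken from the paper.

```python
import numpy as np

def domain_target_plan(domains, alpha, beta):
    """Target plan weighting same-domain pairs by alpha, cross-domain by beta."""
    domains = np.asarray(domains)
    same = domains[:, None] == domains[None, :]   # True where domains match
    P = np.where(same, alpha, beta).astype(float)
    np.fill_diagonal(P, 1.0)                      # assumed weight for positive pairs
    return P / P.sum()                            # assumed normalization to mass 1

# Four samples from two hypothetical domains.
domains = [0, 0, 1, 1]
P_tgt = domain_target_plan(domains, alpha=0.3, beta=0.1)
print(np.round(P_tgt, 3))
```

Increasing α relative to β puts more target mass on same-domain pairs, which is the quantity varied along the horizontal axis in panel (B).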

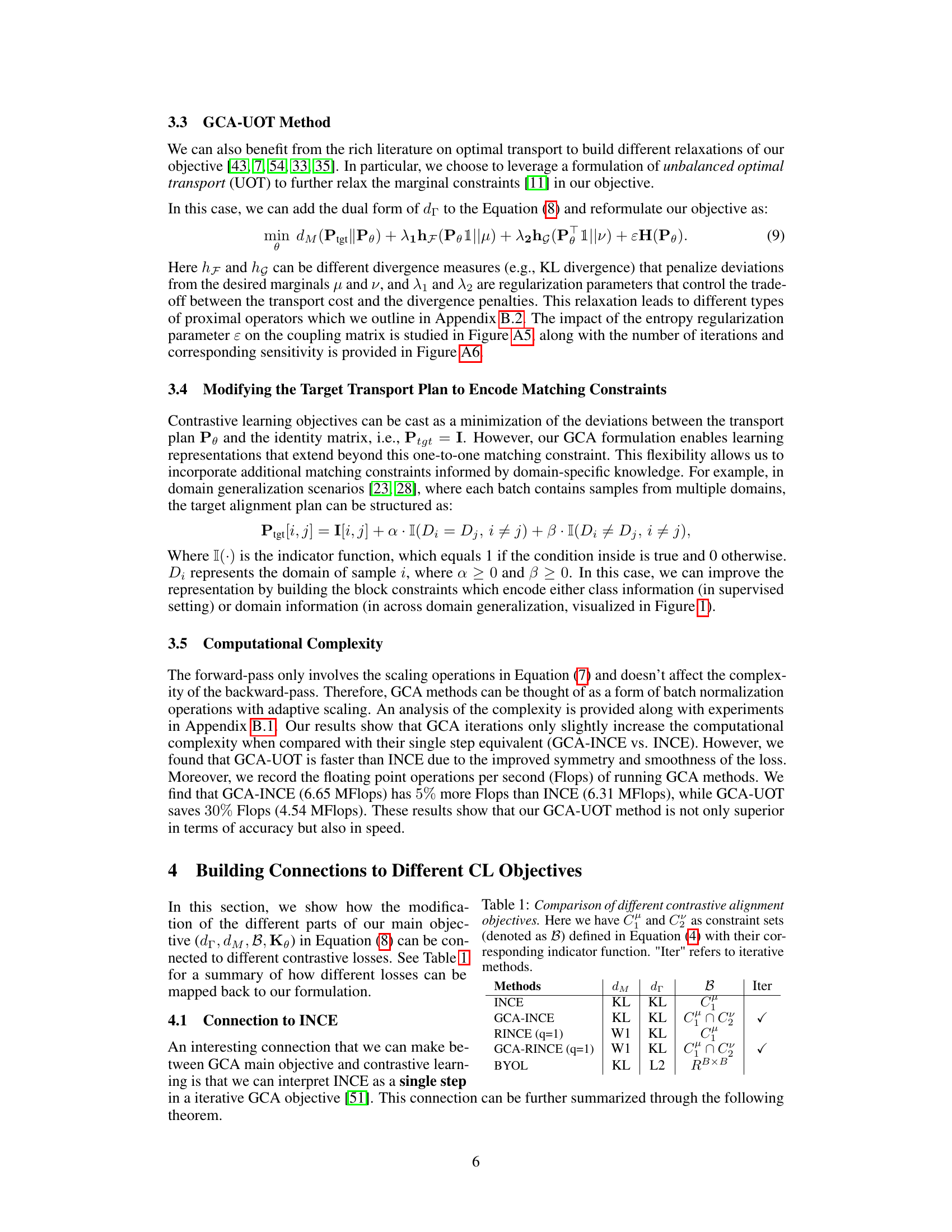

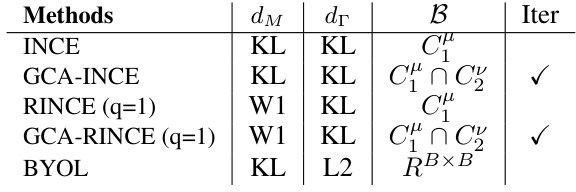

This table summarizes the connections between the proposed Generalized Contrastive Alignment (GCA) framework and existing contrastive learning methods (INCE, RINCE, BYOL). It shows how different choices for the divergence measures (dM, dr), constraint sets (B), and iterative algorithms (Iter) in the GCA formulation correspond to the specific objectives and algorithms of these existing methods. The table highlights the flexibility of the GCA framework to encompass various contrastive learning approaches by adjusting these parameters.
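One such correspondence can be checked numerically. The sketch below assumes, and this summary does not spell it out, that the InfoNCE (INCE) objective corresponds to a single row-normalization step applied to the Gibbs kernel of the similarity matrix, followed by a KL comparison with an identity target. The function names and the temperature `tau` are illustrative.

```python
import numpy as np

def info_nce(sim, tau=0.5):
    """Standard InfoNCE loss with positives on the diagonal."""
    logits = sim / tau
    m = logits.max(axis=1, keepdims=True)                 # for numerical stability
    lse = m[:, 0] + np.log(np.exp(logits - m).sum(axis=1))
    return np.mean(lse - np.diag(logits))

def row_projection_loss(sim, tau=0.5):
    """Loss after one row-normalization of the kernel exp(sim / tau)."""
    K = np.exp(sim / tau)
    P = K / K.sum(axis=1, keepdims=True)                  # single row-scaling step
    return -np.mean(np.log(np.diag(P)))

rng = np.random.default_rng(1)
sim = rng.uniform(-1.0, 1.0, size=(6, 6))                 # toy similarity matrix
loss_nce = info_nce(sim)
loss_row = row_projection_loss(sim)
print(loss_nce, loss_row)
```

Under this reading, running further alternating row and column scalings instead of stopping after one step would move from the InfoNCE special case toward a full transport plan.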

Full paper