TL;DR

AI safety research faces the problem of misspecified reward functions: the objective an AI system optimizes, as defined by its reward function, does not match what the user actually intends. Existing methods define misspecification only implicitly and offer no clear account of its causes or remedies. The result is AI systems that fail to achieve their intended goals, which highlights the need for robust solutions.

This paper introduces Expectation Alignment (EAL), a framework that uses theory of mind to formally define and explain misspecified objectives. EAL sheds light on the limitations of existing methods and motivates a new interactive algorithm, which uses the specified reward function to infer the expectations the user may hold about the agent's behavior and maps this inference to linear programs that can be solved efficiently. Evaluated on standard benchmarks against Inverse Reward Design (IRD), the method handles reward uncertainty better, suggesting its potential for advancing AI safety research.
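
The summary does not spell out the paper's linear programs. For orientation only, the block below shows the standard occupancy-measure LP for a discounted MDP, a common starting point for LP-based planning. Here $x(s,a)$ is the discounted state-action occupancy, $R$ the specified reward, $P$ the transition model, $\gamma$ the discount factor, and $\mu_0$ the initial-state distribution. The last constraint is a hypothetical illustration of how one kind of expectation (the agent never visits a set $S_{\text{avoid}}$ of states) could be written as a linear constraint; it is an assumption, not the paper's formulation.

```latex
\begin{aligned}
\max_{x \ge 0} \quad & \sum_{s,a} x(s,a)\, R(s,a) \\
\text{s.t.} \quad & \sum_{a} x(s',a) \;-\; \gamma \sum_{s,a} P(s' \mid s,a)\, x(s,a) \;=\; \mu_0(s') && \forall s' \in S \\
& \sum_{a} x(s,a) \;=\; 0 && \forall s \in S_{\text{avoid}} \quad \text{(hypothetical expectation constraint)}
\end{aligned}
```

A policy is recovered as $\pi(a \mid s) = x(s,a) / \sum_{a'} x(s,a')$ wherever the denominator is positive. Because an expectation of this kind is linear in $x$, checking or enforcing it stays within a single LP, which is one reason LP formulations are attractive here.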

Why does it matter?

This paper matters because it tackles reward misspecification, a critical AI safety problem that hinders the development of reliable and beneficial AI systems. It proposes a novel framework and algorithm that offer potential solutions and open new avenues for research in AI alignment. By formalizing the problem and introducing a query-based approach, it offers a more practical and effective way to address misaligned AI objectives than existing methods. The work is highly relevant to the growing field of AI safety and fits the broader effort to align AI systems with human values.

Visual Insights

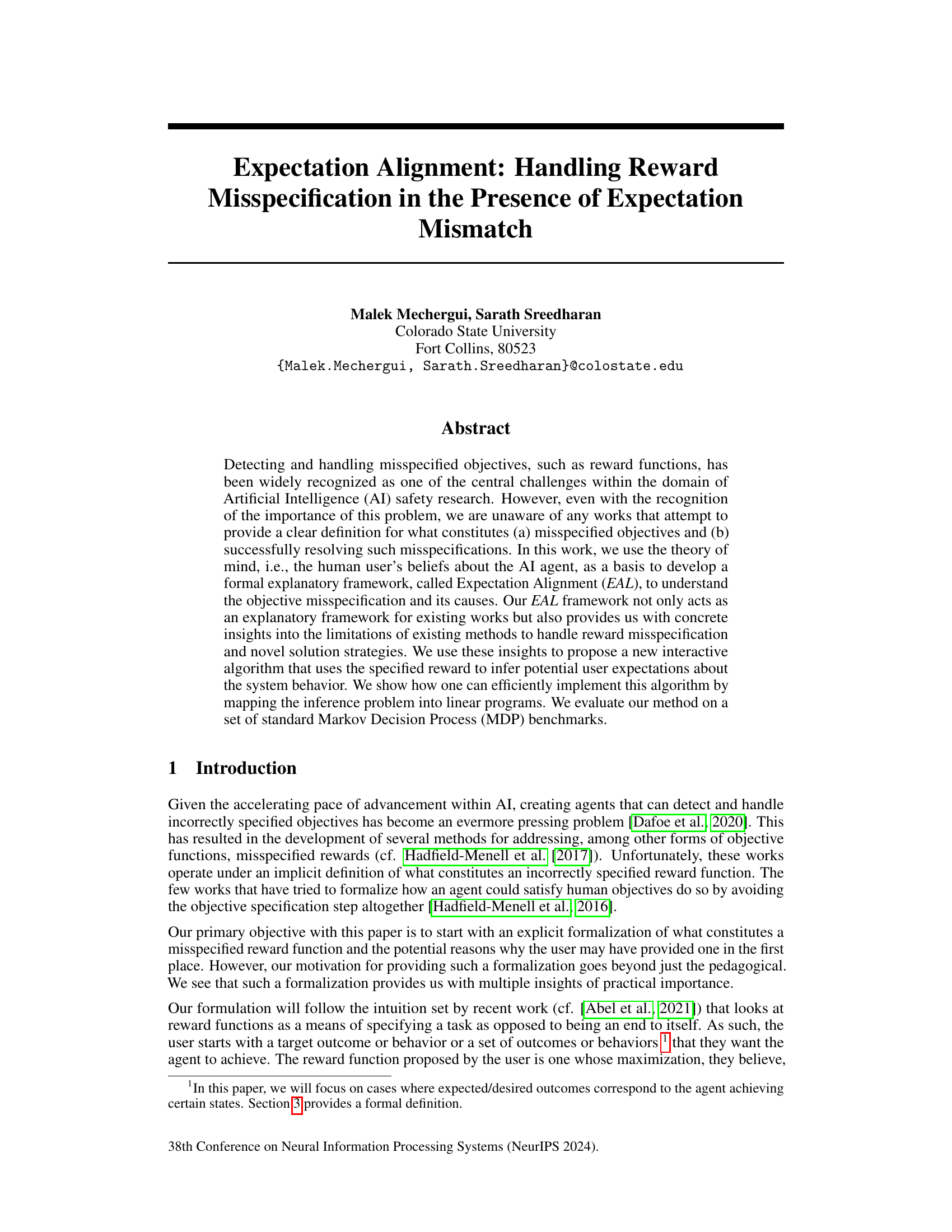

This figure illustrates how a human user creates a reward function for an AI agent. The user's beliefs about the task and the agent's capabilities, together with their expectations about the agent's behavior, inform their choice of reward function. The AI agent (represented in the figure by its model) then uses this reward function to determine its behavior, producing a policy. A mismatch between the policy the user expects and the policy the agent actually produces indicates a misspecified reward function.

Figure 1: A diagrammatic overview of how the specification of a reward function plays a role in whether the user's expectations are met.
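
To make the mismatch in Figure 1 concrete, here is a toy sketch on a hypothetical 2x3 grid world (not one of the paper's benchmarks). The user writes a reward that pays for reaching the goal and charges for each step, but silently expects the agent never to cross a lava cell. Value iteration in the agent's model then yields an optimal policy that cuts straight through the lava. All names (`LAVA`, `plan`, `user_expectation_met`, and so on) are illustrative assumptions.

```python
# Hypothetical 2x3 grid world, an illustration only (not a benchmark from the paper):
#   row 0:  S  L  G    (S = start, L = lava, G = goal)
#   row 1:  .  .  .
ROWS, COLS = 2, 3
START, LAVA, GOAL = (0, 0), (0, 1), (0, 2)
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
STATES = [(r, c) for r in range(ROWS) for c in range(COLS)]
GAMMA = 0.95


def step(state, action):
    """Deterministic transition in the agent's model; bumping a wall leaves the state unchanged."""
    dr, dc = ACTIONS[action]
    r, c = state[0] + dr, state[1] + dc
    return (r, c) if 0 <= r < ROWS and 0 <= c < COLS else state


def specified_reward(next_state):
    """What the user wrote down: +10 on reaching the goal, -1 per step, nothing about lava."""
    return 10.0 if next_state == GOAL else -1.0


def q_value(reward, V, s, a):
    nxt = step(s, a)
    return reward(nxt) + GAMMA * V[nxt]


def plan(reward, iters=100):
    """Value iteration in the agent's model (goal is terminal), then the greedy optimal policy."""
    V = {s: 0.0 for s in STATES}
    for _ in range(iters):
        V = {s: 0.0 if s == GOAL else max(q_value(reward, V, s, a) for a in ACTIONS)
             for s in STATES}
    return {s: max(ACTIONS, key=lambda a: q_value(reward, V, s, a))
            for s in STATES if s != GOAL}


def rollout(policy, max_steps=20):
    """Follow the policy from START until the goal (or a step cap) is reached."""
    path = [START]
    while path[-1] != GOAL and len(path) <= max_steps:
        path.append(step(path[-1], policy[path[-1]]))
    return path


def user_expectation_met(path):
    """The user's expectation, held in their head but never encoded in the reward."""
    return LAVA not in path


path = rollout(plan(specified_reward))
print(path, "expectation met:", user_expectation_met(path))
# [(0, 0), (0, 1), (0, 2)] expectation met: False
```

Penalizing lava entry in the reward would make the planned path detour through the bottom row, but only if the user thinks to write that term; the mismatch arises precisely because the expectation lives in the user's head rather than in the reward.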

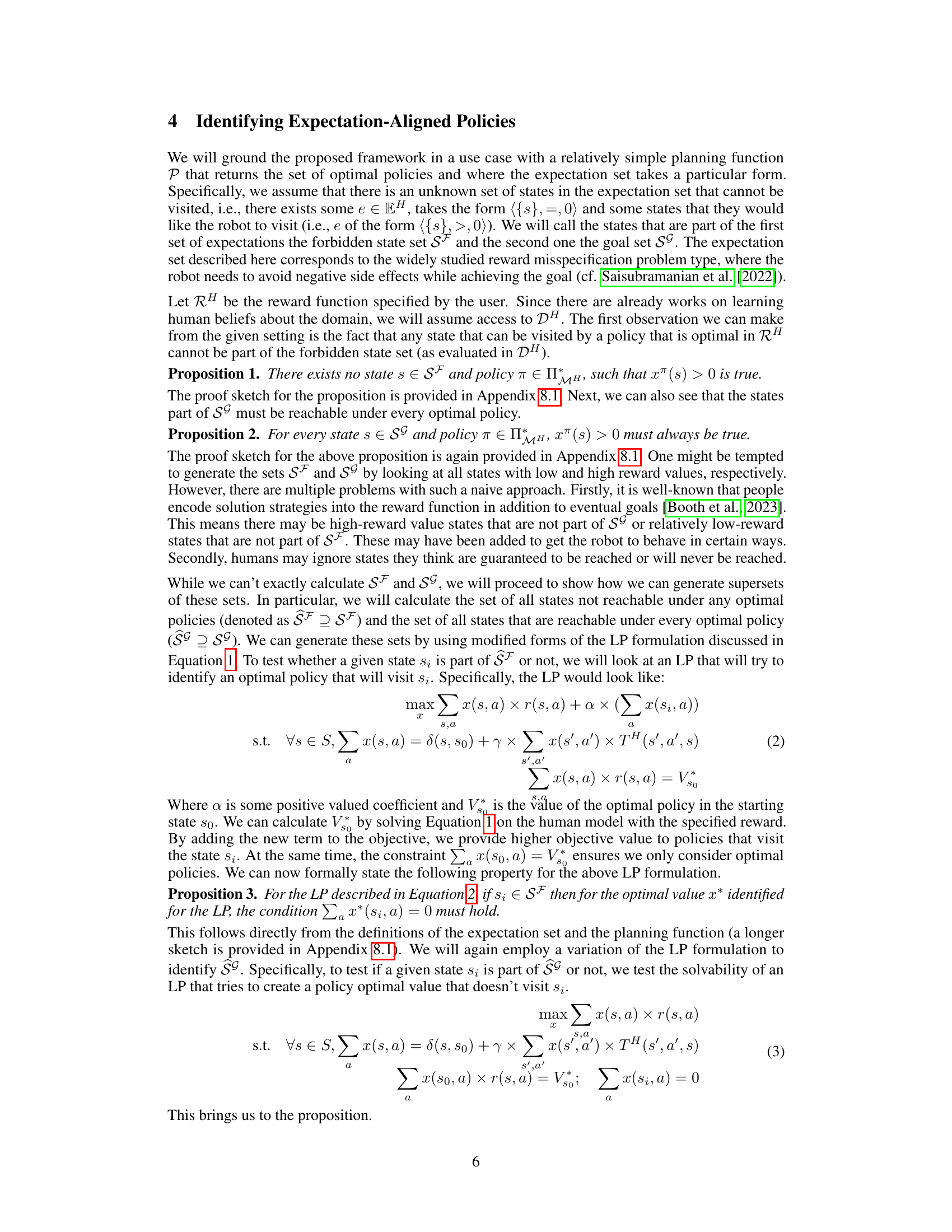

This table compares the proposed method with Inverse Reward Design (IRD). The proposed method uses a query-based approach to identify expectation-aligned policies. For the proposed method, the table reports the number of queries required and the time taken to find such a policy; for IRD, it reports the number of expectations violated by the generated policy and the time taken. The key finding is that the proposed method is significantly faster than IRD and, unlike IRD, guarantees that no expectations are violated.

Table 1: For our method, the table reports the number of queries raised and the time taken. For IRD, it shows the number of expectations violated by the generated policy and the time taken. Note that our method is guaranteed not to choose a policy that results in violated expectations.
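
The query-based idea behind Table 1 can be illustrated with a small sketch. It assumes a hypothetical grid in which lettered cells are *candidate* expectations ("the user may expect the agent to avoid this cell") and a simulated user who answers yes/no questions. The agent plans, queries the user only about unresolved candidates its current plan would touch, blocks confirmed ones, and re-plans. Because the reward here is a uniform step cost, breadth-first search stands in for the optimal planner; this is not the paper's algorithm, which infers candidate expectations from the reward and uses linear programs. `GRID`, `TRUE_AVOID`, and `expectation_aligned_path` are illustrative names.

```python
from collections import deque

# Hypothetical grid (illustration only): S = start, G = goal,
# A/B/C = cells the agent suspects the user might expect it to avoid.
GRID = ["SA.G",
        ".B..",
        "...C"]
TRUE_AVOID = {"A", "C"}  # simulated user's real expectations (unknown to the agent)


def find(grid, ch):
    return next((r, c) for r, row in enumerate(grid) for c, x in enumerate(row) if x == ch)


def shortest_path(grid, blocked):
    """BFS from S to G that never enters a cell whose label is in `blocked`."""
    rows, cols = len(grid), len(grid[0])
    start, goal = find(grid, "S"), find(grid, "G")
    parent, queue = {start: None}, deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:  # walk parents back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((0, 1), (1, 0), (0, -1), (-1, 0)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in parent
                    and grid[nr][nc] not in blocked):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def expectation_aligned_path(grid, candidates, user_says_avoid):
    """Plan, query about unresolved candidates on the plan, constrain, and re-plan."""
    confirmed, rejected, queries = set(), set(), 0
    while True:
        path = shortest_path(grid, blocked=confirmed)
        if path is None:
            return None, queries
        unresolved = [grid[r][c] for r, c in path
                      if grid[r][c] in candidates and grid[r][c] not in confirmed | rejected]
        if not unresolved:  # every candidate the plan touches is known to be acceptable
            return path, queries
        label = unresolved[0]
        queries += 1
        (confirmed if user_says_avoid(label) else rejected).add(label)


path, queries = expectation_aligned_path(GRID, {"A", "B", "C"}, lambda x: x in TRUE_AVOID)
print("queries:", queries, "path:", path)
```

In this toy run the agent asks about A (confirmed) and then B (rejected), and never asks about C because no plan it considers passes through C; the returned path therefore violates none of the candidate expectations the user actually holds.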
