↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on 3D Gaussian splatting and novel view synthesis. It addresses a critical limitation in rendering speed by introducing a novel fragment pruning technique, significantly boosting efficiency on resource-constrained devices. This opens avenues for real-time applications in VR/AR and related fields, and inspires further research in efficient rendering of complex 3D scenes.

Visual Insights#

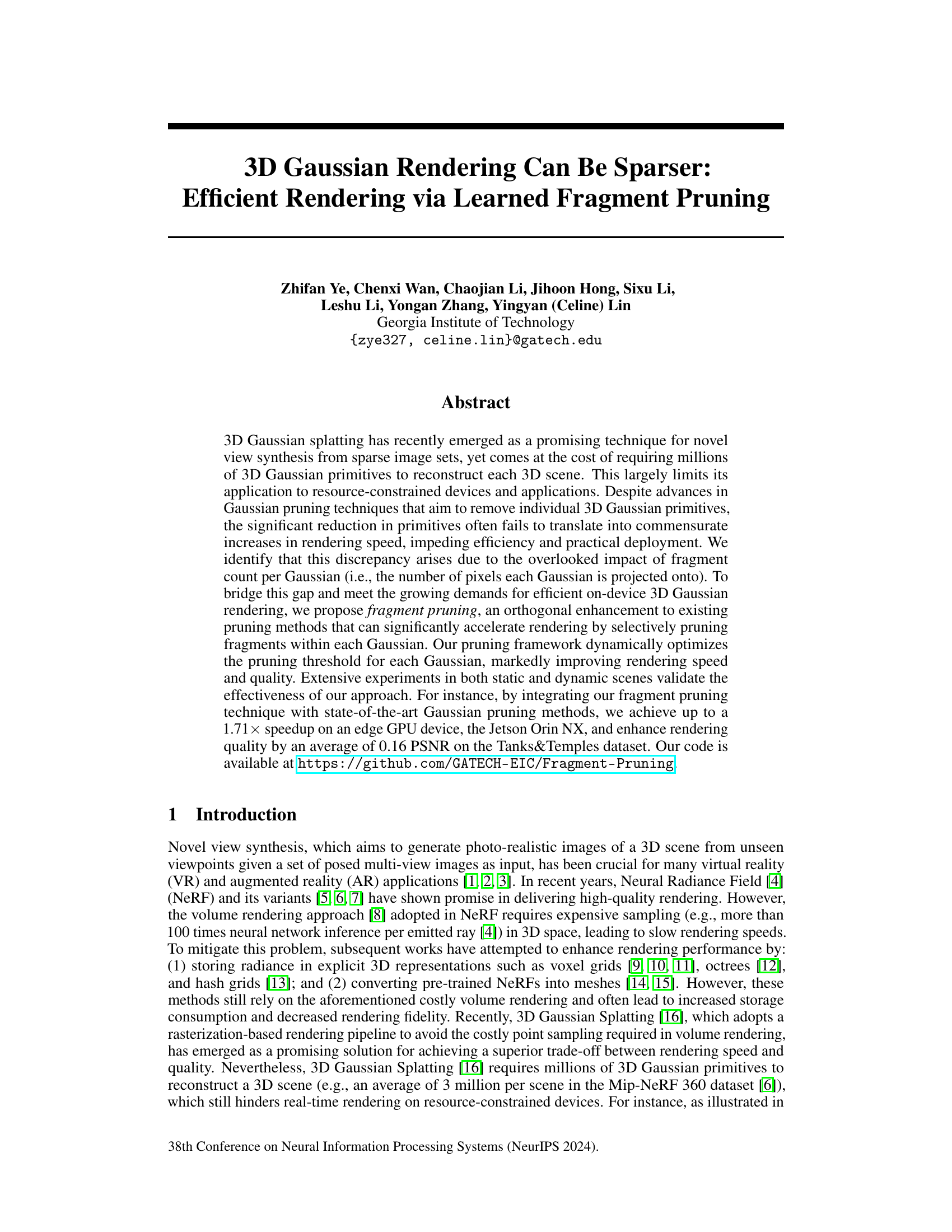

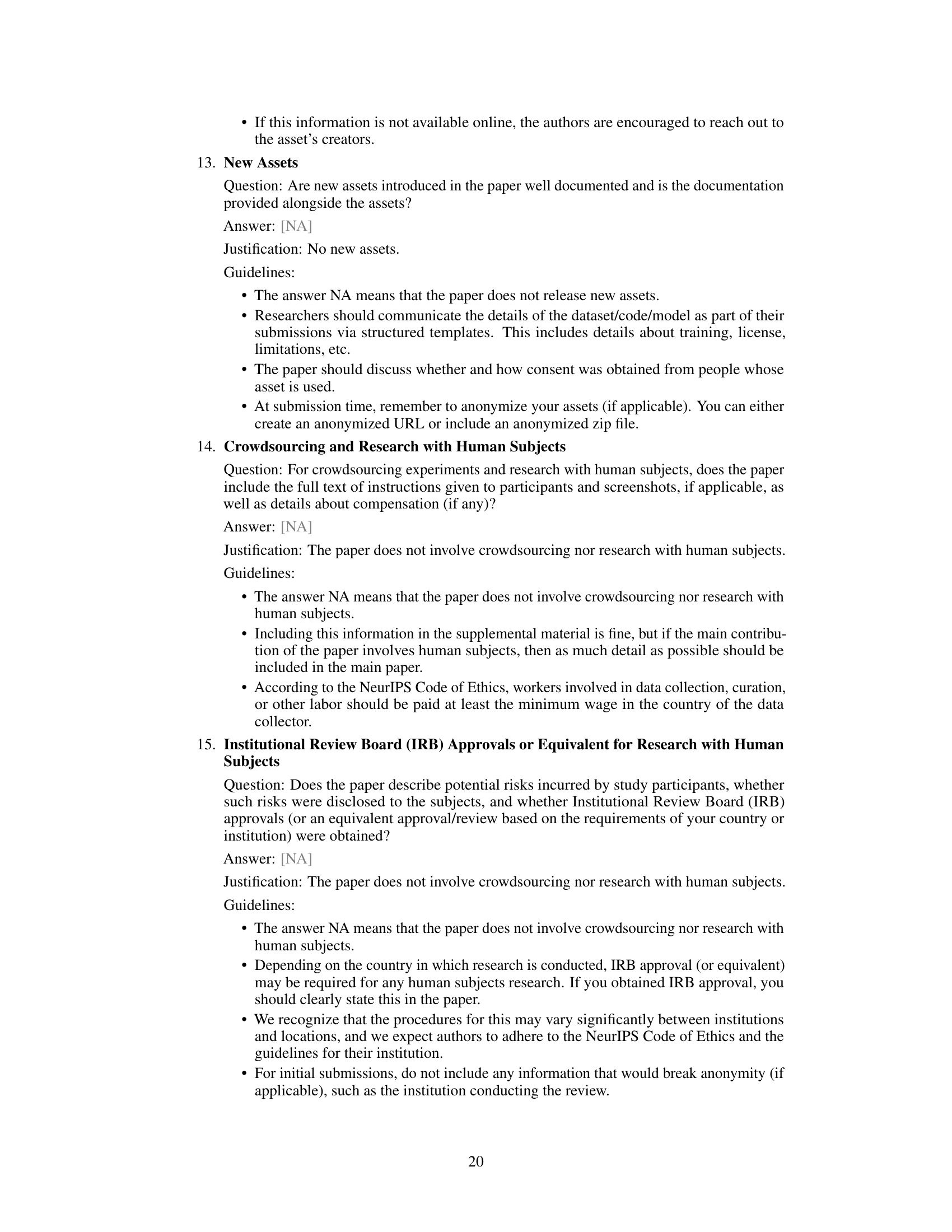

Figure 1 presents a comparison of the proposed fragment pruning approach with existing methods. Subfigure (a) shows the fragment count per pixel before and after applying the fragment pruning technique. Subfigure (b) provides a comparison of rendering speed (FPS) and image quality (PSNR) for various methods, including the proposed approach, demonstrating the efficiency gains achieved with fragment pruning. Note that the proposed method is applied on top of 3DGS and Mini-Splatting, further enhancing their performance.

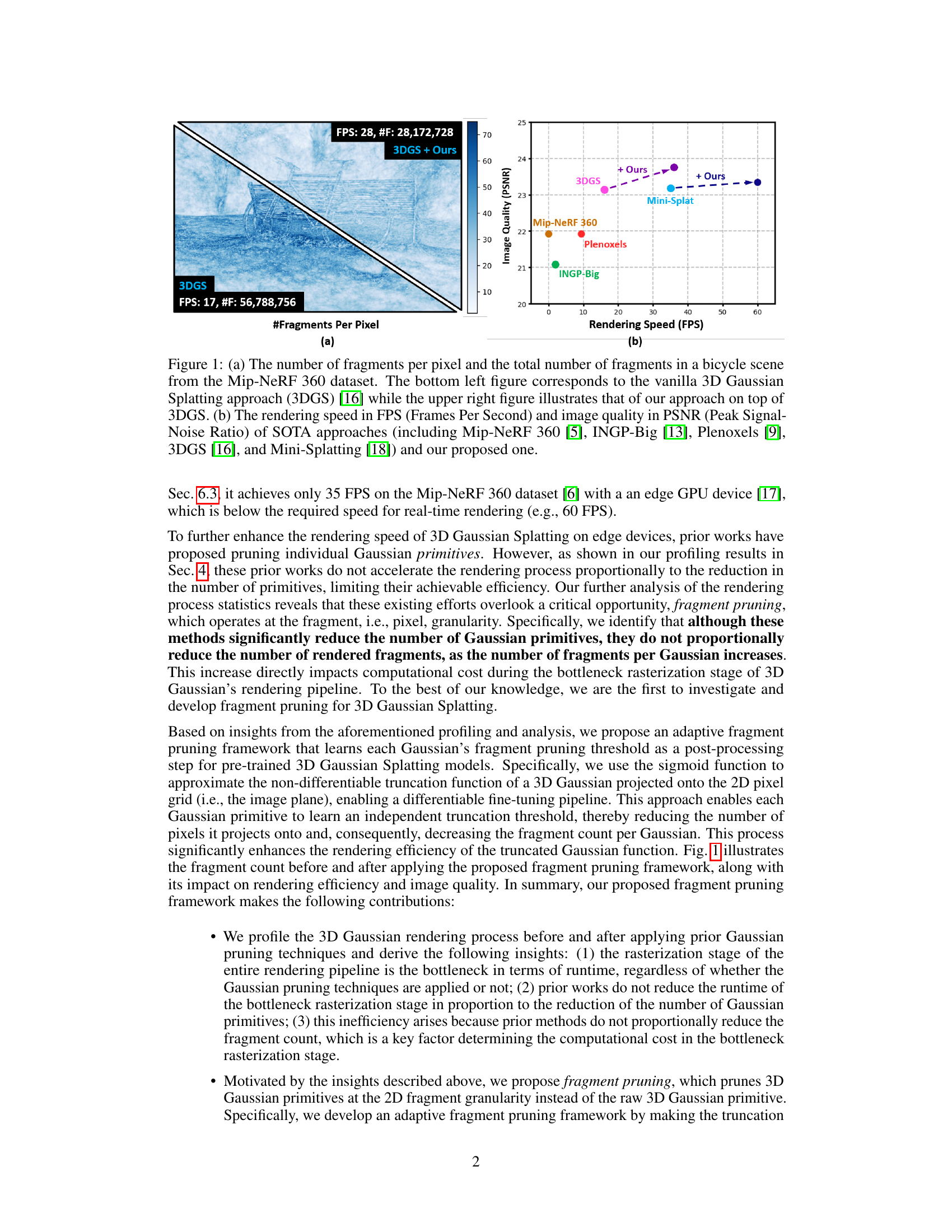

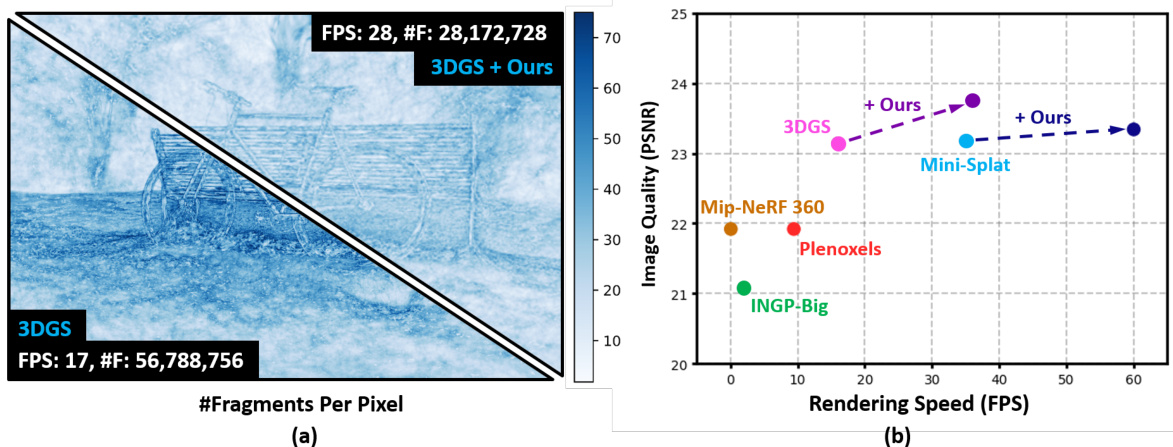

This table shows a breakdown of the rendering time for each stage (Projection, Sorting, Rasterization) of the 3D Gaussian Splatting pipeline for four different scenes. It compares the performance of the vanilla 3D Gaussian Splatting method with a state-of-the-art pruning method (Mini-Splatting). The table highlights the significant time spent in the Rasterization stage and how pruning affects the number of Gaussians and fragments, impacting overall rendering speed. The ‘#F/#G’ column shows the ratio of fragments to Gaussians, illustrating the disproportionate increase in fragments compared to the reduction in Gaussians when pruning.

In-depth insights#

Learned Fragment Pruning#

The proposed “Learned Fragment Pruning” technique presents a novel approach to optimize 3D Gaussian splatting rendering. Instead of solely pruning entire Gaussian primitives, this method intelligently prunes individual fragments (pixels) within each Gaussian. This is achieved by learning a unique truncation threshold for each Gaussian, dynamically adjusting the number of fragments rendered per pixel. This approach addresses the inefficiency of existing Gaussian pruning techniques which don’t always translate reduced primitive count into commensurate speed improvements. By learning these thresholds through a differentiable pipeline, the framework ensures compatibility with existing pre-trained models, enhancing both rendering speed and image quality. The results demonstrate significant speedups and improved PSNR, showcasing the effectiveness of this orthogonal approach to existing pruning methods. Adaptive thresholding is crucial for handling varying Gaussian sizes and spatial distributions, thereby preventing over-pruning or insufficient pruning, making it more efficient than uniform approaches.

3D Gaussian Bottleneck#

The concept of a “3D Gaussian Bottleneck” in novel view synthesis highlights the computational limitations of using 3D Gaussian splatting for efficient rendering. While 3D Gaussian splatting offers advantages in speed and quality over traditional volume rendering, its reliance on millions of Gaussian primitives for scene reconstruction creates a performance bottleneck, particularly during the rasterization stage. This bottleneck arises because the number of fragments (pixels a Gaussian affects) often significantly exceeds the number of Gaussians, negating the benefits of reduced primitive counts achieved through Gaussian pruning techniques. The core issue is the disproportionate computational cost of fragment processing versus Gaussian processing. This inefficiency makes real-time rendering on resource-constrained devices challenging. Addressing this bottleneck requires innovative approaches that move beyond simply reducing Gaussian primitives, focusing instead on efficient fragment management and reduction. This may involve techniques that selectively prune low-impact fragments, or optimize the rasterization process itself to handle high fragment counts more efficiently. Solutions exploring these avenues are critical for unlocking the full potential of 3D Gaussian splatting and enabling its widespread use in real-time applications.

Adaptive Thresholding#

Adaptive thresholding, in the context of image processing and computer vision, is a technique that dynamically adjusts the threshold value used to segment an image, unlike global thresholding which uses a single fixed value. This adaptability is crucial because images often exhibit varying illumination levels and contrast across different regions. Adaptive methods offer significant advantages by handling these variations effectively, leading to more accurate and robust segmentation results. Several algorithms achieve this by calculating local thresholds based on small neighborhoods or regions of interest. Common strategies involve calculating the mean or median intensity within a window, adapting to local contrast changes. The size and shape of the window itself can be a parameter, influencing the sensitivity and detail of the resulting segmentation. Therefore, optimizing the window size is important for balancing noise reduction with preserving fine details. The performance of adaptive thresholding techniques heavily depends on the characteristics of the input image and the choice of algorithm. Selecting an appropriate method requires careful consideration of factors such as noise level, texture, and object shape within the image.

Edge Device Speedup#

The research paper explores accelerating 3D Gaussian splatting, a novel view synthesis technique, on edge devices. A key finding is that while existing Gaussian pruning methods reduce the number of primitives, they don’t proportionally improve rendering speed due to the overlooked impact of fragment count per Gaussian. The paper introduces fragment pruning, an orthogonal approach that dynamically optimizes pruning thresholds for each Gaussian, leading to significant speed gains. Experimental results demonstrate substantial speedups on an edge GPU (Jetson Orin NX), surpassing existing state-of-the-art Gaussian pruning techniques. The combination of fragment and Gaussian pruning yields up to 1.71x speedup, demonstrating the effectiveness of this novel approach for efficient on-device rendering of complex 3D scenes. This efficiency enhancement is particularly crucial for resource-constrained applications like AR/VR. The improvements in rendering speed are achieved without a significant loss of visual quality; in fact, quality is even enhanced in some cases, with an average PSNR improvement of 0.16.

Future Research#

The ‘Future Research’ section of this paper presents exciting avenues for extending the work on fragment pruning in 3D Gaussian splatting. One key area is integrating threshold learning directly into the original pre-training process, eliminating the need for a separate, time-consuming fine-tuning step. This would significantly streamline the workflow and potentially improve efficiency. Another promising direction is applying this fragment pruning technique to other related tasks, such as 3D content generation and dynamic scene reconstruction. The authors mention that their method could be applied to the aforementioned using 4D Gaussian features, creating a path towards more efficient, high-quality dynamic scene rendering. Finally, exploring the application of fragment pruning in conjunction with other advanced Gaussian splatting techniques, such as those focusing on model compression or improved rendering quality, could lead to substantial improvements in rendering speed and visual fidelity. Investigating these possibilities holds immense potential for advancing the field of 3D Gaussian splatting and its various applications.

More visual insights#

More on figures

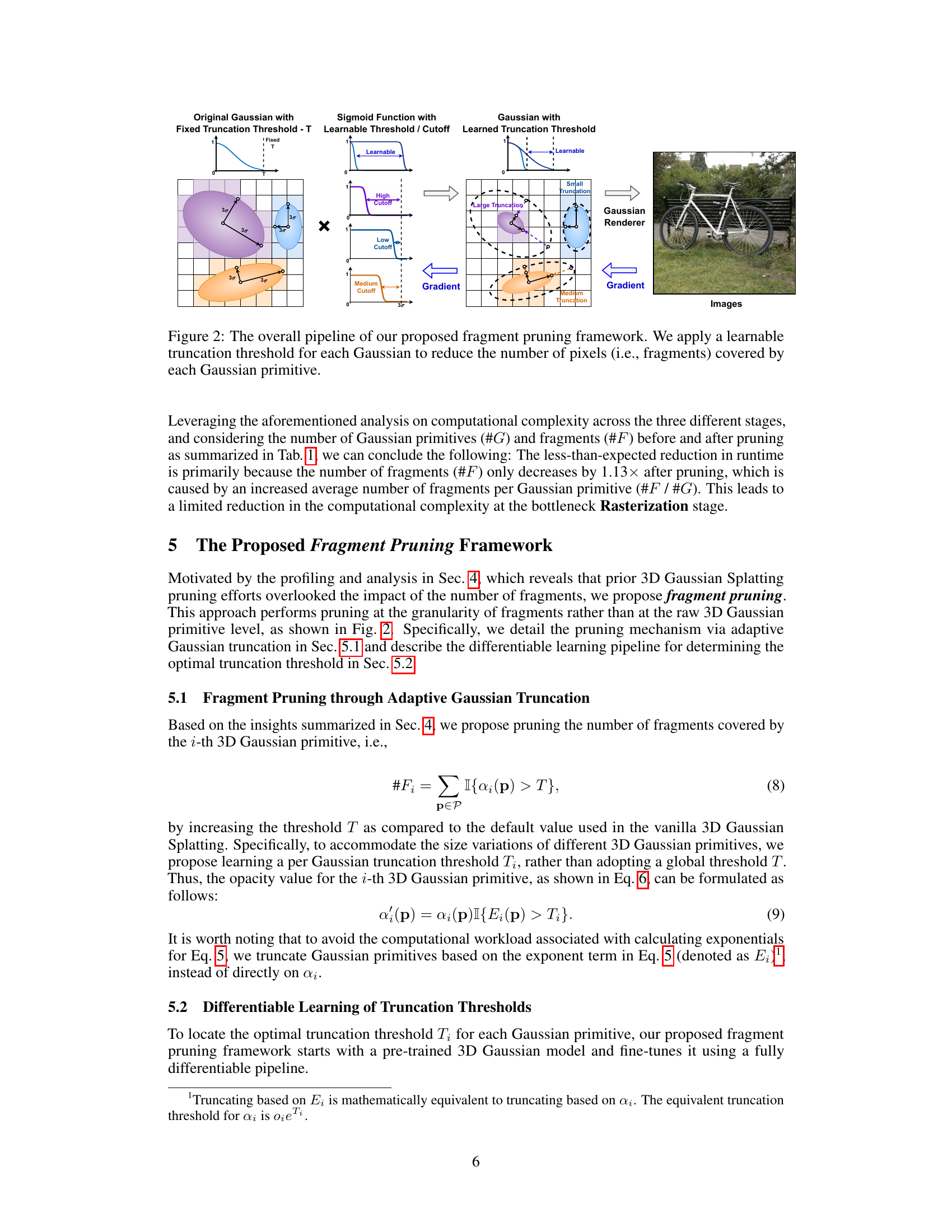

This figure illustrates the proposed fragment pruning framework. It shows how a learnable truncation threshold is applied to each Gaussian primitive to reduce the number of pixels it projects onto. The process starts with an original Gaussian with a fixed truncation threshold, then uses a sigmoid function with a learnable threshold to approximate the non-differentiable truncation function. This allows for each Gaussian to learn its own optimal threshold, thereby reducing fragment count and improving rendering efficiency. The figure includes visual representations of the Gaussians at different stages of this process, demonstrating the effect of the learnable threshold on truncation.

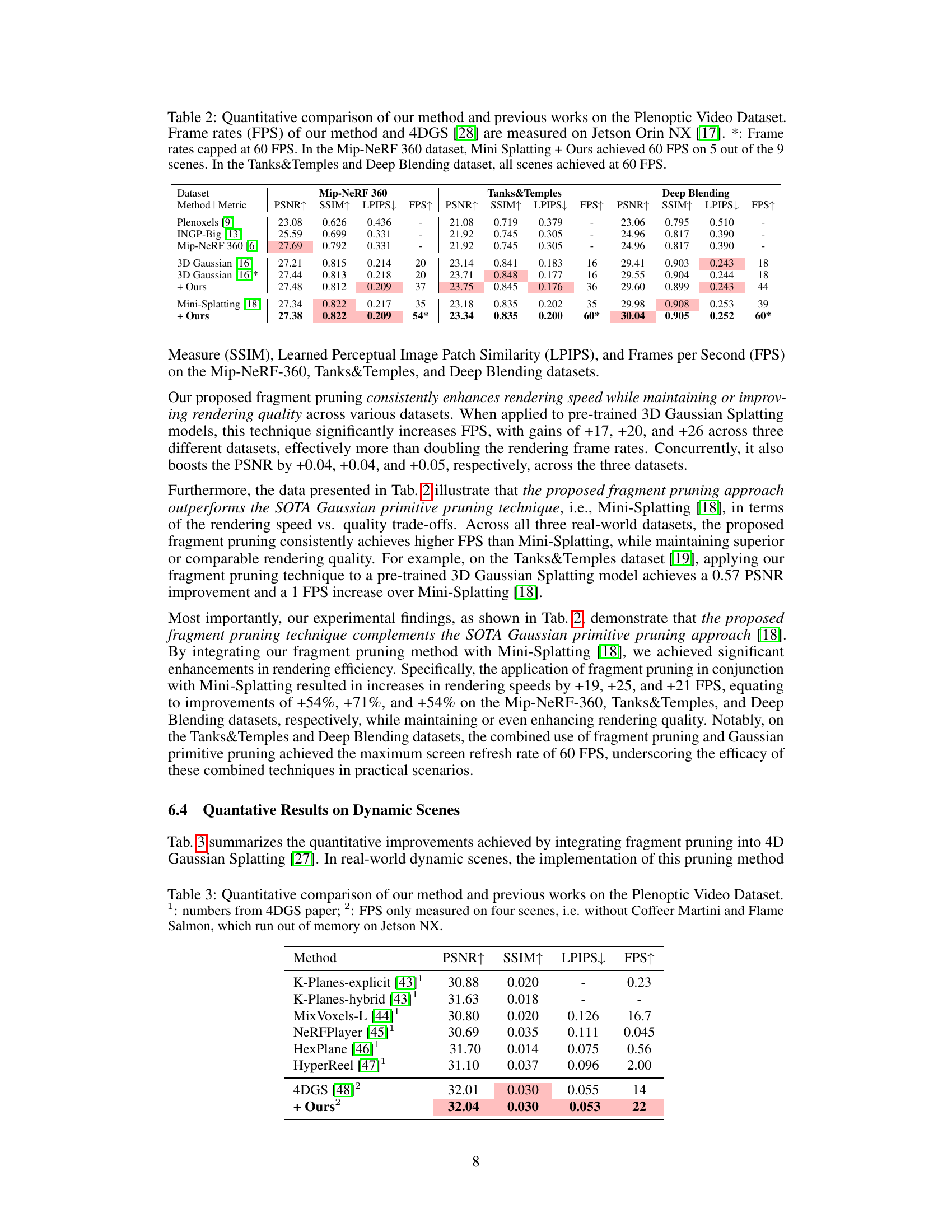

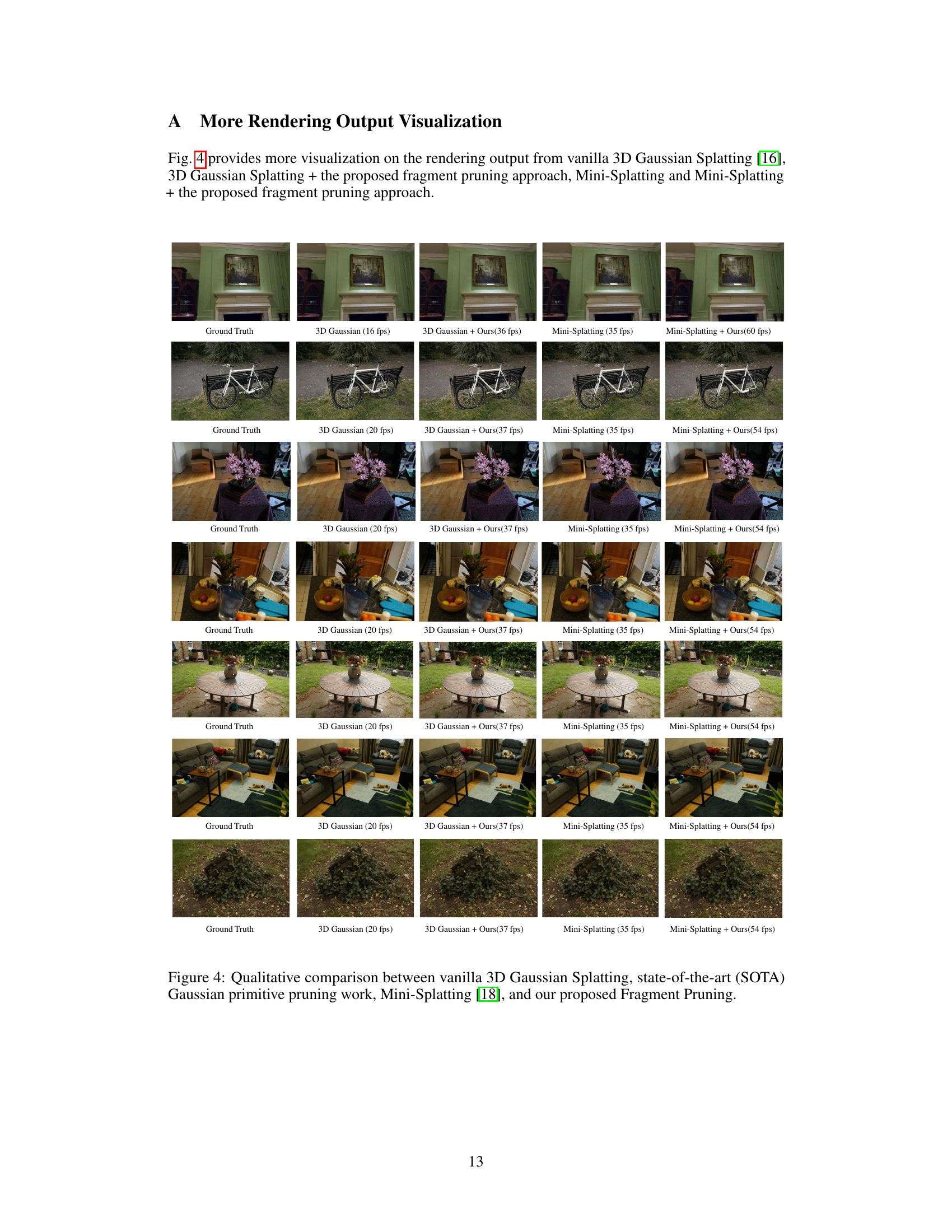

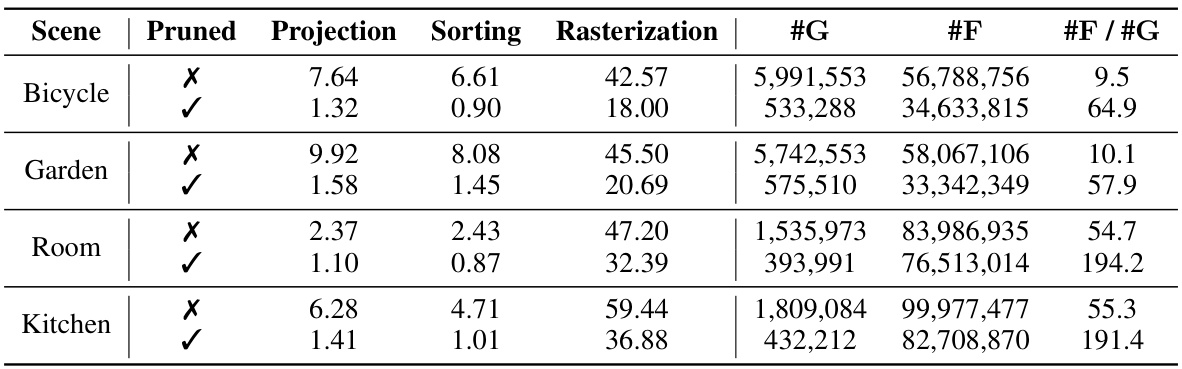

This figure shows a qualitative comparison of rendering results from four different methods: vanilla 3D Gaussian Splatting, 3D Gaussian Splatting with the proposed fragment pruning, Mini-Splatting, and Mini-Splatting with the proposed fragment pruning. The images demonstrate the visual improvements in rendering quality and efficiency achieved by incorporating the proposed fragment pruning technique. The comparison highlights the reduction in artifacts and improved clarity, especially in distant regions, resulting from the application of the proposed approach.

This figure shows a qualitative comparison of rendering results between four methods: vanilla 3D Gaussian Splatting, 3D Gaussian Splatting with the proposed fragment pruning, Mini-Splatting, and Mini-Splatting with the proposed fragment pruning. Each row represents a different scene from the dataset. The results demonstrate that the proposed fragment pruning method improves rendering quality, especially in distant regions, while also increasing rendering speed.

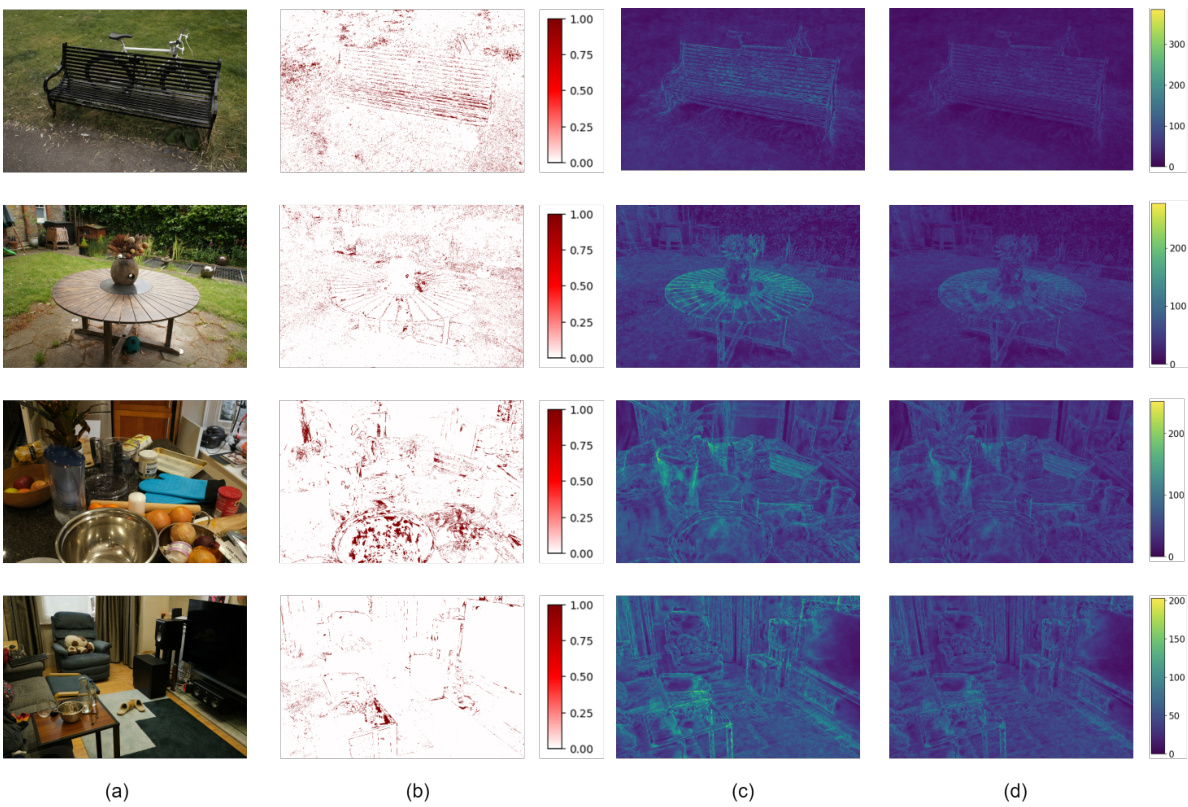

This figure visualizes how fragment pruning improves rendering quality and reduces fragment density. It shows a comparison between the ground truth image (a), regions where pruning improves fidelity (b), fragment density before pruning (c), and fragment density after pruning (d). The comparison highlights the reduction of fragment density, particularly along object edges, leading to improved rendering quality.

More on tables

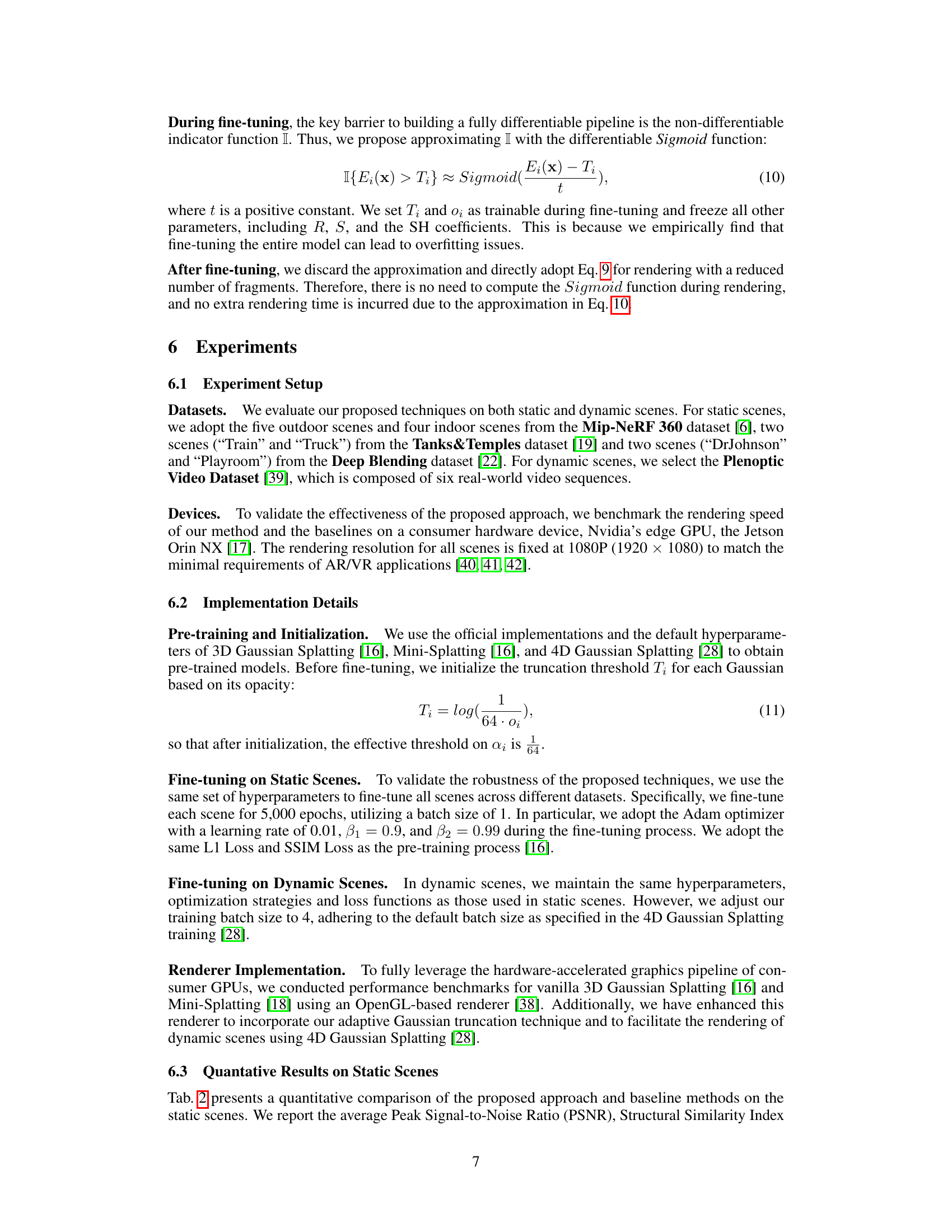

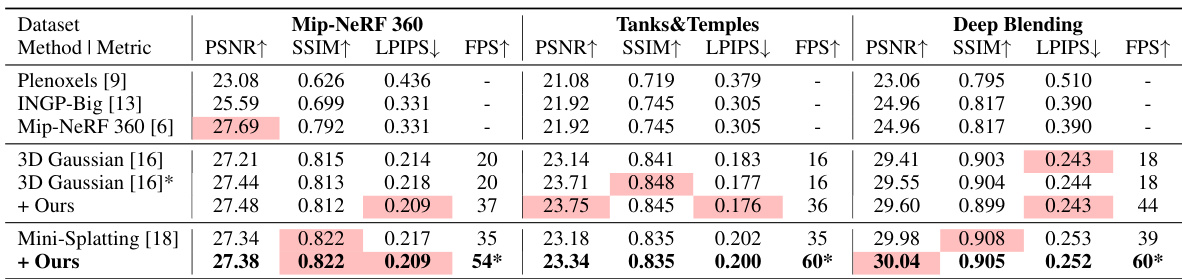

This table presents a quantitative comparison of the proposed fragment pruning method and several baseline methods on three datasets: Mip-NeRF 360, Tanks & Temples, and Deep Blending. It compares the Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM), Learned Perceptual Image Patch Similarity (LPIPS), and Frames Per Second (FPS). The results show that the proposed method consistently improves FPS across all datasets, while maintaining or improving PSNR and SSIM, often outperforming state-of-the-art Gaussian primitive pruning techniques.

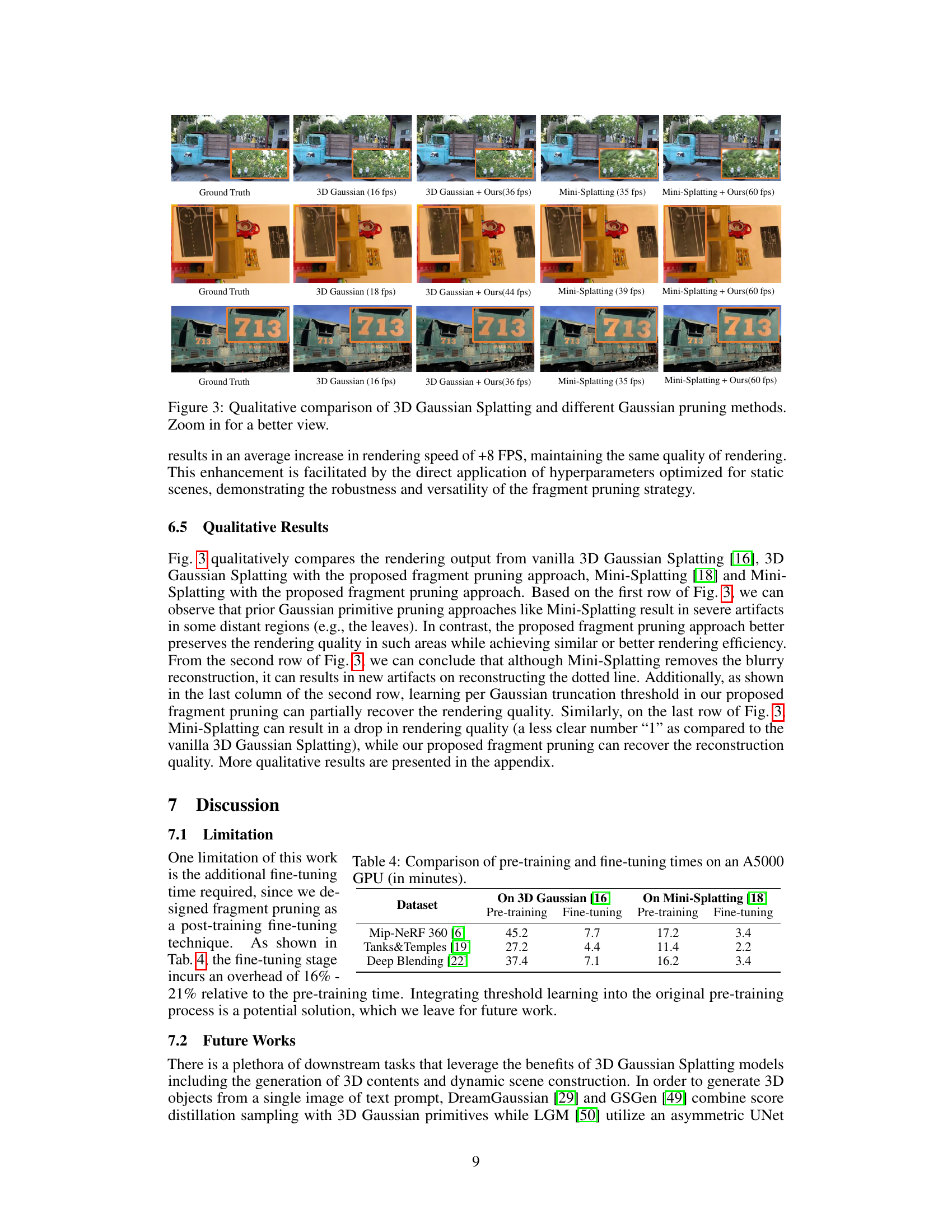

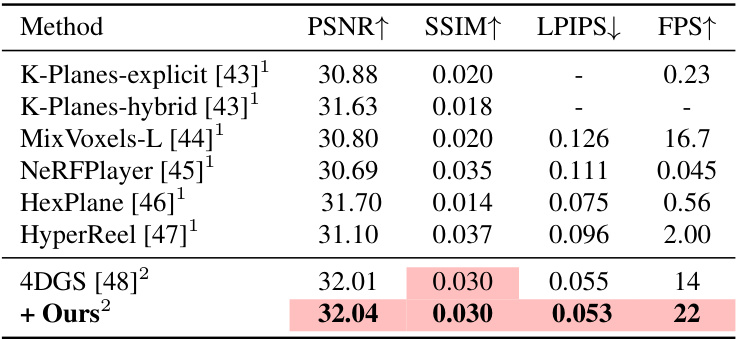

This table compares the proposed method’s performance with other state-of-the-art methods on the Plenoptic Video Dataset. It shows quantitative metrics including PSNR, SSIM, LPIPS, and FPS. Note that the FPS for some methods was limited due to memory constraints on the hardware.

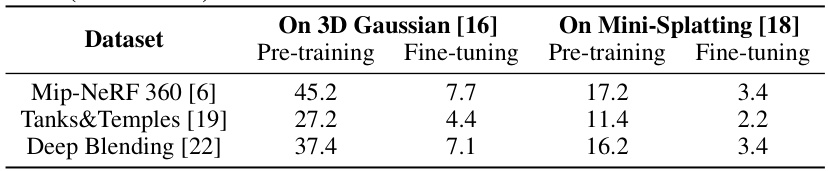

This table shows the pre-training and fine-tuning times on an NVIDIA A5000 GPU for three different datasets: Mip-NeRF 360, Tanks & Temples, and Deep Blending. The times are broken down for two different methods: vanilla 3D Gaussian Splatting and Mini-Splatting. It demonstrates the additional time required for the fine-tuning stage of the proposed fragment pruning method.

Full paper#