TL;DR#

Differentially private (DP) machine learning struggles with performance. Researchers explored using public data to boost DP algorithms’ accuracy. However, it’s unclear how much public data can actually help, and whether this approach is even optimal. Prior works struggled to provide clear theoretical guidance on leveraging public data for DP learning, especially when it is unlabeled. This research addresses this crucial knowledge gap.

This research presents both theoretical and practical contributions. It introduces new lower bounds for DP stochastic convex optimization, proving when simply treating all data as private or discarding the public data is the best possible solution. Furthermore, it proposes novel methods for incorporating unlabeled public data in supervised learning, demonstrating significant improvements in performance without compromising privacy. The study extends its results beyond generalized linear models to broader hypothesis classes, providing strong theoretical justification and broader applicability.

Key Takeaways#

Why does it matter?#

This paper is crucial because it bridges the gap between theory and practice in differentially private machine learning. It provides tight theoretical bounds for public-data assisted private learning, highlighting the limitations and potential of using public data to improve privacy-preserving algorithms. This work guides future research by identifying promising avenues for leveraging public information while protecting private data effectively. The novel methods and lower bounds established lay the groundwork for future advancements in the field.

Visual Insights#

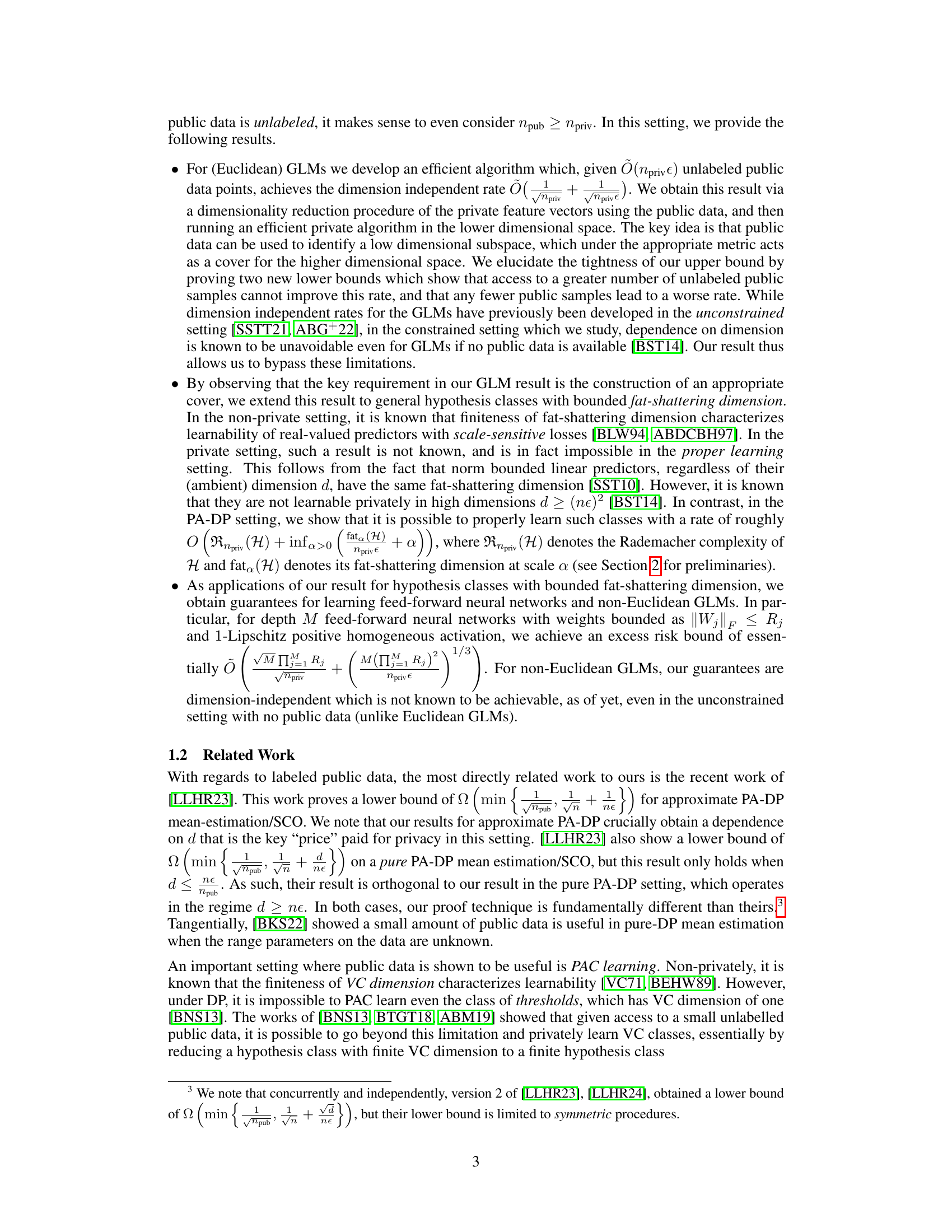

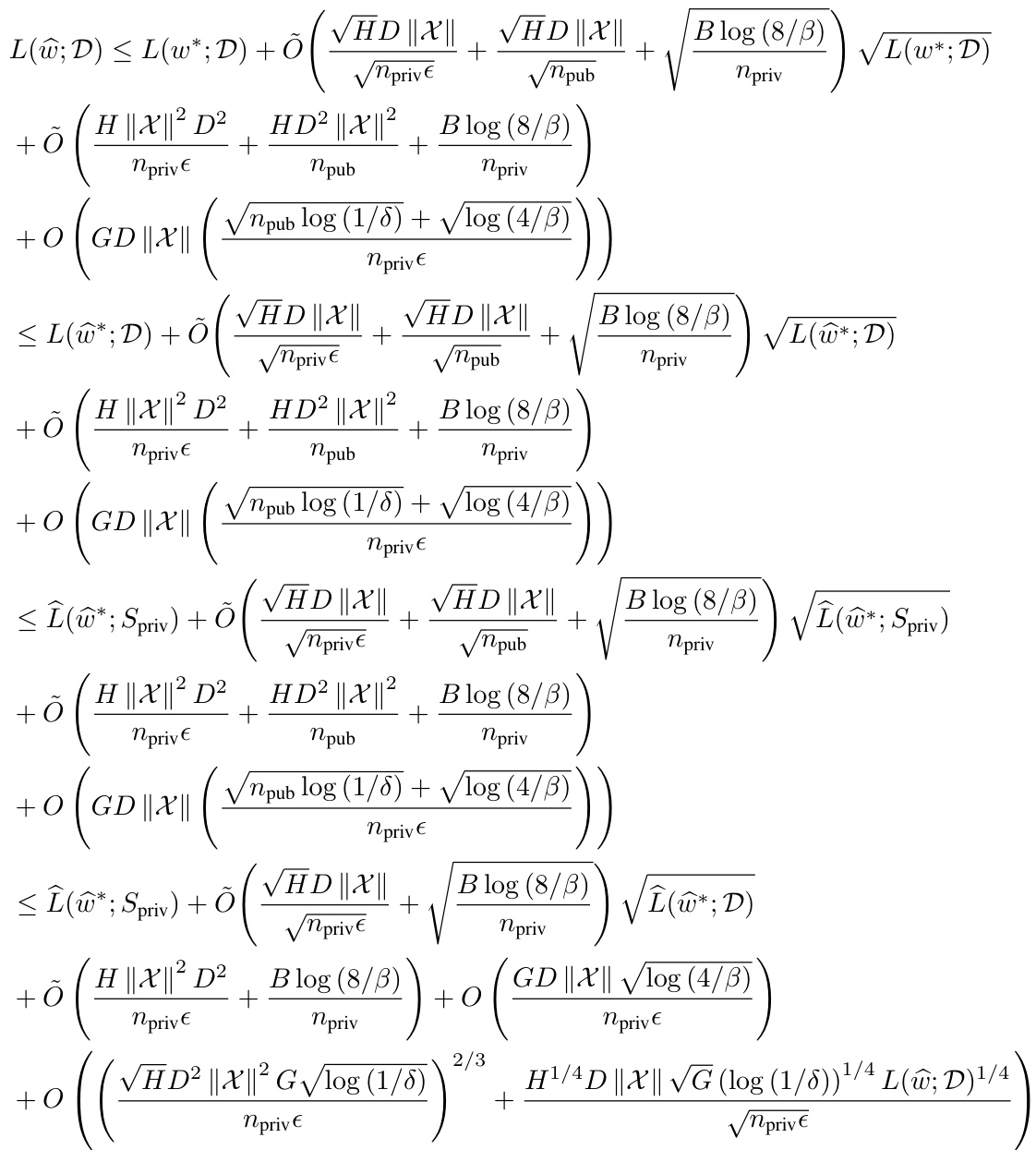

🔼 The algorithm uses public unlabeled data to perform dimensionality reduction of the private labeled feature vectors. It projects the private feature vectors onto the subspace spanning W∩span(Xpub) to get a lower dimensional representation. Then, it reparametrizes the loss function and applies a private subroutine in the lower dimensional space. Finally, it embeds the output back in Rd.

read the caption

Algorithm 1 Efficient PA-DP learning of GLMs with unlabeled public data

Full paper#