↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Meta-learning aims to train models adaptable to new tasks with minimal overhead, but existing methods like MAML are computationally expensive and lack strong theoretical guarantees. This paper addresses this challenge by focusing on generalization error through algorithmic stability analysis. Traditional approaches struggle to provide meaningful generalization bounds for complex meta-learning scenarios.

The paper introduces a novel concept called “uniform meta-stability” to analyze meta-learning algorithms. It then presents two uniformly meta-stable algorithms, one based on regularized risk minimization and another on gradient descent, providing generalization bounds for different problem settings (convex, smooth; weakly convex, non-smooth). The results are also extended to stochastic and robust meta-learning settings, offering significant improvements in theoretical understanding and practical applicability.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in meta-learning due to its novel notion of uniform meta-stability, providing stronger generalization guarantees than existing work. It also addresses the computational challenges of meta-learning, making it relevant to practical applications and providing theoretical insights for robust and stochastic variants.

Visual Insights#

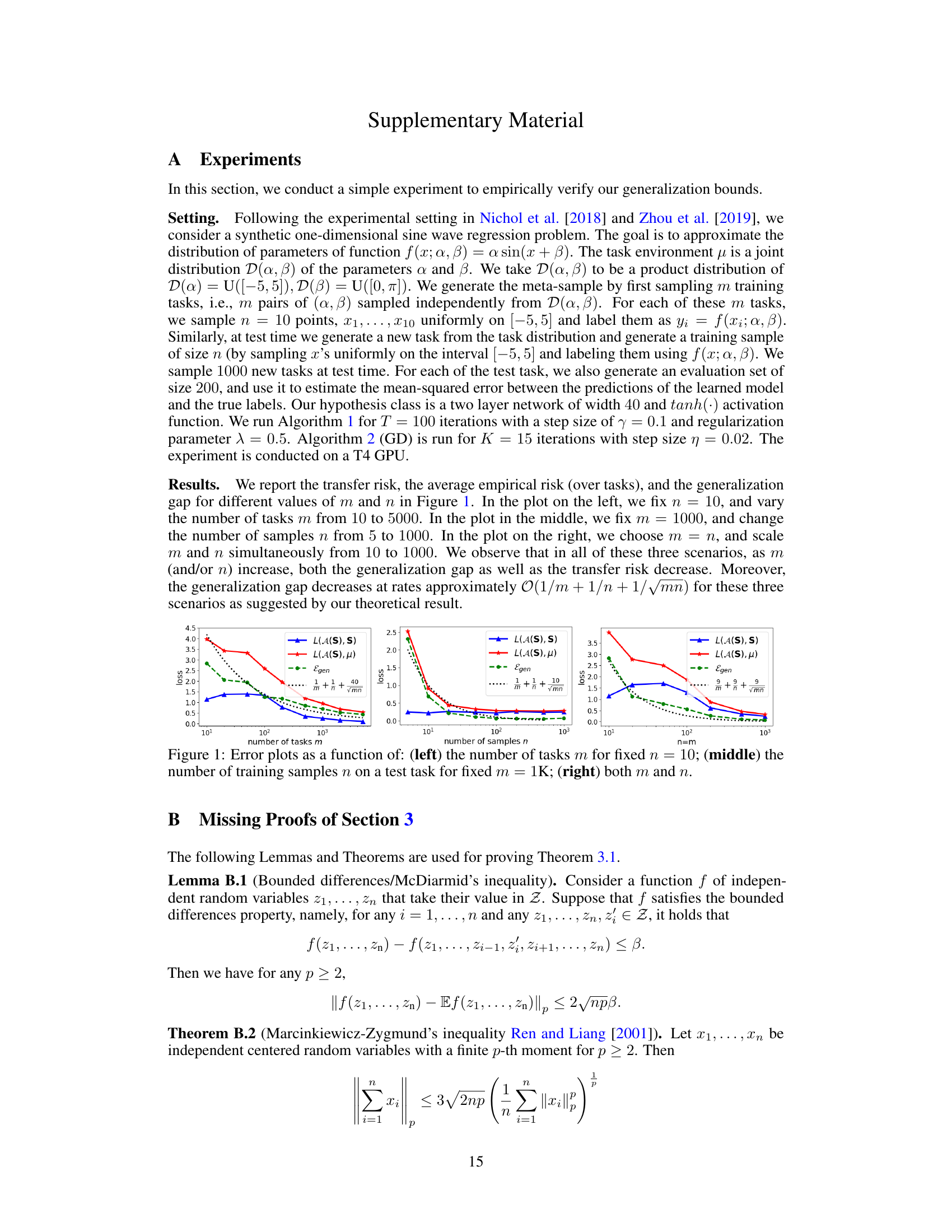

This figure displays three plots illustrating the relationship between error metrics (transfer risk, empirical risk, and generalization gap) and the number of tasks (m) and training samples (n) used in a meta-learning experiment. The left plot shows that with a fixed number of samples per task (n=10), the error decreases as the number of tasks increases. The middle plot shows the error decreasing as the number of samples increases while fixing the number of tasks (m=1000). The rightmost plot shows that when the number of tasks and samples increase proportionally, the error decreases. Overall, the figure visually supports the authors’ theoretical findings on generalization performance.

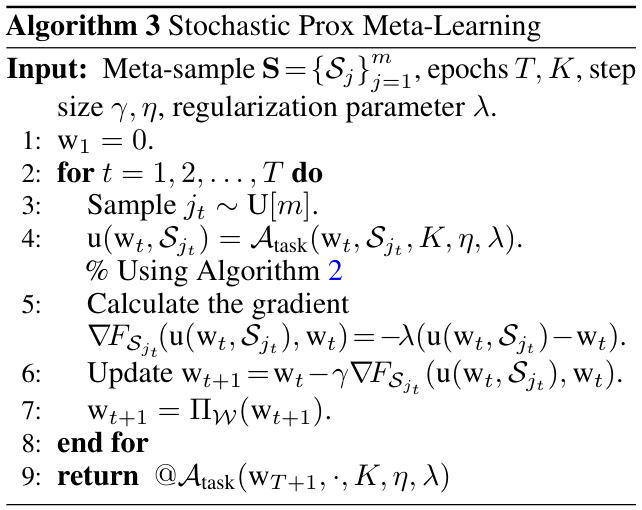

This table presents the theoretical bounds on uniform meta-stability (β) for different meta-learning algorithms and loss function types. It shows how the stability of the algorithms (a measure of their sensitivity to changes in the data) depends on various hyperparameters (step sizes η and γ, regularization parameter λ), the number of training tasks (m), and the number of training examples per task (n). Different rows represent different algorithm choices (using regularized empirical risk minimization or gradient descent for task-specific learning) and assumptions about the loss function (convex and smooth, weakly convex and non-smooth).

In-depth insights#

Meta-Stability Notion#

The novel notion of meta-stability introduced in this paper offers a more nuanced and robust approach to analyzing generalization in meta-learning. Unlike traditional stability measures, meta-stability considers the impact of replacing both a task within the meta-training set and a single training example from the test task. This dual-level perspective accounts for the unique challenges of meta-learning. The strength of the meta-stability framework is its ability to provide generalization bounds that are tighter and more informative than those derived from traditional methods alone. By directly addressing the dual nature of meta-learning, meta-stability offers a more precise way to assess generalization performance. This refined analysis is particularly useful when dealing with various learning settings, including those with non-convex or non-smooth loss functions. It opens avenues for improved algorithm design and analysis in meta-learning.

Generalization Bounds#

The concept of generalization bounds is central to machine learning, focusing on how well a model trained on a finite dataset performs on unseen data. In meta-learning, this is especially crucial as models are trained on a distribution of tasks to generalize to new, unseen tasks. The paper likely explores different types of generalization bounds, comparing and contrasting their strengths and weaknesses. The analysis probably involves novel techniques to handle the complexities of meta-learning, such as analyzing the stability of the meta-learning algorithms. The goal is to derive bounds that are tight and informative, not just theoretically sound but also practically useful in understanding the actual performance of meta-learning models. The paper likely emphasizes generalization guarantees for different types of losses (convex, smooth, non-convex, non-smooth), and potentially examines the influence of model stability and robustness on the generalization bounds. The results will likely provide a theoretical basis for understanding the generalization capabilities of meta-learning algorithms and could contribute to designing improved meta-learning models with better generalization performance.

Convex Loss Analysis#

A convex loss analysis in a machine learning context typically involves studying the behavior of algorithms under the assumption that the loss function is convex. This assumption simplifies the analysis because convexity guarantees a unique global minimum, making it easier to prove convergence and generalization bounds. The analysis might explore different optimization techniques (like gradient descent) and their convergence rates for convex loss functions. Specific properties of the convex loss, such as smoothness or strong convexity, are often considered to obtain tighter convergence bounds. The analysis also might examine the relationship between the empirical risk (loss on the training data) and the expected risk (loss on unseen data), potentially using techniques from statistical learning theory to quantify generalization performance. Generalization error bounds are often a key focus, as they provide guarantees on the algorithm’s ability to generalize to unseen data. The analysis may consider different assumptions about the data distribution and the characteristics of the hypothesis space. Finally, the study often aims to provide insights into the trade-offs between computational efficiency and statistical accuracy.

Robustness Variants#

The concept of ‘Robustness Variants’ in the context of meta-learning algorithms addresses the crucial issue of ensuring that the learned meta-model generalizes well to unseen tasks, even when faced with various forms of uncertainty or noise. A robust meta-learning algorithm should maintain its performance despite noisy or corrupted training data (stochastic robustness), or adversarial attacks specifically designed to mislead the model (adversarial robustness). This involves developing modifications to standard meta-learning algorithms, such as adding regularization techniques or employing adversarial training methods. The core idea is to increase the stability and generalization capabilities of the meta-model making it more resilient to deviations from the ideal training conditions. Analyzing the theoretical guarantees of these robustness variants is crucial, as it offers insights into how the modifications impact generalization performance, and may help guide the development of even more robust meta-learning approaches. In essence, studying robustness variants improves the reliability of meta-learning models in real-world applications where perfect data and ideal conditions are rarely encountered.

Future Research#

The paper’s theoretical contributions on meta-learning’s stability and generalization open exciting avenues for future research. Tightening the generalization bounds by potentially removing the logarithmic factors in the current bounds is a significant goal. Exploring the impact of different task distributions and their effect on stability is crucial for practical applications. Extending the analysis to more complex meta-learning algorithms beyond the ones considered here (e.g., those involving neural networks) and relaxing the convexity assumptions to broader loss functions warrants investigation. Investigating the interplay between algorithmic stability and robustness (e.g., adversarial robustness) is another critical direction. Finally, empirical validation of the theoretical findings on diverse datasets and real-world tasks is needed to solidify the practical implications of these results.

More visual insights#

More on tables

This table presents theoretical bounds on uniform meta-stability (β) for different types of loss functions used in meta-learning algorithms. The bounds depend on several hyperparameters: the step size for gradient descent in both the task-specific and meta-learning stages (η and γ respectively), the number of training tasks (m), and the number of training examples per task (n). The table helps to understand how these hyperparameters and the properties of the loss function (convexity, smoothness, Lipschitz continuity) impact the stability of the algorithm.

This table summarizes the upper bounds on the uniform meta-stability (β) for four different scenarios of convex and non-convex loss functions. Each row represents a setting where the loss function has different properties (e.g., convex and Lipschitz, or weakly convex and Lipschitz) and different optimization methods (e.g., regularized empirical risk minimization (RERM) or gradient descent (GD)) are used for task-specific learning. The bounds for uniform meta-stability are expressed in terms of the step-sizes (η, γ), the number of tasks (m), and the number of training data per task (n).

Full paper#