↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Existing research on diffusion models primarily focuses on models trained on large datasets and struggles to explain the success of few-shot models which are trained with few data points. Moreover, their analyses often fall prey to the ‘curse of dimensionality’—the difficulty in accurately approximating high-dimensional functions with limited data. These limitations hinder the understanding and advancement of few-shot diffusion models.

This work introduces the first theoretical analysis of few-shot diffusion models. It provides novel approximation and optimization bounds that show these models are significantly more efficient than previously thought, overcoming the curse of dimensionality. The paper introduces a linear structure distribution assumption, and then using approximation and optimization perspectives, proves better bounds than existing methods. A latent Gaussian special case is also considered, proving a closed-form minimizer exists. Real-world experiments validate the theoretical findings.

Key Takeaways#

Why does it matter?#

This paper is crucial because it offers the first theoretical analysis of few-shot diffusion models, a rapidly growing area. It directly addresses the limitations of existing analyses that struggle with the curse of dimensionality and provides novel approximation and optimization bounds, opening exciting avenues for model improvement and more efficient training strategies. This work will be essential reading for researchers to understand and advance the field of few-shot learning and image generation.

Visual Insights#

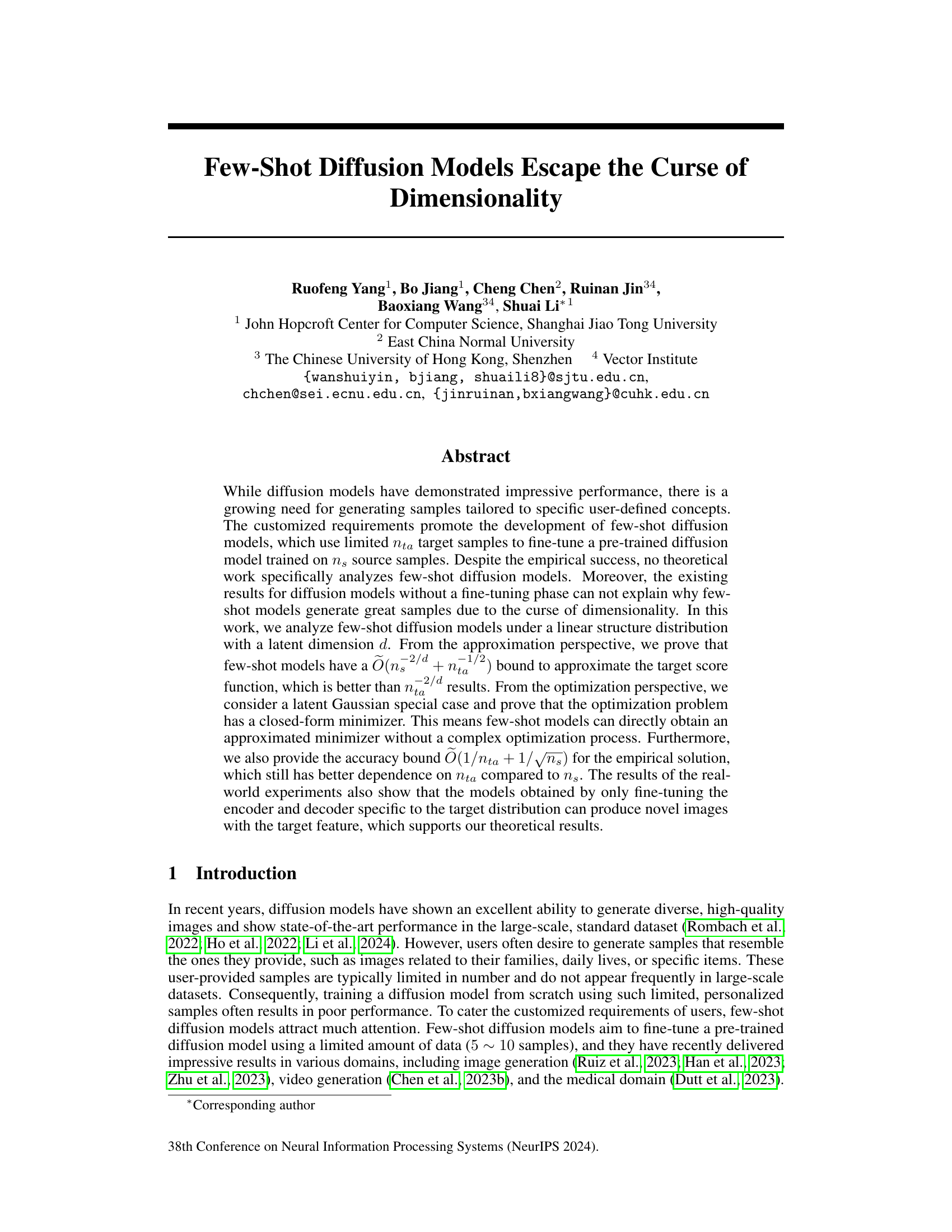

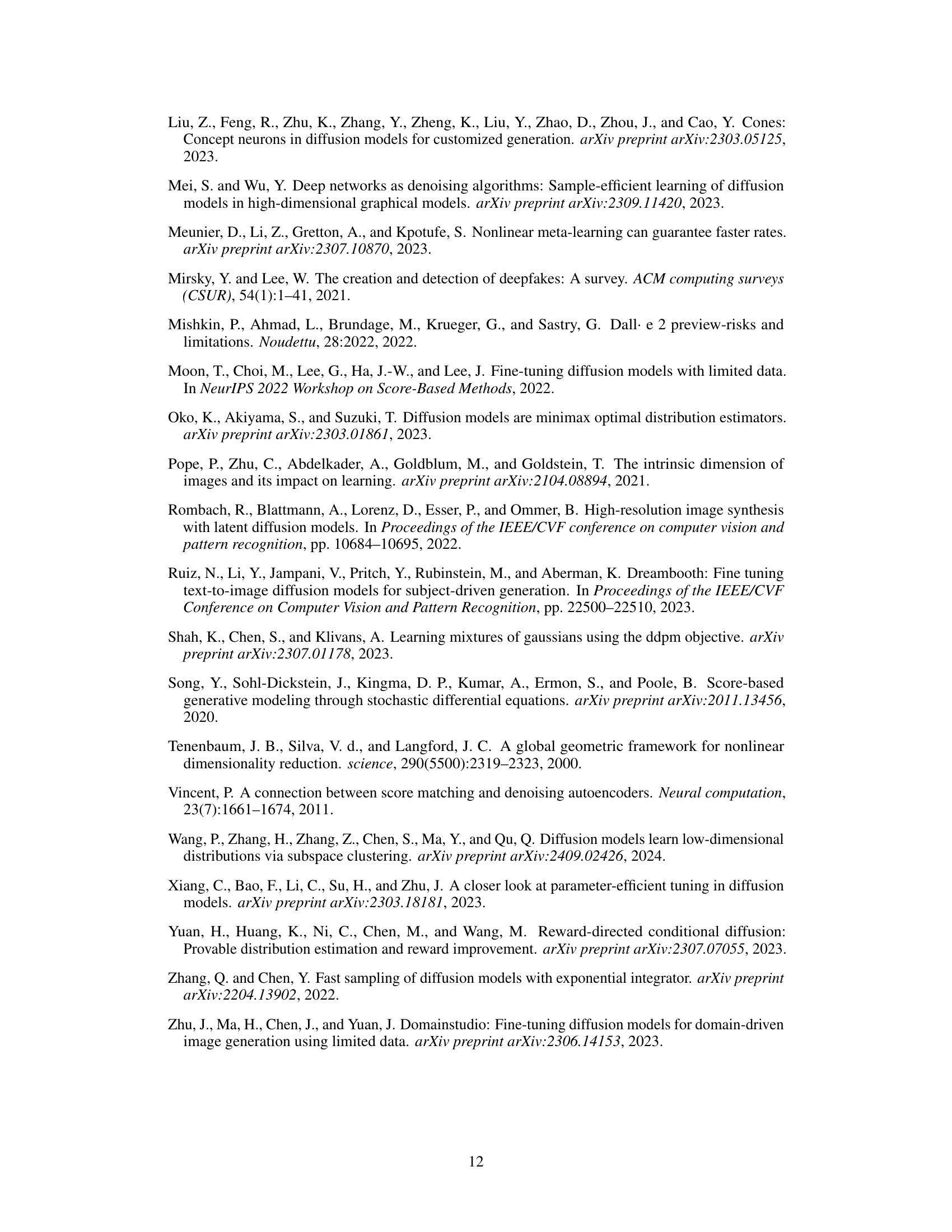

This figure shows the results of three different experiments on the CelebA64 dataset. The first row (a) displays the target dataset, which contains images of people with bald heads. The second row (b) shows the results when fine-tuning all parameters of the pre-trained model. The third row (c) presents the results of fine-tuning only the encoder and decoder parameters. The comparison visually demonstrates the superior performance of fine-tuning only the encoder and decoder, resulting in more novel and diverse images of bald individuals compared to fine-tuning all parameters, which struggles to produce significantly different images from the original target set.

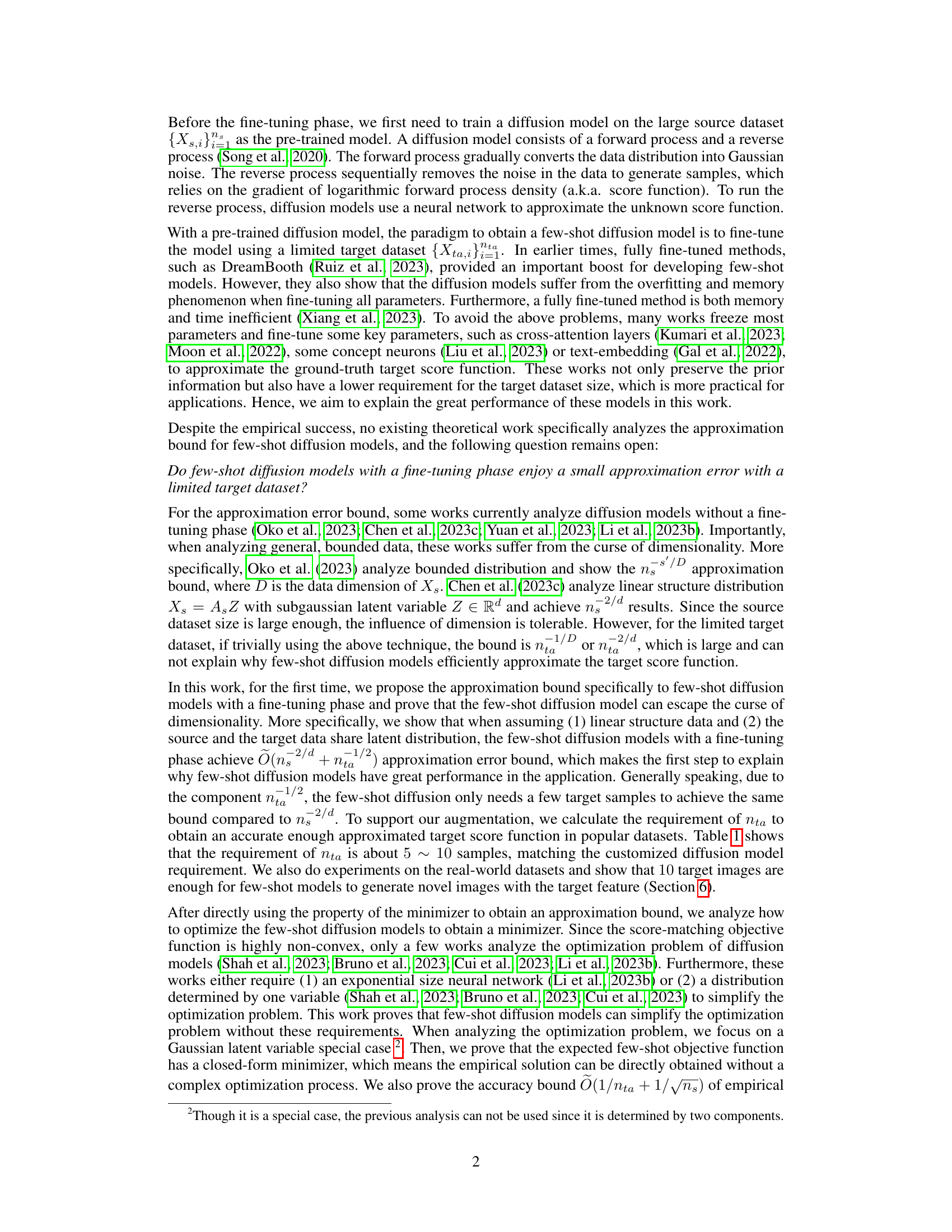

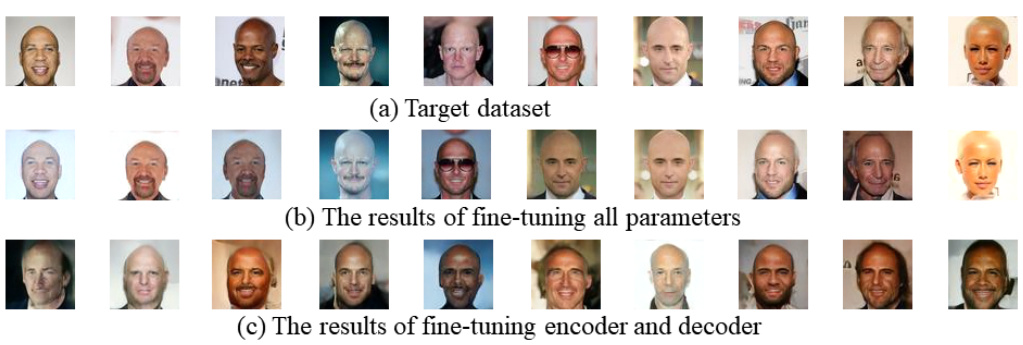

This table shows the requirement of the number of target samples (nta) needed to achieve the same accuracy as pre-trained models in popular datasets. The latent dimension (d) for each dataset, obtained from Pope et al. (2021), is included as it significantly influences the required nta. Datasets with higher latent dimensions require more target samples for comparable performance to the pre-trained models.

Full paper#