↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Stochastic zeroth-order optimization, where algorithms only access noisy function evaluations, poses a significant challenge in machine learning. Optimizing strongly convex functions with smooth characteristics (Lipschitz Hessian) is particularly difficult due to the algorithm’s limited access to information about the function’s structure. Existing research lacks a precise understanding of the fundamental limits (minimax simple regret) for this class of problems, and efficient algorithms are still lacking.

This research paper makes a substantial contribution by providing the first tight characterization of the minimax simple regret for this optimization problem. The authors achieve this by developing matching upper and lower bounds. They introduce a new algorithm that effectively combines bootstrapping and mirror descent techniques. A key innovation is their sharp characterization of the spherical-sampling gradient estimator under higher-order smoothness. This allows the algorithm to balance the tradeoff between bias and variance and makes it robust to unbounded Hessian. The improved algorithm achieves optimal sample complexity, which advances our theoretical understanding and practical capabilities for stochastic zeroth-order optimization.

Key Takeaways#

Why does it matter?#

This paper is crucial because it provides the first tight characterization of the minimax simple regret for stochastic zeroth-order optimization of strongly convex functions with Lipschitz Hessian. This addresses a major challenge in online learning and derivative-free optimization. It opens new avenues for research into the interplay between smoothness, convexity, and sample complexity in stochastic optimization, leading to more efficient algorithms for various applications.

Visual Insights#

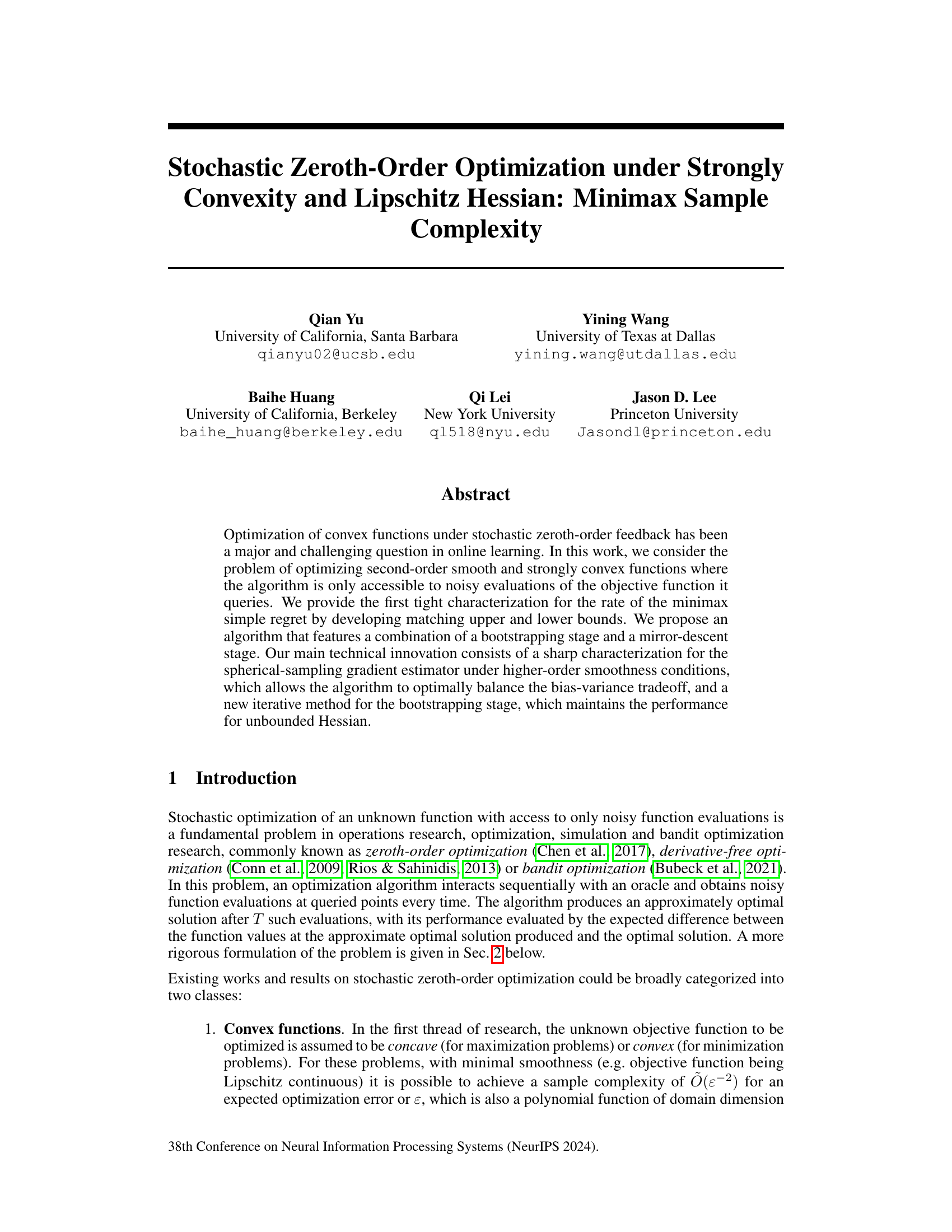

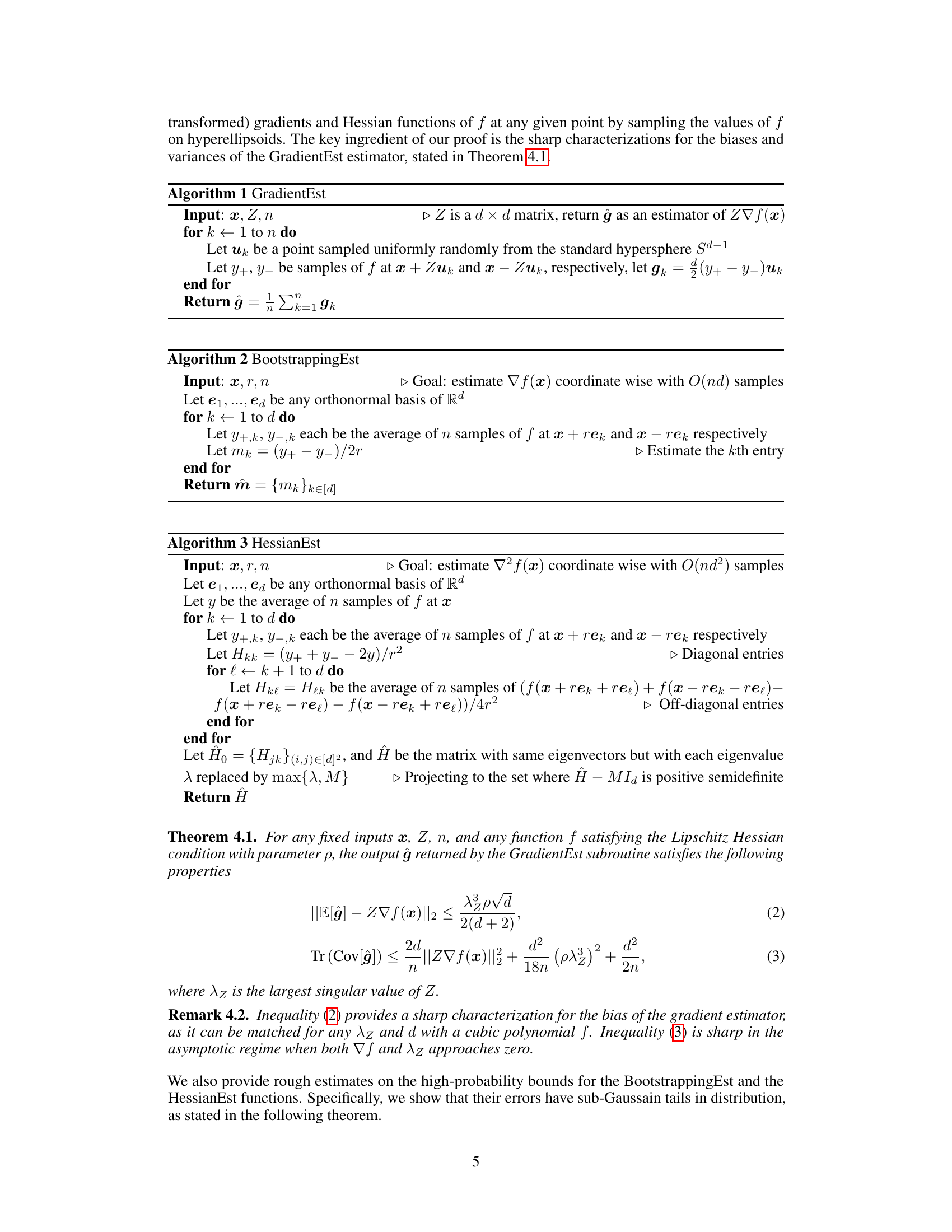

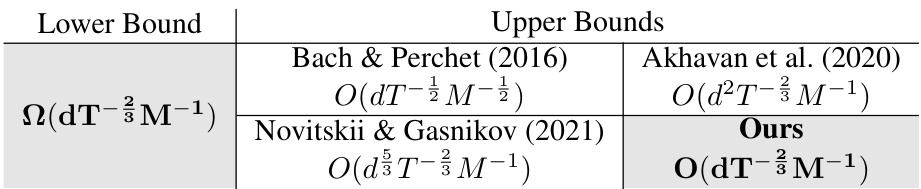

This table compares the upper and lower bounds of simple regret, a measure of performance in stochastic zeroth-order optimization, found in previous research to the upper and lower bounds derived in this paper. The simple regret depends on the number of function evaluations (T), the dimension of the problem (d), and a parameter (M) describing the strong convexity of the objective function. The table shows that the current work provides tighter bounds than those previously established, achieving the minimax optimal sample complexity.

Full paper#