↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many machine learning applications involve learning from noisy data, where labels are corrupted by various sources. Online learning, where data arrives sequentially, adds another layer of complexity. Existing research primarily focuses on the ‘agnostic’ setting, evaluating performance on observed noisy labels rather than the true labels, overlooking the critical issue of achieving good performance on the ground truth. This paper addresses this gap by studying online classification where true labels are corrupted by stochastic noise modeled via a general noisy kernel, and features are generated adversarially. The goal is to minimize the minimax risk when comparing against the true labels.

The paper introduces a novel online learning framework that models general noisy mechanisms. The researchers use a novel reduction to an online comparison of two hypotheses and a new conditional version of Le Cam-Birgé testing. Their key finding is that the minimax risk is characterized by the Hellinger gap of the noisy label distributions, independent of other properties of the noise such as the means and variances. This is significant because it provides a clear and comprehensive characterization of the problem, going beyond simpler noise models and offering new insights into the fundamental limits of online classification with noisy labels.

Key Takeaways#

Why does it matter?#

This paper is crucial because it provides the first comprehensive characterization of noisy online classification, addressing a fundamental challenge in machine learning. Its theoretical guarantees, applicable to real-world scenarios, guide the design of more robust and reliable learning systems handling noisy data. The novel reduction technique and conditional Le Cam-Birgé testing offer valuable tools for researchers in the field, opening new avenues for tackling online learning under uncertainty.

Visual Insights#

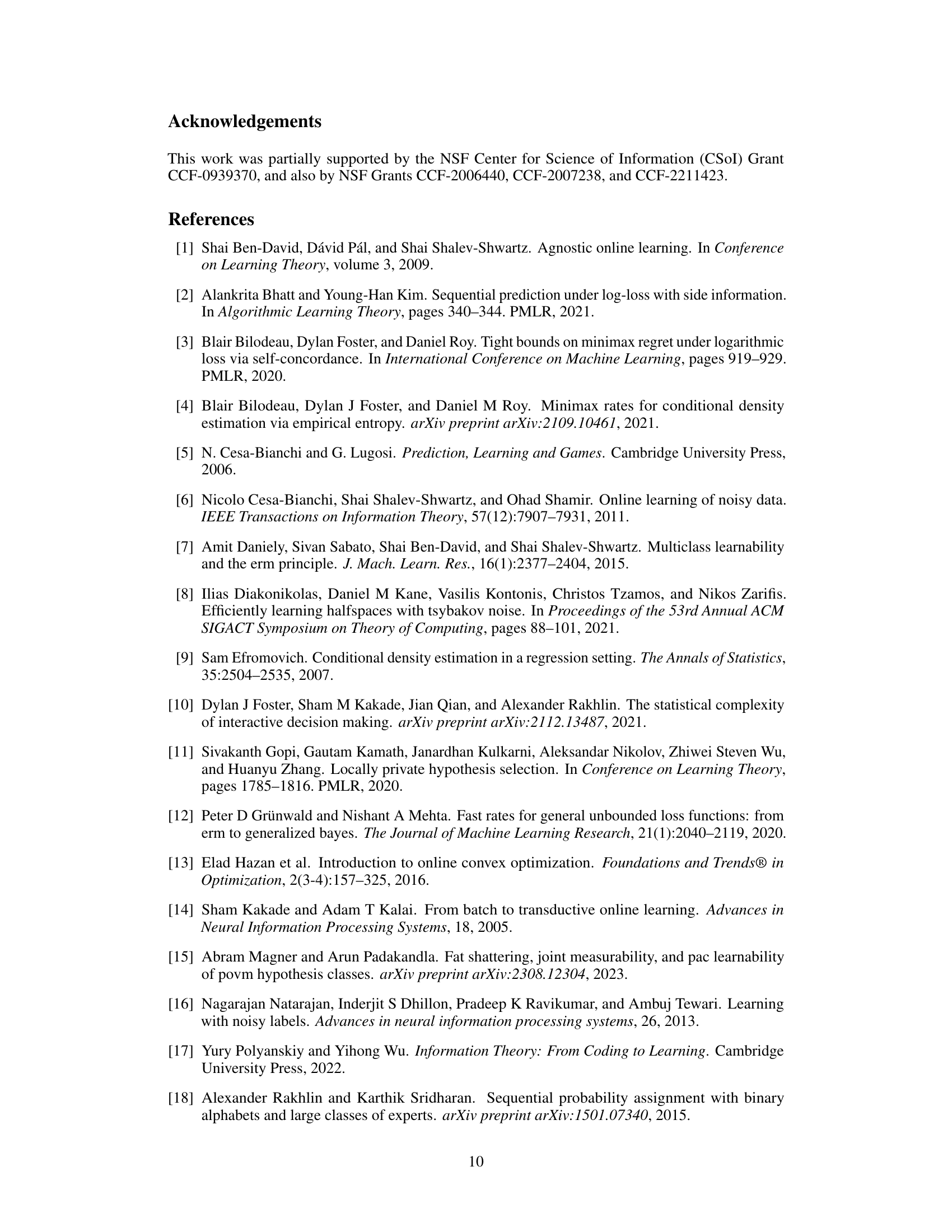

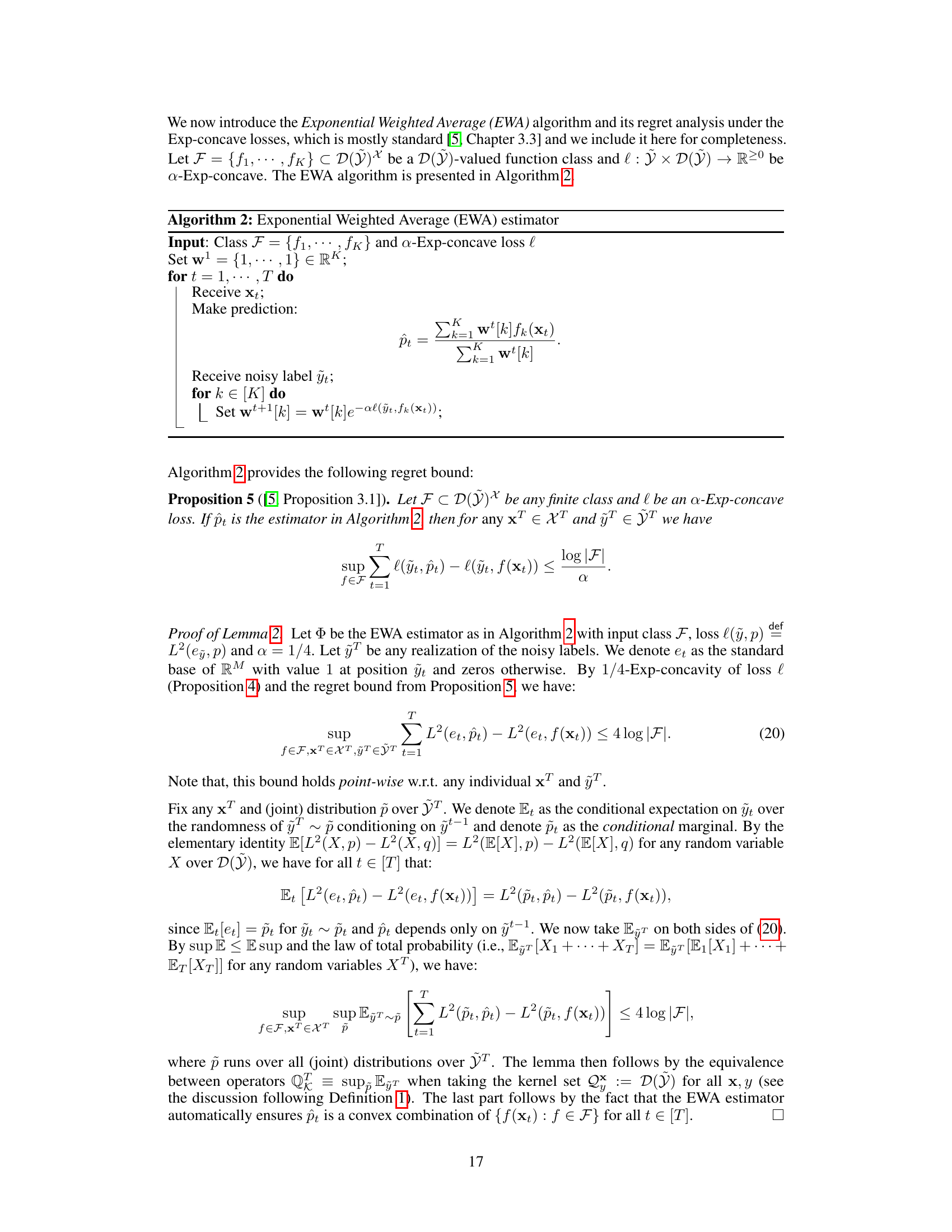

Algorithm 1 presents a prediction rule for online classification with noisy labels. It leverages pairwise hypothesis testing, iteratively refining a set of candidate hypotheses (St) based on cumulative loss vectors (vi[j]). For each time step, a hypothesis is sampled from St, a prediction is made, and a noisy label is received. The algorithm updates St+1 by removing hypotheses with cumulative losses exceeding a threshold (C), effectively focusing on more promising hypotheses. The algorithm’s core is a reduction from a general online classification problem into a set of pairwise classification subproblems which are independently solvable. This makes the algorithm robust and agnostic to adversarial choices of noisy labels.

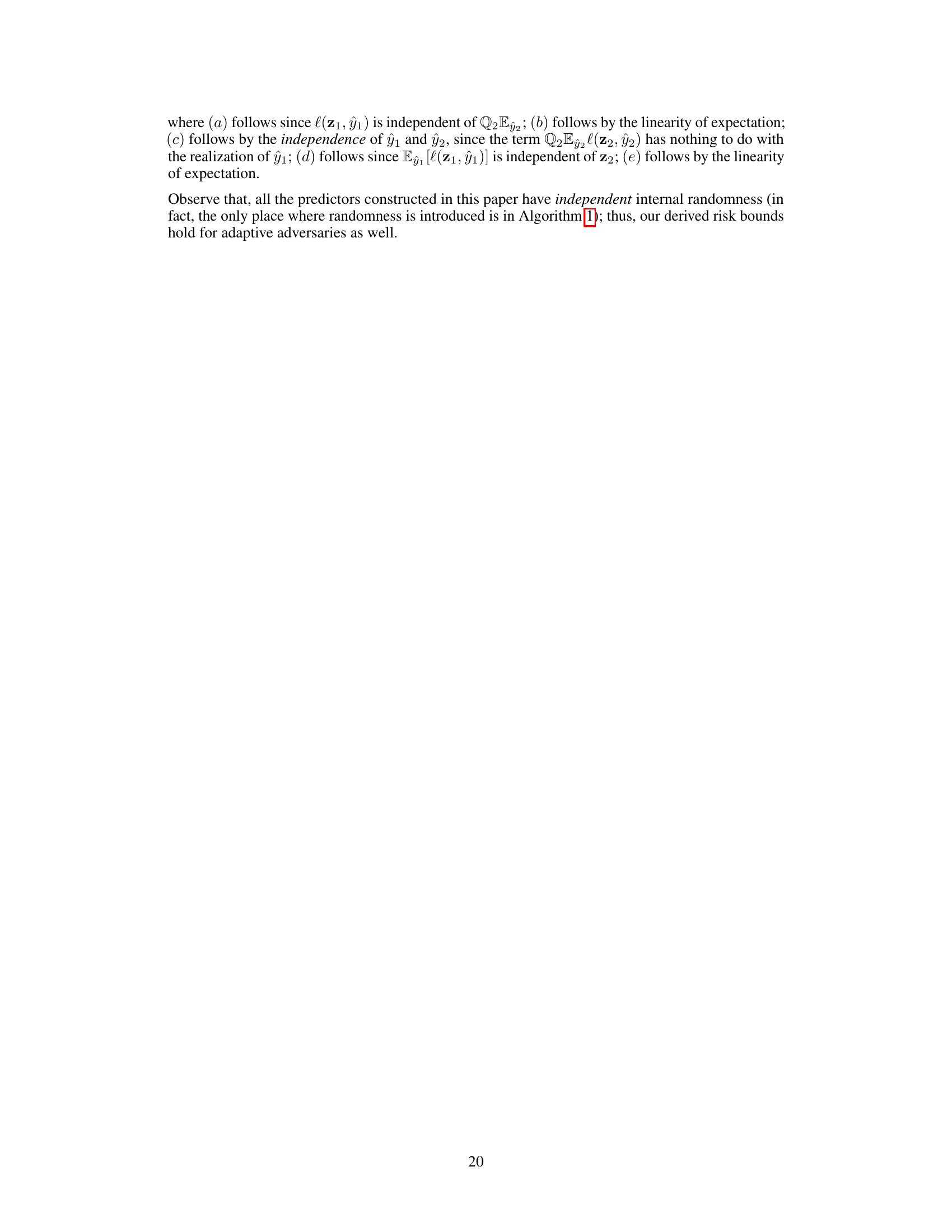

This table summarizes the notations used throughout the paper. It defines the key mathematical objects such as the set of features (X), labels (Y), noisy observations (Ÿ), hypothesis class (H), noisy kernel (K), and probability distributions (D(Ý)). It clarifies the meanings of symbols used for sets, sizes, and operations relevant to the online classification framework.

Full paper#