TL;DR

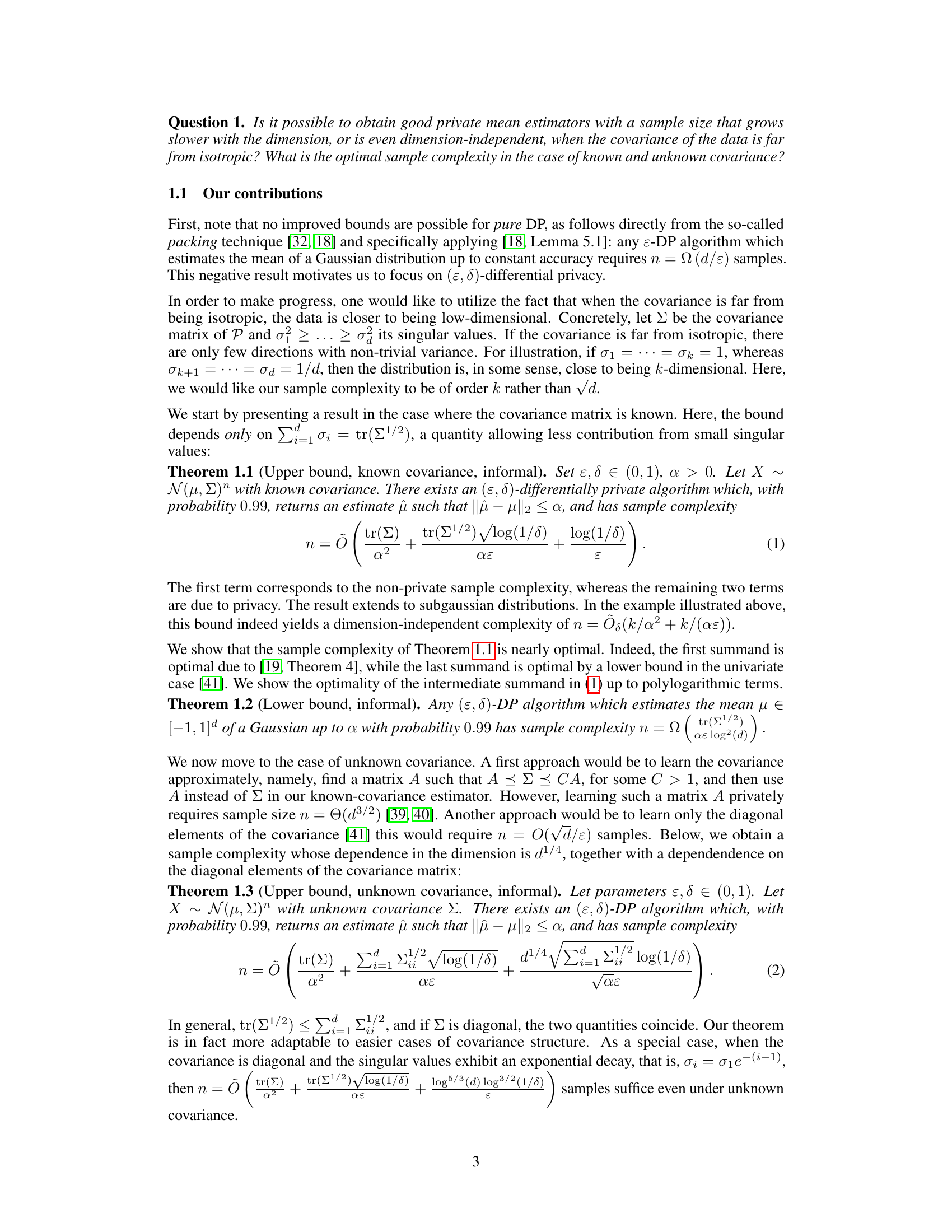

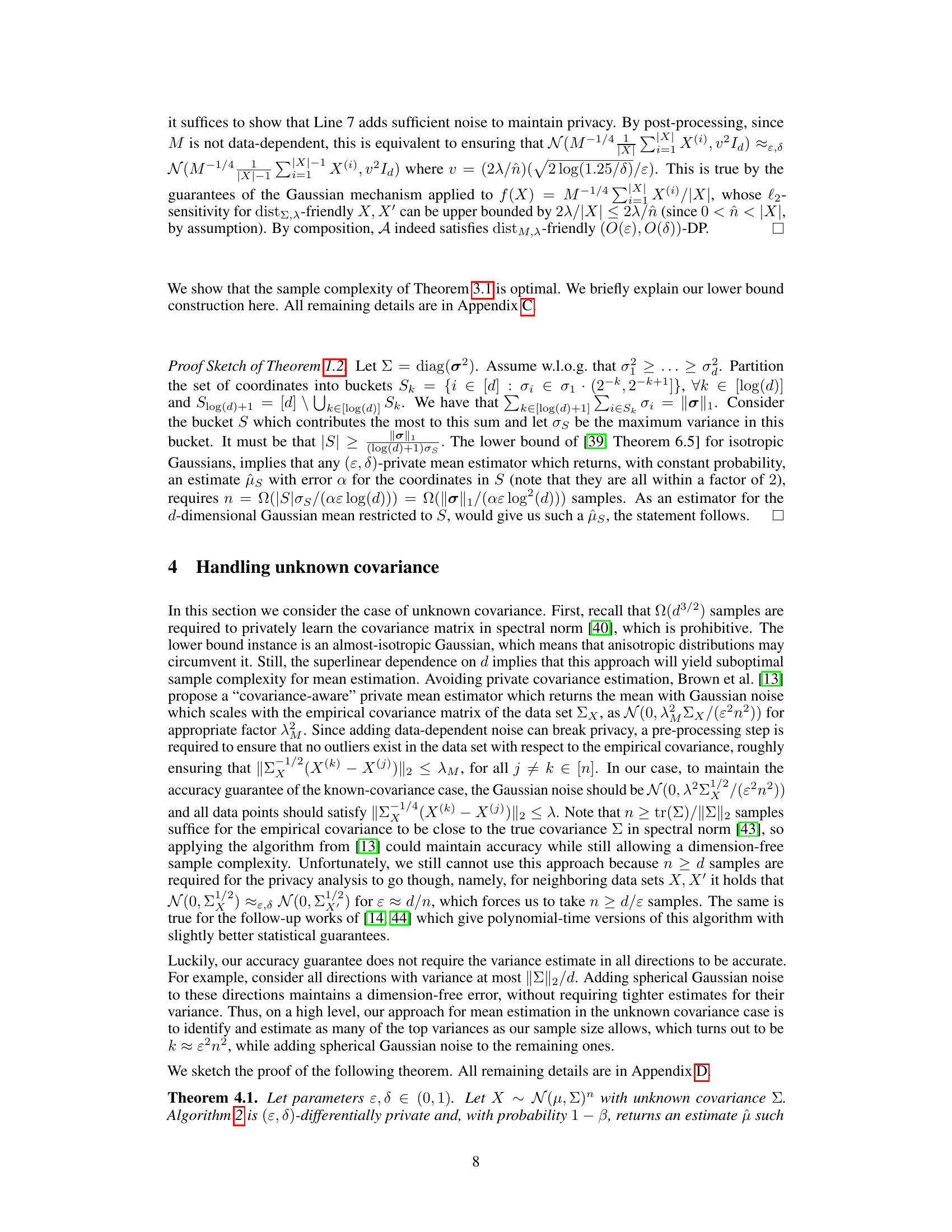

High-dimensional data analysis often requires balancing accuracy against individual privacy. Existing differentially private mean estimators suffer from a “curse of dimensionality”: the number of samples they need grows with the data dimension. This is especially problematic when the data is anisotropic, meaning its variance is spread unevenly across directions. Anisotropy is common in real-world datasets, and ignoring it limits the effectiveness of existing methods.
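To make the dimension dependence concrete, here is a minimal sketch of a textbook baseline: clip each sample to a fixed L2 norm, average, and add isotropic Gaussian noise calibrated with the classical Gaussian mechanism. This is not the paper's algorithm. The function names (`gaussian_sigma`, `clip`, `private_mean`, `expected_noise_sq_error`) and all parameter values are hypothetical, chosen only for illustration. The point is that the noise is added to every coordinate equally, so its expected squared error grows linearly with the dimension `d`, even if almost all of the data's variance lies in a few directions.

```python
import math
import random


def gaussian_sigma(clip_norm, n, epsilon, delta):
    """Noise std for the classical Gaussian mechanism on a clipped mean.

    Assumes 0 < epsilon < 1, the regime where this calibration is valid.
    """
    # Replacing one record moves the clipped mean by at most 2 * clip_norm / n in L2.
    sensitivity = 2.0 * clip_norm / n
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def clip(x, clip_norm):
    """Rescale x so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in x))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in x]


def private_mean(data, clip_norm, epsilon, delta, rng):
    """Baseline (epsilon, delta)-DP mean: clip, average, add isotropic noise."""
    n, d = len(data), len(data[0])
    clipped = [clip(x, clip_norm) for x in data]
    mean = [sum(x[j] for x in clipped) / n for j in range(d)]
    sigma = gaussian_sigma(clip_norm, n, epsilon, delta)
    return [m + rng.gauss(0.0, sigma) for m in mean]


def expected_noise_sq_error(d, clip_norm, n, epsilon, delta):
    """Expected squared L2 error from the noise alone: d independent N(0, sigma^2) terms."""
    return d * gaussian_sigma(clip_norm, n, epsilon, delta) ** 2


# With the clipping norm and sample size held fixed, the noise error scales with d.
for d in (10, 100, 1000):
    print(d, expected_noise_sq_error(d, clip_norm=1.0, n=10_000, epsilon=0.5, delta=1e-5))
```

Under these assumptions, keeping the noise error constant as `d` grows requires increasing `n`, which is the sample-complexity cost that anisotropy-aware estimators aim to avoid.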

This paper develops new differentially private algorithms that address these issues. The core contribution is dimension-free sample complexity for anisotropic data, with error bounds that improve on previous results. The algorithms cover both the known-covariance and the unknown-covariance settings.

Key Takeaways

Why does it matter?

This paper challenges the conventional wisdom that differentially private mean estimation is inherently limited in high dimensions. By focusing on anisotropic data, a more realistic scenario, the authors achieve dimension-independent sample complexity. This finding opens new avenues for privacy-preserving data analysis in high-dimensional settings and may influence broader machine learning practice. The paper also contributes improved theoretical lower bounds and optimal sample complexities.

Visual Insights

Full paper