TL;DR

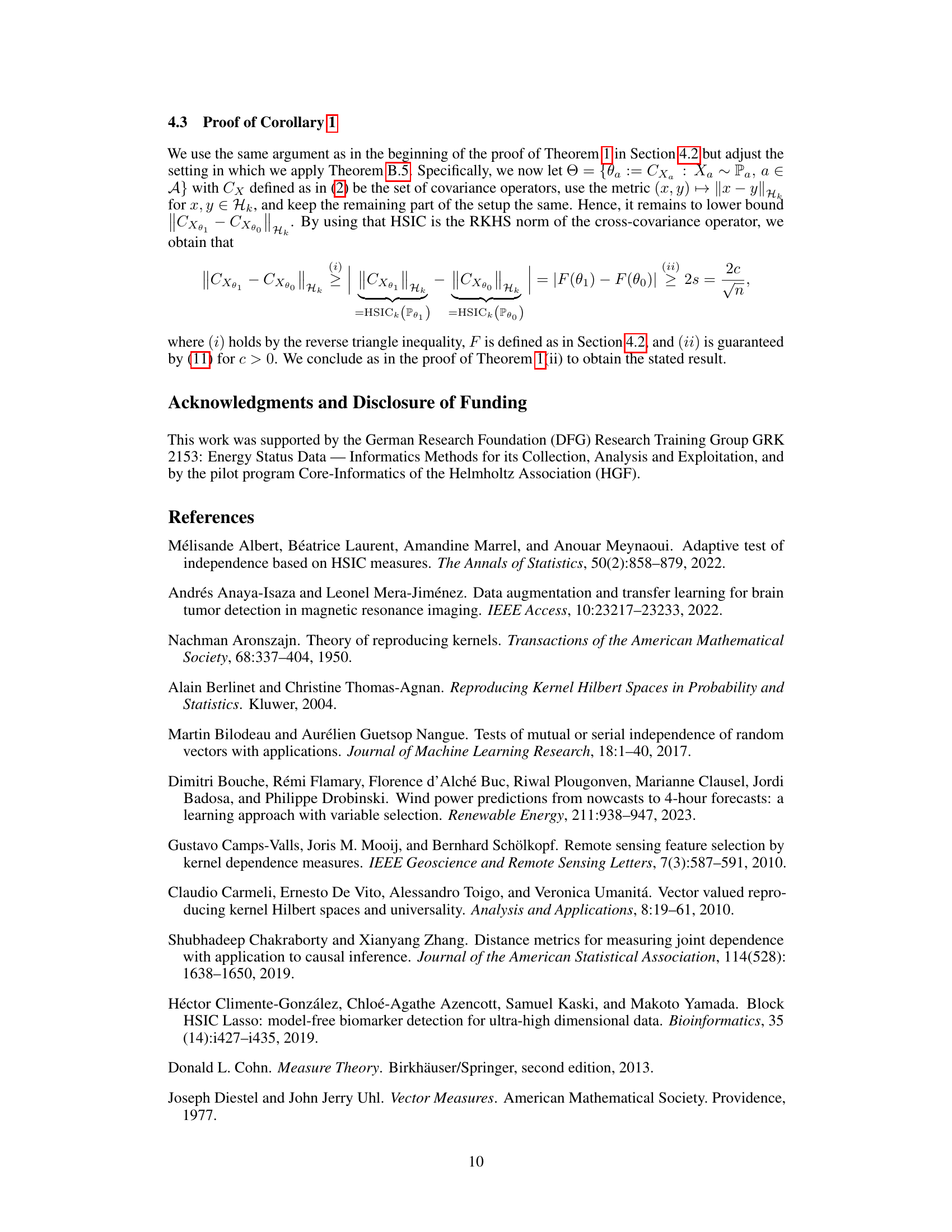

Kernel-based measures of independence between random variables are widely used, and the Hilbert-Schmidt Independence Criterion (HSIC) is a popular choice. However, the optimal rate at which HSIC can be estimated remained unknown for nearly two decades, leaving open whether existing estimators could be improved. This paper closes that gap.

The paper establishes a minimax lower bound for HSIC estimation and shows that existing estimators, including the U-statistic, V-statistic, and Nyström-based ones, already attain the optimal rate of O(n^(-1/2)), where n is the sample size. This settles a long-standing open problem and confirms that commonly used methods cannot be improved in rate. The results also extend to estimating cross-covariance operators, giving applications that use HSIC a more solid theoretical foundation.
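
To make the estimators mentioned above more concrete, here is a minimal sketch of the biased V-statistic estimate of squared HSIC, computed as (1/n^2) tr(K H L H) with the centering matrix H = I - (1/n) 1 1^T. The Gaussian kernel, the bandwidth value, the restriction to scalar samples, and all function names are assumptions made for illustration; they are not taken from the paper.

```python
import math
import random


def gaussian_gram(samples, bandwidth=1.0):
    """Gram matrix of a Gaussian kernel on scalar samples (illustrative kernel choice)."""
    return [
        [math.exp(-((a - b) ** 2) / (2.0 * bandwidth ** 2)) for b in samples]
        for a in samples
    ]


def center(gram):
    """Double-center a symmetric Gram matrix: H K H with H = I - (1/n) 1 1^T."""
    n = len(gram)
    row_means = [sum(row) / n for row in gram]
    total_mean = sum(row_means) / n
    return [
        [gram[i][j] - row_means[i] - row_means[j] + total_mean for j in range(n)]
        for i in range(n)
    ]


def hsic_v_statistic(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared HSIC: (1/n^2) tr(K H L H)."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    kc = center(gaussian_gram(xs, bandwidth))
    lc = center(gaussian_gram(ys, bandwidth))
    # For symmetric matrices, tr(A B) equals the sum of elementwise products.
    return sum(kc[i][j] * lc[i][j] for i in range(n) for j in range(n)) / n ** 2


# Hypothetical toy data: one independent pair and one strongly dependent pair.
rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(200)]
independent_ys = [rng.gauss(0.0, 1.0) for _ in range(200)]
dependent_ys = [x + 0.1 * rng.gauss(0.0, 1.0) for x in xs]

print("independent:", hsic_v_statistic(xs, independent_ys))
print("dependent:  ", hsic_v_statistic(xs, dependent_ys))
```

On this toy data the dependent pair should give a noticeably larger value than the independent pair. The sketch builds full n-by-n Gram matrices, so it costs quadratic time and memory in n; Nyström-based estimators are typically used to reduce that cost.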

Key Takeaways

Why does it matter?

This paper matters for researchers working with kernel methods and independence measures. It establishes the minimax optimal rate for HSIC estimation, resolving a long-standing open problem and confirming that many existing estimators are rate-optimal. That result bears directly on the reliability and efficiency of applications that rely on HSIC, from independence testing to causal discovery.

Visual Insights

Full paper