↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Generative models frequently produce outputs that do not meet basic quality criteria. Prior work on validity-constrained distribution learning adopts a worst-case approach, showing that proper learning requires exponentially many queries. This paper shifts the focus to more realistic scenarios. The core challenge is ensuring that a learned model outputs invalid examples with a provably small probability while maintaining low loss.

This paper tackles this problem by investigating learning settings where guaranteeing validity is less computationally demanding. The authors consider scenarios where the data distribution belongs to the model class and the log-loss is minimized, demonstrating that significantly fewer samples are needed. They also explore settings where the validity region is a VC-class, showing that a limited number of validity queries suffice. The work provides new algorithms with improved query complexity and suggests directions for further research into the interplay of loss functions and validity guarantees.

Key Takeaways#

Why does it matter?#

This paper is important because it challenges the pessimistic view of validity-constrained distribution learning, showing that guaranteeing valid outputs is easier than previously thought under specific conditions. It opens new avenues for research by demonstrating algorithms with reduced query complexity and highlighting the role of loss functions in ensuring validity. This work is highly relevant to researchers in generative models, impacting the development of high-quality, reliable systems.

Visual Insights#

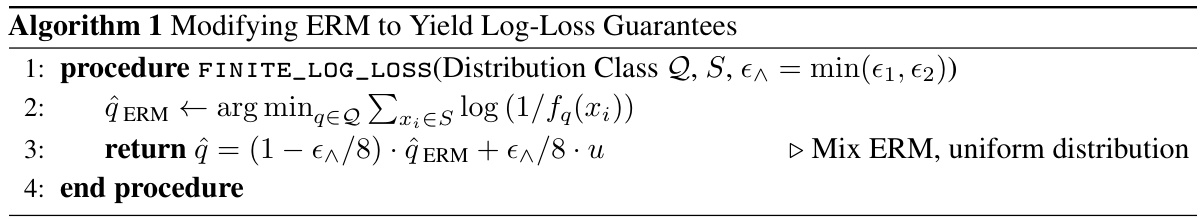

This algorithm modifies the Empirical Risk Minimization (ERM) to achieve guarantees on both the log-loss and the validity of the generated model. It does so by mixing the ERM output with a uniform distribution, with the mixing weight determined by the desired levels of suboptimality in loss (€1) and invalidity (€2). The algorithm uses no validity queries.

Full paper#