↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

The John ellipsoid problem, finding the minimum volume ellipsoid enclosing a set of points, is crucial in various fields like statistics and optimization. Existing algorithms rely on iterative computation of leverage scores, leading to high computational costs, especially for high-dimensional data. This poses a significant challenge for applications involving massive datasets or real-time processing.

This paper introduces a novel algorithm to improve the efficiency of John ellipsoid computation. It leverages lazy updates, delaying high-accuracy leverage score computations, and employs fast rectangular matrix multiplication for batch processing. The result is a substantial speedup, achieving nearly linear time complexity. The researchers also extend their approach to create low-space streaming algorithms suitable for resource-constrained environments. These contributions not only enhance computational efficiency but also expand the practical applicability of John ellipsoids to a wider range of problems.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in computer science, statistics, and optimization because it significantly accelerates the computation of John ellipsoids, a fundamental problem with wide-ranging applications. The improved algorithms offer substantial speedups, particularly for large datasets, opening up new possibilities for applying John ellipsoids in various domains. Its exploration of low-space streaming algorithms also addresses limitations in memory-constrained settings, broadening the applicability of the method.

Visual Insights#

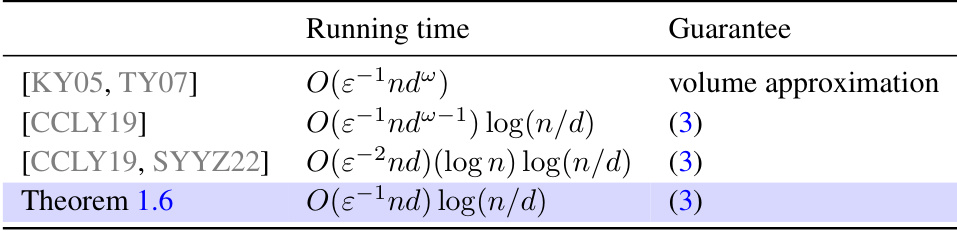

This table compares the running times of different algorithms for approximating the John ellipsoid for dense matrices where the number of rows (n) is significantly larger than the number of columns (d). It highlights the improvement achieved by the algorithm presented in Theorem 1.6 compared to previous works. The guarantee column refers to the approximation quality of the resulting ellipsoid.

Full paper#