↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many real-world applications involve online decision-making where feedback is delayed and occasionally very late. Existing bandit algorithms often struggle in these scenarios, especially when dealing with unpredictable delays and outliers. Moreover, these algorithms frequently require prior knowledge of the maximal delay, making them less practical. This creates a need for algorithms that work well across a broad range of delay patterns and are robust to outliers.

This paper introduces a new algorithm that addresses these issues. It is designed to work well regardless of whether the underlying loss generating process is stochastic or adversarial—what’s called a “best-of-both-worlds” approach. This is achieved through three key innovations: a novel implicit exploration scheme, a method for controlling distribution drift without assuming bounded delays, and a procedure that links the standard regret to the regret calculated with the delayed and potentially skipped observations. The resulting algorithm offers significant improvements in terms of robustness and performance compared to previous methods.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on bandit algorithms with delayed feedback, a common challenge in various real-world applications. It offers a novel solution to improve the robustness and efficiency of these algorithms, thus opening new avenues for practical applications and further research in online learning. The best-of-both-worlds approach is particularly relevant, offering improved performance across stochastic and adversarial settings. It addresses critical limitations in existing research, focusing on dealing with outliers and excessive delays. The findings of this study are important for advancing our understanding and solutions for the class of online learning problems.

Visual Insights#

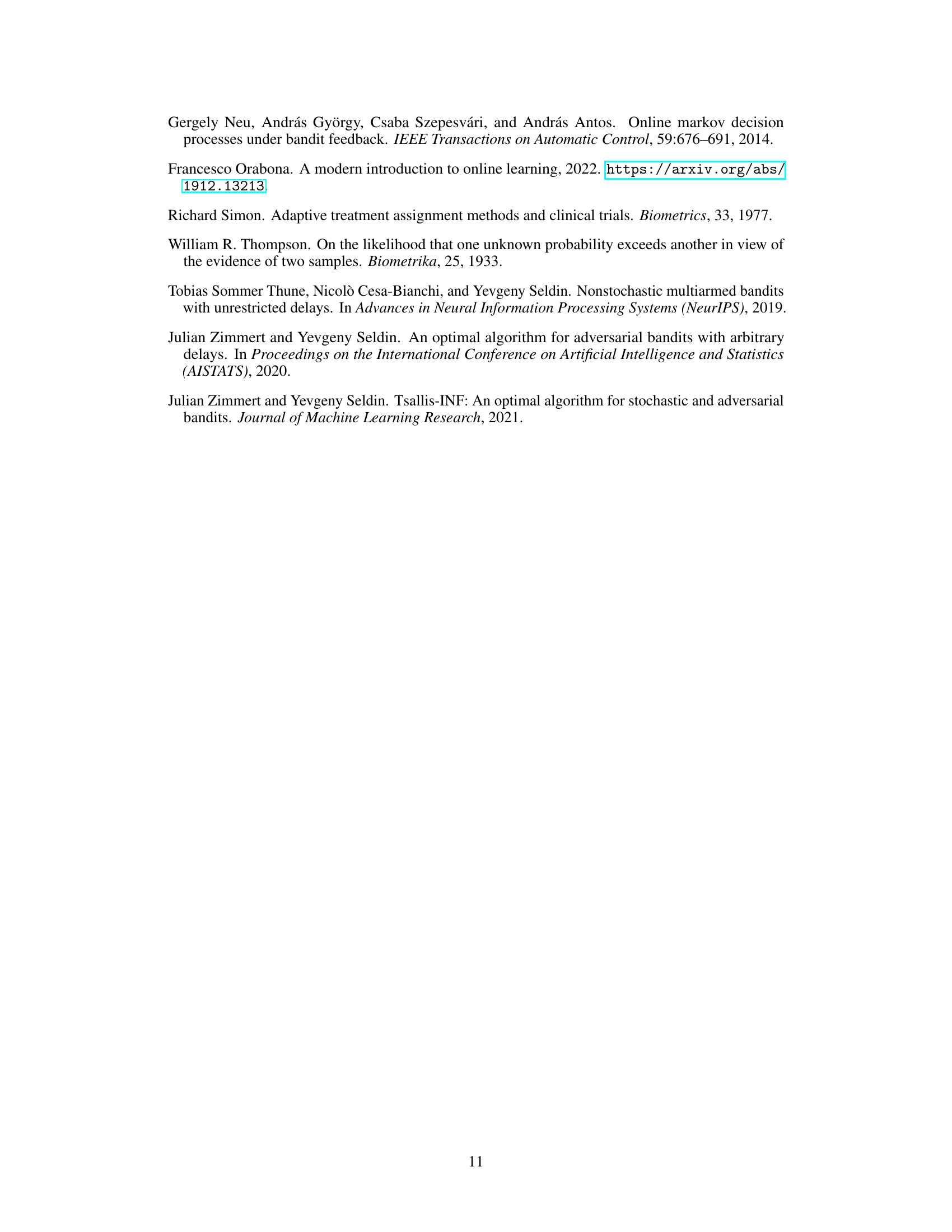

This algorithm is a best-of-both-worlds modification of the adversarial FTRL algorithm with a hybrid regularizer. It incorporates three key innovations: biased loss estimators (implicit exploration), an adjusted skipping threshold, and a novel control of distribution drift under highly varying delays. The algorithm maintains a set of skipped rounds, a cumulative count of active outstanding observations, and a vector of cumulative observed loss estimates. At each round, it samples an arm based on an FTRL distribution, updates loss estimates, counts outstanding observations, and applies a skipping threshold to manage excessive delays. The skipping threshold and loss estimates are dynamically adjusted based on the running count of outstanding observations.

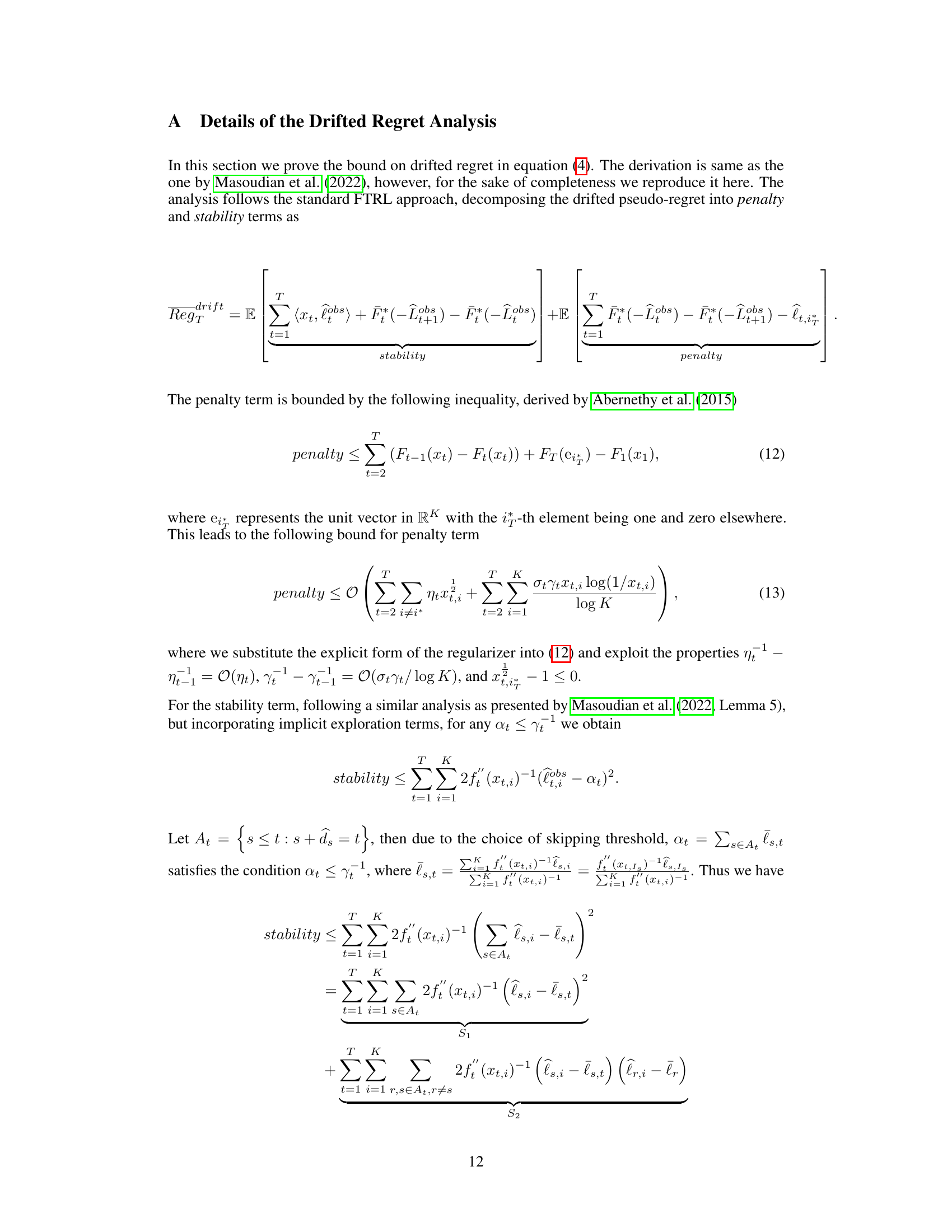

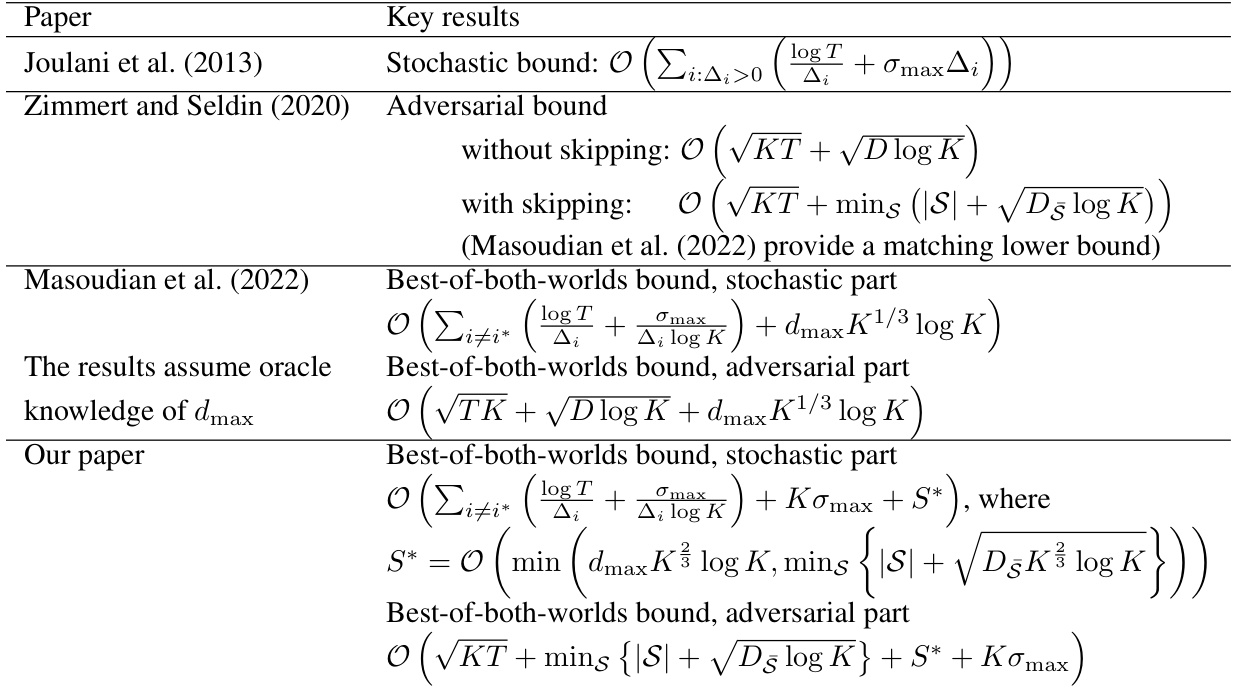

This table compares the key results (regret bounds) of the proposed algorithm with those of several state-of-the-art algorithms for bandits with delayed feedback. It highlights the differences in terms of assumptions (e.g., knowledge of maximal delay), regret bounds (stochastic and adversarial), and the use of skipping techniques to handle excessive delays. The notation used in the regret bounds is defined in the caption, allowing for a detailed comparison of the algorithm’s performance under different scenarios.

Full paper#