↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many machine learning and sampling problems involve optimizing nonconvex functions over probability distributions. Current optimization methods in the Wasserstein space often struggle with nonconvexity, lacking strong theoretical guarantees. Existing methods like the Forward-Backward Euler lack convergence analysis for these complex scenarios.

This paper introduces a novel optimization algorithm, the semi Forward-Backward Euler method, specifically designed to handle non-geodesically convex functions in the Wasserstein space. It provides convergence analysis and rates under various conditions (differentiable or nonsmooth). This is a significant contribution as it offers theoretical guarantees, previously unavailable, for a widely applicable class of problems. The results are validated through numerical experiments on challenging non-log-concave distributions, demonstrating the effectiveness of the proposed method.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working in optimization and sampling, particularly those dealing with nonconvex problems in probability spaces. It offers novel theoretical guarantees for a modified Forward-Backward Euler scheme, addressing a significant gap in the field. The findings are highly relevant to various applications including machine learning and Bayesian inference, opening up new avenues for algorithm design and analysis in nonconvex settings.

Visual Insights#

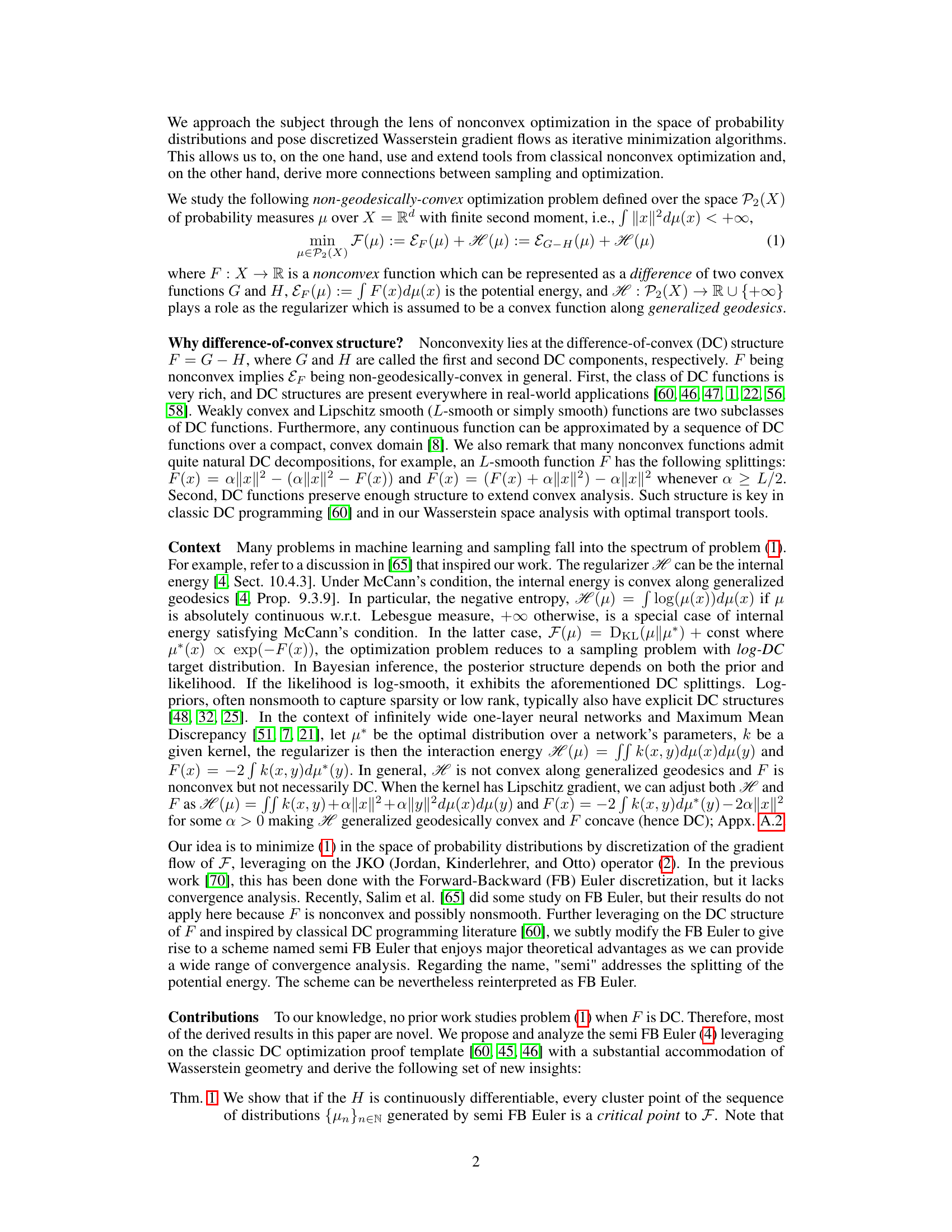

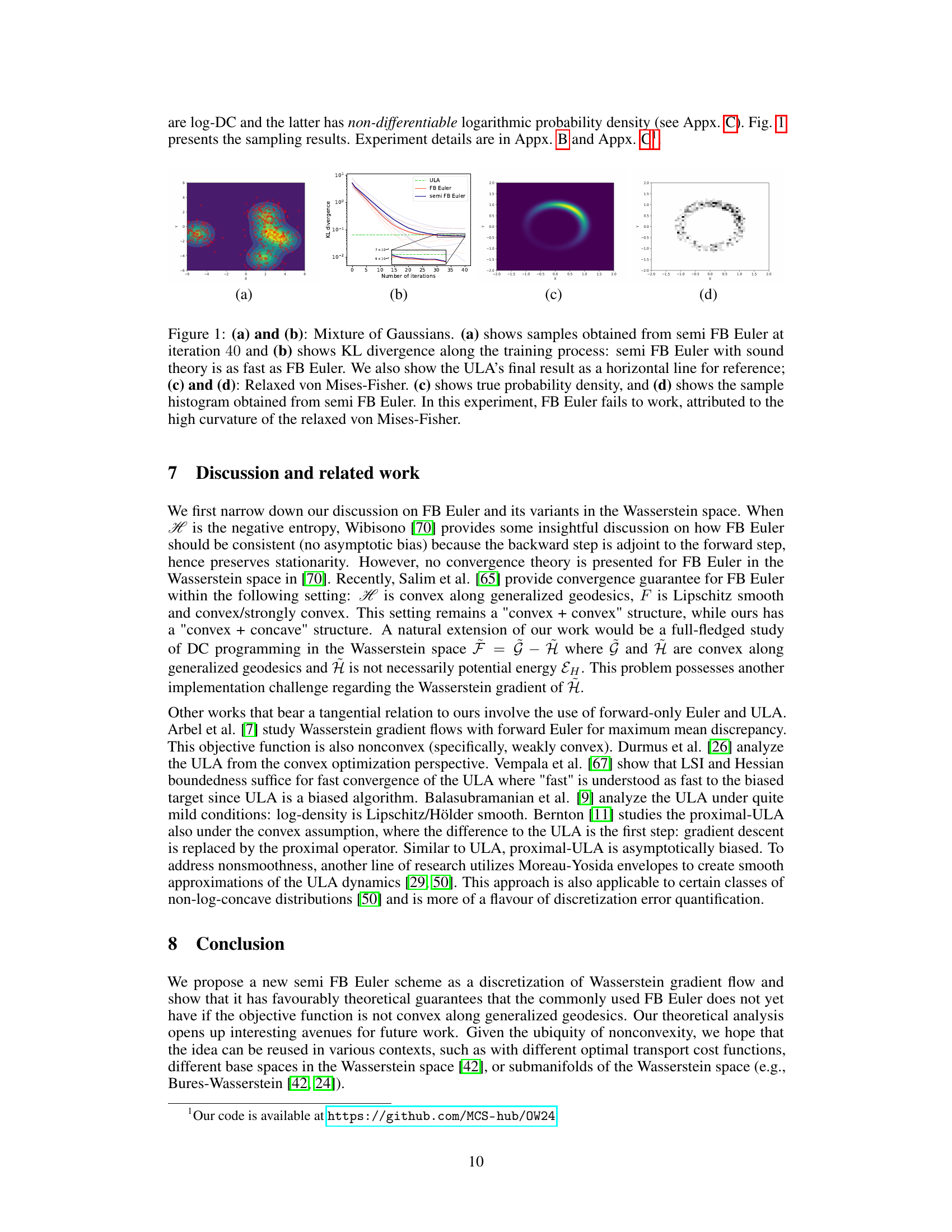

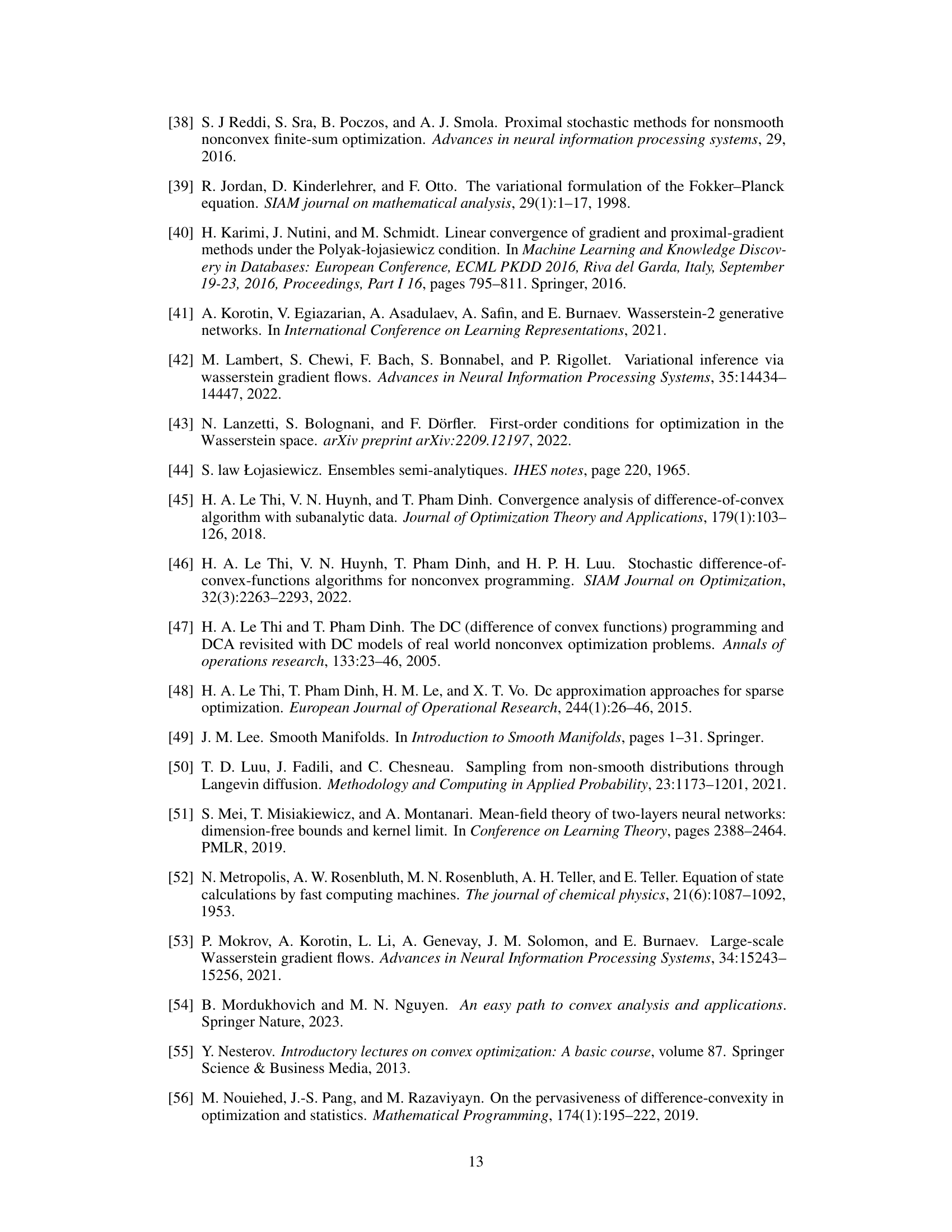

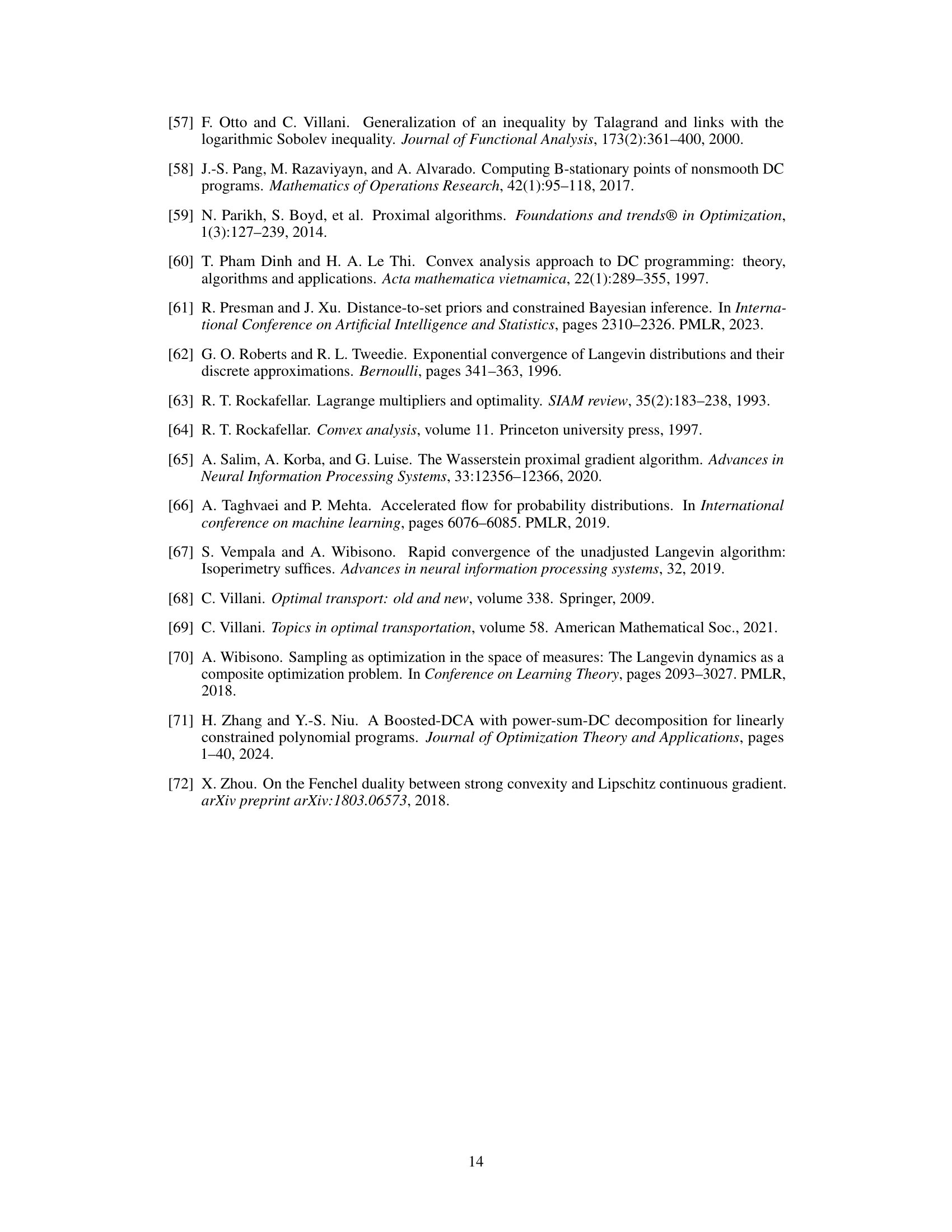

This figure contains four subfigures visualizing the results of the semi FB Euler algorithm applied to Gaussian Mixture and Relaxed von Mises-Fisher distributions. Subfigure (a) shows a heatmap of samples generated by the algorithm for Gaussian Mixture. Subfigure (b) is a plot showing KL divergence vs. number of iterations for semi FB Euler, FB Euler, and ULA algorithms, highlighting the faster convergence of the semi FB Euler method. Subfigures (c) and (d) show the true probability density and a histogram of samples obtained by the semi FB Euler algorithm, respectively, for the Relaxed von Mises-Fisher distribution; FB Euler failed for this case.

Full paper#