↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Many machine learning problems involve reward distributions that aren’t easily modeled using traditional methods. Existing work on generalized linear bandits often assumes simplified distributions like subgaussian, which are less realistic in many applications. This limits the applicability and accuracy of theoretical guarantees.

This paper tackles this limitation by proving that generalized linear bandits with subexponential reward distributions are self-concordant. Using this property, it develops novel second-order regret bounds that are both more accurate and applicable to a much wider range of problems. These bounds are free of an exponential dependence on problem parameters, a significant improvement over previous results. The findings extend to a broader class of problems including Poisson, exponential, and gamma bandits.

Key Takeaways#

Why does it matter?#

This paper significantly advances research on generalized linear bandits by providing the first regret bounds for problems with subexponential tails, broadening the applicability to a wider range of real-world problems and improving the accuracy of theoretical guarantees. It establishes the self-concordance property for a broad class of natural exponential families, enabling the use of efficient optimization techniques. The findings are highly relevant to researchers working on optimization and statistical estimation within the bandit framework.

Visual Insights#

This figure shows the result of numerical simulations performed on exponential bandits using the OFU-GLB algorithm. The plot displays the average regret (the total cumulative shortfall of the mean reward of the arms the learner chose relative to the optimal choice) across 60 independent runs, along with standard deviation error bars, plotted against the time horizon (number of rounds). The plot visually demonstrates the sublinear growth of regret over time, consistent with the theoretical findings of the paper.

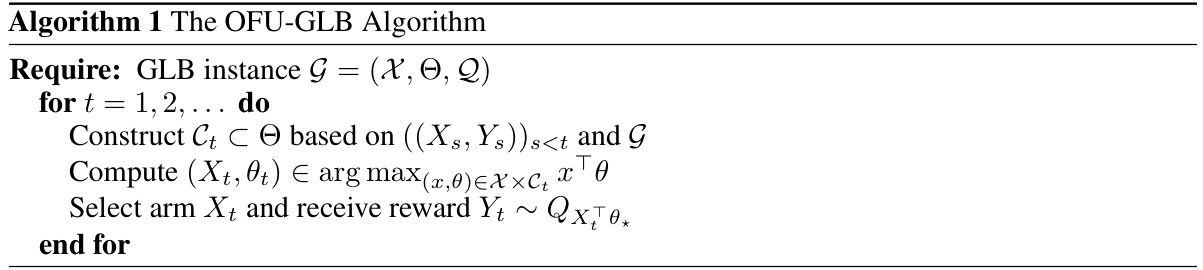

This algorithm is an optimistic algorithm for generalized linear bandits, where the learner selects an arm that maximizes the reward in the confidence set. The algorithm’s confidence set is constructed using past information and the properties of the generalized linear bandit model.

Full paper#