↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Person re-identification (ReID) systems, which identify individuals across different cameras and time points, are increasingly using multiple modalities (like visible and infrared images). However, current research largely focuses on single-modality systems, leaving cross-modality ReID vulnerable. This is a significant security concern because attackers could easily mislead these systems.

This paper introduces a new method called the Cross-Modality Perturbation Synergy (CMPS) attack to address this issue. CMPS is a universal perturbation attack that works across different modalities by cleverly using gradient information to create adversarial examples. Experiments on multiple datasets showed that CMPS is highly effective in fooling cross-modality ReID systems. The research is important because it identifies a critical gap in current ReID security research and provides a new benchmark for testing and improving the robustness of cross-modality ReID systems.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in computer vision and security, particularly those working on person re-identification. It highlights a critical vulnerability in cross-modality ReID systems, a field rapidly gaining importance due to the increasing use of diverse sensor data in real-world applications. By demonstrating the effectiveness of a novel attack method, the research motivates further development of more robust and secure ReID models and promotes critical discussion on security implications.

Visual Insights#

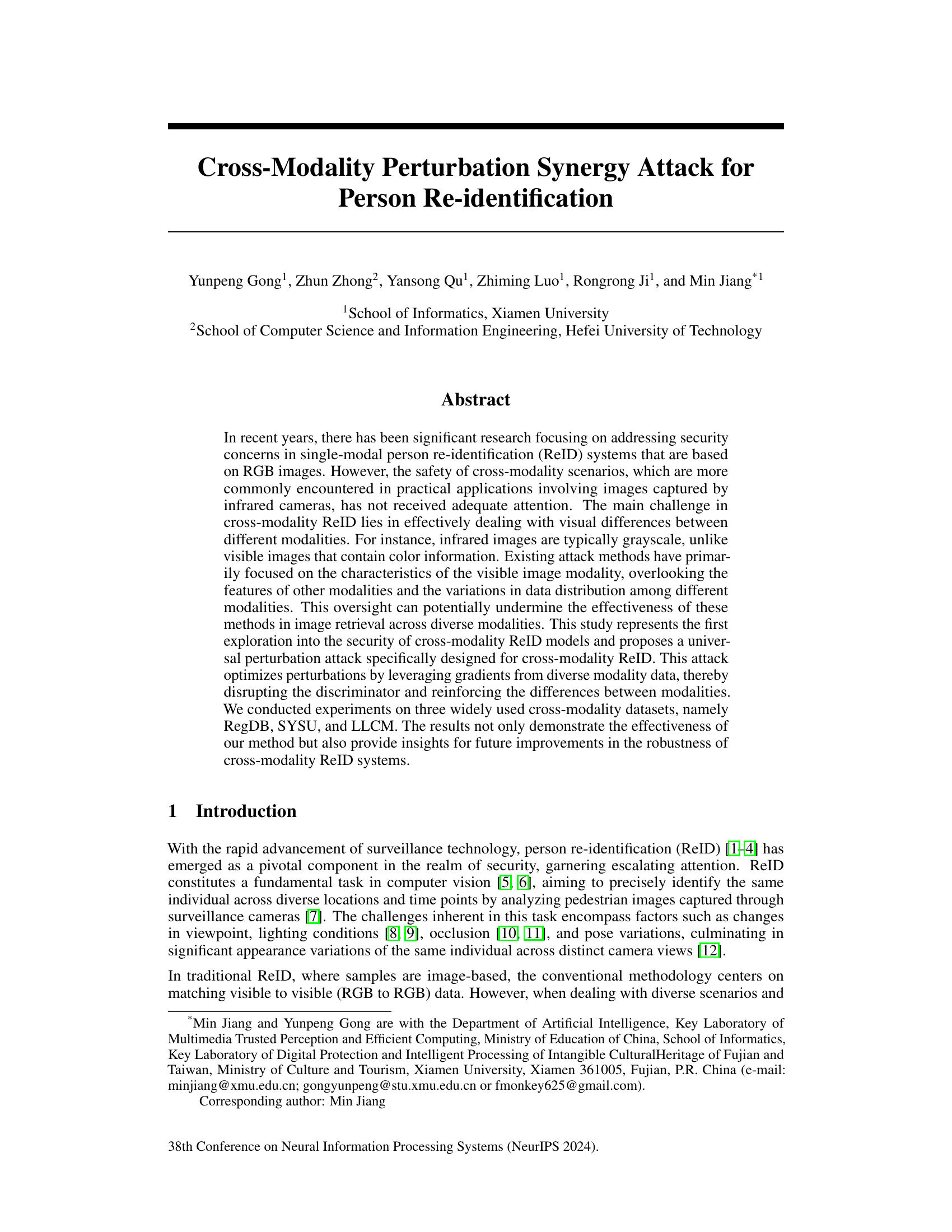

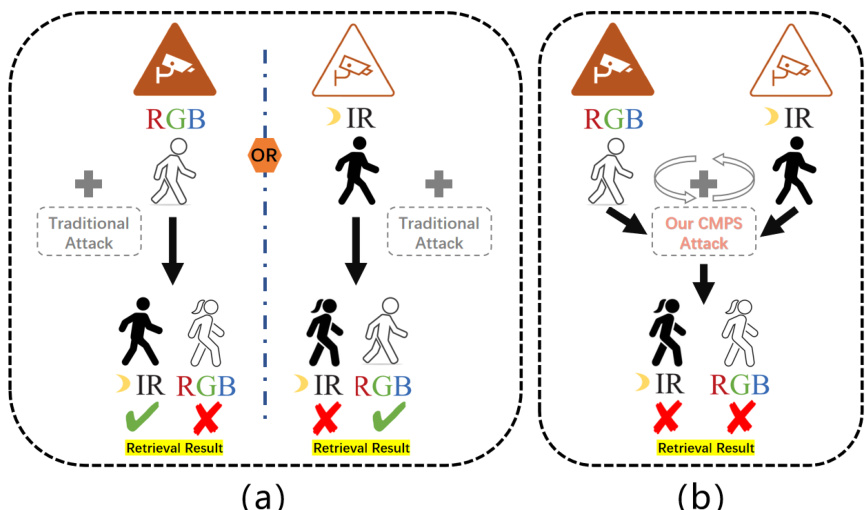

This figure compares traditional single-modality attack methods with the proposed Cross-Modality Perturbation Synergy (CMPS) attack. Traditional methods, shown in (a), are ineffective in cross-modality scenarios because they fail to account for the differences between modalities and cannot mislead retrieval results across all modalities simultaneously. In contrast, CMPS (b) leverages an intrinsic mechanism that accounts for these differences, effectively associating multiple modalities and achieving simultaneous misleading results in both modalities.

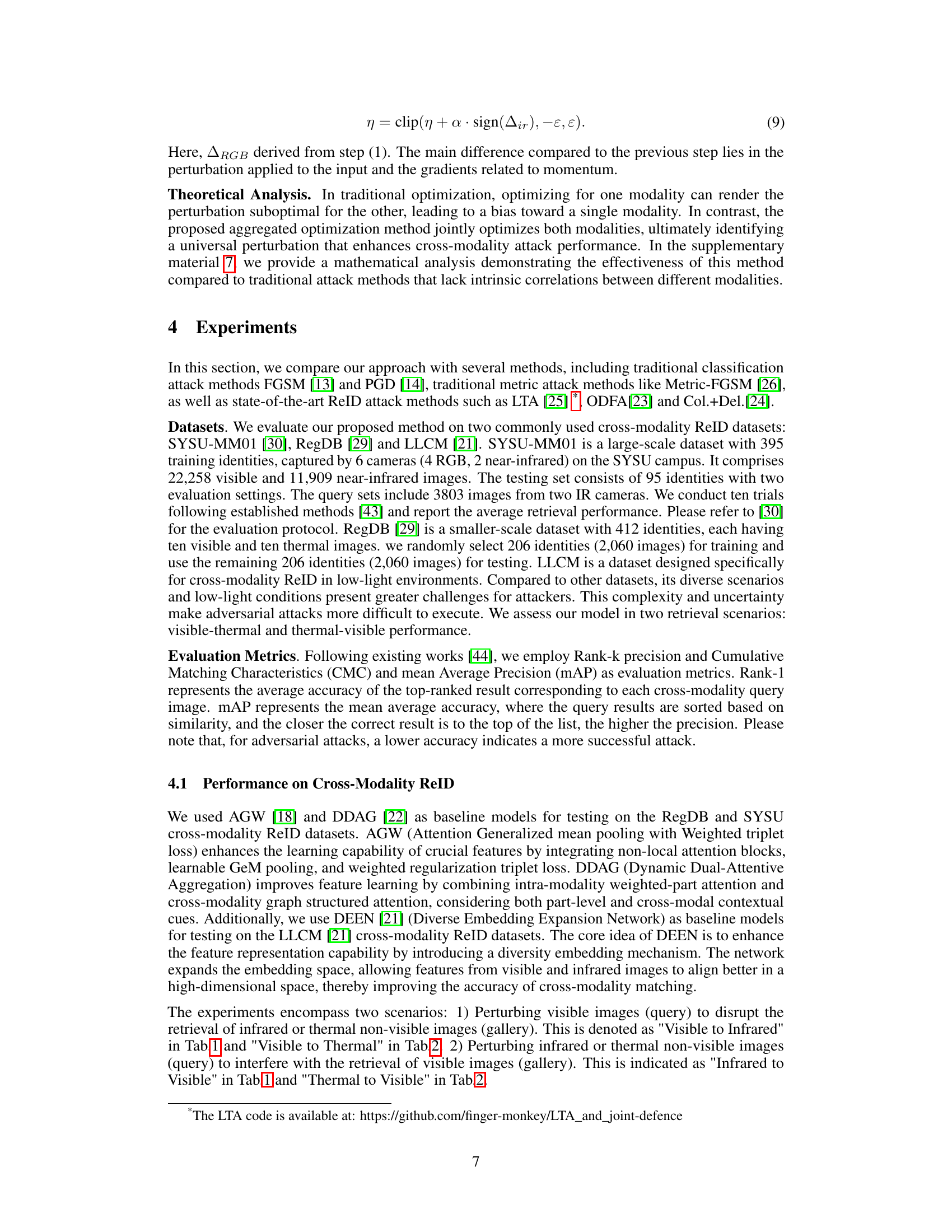

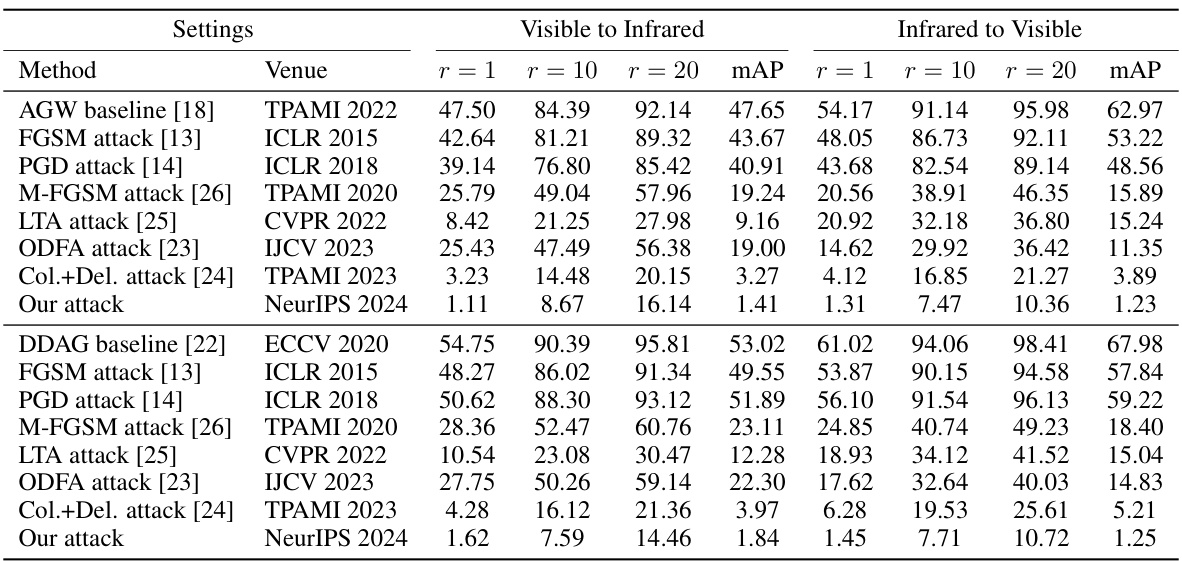

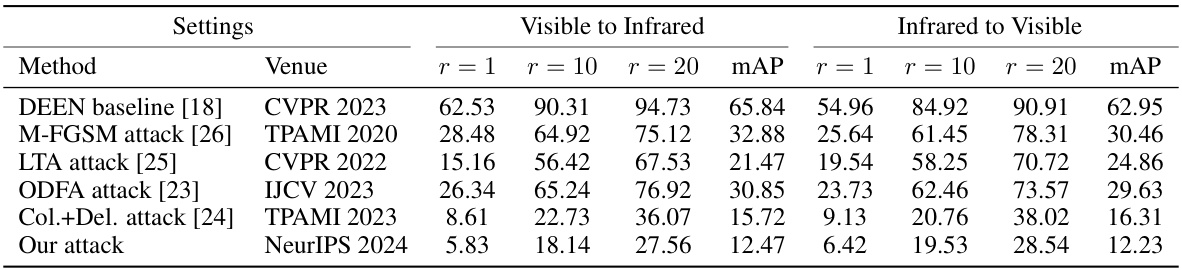

This table presents the results of attacking cross-modality person re-identification (ReID) systems using different attack methods on the SYSU dataset. It shows the rank-1 accuracy, rank-10 accuracy, rank-20 accuracy, and mean average precision (mAP) for both scenarios: visible images querying infrared images (‘Visible to Infrared’) and infrared images querying visible images (‘Infrared to Visible’). The all-search and indoor-search modes are specified for each scenario respectively.

In-depth insights#

Cross-Modal Attacks#

Cross-modal attacks, targeting person re-identification (ReID) systems, pose a significant challenge due to the inherent differences in visual information across modalities like RGB and infrared. Existing single-modal attack methods often fail to generalize effectively to cross-modal scenarios. A successful cross-modal attack requires strategies to simultaneously mislead the system using perturbations that effectively bridge the gap between the distinct visual characteristics of different modalities. This necessitates an understanding of shared features and the impact of modality-specific variations on the model’s decision-making process. The development of universal perturbations that can successfully deceive ReID models across modalities requires novel methods such as synergistic optimization, incorporating multiple modality gradients, or using transformations to standardize the visual representations. The success of these approaches will largely depend on factors such as the specific modality, the model architecture, and the training data. Robustness against cross-modal attacks is crucial for the security and reliability of ReID systems, particularly in real-world applications where multiple sensor modalities are commonly used. Therefore, robust and generalized defense mechanisms are urgently needed to mitigate the risks of cross-modal attacks.

CMPS Framework#

The CMPS (Cross-Modality Perturbation Synergy) framework is a novel approach to crafting adversarial attacks against cross-modal person re-identification (ReID) systems. Its core strength lies in its synergistic optimization strategy, leveraging gradient information from multiple modalities (e.g., RGB and infrared) simultaneously to generate a universal perturbation. This contrasts sharply with traditional single-modality attack methods, which often fail to account for the significant visual discrepancies across modalities. The CMPS approach cleverly incorporates cross-modality triplet loss to ensure feature consistency across modalities, thereby enhancing the generality and effectiveness of the perturbation. Furthermore, the use of cross-modality attack augmentation (grayscale image transformations) helps to standardize visual representation and facilitate learning of modality-agnostic perturbations. The framework’s iterative optimization process involves extracting gradient information from one modality, applying the perturbation to another, and reiterating to optimize the universal perturbation. The result is a robust attack capable of successfully deceiving a wide range of cross-modal ReID models, highlighting vulnerabilities in existing systems and prompting the need for more robust and secure models.

Synergy Effects#

The concept of “Synergy Effects” in the context of a research paper likely refers to the combined effect of multiple factors or methods being greater than the sum of their individual parts. This could manifest in various ways. For instance, a synergistic attack strategy might combine different adversarial perturbation techniques to achieve a higher attack success rate than any individual technique could achieve alone. It may also refer to a combined modality approach, where using multiple sensory inputs (e.g., visible and infrared images) results in improved performance. The analysis of synergy effects would require a detailed investigation into how the combination of methods produces enhanced results compared to individual methods, potentially revealing underlying mechanisms and interactions crucial for optimization. Understanding synergy is vital, as it allows for the development of more effective and robust systems, which in the case of adversarial attacks would require more resilient defenses. The discussion of these effects should include both qualitative and quantitative analyses, demonstrating how the interaction of elements produces the enhanced results and explaining why such synergy occurs. Identifying the conditions under which synergy is most pronounced is also essential for improving system design and defense strategies.

Robustness Limits#

Analyzing the robustness limits of a system requires a multifaceted approach. Understanding the vulnerabilities inherent in the system’s architecture, algorithms, and data is crucial. For example, adversarial attacks exploit weaknesses in the model’s decision boundary or gradient calculations. Data quality and distribution play a critical role, with noisy or biased data leading to reduced robustness. The chosen evaluation metrics also influence the perceived robustness, with some metrics providing a more favorable view than others. Quantifying the robustness limits often involves carefully designing and conducting experiments to evaluate performance under various stress tests, such as adding noise to inputs, altering environmental conditions, or employing adversarial examples. Ultimately, establishing robustness limits necessitates a thorough exploration of these factors to provide a holistic and comprehensive evaluation.

Future Research#

Future research directions stemming from this work on cross-modality person re-identification (ReID) attacks could focus on several key areas. Improving the transferability of attacks across different ReID models and datasets is crucial, moving beyond current limitations that hinder generalization. Exploring more sophisticated attack strategies beyond gradient-based methods, perhaps using evolutionary algorithms or other advanced optimization techniques, should be investigated to overcome limitations of current gradient-based attacks. Developing robust defense mechanisms against these attacks is paramount; research should explore both algorithmic improvements to ReID models and data augmentation strategies. Finally, investigating real-world scenarios beyond the scope of current datasets, accounting for varying lighting conditions, occlusion, and diverse camera types, is necessary to ensure future security protocols are robust and effective in realistic deployments. The ethical implications of this research also warrant further exploration.

More visual insights#

More on figures

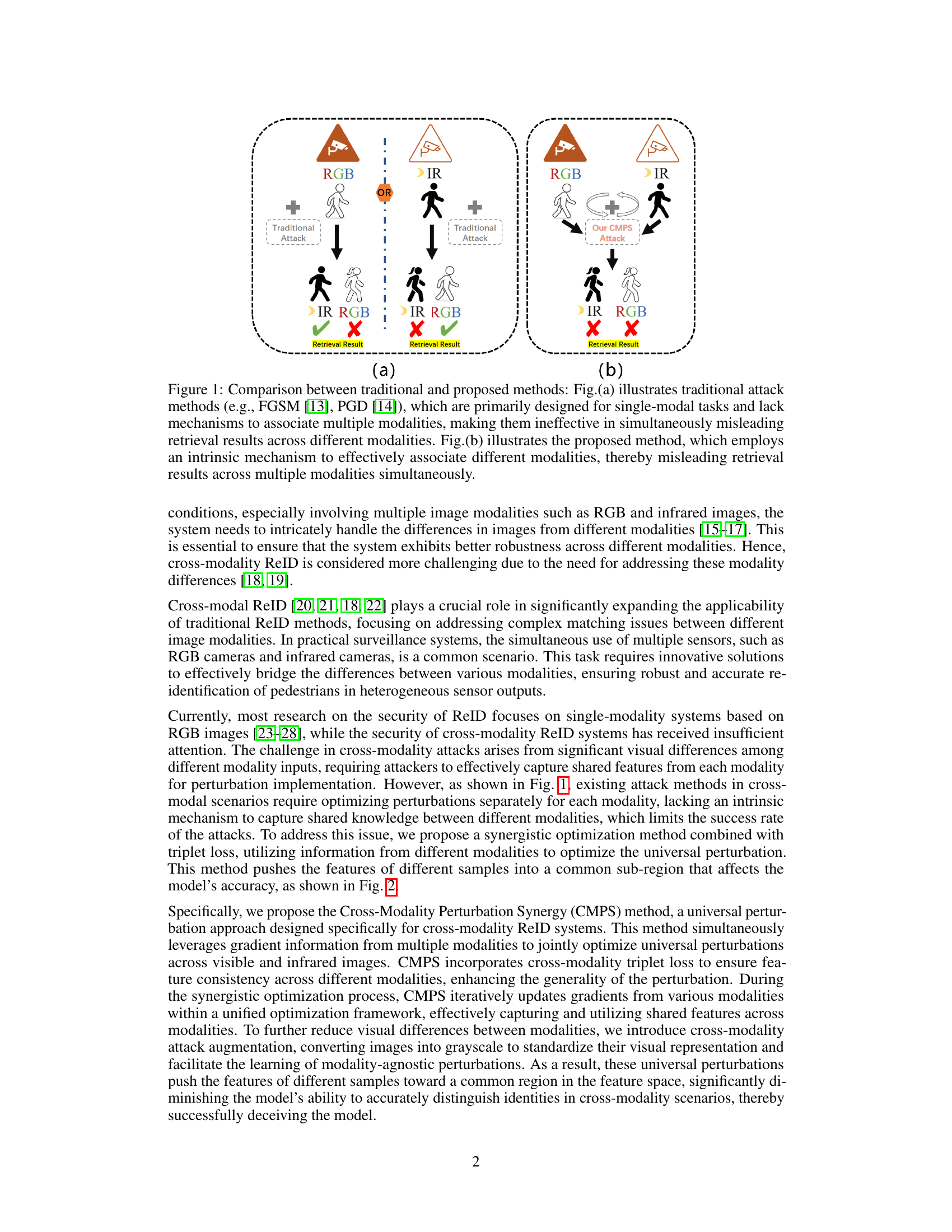

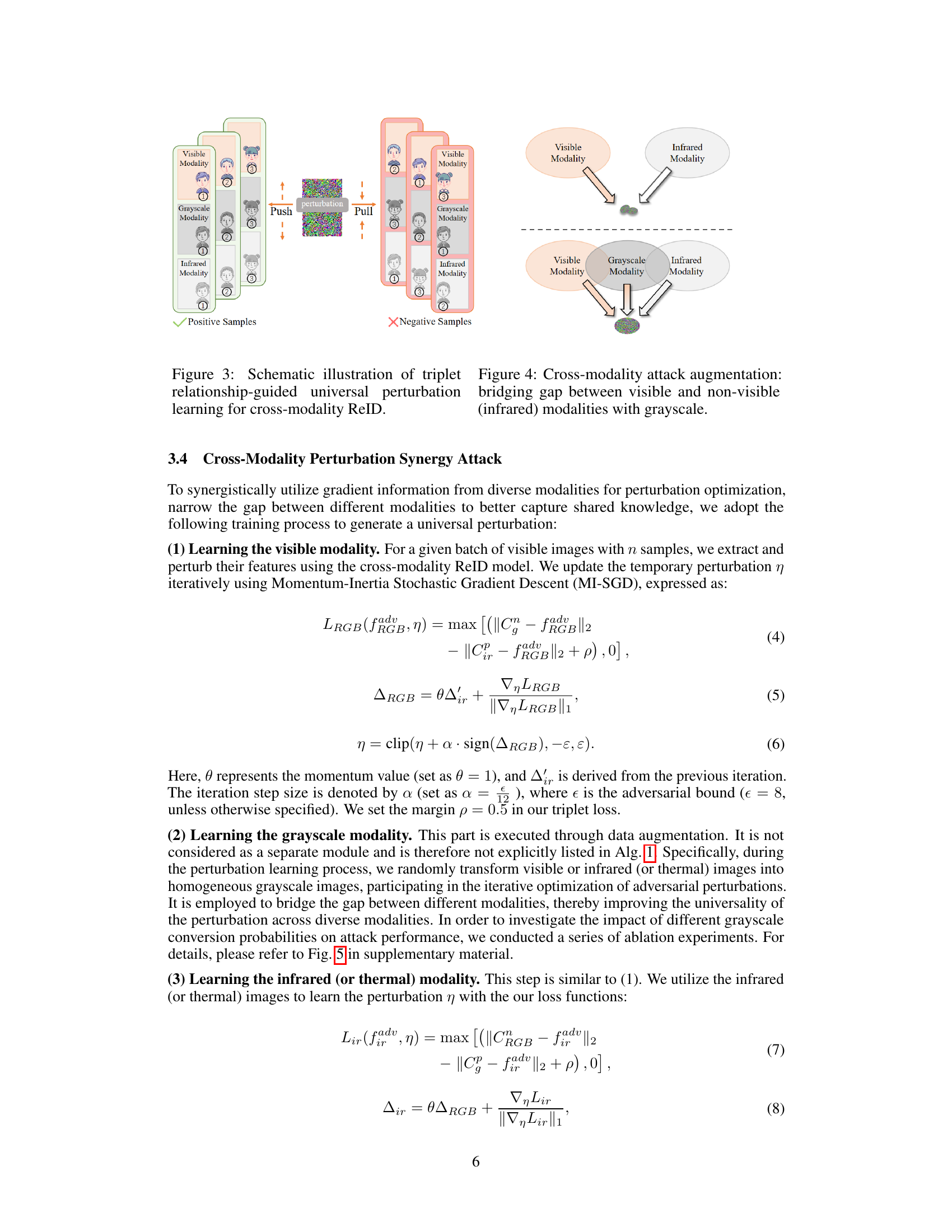

This figure illustrates the CMPS attack framework. It uses grayscale image transformations to reduce differences between modalities and iteratively learns a universal perturbation by aggregating feature gradients from different modalities. This shared knowledge between modalities enables more effective learning of cross-modal universal perturbations, pushing samples toward a common region and deceiving the model.

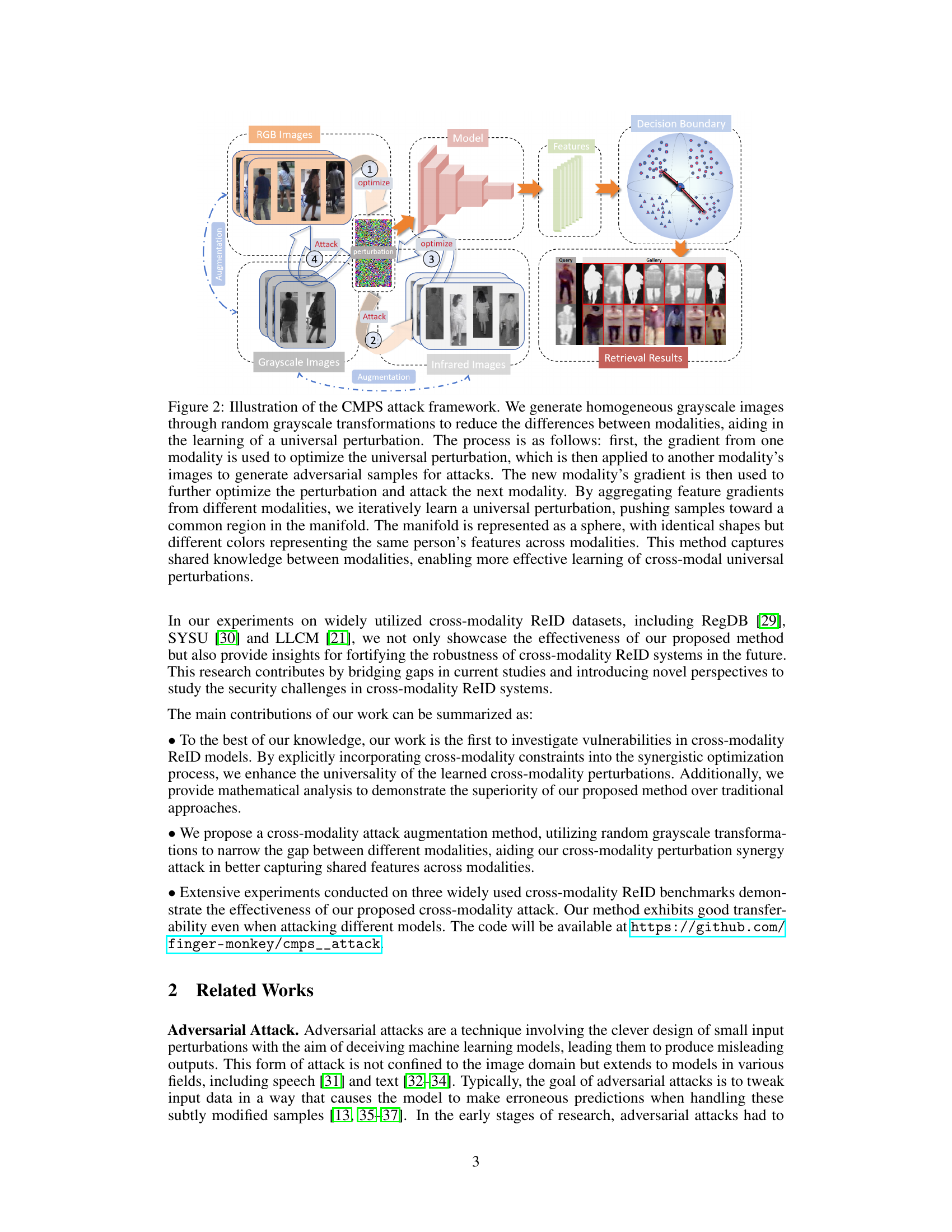

This figure illustrates the CMPS attack framework which uses a synergistic optimization method combined with triplet loss to learn universal perturbations. It leverages gradient information from multiple modalities to jointly optimize the perturbations. The process begins by generating homogeneous grayscale images to reduce differences between modalities, aiding the learning of a universal perturbation which is then iteratively optimized across different modalities. The goal is to push feature vectors of different samples towards a common region, deceiving the model.

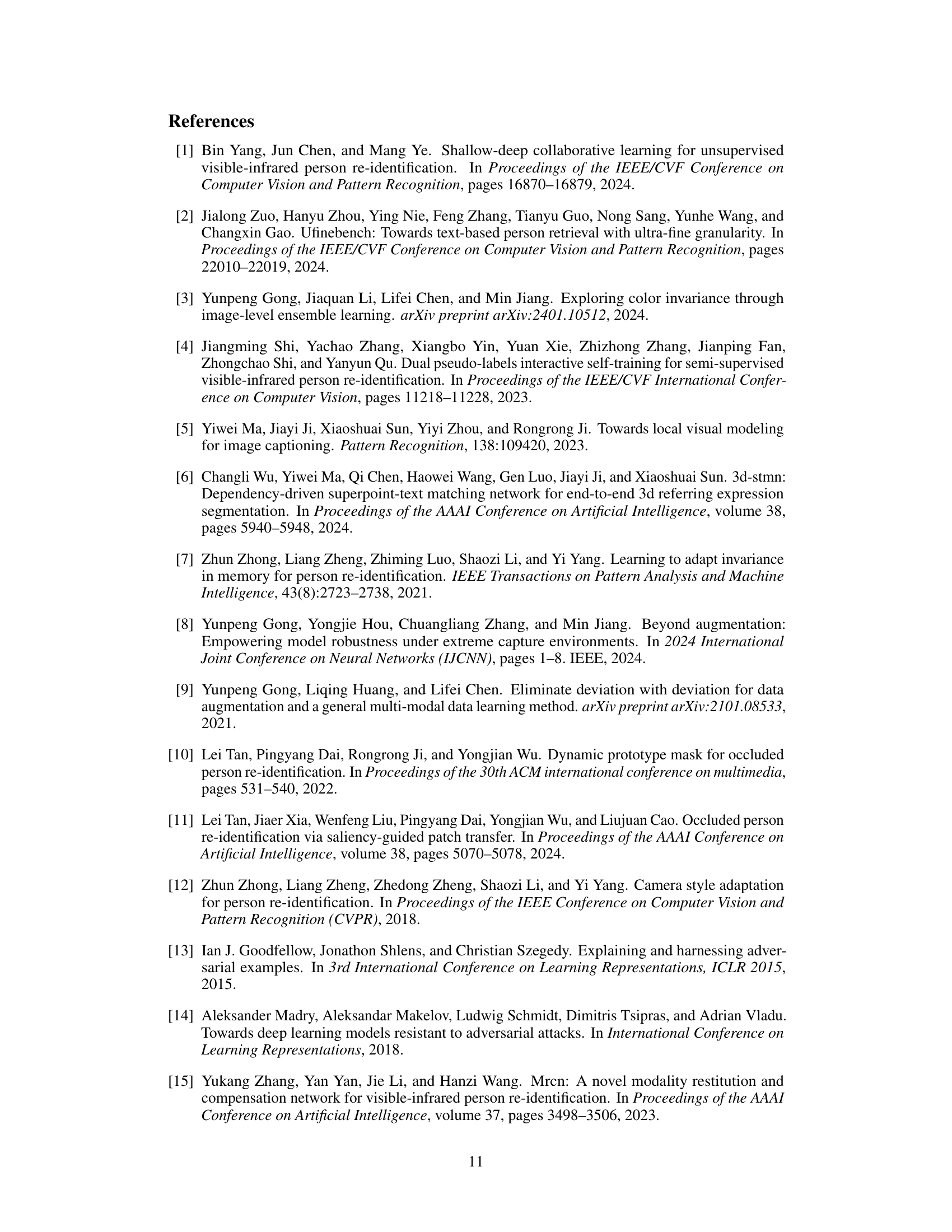

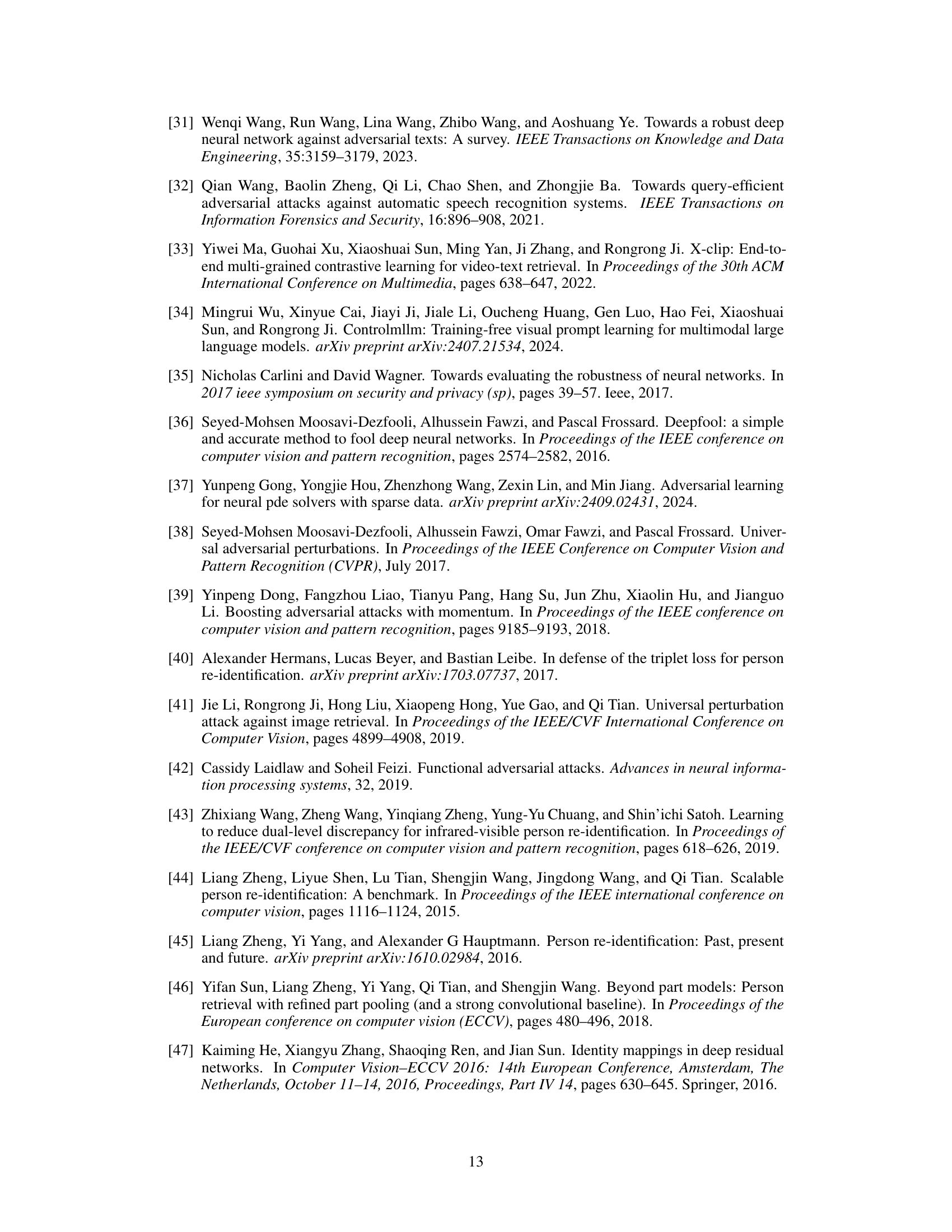

This figure shows the results of an ablation study evaluating the impact of different grayscale transformation probabilities on the attack performance. The lower the evaluation metrics (r=1, r=10, r=20, mAP), the higher the attack success rate. The experiment was conducted on the RegDB dataset using the AGW model as a baseline.

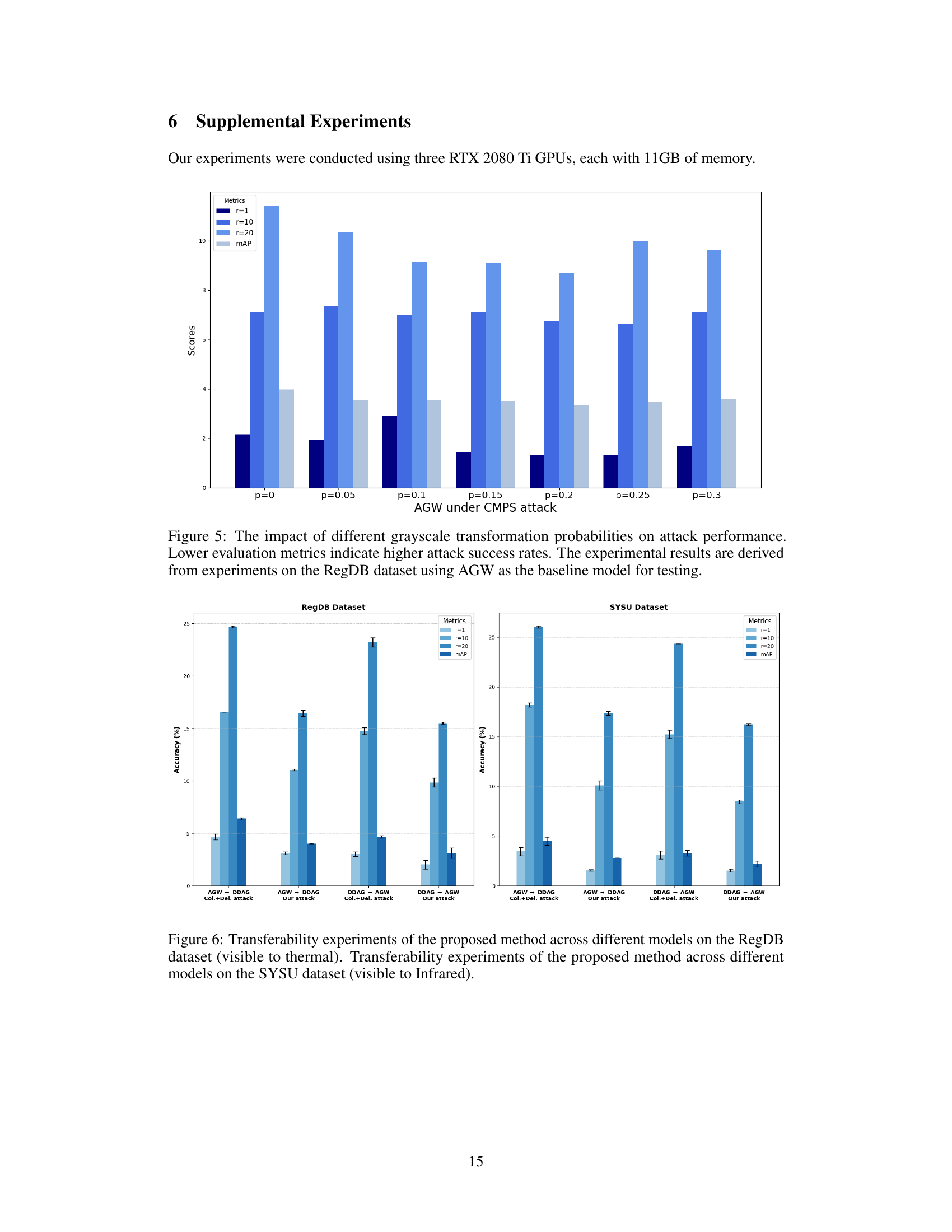

This figure demonstrates the transferability of the CMPS attack across different models and datasets. The left panel shows results for the RegDB dataset (visible to thermal), while the right panel presents results for the SYSU dataset (visible to infrared). Each bar represents the average accuracy across different metrics (rank-1, rank-10, rank-20, and mAP) for each model (AGW, DDAG, Col.+Del. attack, and the authors’ proposed method). The error bars show the standard deviation, illustrating the variability in performance. The results indicate the effectiveness of the universal perturbation learned by CMPS, which maintains high attack success rates even when transferred to different models.

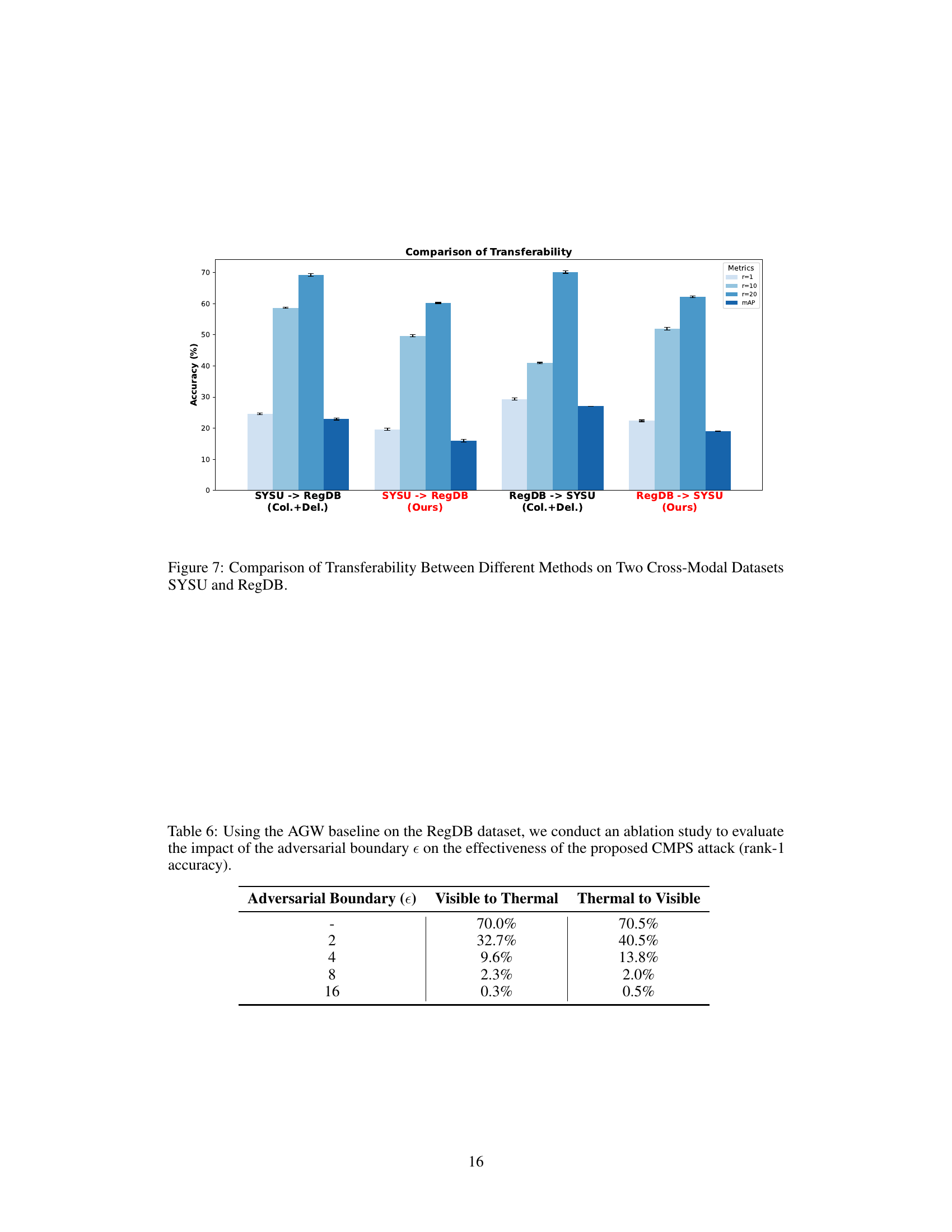

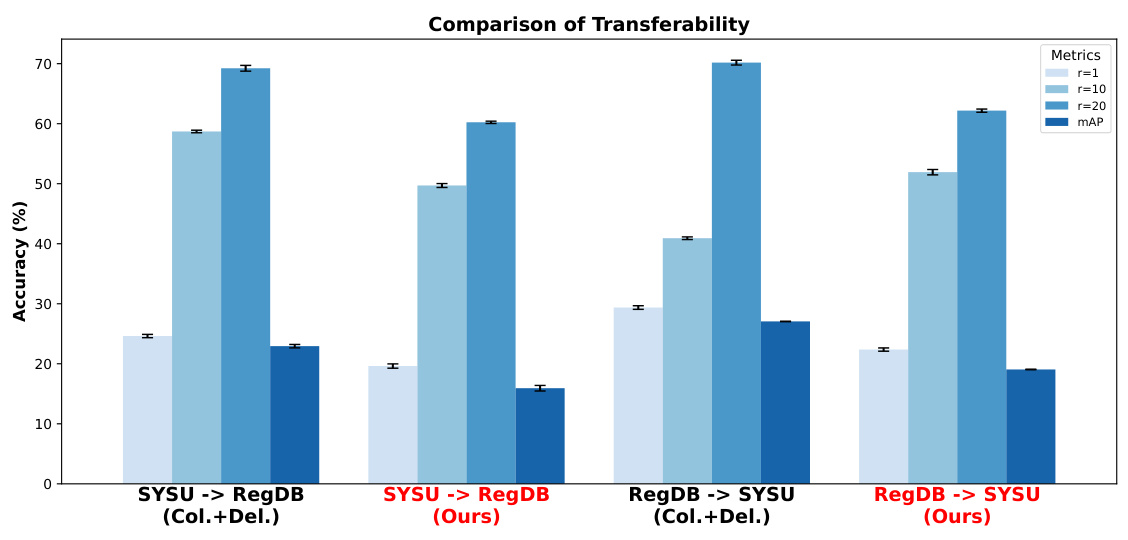

This figure compares the transferability of the proposed CMPS attack method and the Col.+Del. attack method across two cross-modal datasets, SYSU and RegDB. Transferability refers to the ability of an adversarial attack trained on one dataset to successfully attack models trained on a different dataset. The graph shows the rank-1, rank-10, rank-20 accuracies and mean average precision (mAP) for both methods when transferring attacks from SYSU to RegDB and vice-versa. Lower accuracy values indicate more successful attacks, hence better transferability. The results suggest that the CMPS method demonstrates superior transferability compared to Col.+Del.

More on tables

This table presents the results of attacking cross-modality person re-identification (ReID) systems on the SYSU dataset. It compares various attack methods (FGSM, PGD, M-FGSM, LTA, ODFA, Col.+Del., and the authors’ proposed CMPS attack) against two baseline models (AGW and DDAG). The table shows the rank-1 accuracy, rank-10 accuracy, rank-20 accuracy, and mean Average Precision (mAP) for both scenarios: visible images querying infrared images and infrared images querying visible images. Different search modes (all-search and indoor-search) were used depending on the query type.

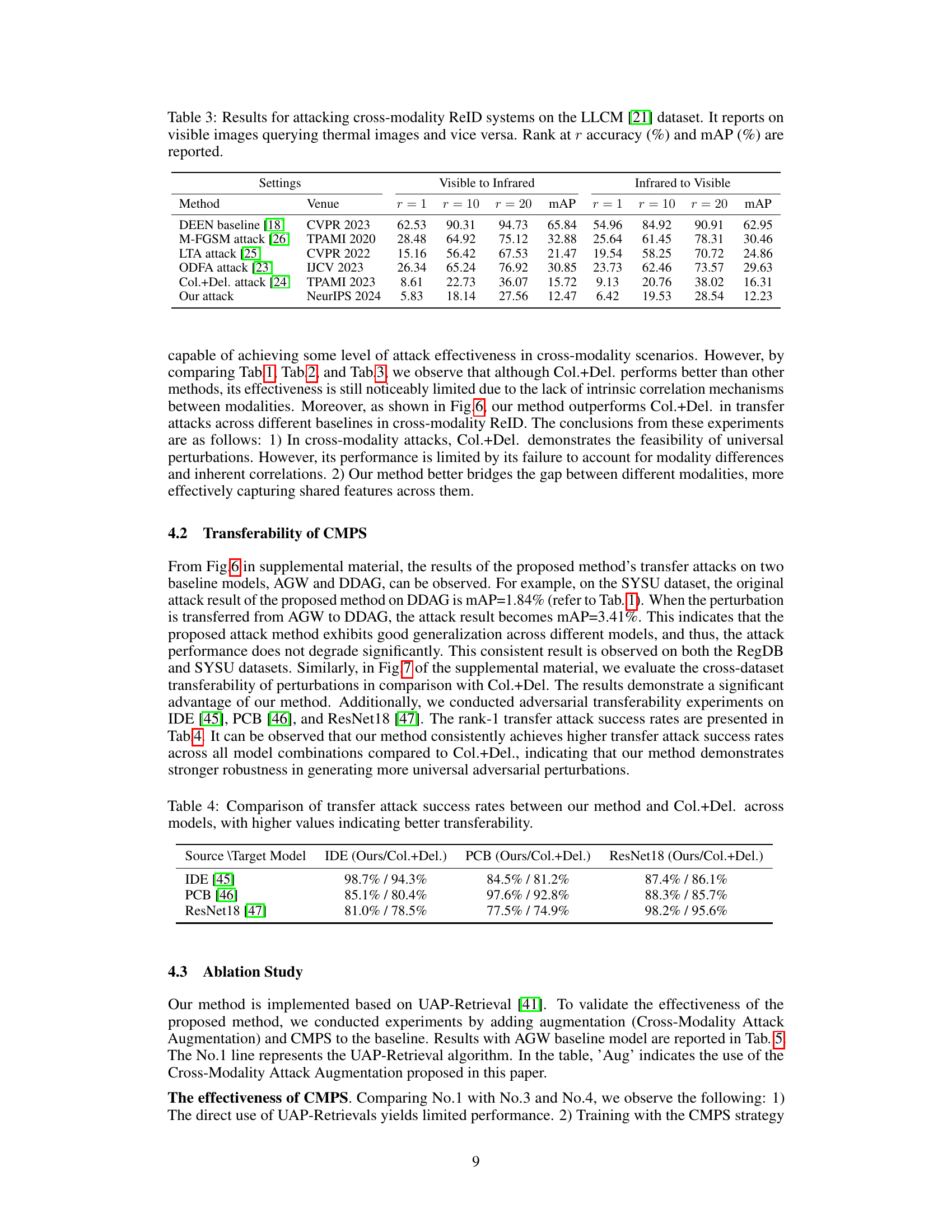

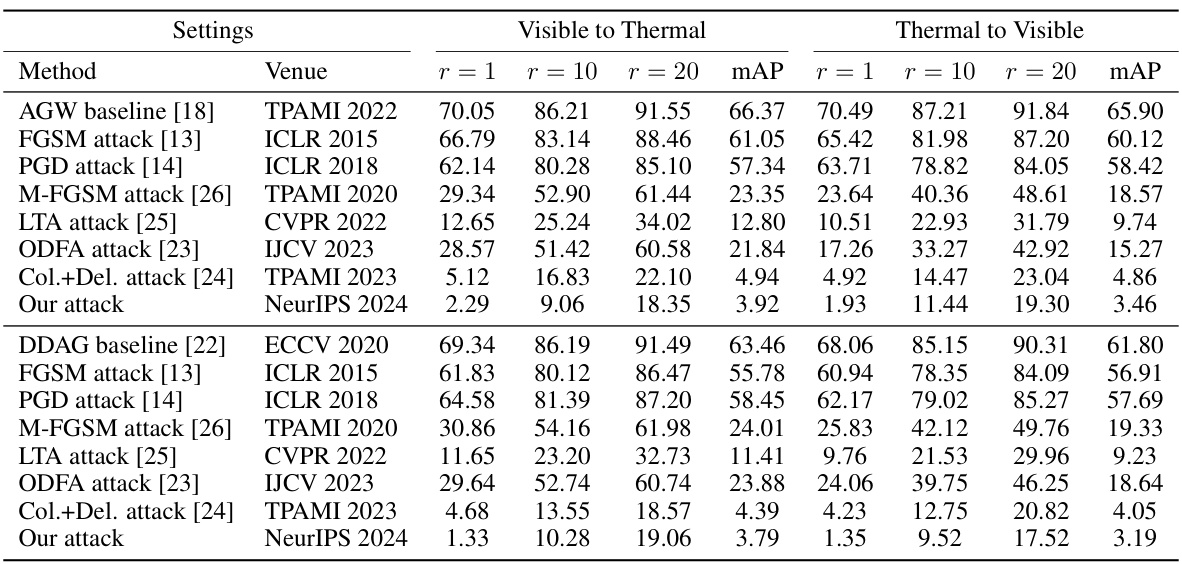

This table presents the results of attacking cross-modality person re-identification (ReID) systems using different attack methods on the LLCM dataset. The attacks involve using visible images to query thermal images and vice versa. The table shows the rank-1 accuracy, rank-10 accuracy, rank-20 accuracy, and mean Average Precision (mAP) for each attack method, providing a comprehensive evaluation of their effectiveness in deceiving the ReID system.

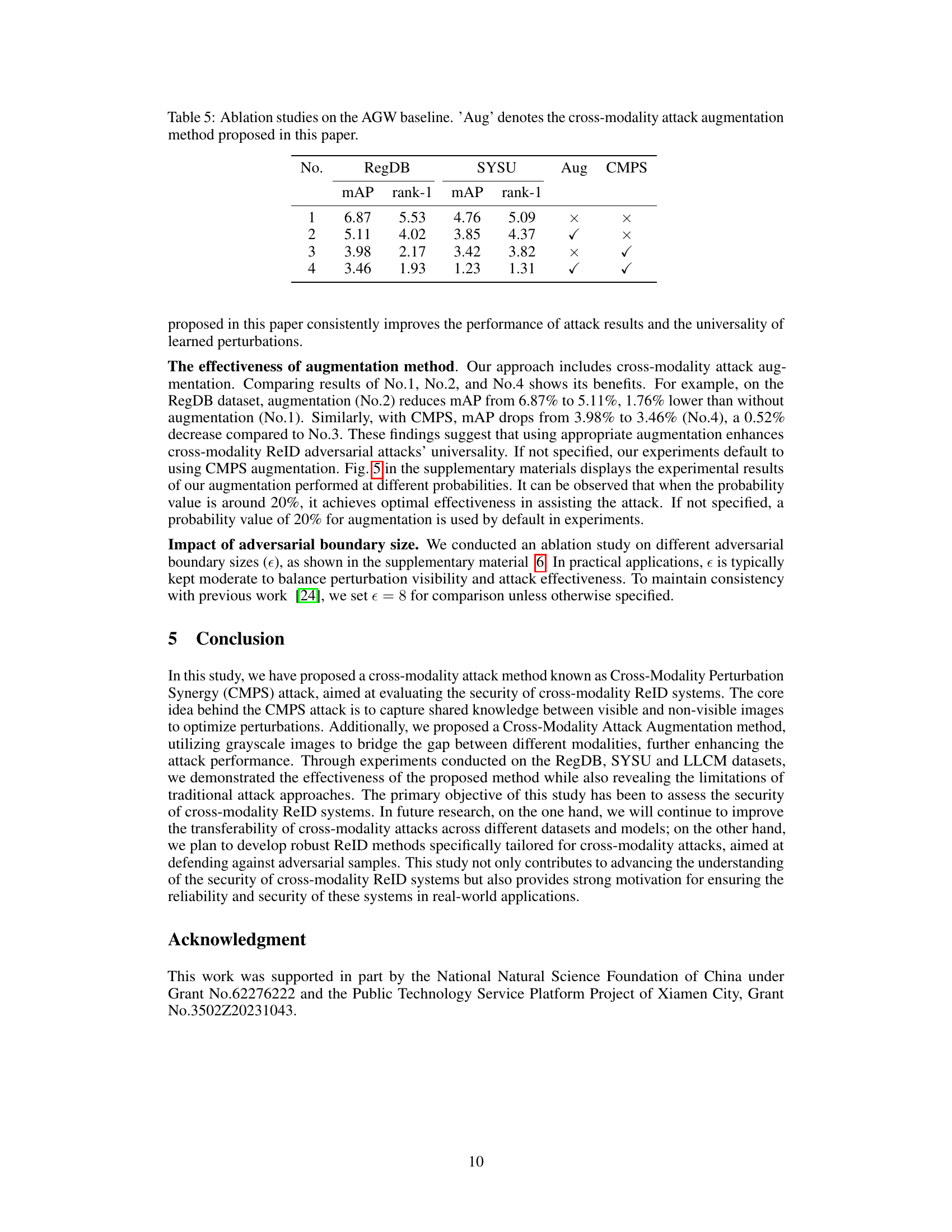

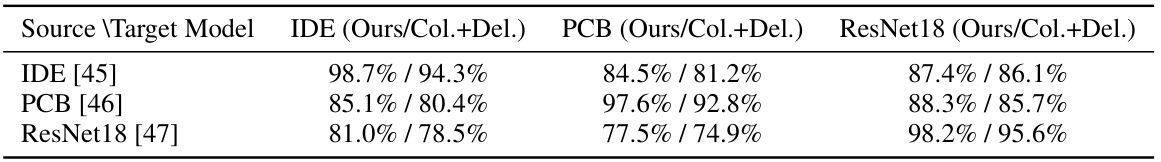

This table presents a comparison of the transfer attack success rates achieved by the proposed CMPS method and the Col.+Del. method across three different models (IDE, PCB, and ResNet18). Higher values indicate better transferability, meaning the attack is more effective when transferred from one model to another. The results show that the CMPS method consistently outperforms Col.+Del. across all model combinations, demonstrating its superior ability to generate universal adversarial perturbations.

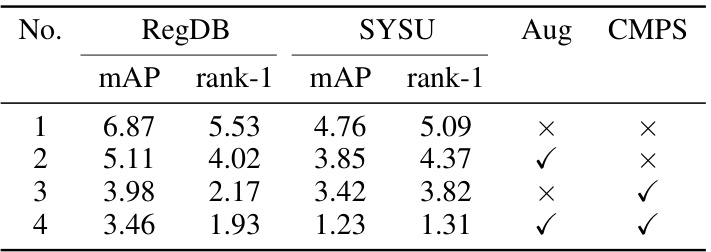

This table presents the ablation study results on the AGW baseline model. It compares four different attack strategies: 1) using only UAP-Retrieval, 2) adding cross-modality attack augmentation, 3) adding CMPS method, and 4) using both augmentation and CMPS. The results show the mAP and rank-1 accuracy on RegDB and SYSU datasets for each strategy, demonstrating the effectiveness of the proposed augmentation and CMPS methods in improving the overall attack performance.

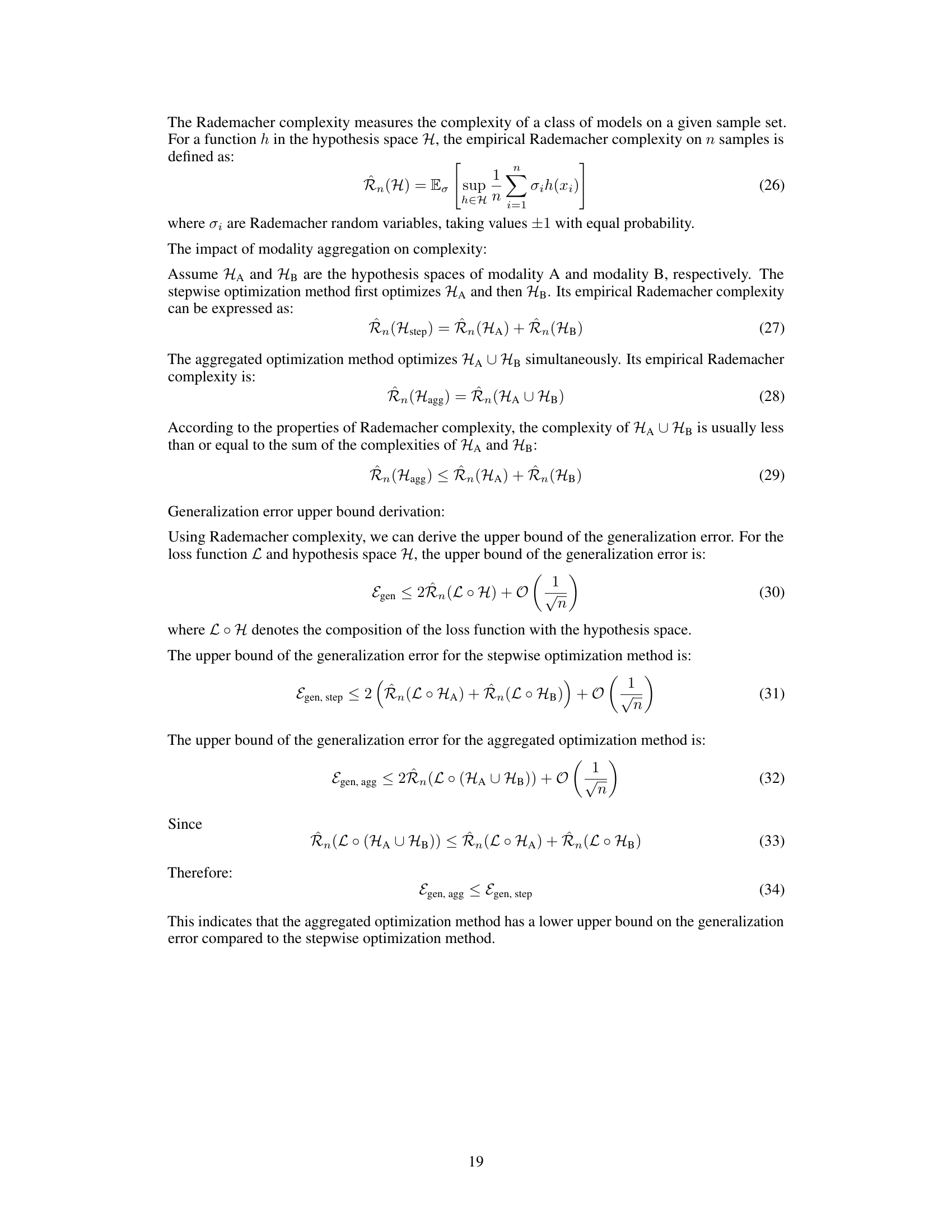

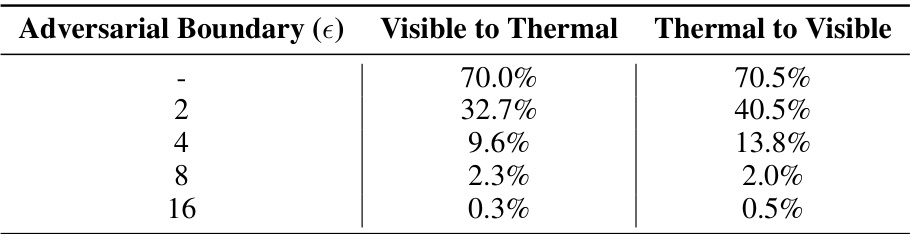

This table shows the results of an ablation study conducted on the RegDB dataset using the AGW baseline model. The study aimed to evaluate the impact of different adversarial boundary sizes (epsilon) on the effectiveness of the proposed CMPS attack, specifically focusing on the rank-1 accuracy. The table presents rank-1 accuracy results for two scenarios: ‘Visible to Thermal’ and ‘Thermal to Visible’, each with varying epsilon values.

Full paper#