↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Reinforcement learning (RL) often faces challenges in real-world applications due to the complexity of environments and their non-stationary nature. Adversarial linear mixture Markov Decision Processes (MDPs) model these scenarios, but existing algorithms struggle with unknown transitions and non-stationary rewards. This creates limitations in achieving optimal performance.

This research introduces a new algorithm that effectively addresses these challenges. By cleverly combining occupancy-measure-based and policy-based approaches, it achieves near-optimal dynamic regret. This means the algorithm performs exceptionally well even when rewards change adversarially and the environment’s dynamics are unknown. The algorithm’s optimality is rigorously proven, providing a strong theoretical foundation and setting a new benchmark for future research in this domain.

Key Takeaways#

Why does it matter?#

This paper is crucial because it presents the first near-optimal algorithm for adversarial linear mixture Markov Decision Processes (MDPs) with unknown transitions. This is a significant advancement in reinforcement learning, addressing a major challenge in handling complex, non-stationary environments. The minimax optimality proven by the authors adds to the algorithm’s significance, providing a benchmark for future research. It also opens doors for exploring further in computationally efficient algorithms while retaining optimality.

Visual Insights#

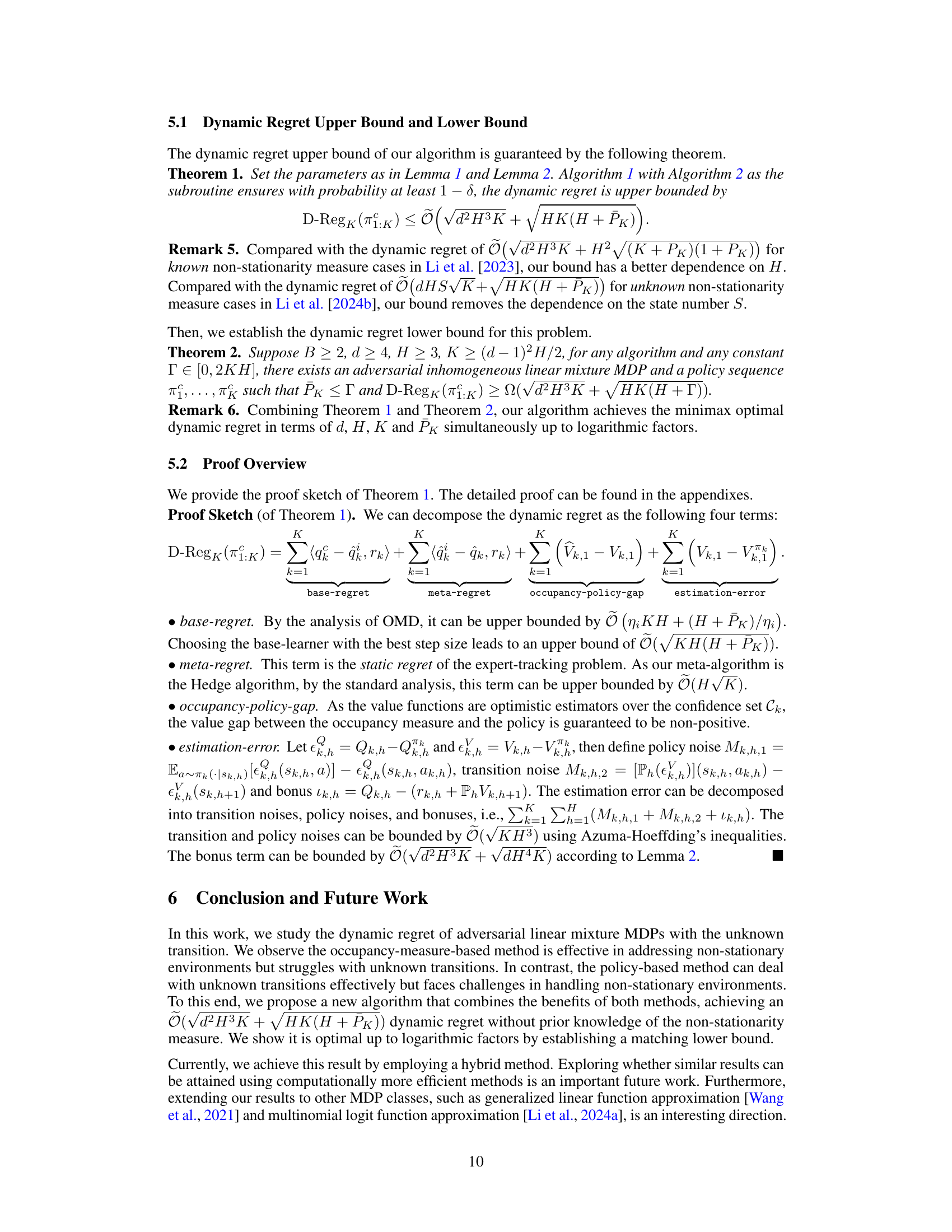

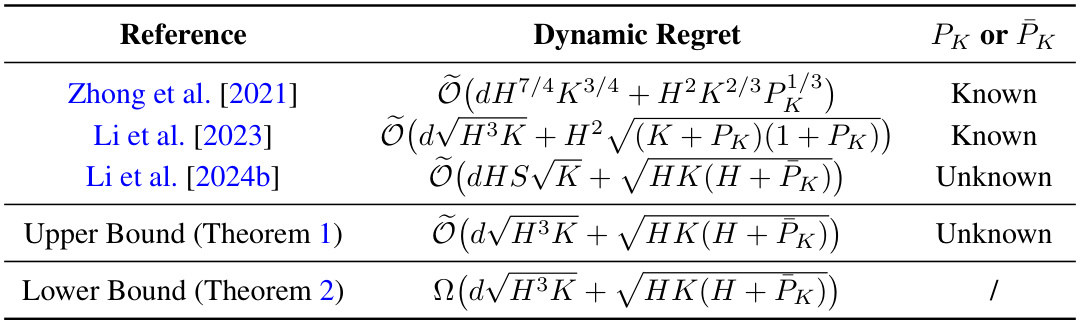

This table compares the dynamic regret guarantees of different algorithms for solving adversarial linear mixture Markov Decision Processes (MDPs) with unknown transition and full-information feedback. It shows the dependence of the dynamic regret on various factors such as feature dimension (d), episode length (H), number of episodes (K), and a non-stationarity measure (PK or PK). The table highlights the differences in the results obtained with different algorithms and whether prior knowledge of the non-stationarity measure was assumed.

Full paper#