TL;DR#

Online classification, a core machine learning problem, typically uses a worst-case analysis, assuming an adversarial data stream. This leads to pessimistic performance bounds, especially when the actual data is easier to learn. This paper addresses this issue by proposing a novel approach that incorporates predictions about future data points, enhancing the algorithm’s adaptability and effectiveness.

The paper introduces online learners that use black-box access to a predictor, a forecasting algorithm. The performance of these algorithms gracefully adapts to the quality of the predictor’s predictions. The key findings demonstrate that online learners can significantly outperform worst-case bounds when accurate predictions are available, matching optimal offline learners in ideal scenarios, while gracefully degrading to the standard worst-case bounds under poor prediction quality.

Key Takeaways#

Why does it matter?#

This paper is important because it significantly advances our understanding of online learning by moving beyond worst-case analyses. It introduces a novel framework that incorporates machine-learned predictions to improve online learning algorithms’ performance. This opens exciting avenues for future research, such as designing algorithms that gracefully adapt to the quality of predictions and exploring how these improvements translate to real-world applications.

Visual Insights#

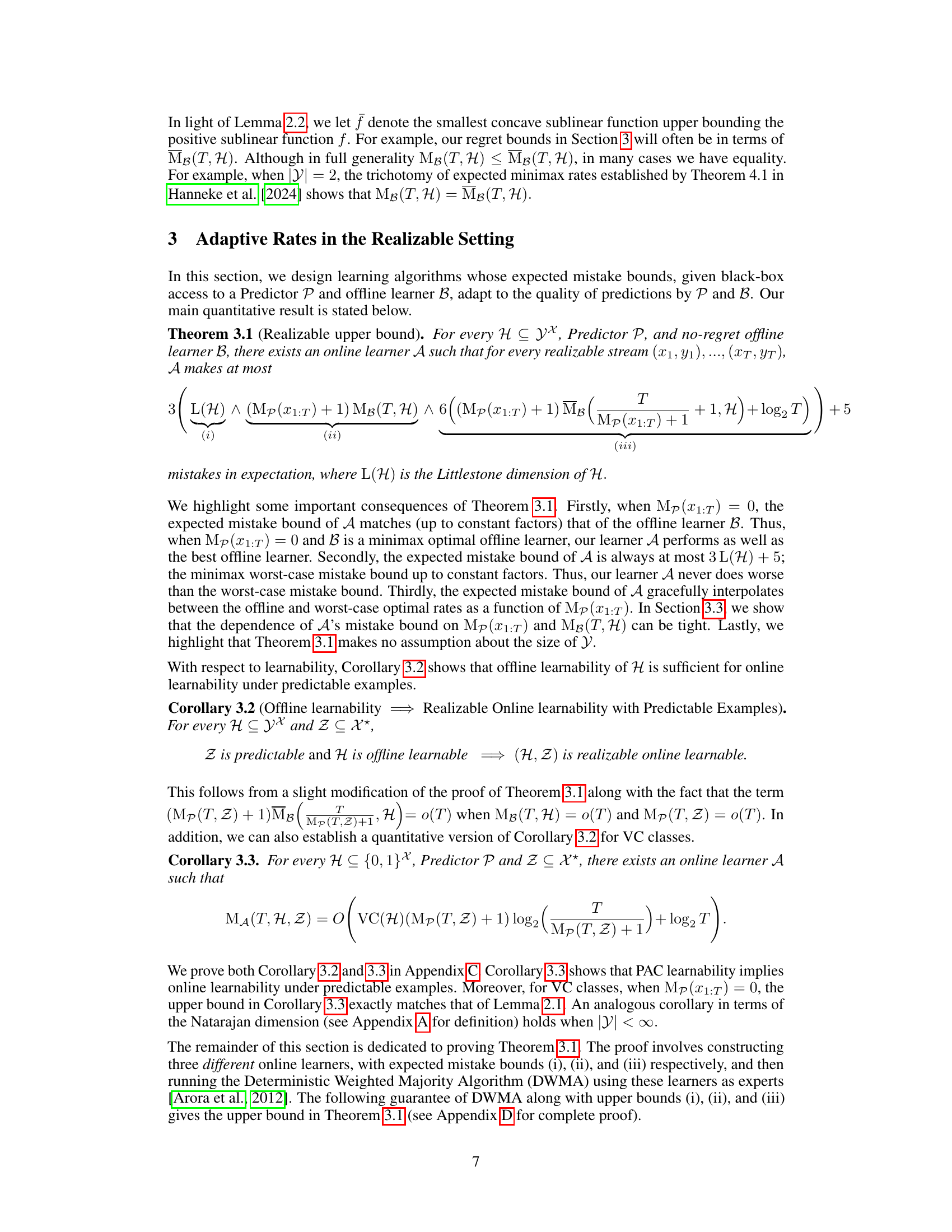

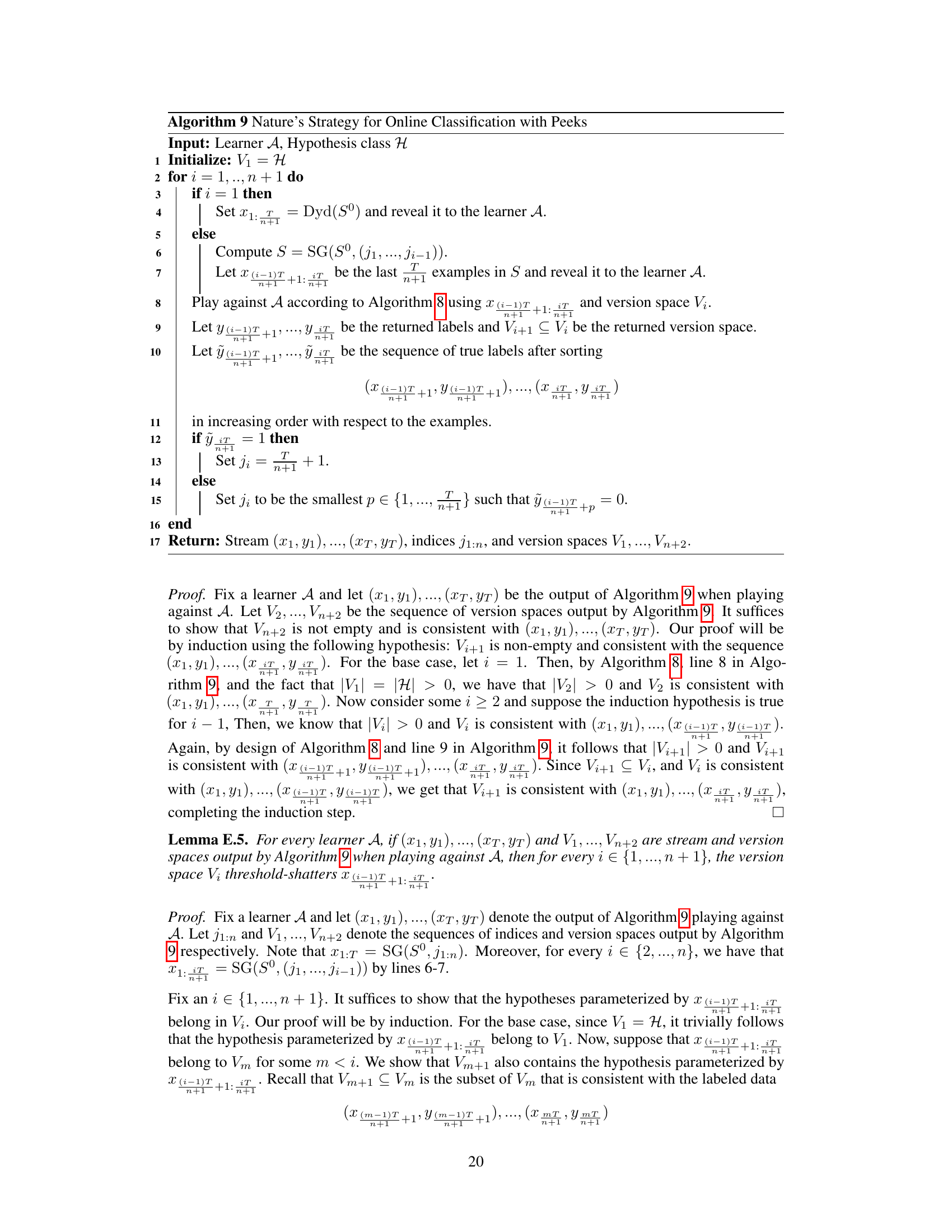

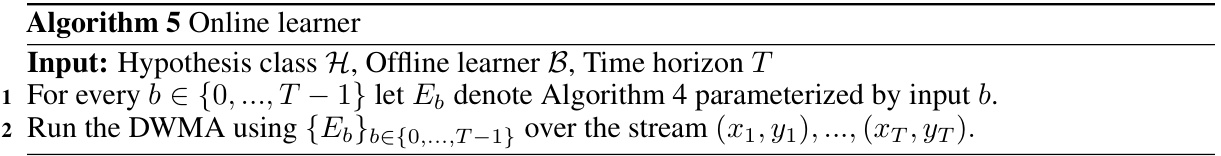

🔼 The algorithm takes as input a hypothesis class H, an offline learner B, and a time horizon T. For every integer b from 0 to T-1, it creates an instance of Algorithm 4 parameterized by b. Then, it runs the Deterministic Weighted Majority Algorithm (DWMA) using these instances of Algorithm 4 as experts on the input stream of examples (x1, y1), …, (xT, yT).

read the caption

Algorithm 5 Online learner

In-depth insights#

Adaptive Online Learn#

Adaptive online learning algorithms are designed to dynamically adjust their learning strategies based on the incoming data stream. Unlike traditional online learning methods that rely on fixed learning rates or strategies, adaptive algorithms modify their behavior in response to the observed data characteristics. This adaptability is crucial in non-stationary environments where the underlying data distribution may shift over time. Key benefits include improved performance and robustness to concept drift. Challenges include determining appropriate adaptation mechanisms, balancing exploration and exploitation, and managing computational overhead. The effectiveness of adaptive techniques depends greatly on the nature of the data and the specific adaptation strategy employed; careful consideration of the data’s statistical properties and the algorithm’s sensitivity to noise and outliers is critical for successful implementation. Future directions involve developing more sophisticated adaptation methods that are capable of handling complex and high-dimensional data while maintaining computational efficiency.

Predictor Impact#

The hypothetical heading ‘Predictor Impact’ in the context of online classification with predictions warrants a thorough examination. The core idea revolves around how the quality of predictions provided by a predictor influences the learner’s performance. A high-quality predictor, consistently providing accurate predictions of future data points, should significantly reduce the learner’s regret compared to the worst-case scenario. Conversely, a poor predictor might offer little to no improvement over standard online learning algorithms. The extent of this predictor impact depends on several factors, including the complexity of the hypothesis class, the characteristics of the data stream, and the predictor’s adaptation strategy. A key research question is whether incorporating predictions can make online learning feasible for hypothesis classes that are intractable in traditional worst-case settings. This involves investigating whether access to a good predictor can bridge the gap between the stringent requirements of online learnability and the more relaxed constraints of offline learnability. The analysis likely encompasses both theoretical guarantees (e.g., regret bounds) and empirical evaluations demonstrating the predictor’s effectiveness across different datasets and predictors. Graceful degradation of performance as predictor accuracy declines is a crucial aspect, ensuring robustness in real-world scenarios where perfect predictions are improbable.

Regret Bounds#

Regret bounds are a crucial aspect of online learning algorithms, quantifying the difference in performance between an algorithm’s cumulative loss and the loss of the best fixed hypothesis in hindsight. The paper likely explores various regret bounds, potentially differentiating between worst-case regret (assuming an adversarial environment) and adaptive regret (which leverages predictions about future data for improved performance). A key focus would likely be on demonstrating how the quality of these predictions impacts the regret. Tight bounds are highly desirable, as they offer a precise characterization of the algorithm’s performance. The analysis will likely involve mathematical proofs, showing that under specific conditions, the derived regret bounds hold. The paper might also compare different algorithms based on their respective regret bounds, potentially highlighting trade-offs between computational complexity and regret. Finally, the discussion of regret bounds is crucial for understanding the algorithm’s robustness and its adaptability to various datasets and scenarios.

Offline to Online#

The concept of bridging offline and online learning paradigms is a core theme in the research paper, focusing on how to leverage offline learning’s power to enhance online learning’s performance. The key insight is that when presented with easily predictable data streams (predictable examples), the complexity of online learning can be drastically reduced. This predictability allows the learner to effectively utilize an offline learning strategy, achieving comparable performance to offline methods even in the dynamic online setting. The authors establish this connection theoretically, showing that offline learnability is sufficient for online learnability given predictable data. This is important because some hypothesis classes are considered not online learnable in a worst-case scenario but become learnable if the examples are predictable. The work is significant in demonstrating how assumptions beyond the traditional worst-case analysis can reveal hidden relationships between offline and online learning. It highlights the promise of employing machine-learned predictions to characterize and exploit the inherent structure and predictability often present in real-world data streams.

Future Work#

The paper’s core contribution lies in designing online learners that gracefully adapt to the quality of predictions provided by a predictor algorithm. Future work could explore several promising directions. Firstly, extending the theoretical framework to handle more general loss functions beyond the 0/1 loss is crucial; this would broaden the applicability to regression and other learning paradigms. Secondly, the assumption of a consistent and lazy predictor simplifies analysis, but relaxing these constraints would significantly increase the model’s robustness and practical relevance. This could involve developing learning algorithms that are robust to noisy or inconsistent predictions. Thirdly, empirical evaluation on real-world datasets is vital to validate the theoretical findings and show the practical benefits of using machine-learned predictions in online classification. Finally, the paper primarily focuses on the realizable and agnostic settings; investigating the benefits of predictions in other settings like partial or bandit feedback scenarios would further enrich the research, and investigating different prediction models, perhaps using ensemble techniques to improve prediction accuracy, is another avenue to be explored.

More visual insights#

More on tables

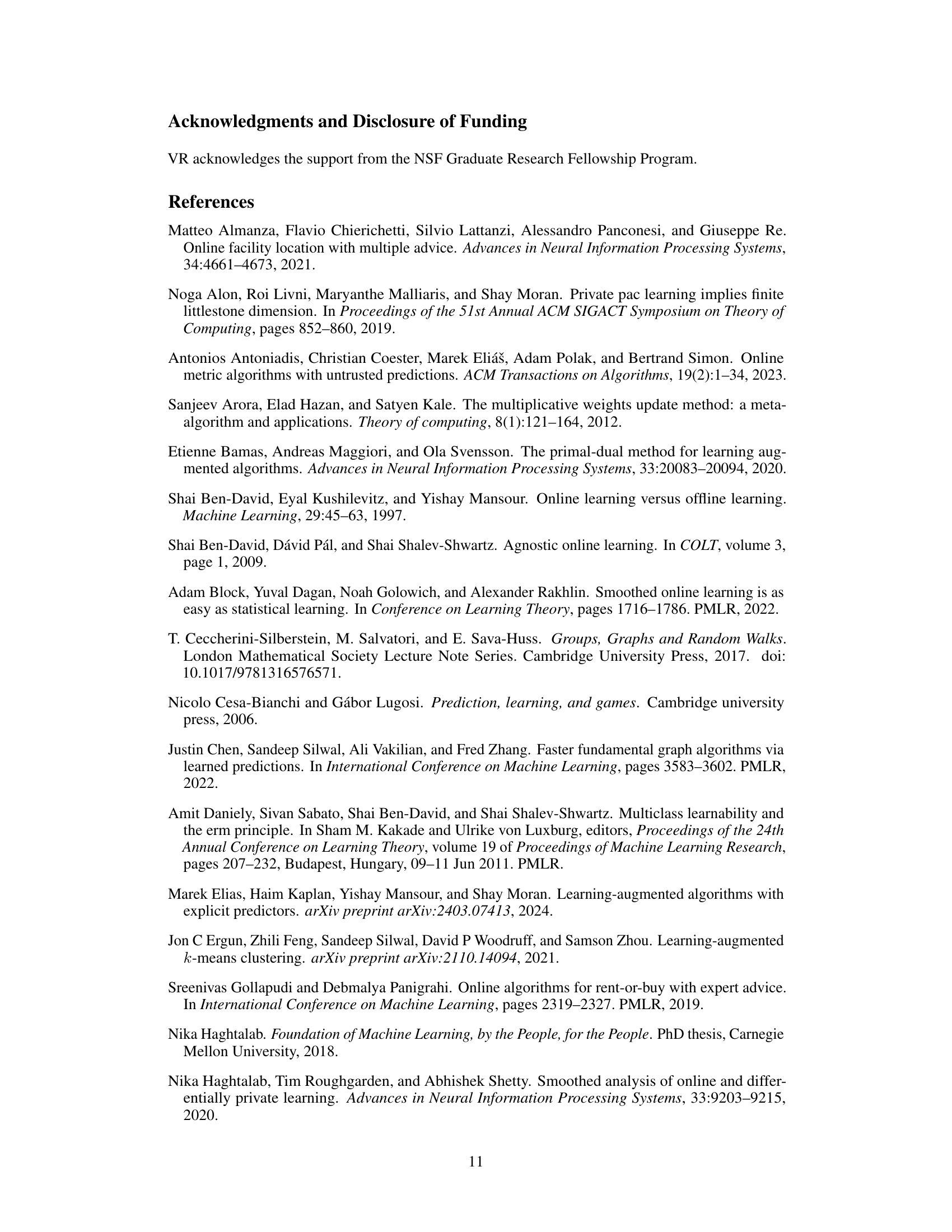

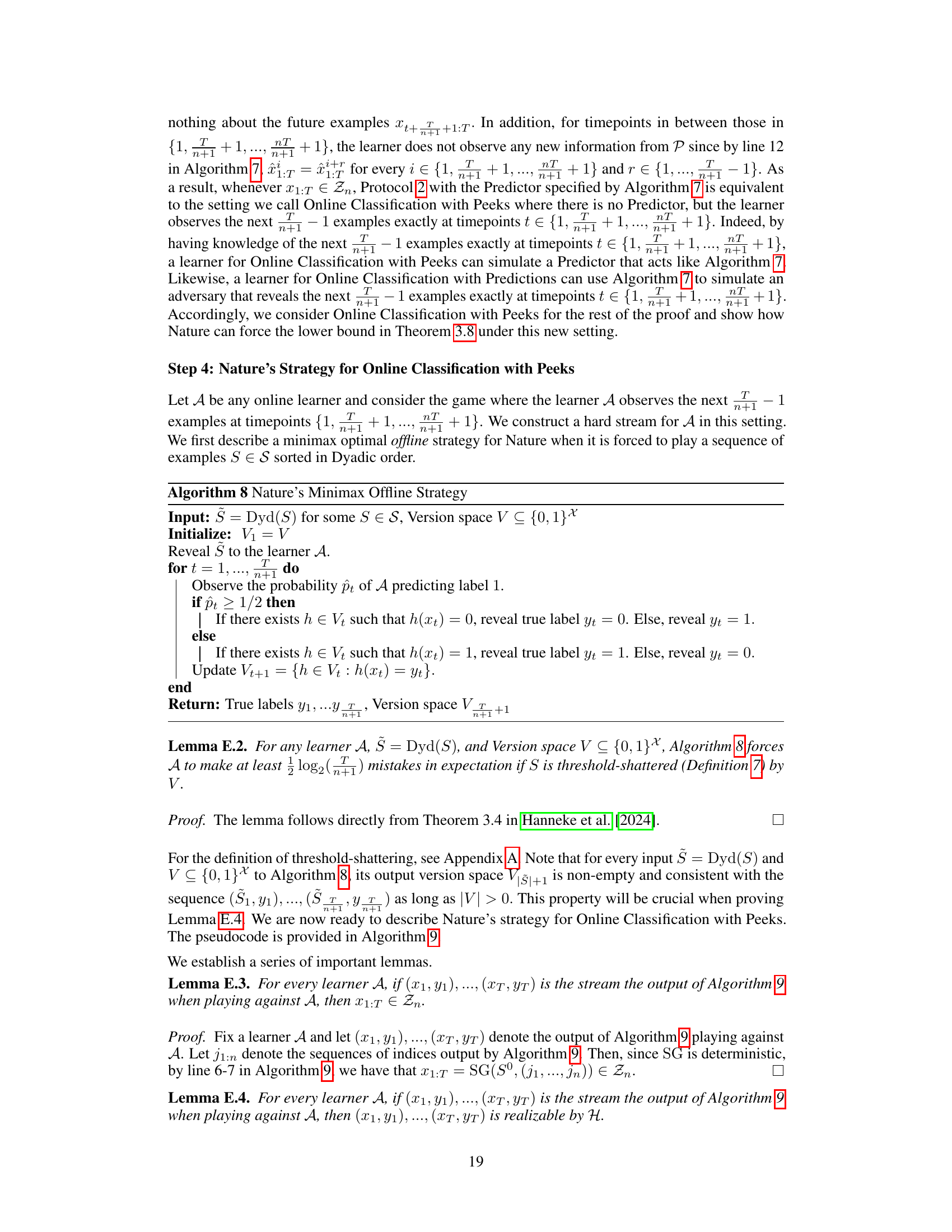

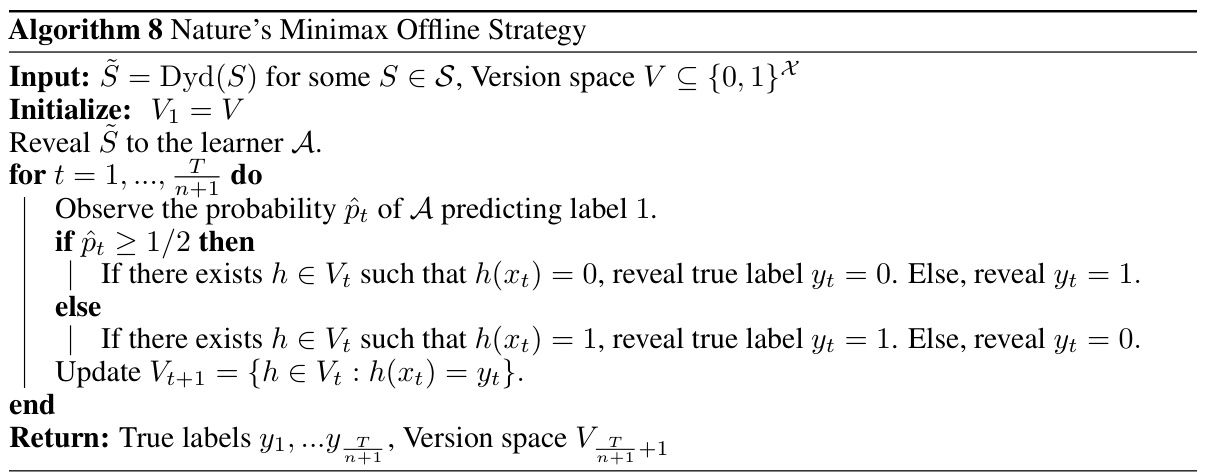

🔼 This table summarizes the main theoretical results of the paper, specifically in the realizable setting. It presents upper bounds on the number of mistakes made by online learners, given access to a predictor and an offline learner. These bounds depend on the quality of predictions, the offline learner’s performance, and the Littlestone dimension of the hypothesis class. The table highlights how online learning can be easier when the example stream is easily predictable.

read the caption

Table 3.1 Adaptive Rates in the Realizable Setting

🔼 This table presents the main theoretical result of the paper, which provides upper bounds on the number of mistakes made by an online learner with access to predictions in the realizable setting. The upper bound gracefully adapts to the quality of predictions and the complexity of the hypothesis class. Specifically, it shows that the expected mistake bound interpolates between that of the best offline learner and the worst-case mistake bound.

read the caption

Table 3.1: Adaptive Rates in the Realizable Setting

Full paper#