TL;DR

Single-index models (SIMs) are a widely used class of machine learning models, but learning them efficiently becomes challenging when the labels are noisy, in particular in the agnostic setting, where the label noise may be adversarial. Existing robust learners often require strong assumptions on the model and are computationally expensive, especially in high dimensions. The difficulty stems from the non-convex optimization landscape of SIMs and from the way label noise can distort the learning process.
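For orientation, the setting can be written down as follows. This is a sketch of the standard agnostic formulation, not the paper's exact statement: the symbols $\sigma$ (link function), $w$ (hidden direction), $\mathcal{D}$ (data distribution), $\mathrm{OPT}$, $C$, and $\epsilon$ are notation assumed here, and the precise loss and guarantee in the paper may differ.

```latex
% A single-index model predicts through one hidden direction w:
f_w(x) = \sigma(w \cdot x), \qquad w \in \mathbb{R}^d,\ \|w\|_2 = 1 .

% Agnostic setting: labeled examples (x, y) come from a distribution D
% with no assumption on how y depends on x. The squared loss of w is
L(w) = \mathbb{E}_{(x,y) \sim \mathcal{D}}\big[ (\sigma(w \cdot x) - y)^2 \big],
\qquad
\mathrm{OPT} = \min_{\|w\|_2 = 1} L(w) .

% A robust learner outputs \hat{w} whose loss is competitive with OPT:
L(\hat{w}) \le C \cdot \mathrm{OPT} + \epsilon .
```

Here $\mathrm{OPT}$ measures how far the labels are from the best-fitting SIM, so a guarantee of this form degrades gracefully as the label noise grows.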

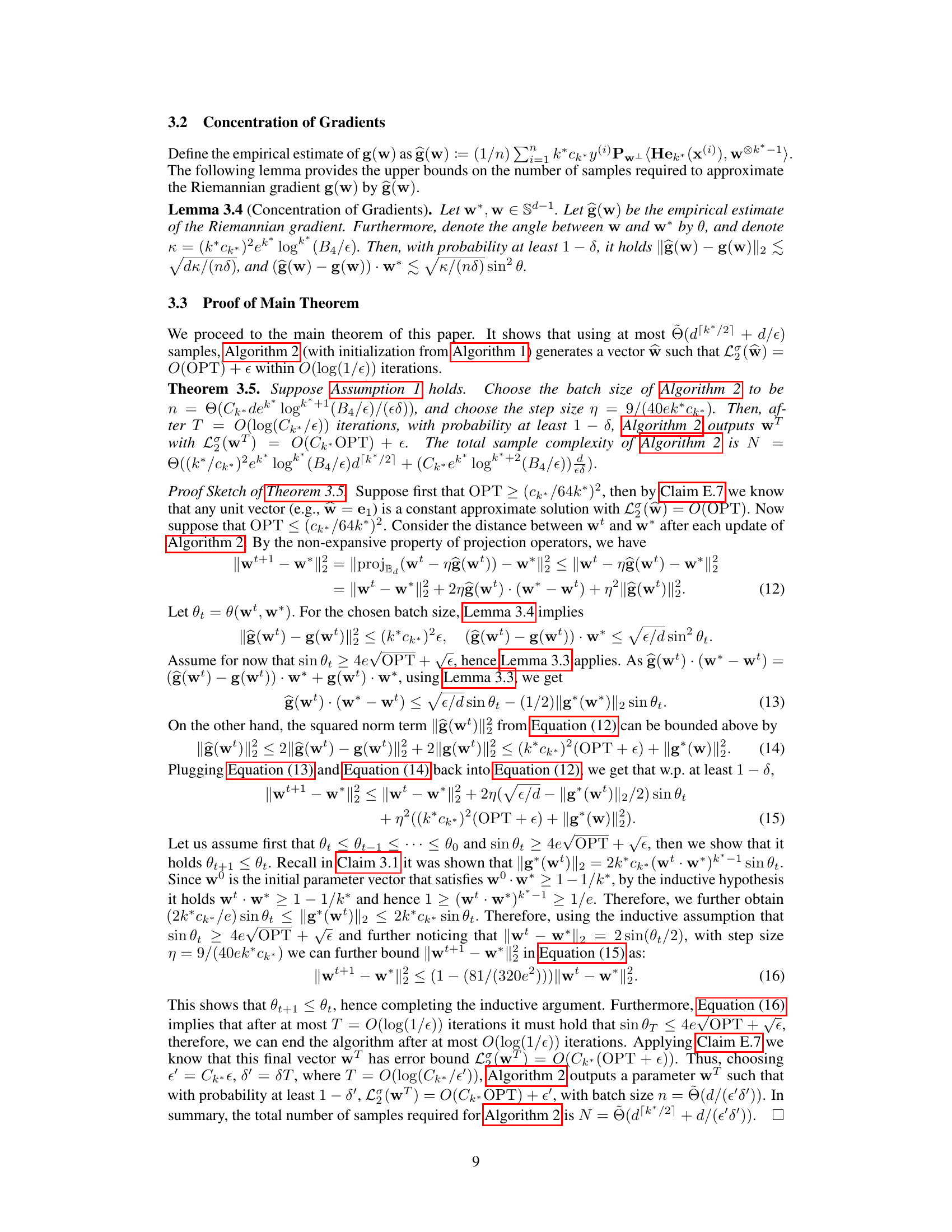

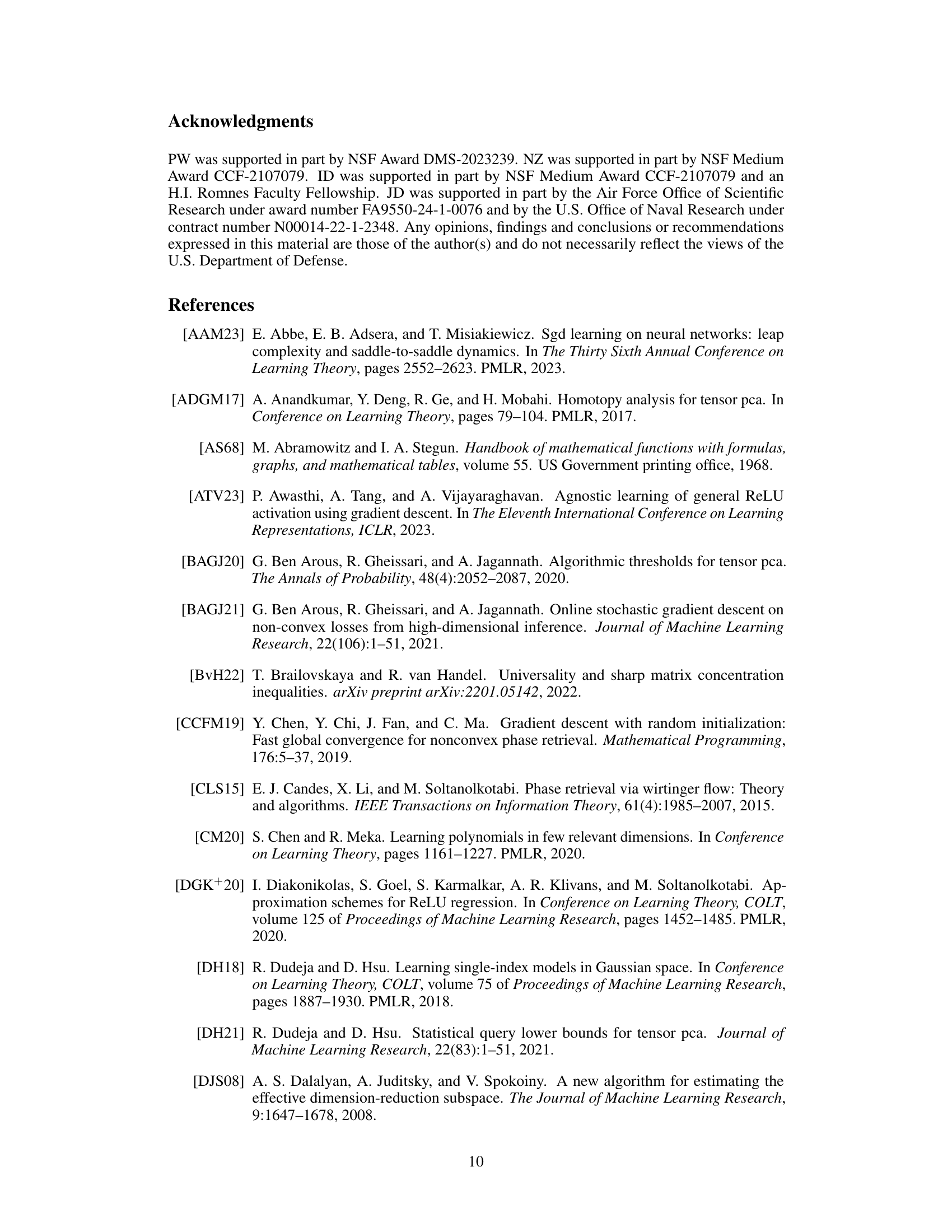

This paper introduces a new algorithm that addresses these challenges. It combines a gradient-based method with tensor decomposition to handle the inherent non-convexity of SIMs. The algorithm is computationally efficient and learns robustly even under significant label noise. Its sample complexity is close to optimal, which makes it particularly useful for high-dimensional datasets. The work is relevant to machine learning applications where both robustness and efficiency are essential.

Key Takeaways

Why does it matter?

This paper is relevant to researchers working on robust learning and high-dimensional data analysis. It offers a novel algorithm with near-optimal sample complexity for learning single-index models under adversarial label noise, advancing the state of the art. This opens up new avenues for research, including exploring the limits of computationally efficient robust learners and extending the approach to other challenging learning problems. The results also have implications for the broader machine learning community studying non-convex optimization and high-dimensional statistics.

Visual Insights

Full paper