↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

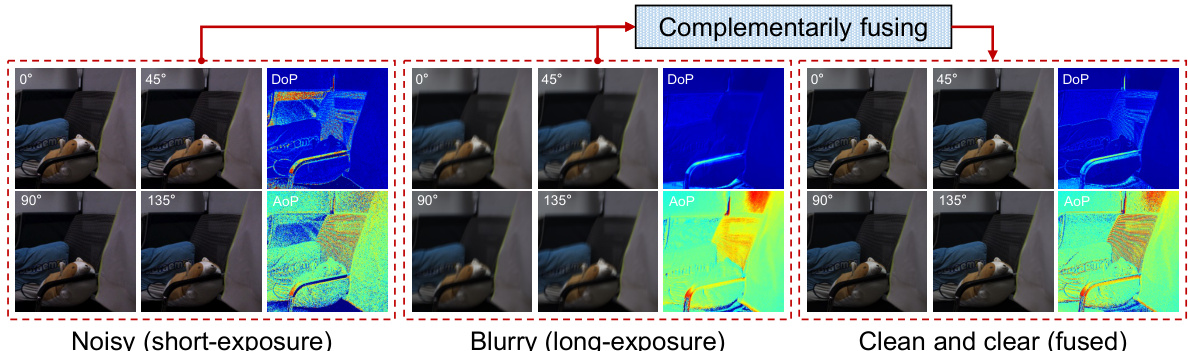

Polarimetric imaging, which captures the polarization properties of light, faces challenges due to trade-offs between noise and motion blur in captured snapshots. Using a short exposure time leads to high noise, while long exposure leads to motion blur, both degrading the accuracy of the polarization data. Existing methods struggle to balance noise and blur reduction while maintaining the polarization properties, limiting the quality of the resulting images.

This research tackles these issues by introducing a new framework that cleverly uses a degraded pair of noisy and blurry images to generate a high-quality image. The method involves three main processing phases: irradiance restoration, polarization reconstruction, and artifact suppression, each with specially-designed modules. This approach significantly improves image quality and surpasses current state-of-the-art techniques in polarimetric imaging. The framework demonstrated superior performance on both synthetic and real-world data, paving the way for applications in various fields relying on accurate polarization information.

Key Takeaways#

Why does it matter?#

This paper is important because it presents a novel framework for improving the quality of polarimetric images while preserving their properties. This is a significant contribution to the field of polarization-based vision, as it addresses a critical challenge in capturing high-quality polarized snapshots. The framework’s three-phase approach, incorporating specialized modules, offers a new avenue for improving the accuracy and clarity of polarization data, opening up possibilities for advancements in various applications such as reflection removal, shape from polarization, and dehazing. The work also emphasizes the potential of complementary fusing, suggesting new research directions for integrating diverse data sources to enhance image quality.

Visual Insights#

This figure shows the effects of short and long exposure times on polarimetric imaging. Short exposure leads to noisy images with low signal-to-noise ratio (SNR), resulting in poor quality Degree of Polarization (DoP) and Angle of Polarization (AoP). Long exposure reduces noise but introduces motion blur, again degrading DoP and AoP. The authors’ proposed framework addresses this by complementarily fusing a noisy, short-exposure image with a blurry, long-exposure image to produce clean and high-quality results.

This table presents a quantitative comparison of the proposed framework’s performance against several state-of-the-art methods on synthetic data. The metrics used are PSNR and SSIM for the Degree of Polarization (DoP), Angle of Polarization (AoP), and total intensity (S0). The comparison includes methods specifically designed for polarized images (PLIE, PolDeblur) and their enhanced versions, as well as general-purpose image enhancement methods that handle noisy and blurry image pairs (LSD2, LSFNet, SelfIR, D2HNet).

In-depth insights#

Polarimetric Fusion#

Polarimetric fusion is a powerful technique that leverages the complementary information from multiple polarized images to enhance the quality and robustness of polarimetric imaging systems. By combining data from different polarization states or images captured under varying conditions (e.g., different exposure times or noise levels), polarimetric fusion can effectively mitigate noise, reduce motion blur, and improve the accuracy of polarization parameters estimation (e.g., Degree of Polarization and Angle of Polarization). This is particularly useful in challenging scenarios such as low-light conditions or fast-moving scenes where single-image polarimetric measurements may be unreliable. Successful polarimetric fusion methods often employ advanced signal processing techniques, including image registration, noise filtering, and multi-spectral fusion algorithms, to properly align and combine the input images while preserving important polarization characteristics. Machine learning approaches, especially deep neural networks, are increasingly being incorporated to improve the fusion process and yield superior results. The main challenge in polarimetric fusion lies in effectively preserving the polarization information during the fusion process, as many standard image processing techniques are not polarization-aware and might distort or compromise the accuracy of the derived polarization parameters. Future research will likely focus on developing more sophisticated and robust fusion techniques, incorporating physics-based models to ensure accurate polarization preservation, and expanding the application of polarimetric fusion to a broader range of scenarios.

Three-Phase Network#

A three-phase network architecture for enhancing polarimetric imaging suggests a structured approach to processing. Phase 1 likely focuses on low-level irradiance restoration, perhaps using techniques to recover total intensity and handle noise or artifacts while preserving color information. Phase 2 would then tackle polarization reconstruction, which is a more complex task, possibly employing a coherence-aware approach to correlate features across different polarization angles and generate high-quality degree-of-polarization (DoP) and angle-of-polarization (AoP) maps. Finally, Phase 3 likely involves artifact suppression, refining the image using techniques to eliminate residual noise or inconsistencies from previous steps, enhancing overall image quality while preserving polarization properties. The modular design enables flexibility in choosing and optimizing components for each phase, potentially allowing for a more robust and adaptable framework compared to single-phase methods.

Cartesian DoP/AoP#

The concept of “Cartesian DoP/AoP” represents a novel approach to handling the degree of polarization (DoP) and angle of polarization (AoP) in polarimetric imaging. Traditional methods often work directly with the DoP and AoP values, which can be challenging due to their non-linear relationship and sensitivity to noise. The Cartesian representation transforms the polar coordinates (DoP, AoP) into Cartesian coordinates (x, y), effectively linearizing the relationship and potentially simplifying subsequent processing. This transformation facilitates the use of standard image processing techniques and deep learning methods that typically assume linearity in the data. The benefits include improved robustness to noise and artifacts, potentially leading to more accurate and stable polarization information extraction. This approach simplifies computations and enhances the ability to fuse or compare polarization data from different sources, potentially integrating more seamlessly with existing image fusion and processing pipelines. However, a key challenge would be handling the transition between Cartesian and polar representations, ensuring that the information is not lost or distorted during the conversion. The success of this method hinges on careful consideration of this conversion and the development of algorithms that can effectively leverage the benefits of the Cartesian representation while mitigating potential drawbacks.

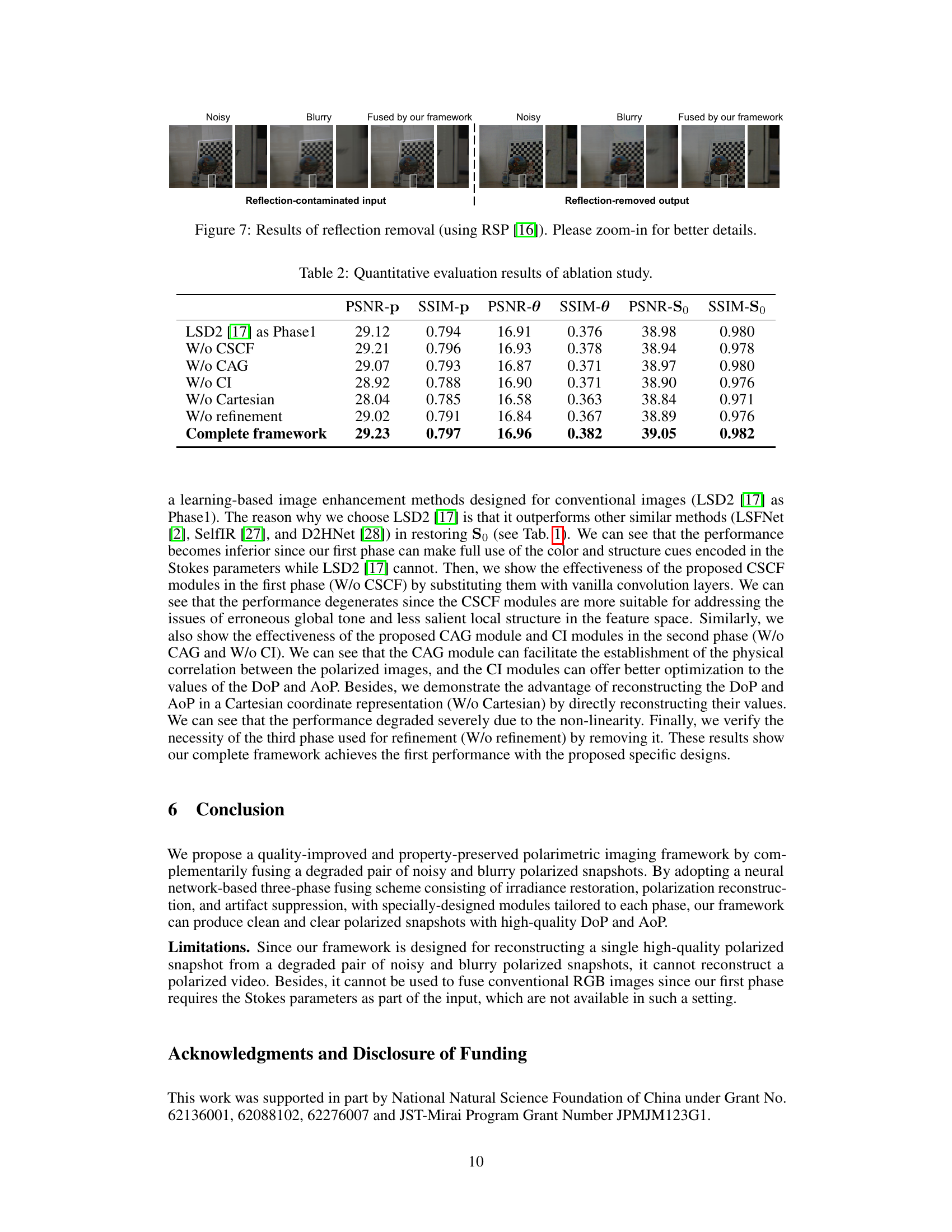

Ablation Study#

An ablation study systematically evaluates the contribution of individual components within a complex model. In the context of this polarimetric imaging paper, an ablation study would likely involve removing or modifying each of the three proposed phases (irradiance restoration, polarization reconstruction, and artifact suppression) individually to assess their impact on overall performance. By disabling phases one at a time, the researchers could isolate the effect of each module, observing the resulting changes in metrics like PSNR and SSIM for both DoP and AoP. The study would aim to demonstrate the necessity and effectiveness of each phase. Quantitative results from such experiments would be crucial in supporting the claims of the paper, providing a strong argument for the integrated design of the three-phase framework. Furthermore, the ablation study could extend to the individual modules within each phase, analyzing components such as the CSCF (color and structure cue fusion) or CAG (coherence-aware aggregation) modules. This would provide a granular understanding of which parts are most crucial to the final result, and inform future model improvements. For instance, the study might reveal a module is redundant or could be simplified without significant performance loss, guiding future optimization.

Future Works#

The paper’s success in enhancing polarimetric imaging through complementary fusion opens several avenues for future work. Extending the framework to handle video sequences is a natural progression, demanding efficient temporal processing and robust motion estimation. Exploring the fusion of more than two degraded images could significantly boost image quality, although it would necessitate more sophisticated strategies for managing increased complexity. The current framework is limited by its dependence on a specific type of degradation; therefore, investigating the robustness and adaptability to other degradation types (e.g., speckle noise, geometric distortions) is crucial. Improving the efficiency of the neural network, possibly through model compression or architectural optimization, will be important for practical applications. Finally, in-depth evaluation on a broader range of datasets and downstream tasks, beyond reflection removal, will strengthen the claim of generalizability. These extensions will strengthen the robustness, scalability, and impact of the method.

More visual insights#

More on figures

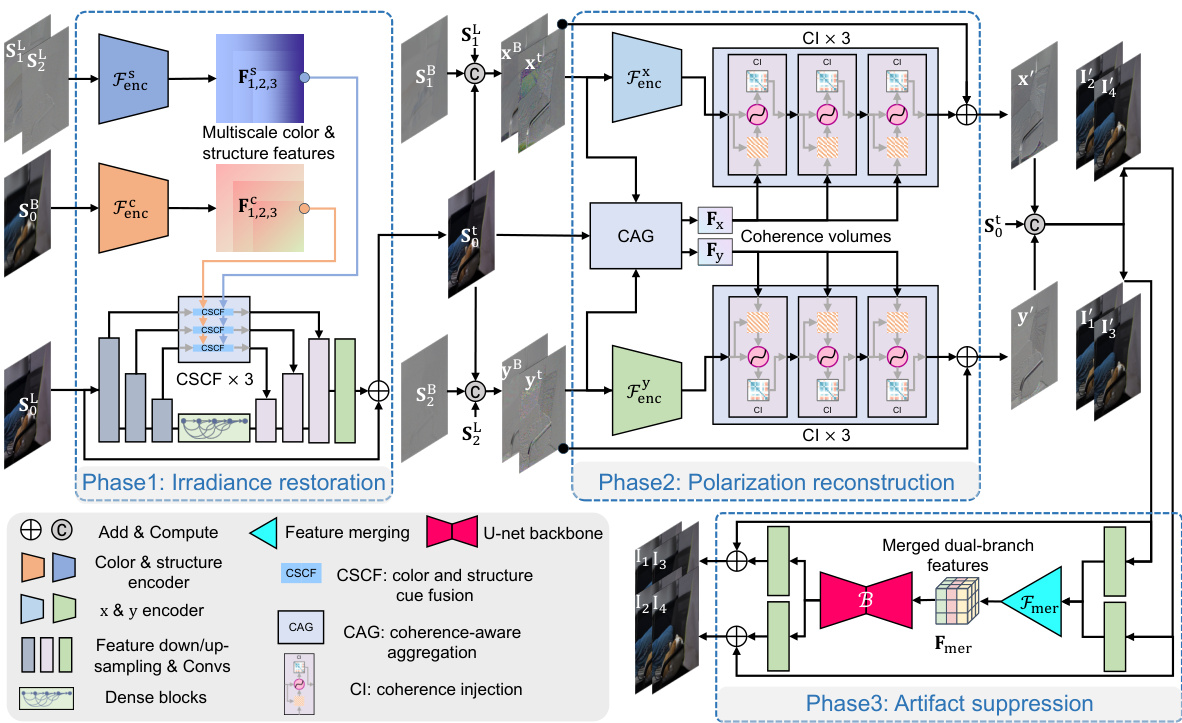

This figure illustrates the overall framework of the proposed polarimetric imaging method. It consists of three main phases: 1) Irradiance Restoration: This phase aims to restore the high-level irradiance information of the scene. The CSCF module is used to make full use of color and structure cues. 2) Polarization Reconstruction: This phase aims to establish the physical correlation between the polarized images by reconstructing the DoP and AoP in the Cartesian coordinate representation. CAG and CI modules are used. 3) Artifact Suppression: This phase aims to suppress the artifacts by performing refinement in the image domain. This figure shows the connections and data flow between these three phases and the different modules within each phase.

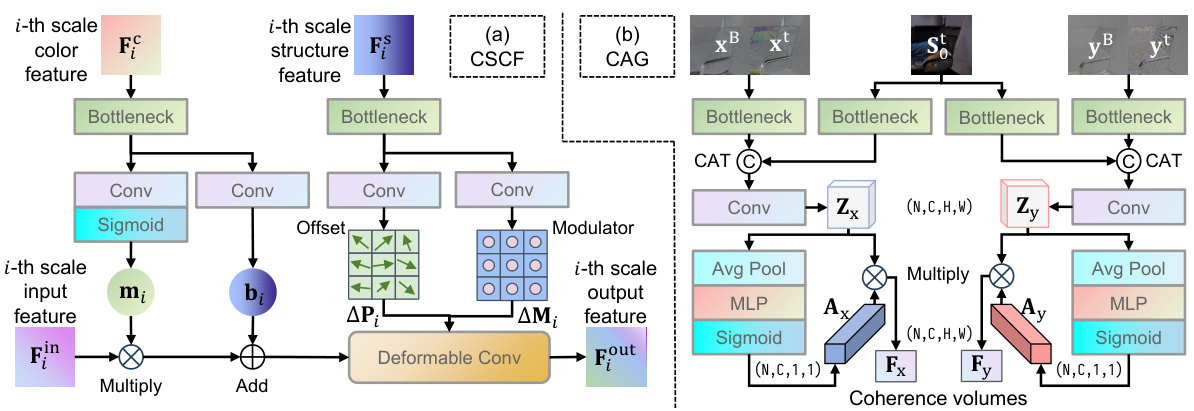

This figure shows the detailed architecture of the Color and Structure Cue Fusion (CSCF) module and the Coherence-Aware Aggregation (CAG) module. The CSCF module aims at fusing color and structure information from noisy and blurry images to restore irradiance. The CAG module aims at aggregating coherence information from blurry images and irradiance information to reconstruct polarization.

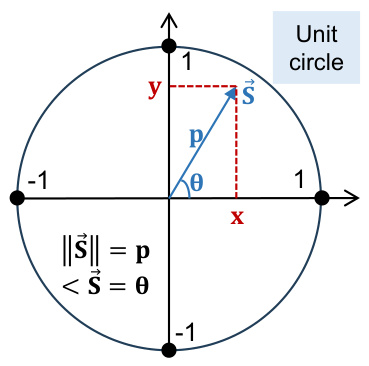

This figure shows the Cartesian coordinate representation of the Degree of Polarization (DoP) and Angle of Polarization (AoP). The DoP (p) and AoP (θ) are represented as the magnitude and angle of a vector (\vec{S}) in a unit circle. The x and y coordinates are calculated from the Stokes parameters S0, S1, and S2. This representation is used in the paper to simplify the reconstruction process of DoP and AoP, making it less ill-posed and easier to optimize.

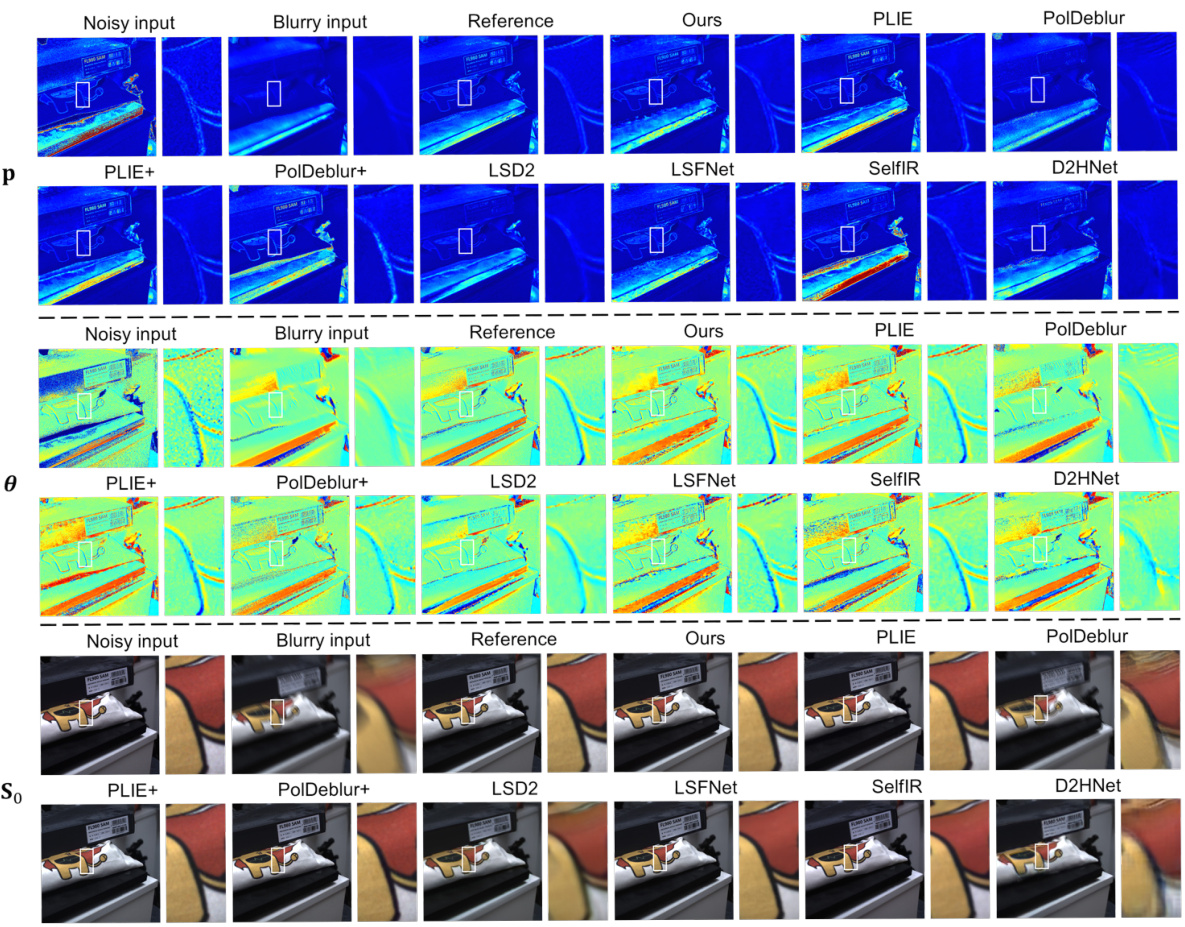

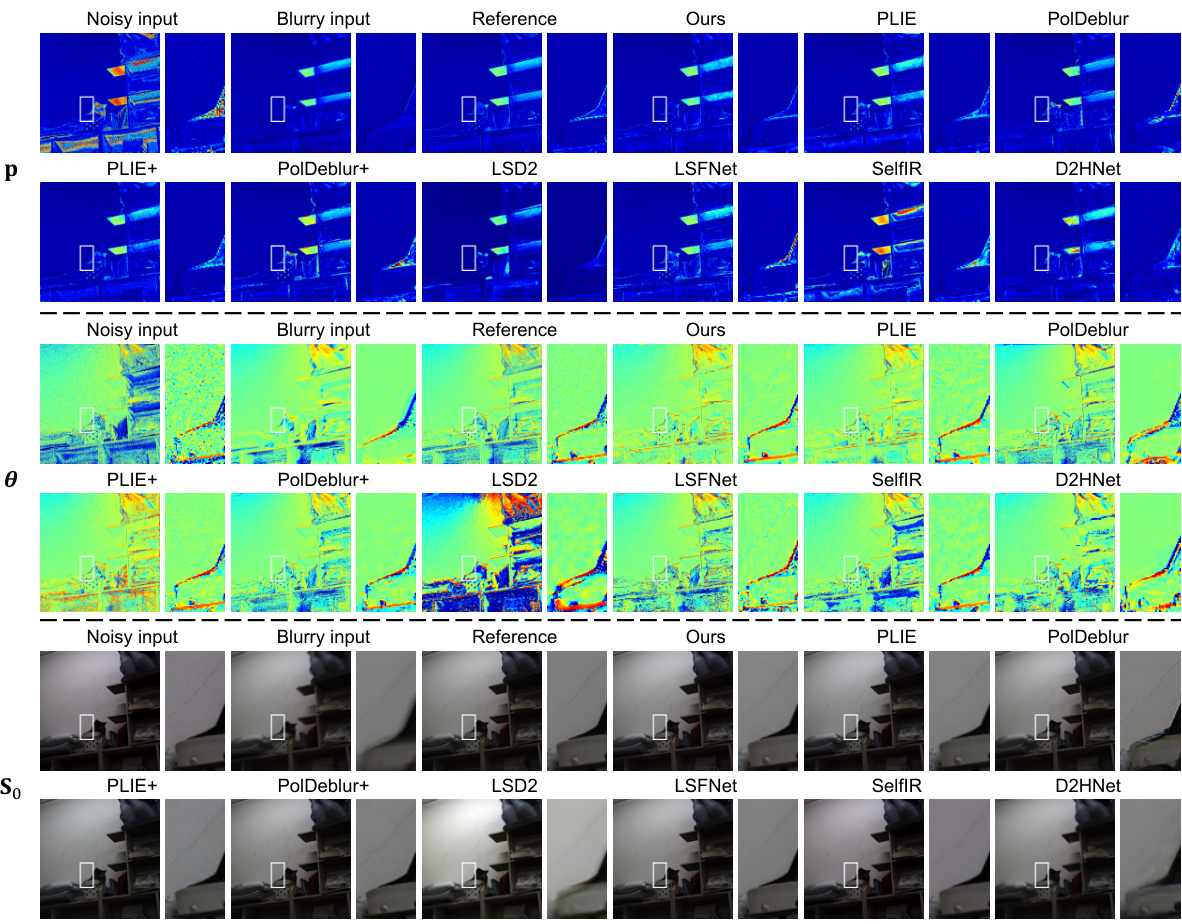

This figure shows a qualitative comparison of the proposed framework’s performance against other state-of-the-art methods on synthetic data. The comparison includes the degree of polarization (DoP), the angle of polarization (AoP), and the total intensity (S0). Color maps are used to visualize the DoP and AoP, which have been normalized and averaged across the RGB channels for consistency with other methods. The results demonstrate the superior ability of the proposed framework to produce clean and clear results, even when compared to advanced methods designed specifically for polarized image enhancement and deblurring.

This figure shows a qualitative comparison of the proposed method with other state-of-the-art methods on synthetic data. It visualizes the Degree of Polarization (DoP) and Angle of Polarization (AoP) using color maps. The results demonstrate the superior performance of the proposed method in generating cleaner and clearer DoP and AoP estimations compared to other methods.

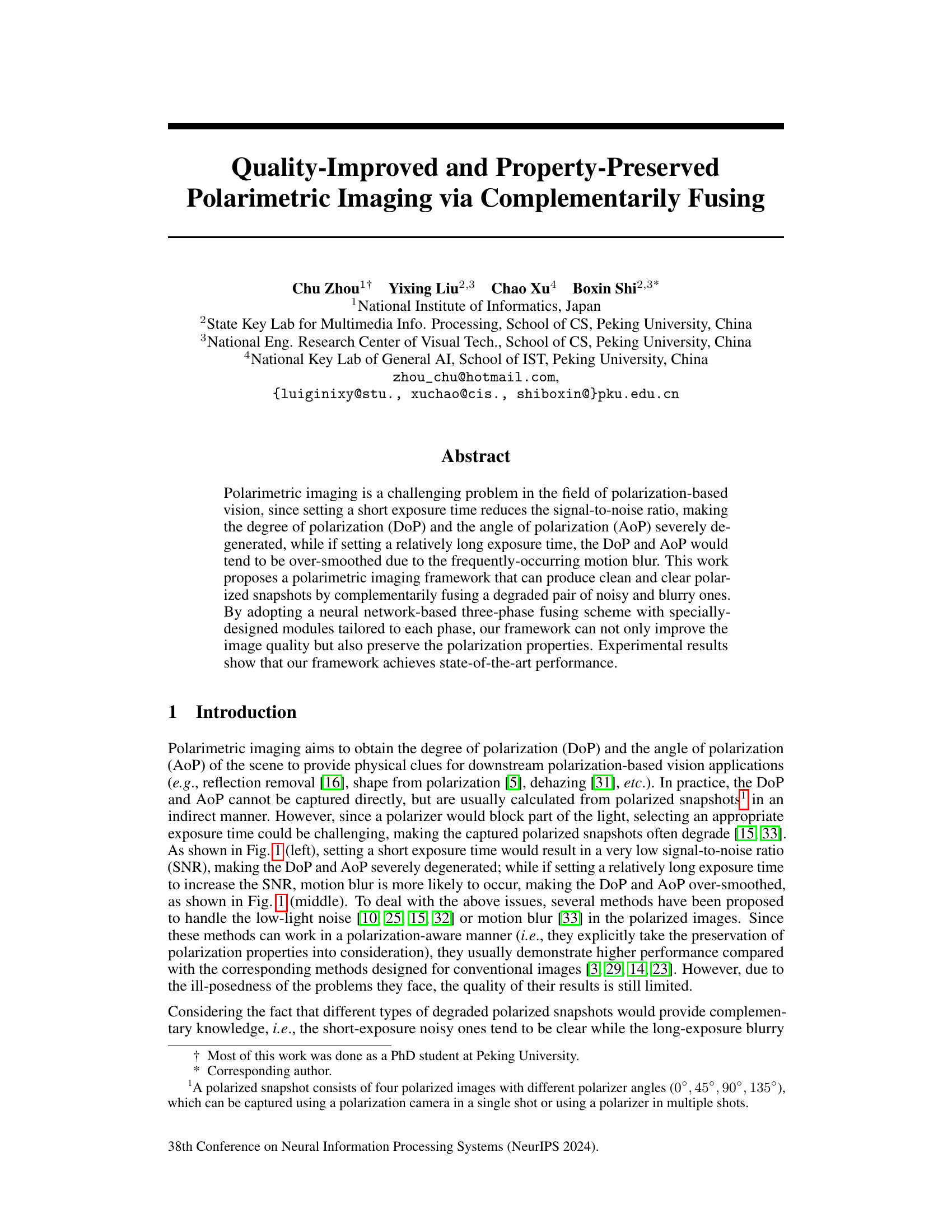

This figure shows the results of reflection removal using the RSP method on three types of input images: noisy, blurry, and fused by the proposed framework. The results demonstrate that the fused images, produced by the proposed framework, significantly improve the quality of reflection removal compared to noisy and blurry images. Zooming in on the images reveals more details about the improvement in quality.

Full paper#