↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Multi-exposure correction (MEC) aims to recover proper exposure from over- or under-exposed images. Existing deep learning models struggle with balancing high performance and efficiency, and often don’t fully integrate Retinex theory, which helps separate illumination and reflectance. This limitation leads to suboptimal results. Additionally, many existing approaches are limited in their generalizability.

This paper introduces ECMamba, a novel MEC framework inspired by the efficient Mamba sequence modeling. It uses a dual-branch design, each dedicated to restoring reflectance and illumination maps guided by Retinex estimation. The core of ECMamba is a novel 2D Selective State-space layer (Retinex-SS2D) using a deformable feature aggregation and feature-aware scanning, enhancing both efficiency and effectiveness. Experiments demonstrate that ECMamba outperforms state-of-the-art methods on multiple datasets.

Key Takeaways#

Why does it matter?#

This paper is important because it presents ECMamba, a novel and efficient framework for multi-exposure correction that leverages Retinex theory and a state-space model. It offers improved performance and efficiency compared to existing methods, opening new avenues for research in image restoration and other computer vision tasks. The Retinex-SS2D layer, in particular, offers a novel approach to 2D scanning that significantly improves the model’s effectiveness.

Visual Insights#

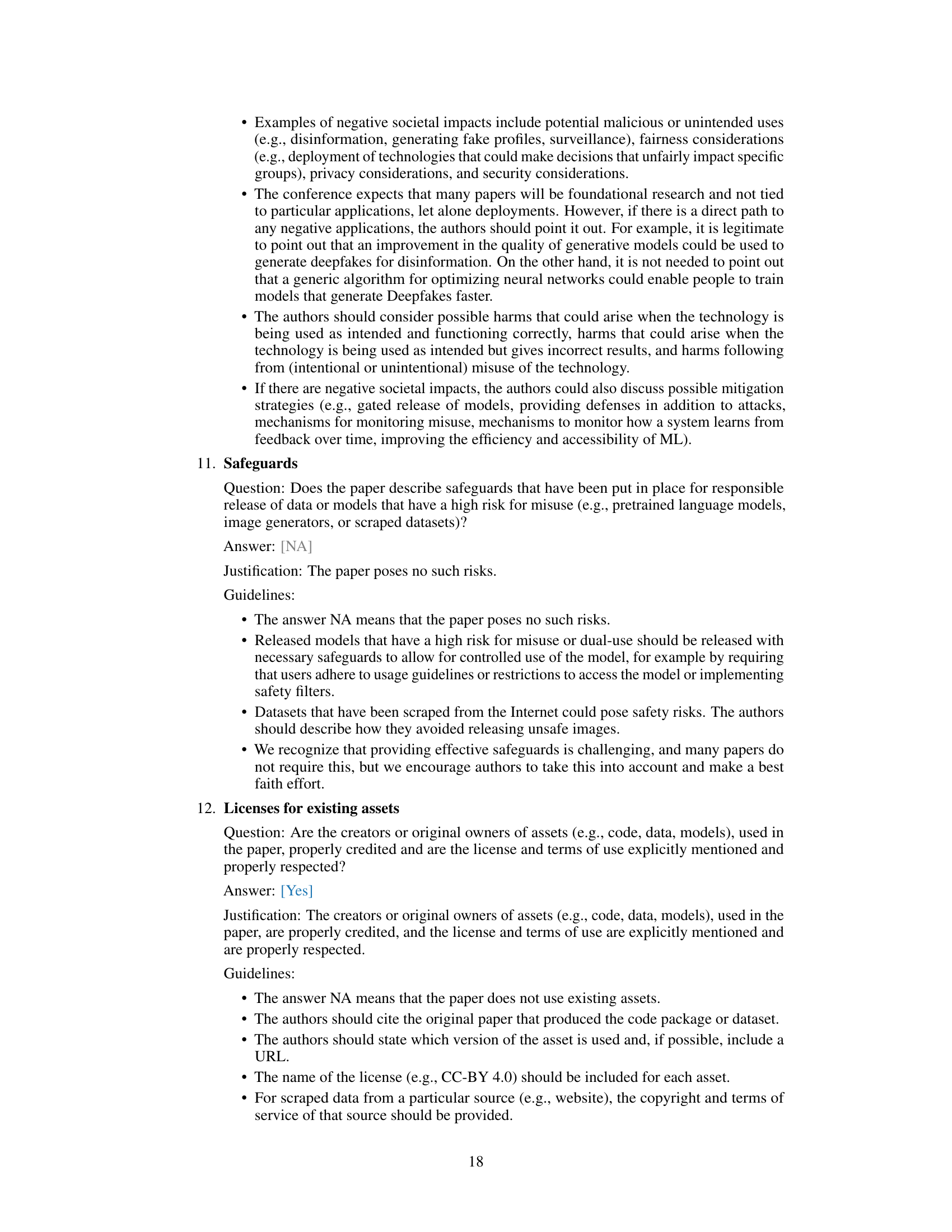

This figure shows the effectiveness of the proposed ECMamba network for exposure correction. (a) uses t-SNE to visualize the distributions of modulated reflectance, restored reflectance, and final output for under-exposed and over-exposed images, demonstrating that the proposed method brings the results closer to normal-exposed images. (b) shows visual results, highlighting improvements in color preservation and structure recovery.

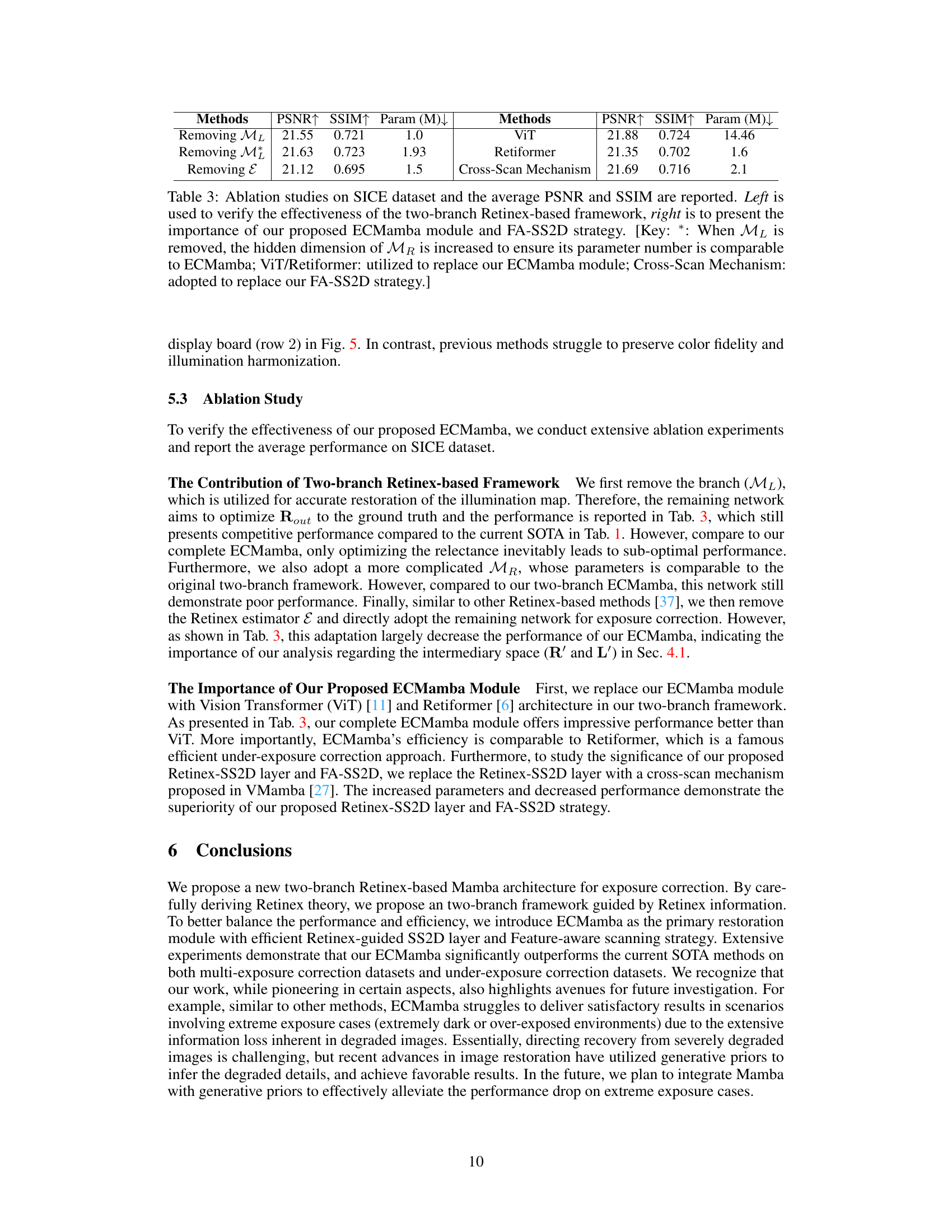

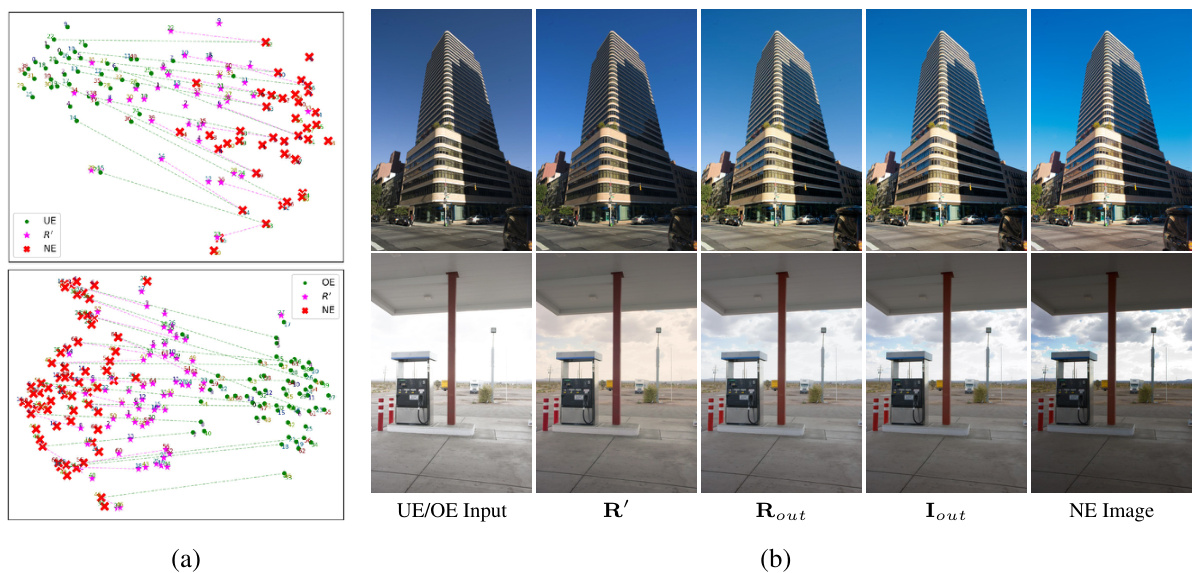

This table quantitatively compares the performance of ECMamba with other state-of-the-art (SOTA) methods on two multi-exposure correction datasets (ME and SICE). The metrics used for comparison are PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index), both higher values indicating better performance. The table also shows the average performance across both under-exposed and over-exposed images for each method. Some methods are marked with an asterisk (*) indicating that the original paper did not report the results for the datasets used in this paper, so the authors re-trained them.

In-depth insights#

Retinex-Mamba Fusion#

A hypothetical ‘Retinex-Mamba Fusion’ heading suggests a method integrating Retinex theory with the Mamba architecture for image processing. Retinex, focusing on illumination-reflectance separation, could provide a strong foundation for preprocessing images, addressing issues like over/under-exposure. Mamba, known for its efficient sequence modeling, would be ideally suited for processing the separated components. This fusion would likely involve a two-branch network where one branch processes the reflectance map and another handles the illumination. A key advantage is the potential for improved efficiency compared to methods relying solely on transformers. However, challenges might arise in effectively integrating the Retinex decomposition’s output with Mamba’s sequential processing. Careful consideration of the optimal fusion strategy, along with extensive experimentation to validate its performance compared to existing methods, would be crucial to demonstrating the viability and advantages of this approach. Furthermore, exploring the architecture’s ability to handle diverse lighting conditions and image types would be essential to understanding its robustness and generalizability.

SS2D Scan Strategy#

A hypothetical “SS2D Scan Strategy” in a computer vision paper likely involves a novel approach to processing 2D image data. Instead of the typical 1D sequential processing (like in NLP), it might rearrange or reorganize the pixels to leverage the inherent spatial relationships within the image. This could involve a deformable scanning mechanism, adapting the scan path based on feature importance (e.g., edges, textures). The strategy may enhance feature extraction by prioritizing informative regions first and thereby improving efficiency and effectiveness of subsequent processing modules, like state-space models. Retinex-guided approaches could further refine the strategy by weighting the scan path based on illumination and reflectance information, ensuring that the most salient features are processed effectively. The core of the proposed method would be to convert 2D image data to a meaningful sequence for state-space modelling, thus combining the strengths of 2D spatial information and efficient 1D sequential processing. The effectiveness would rely on choosing an appropriate scan path that captures spatial context whilst providing speed and efficiency. Ablation studies could analyze the impact of different scanning strategies on overall accuracy and efficiency. Ultimately, this approach potentially leads to improved performance and reduced computational cost in relevant image processing tasks.

Exposure Correction#

Exposure correction, a crucial aspect of image processing, aims to recover images from overexposed or underexposed conditions. Traditional methods often rely on Retinex theory, which decomposes an image into reflectance and illumination components to restore proper exposure, but these methods often struggle with efficiency and generalization. Deep learning techniques, offering powerful representations, have shown promise, yet few fully integrate Retinex theory into their architectures. This creates a gap for developing approaches that balance high performance and efficiency, a key limitation of current methods. Therefore, there’s a strong need for novel frameworks that leverage the strengths of both Retinex theory and deep learning to overcome limitations, improve performance on multi-exposure correction, and achieve efficient and robust results across various exposure scenarios. The goal is to develop methods with good generalizability, moving beyond the limitations of approaches that focus solely on under- or over-exposed image restoration.

Ablation Studies#

Ablation studies systematically assess the contribution of individual components within a complex model. In the context of a research paper, these studies are crucial for demonstrating the model’s design choices and their impact on performance. By removing or altering specific parts of the model—for example, a particular module, layer, or hyperparameter—and observing the effect on key metrics, researchers can gain a deeper understanding of their model’s strengths and weaknesses. Well-designed ablation studies are vital for establishing causality, showing that performance improvements aren’t solely due to increased model complexity. The results provide valuable insights into the model’s architecture and help to pinpoint critical components that contribute most significantly to its overall effectiveness. A robust ablation study strengthens the paper’s claims by providing strong empirical evidence to support the design decisions. This detailed analysis also helps identify potential areas for future work, guiding further model improvements by highlighting the areas most in need of refinement.

Future of EC#

The future of exposure correction (EC) likely involves deeper integration of physics-based models like Retinex theory with advanced deep learning architectures. This will enable more robust and generalizable EC methods capable of handling diverse and challenging imaging conditions. Improved efficiency is crucial; future EC models must achieve high performance with lower computational cost, ideally suitable for real-time applications and resource-constrained devices. Addressing multi-exposure scenarios will be another key development, requiring sophisticated algorithms that can seamlessly reconcile varying exposures within a single image or across a sequence of images. Further research should focus on handling more complex degradations, moving beyond basic over- and under-exposure to address issues like noise, artifacts, and color distortion, and improving the EC model’s ability to preserve fine details and structural information. This could leverage advances in generative models and other image restoration techniques. The ultimate goal is to create versatile and user-friendly EC tools applicable across various applications, from consumer photography to scientific imaging and medical diagnostics.

More visual insights#

More on figures

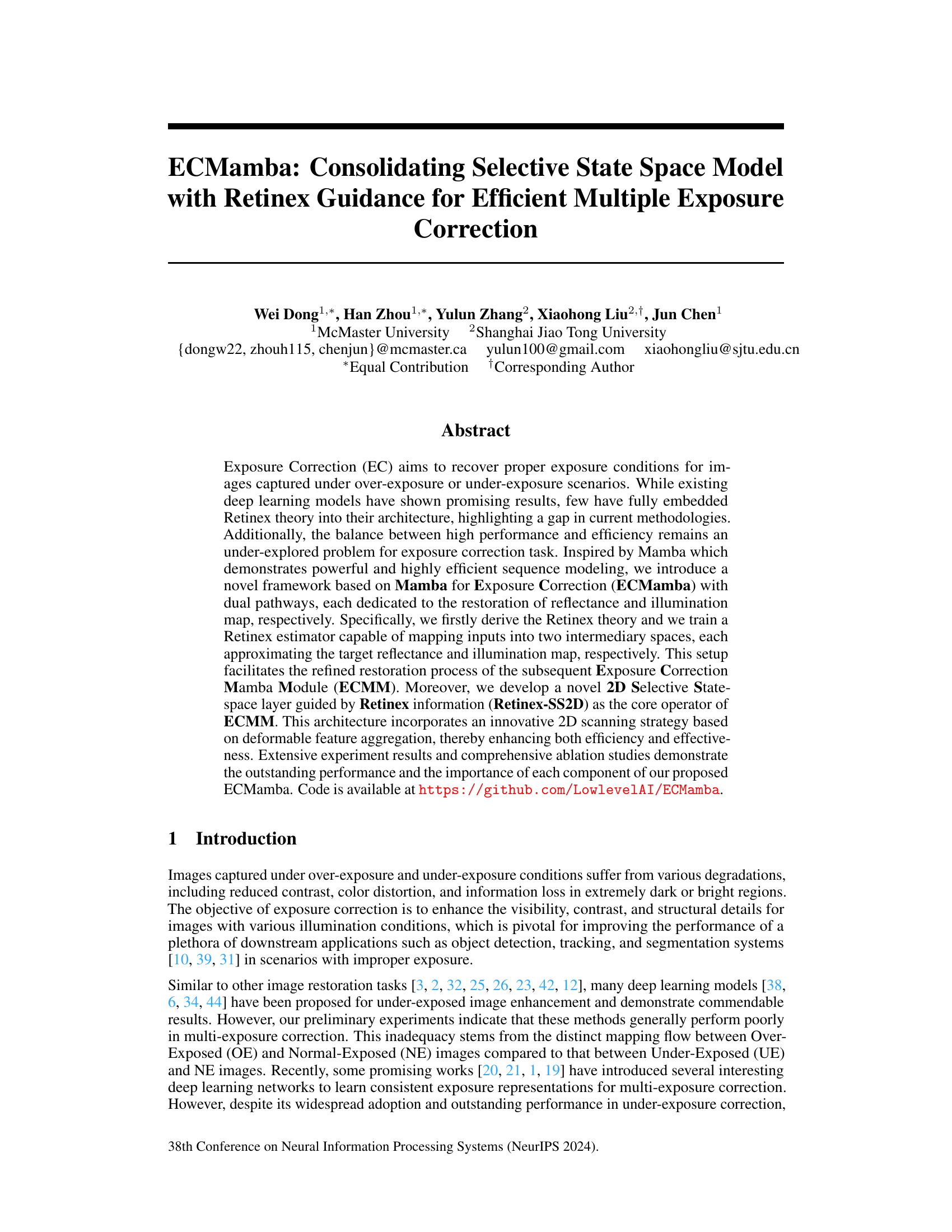

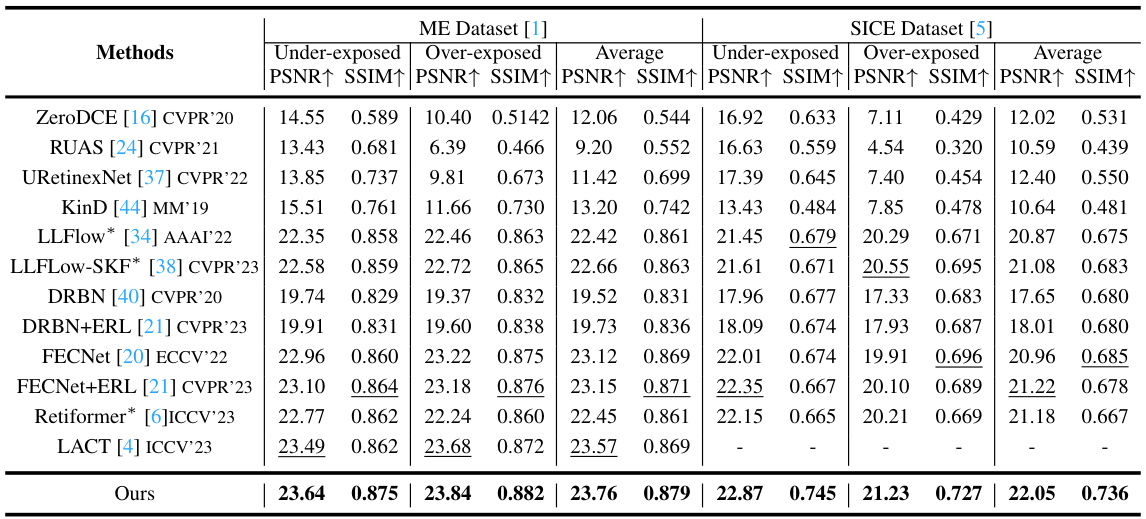

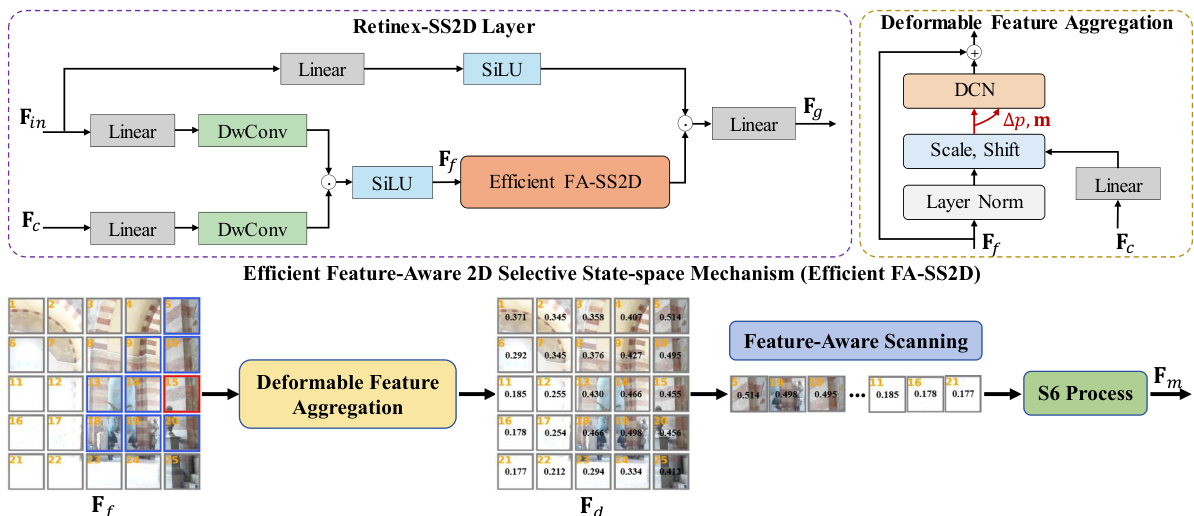

This figure shows the overall architecture of the proposed Retinex-based framework for exposure correction. It consists of three main components: 1. Retinex Estimator: This component takes the input image (I) and estimates the reflectance (R) and illumination (L) maps using Retinex theory. The output of the Retinex Estimator is then used to refine the reflectance and illumination maps further. 2. Exposure Correction Mamba Module (ECMM): This module consists of two branches, one for processing the reflectance map (R’) and another for processing the illumination map (L’). Each branch uses a Retinex Mamba Block (RMB) to refine the respective maps. The RMB incorporates a Retinex-SS2D layer, an innovative scanning strategy that enhances both efficiency and effectiveness. 3. Final Output: The final output (Iout) of the system is obtained by combining the refined reflectance and illumination maps. The result demonstrates an enhanced image with improved contrast, color, and structural details. The figure also includes detailed diagrams of the ECMM and RMB components, showing the specific layers and operations involved in each.

This figure details the Retinex-SS2D layer, a core component of the ECMamba model for exposure correction. It shows how input features are fused with Retinex guidance, followed by deformable convolution for feature aggregation. A novel feature-aware scanning strategy then orders the aggregated features by importance before processing with the Selective State Space mechanism of Mamba.

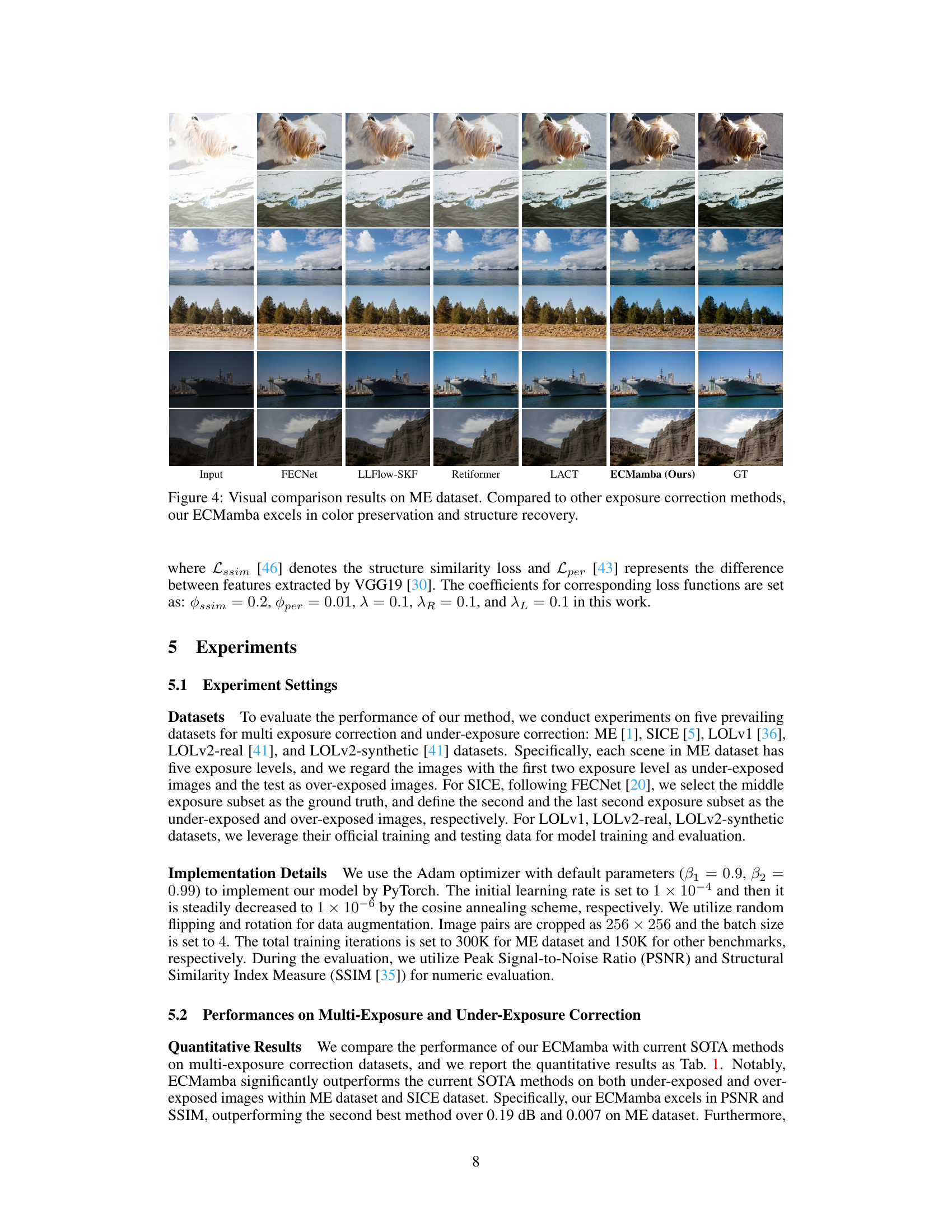

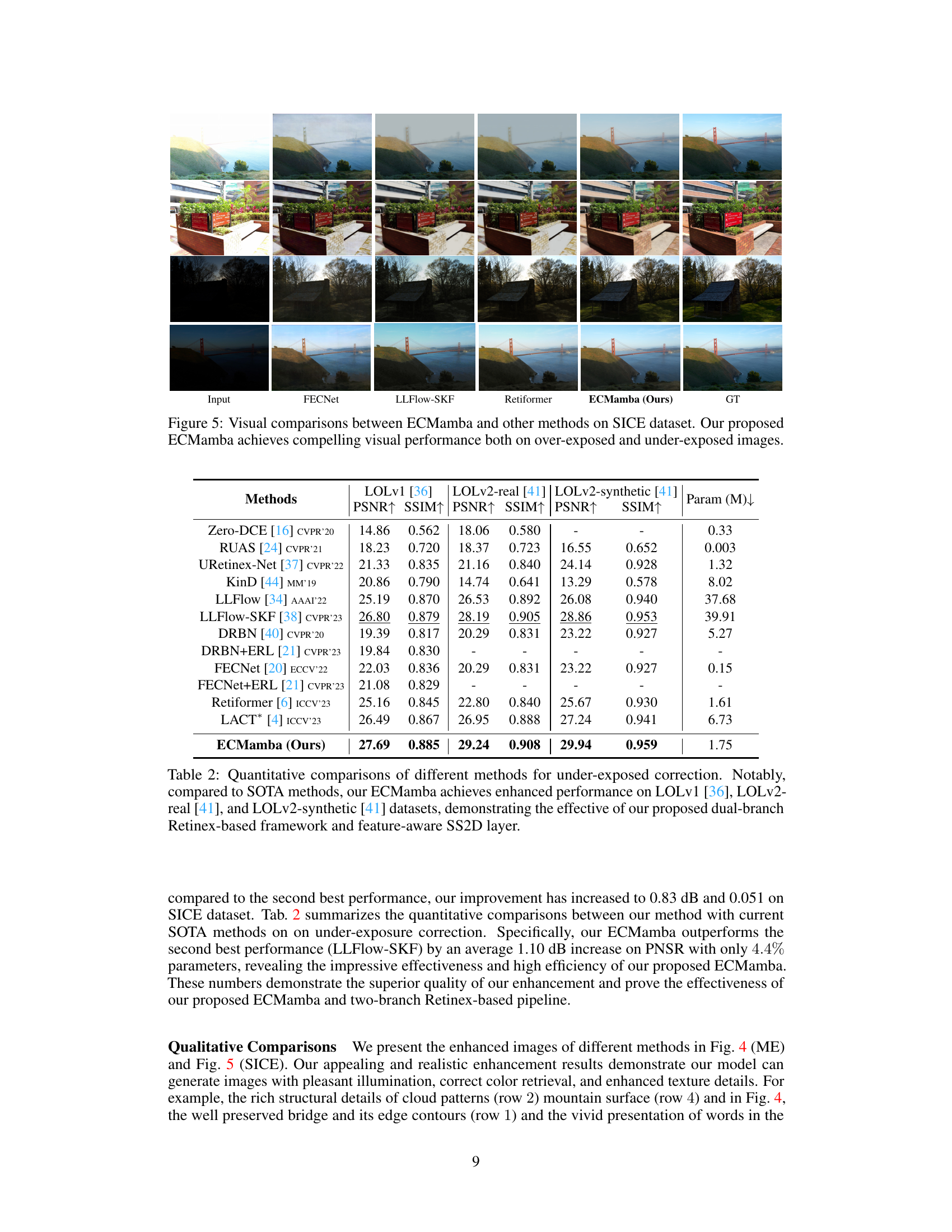

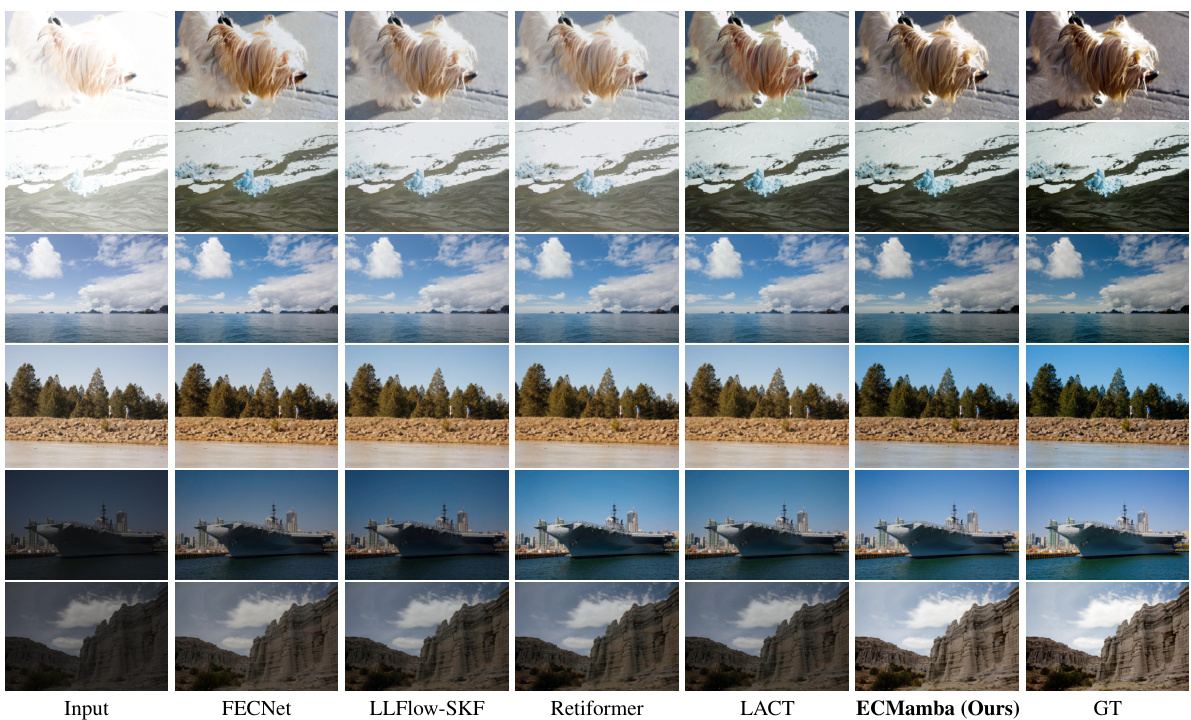

This figure showcases a visual comparison of different exposure correction methods on the ME dataset. It presents input images along with the results produced by FECNet, LLFlow-SKF, Retiformer, LACT, and the proposed ECMamba method. The ground truth (GT) is also included for comparison. The figure highlights that ECMamba performs better than other methods in preserving color fidelity and restoring structural details in the corrected images, particularly in maintaining sharp edges and clear textures.

This figure shows a visual comparison of the results obtained by different exposure correction methods on the ME dataset. The methods compared include FECNet, LLFlow-SKF, Retiformer, and the authors’ proposed ECMamba. The results are displayed in image rows for four different scenes (two overexposed and two underexposed). The ground truth (GT) is presented as the final column for comparison. The caption highlights that ECMamba outperforms other methods in preserving color and maintaining image details.

More on tables

This table presents a quantitative comparison of various methods for multi-exposure correction using two datasets, ME and SICE. The performance metrics used are PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index). Higher values indicate better performance. The table includes the names of the compared methods, their source publications, and the obtained PSNR and SSIM values for under-exposed and over-exposed images. The table also indicates whether the results were taken from the original papers or obtained by running the authors’ code using publicly available pre-trained models.

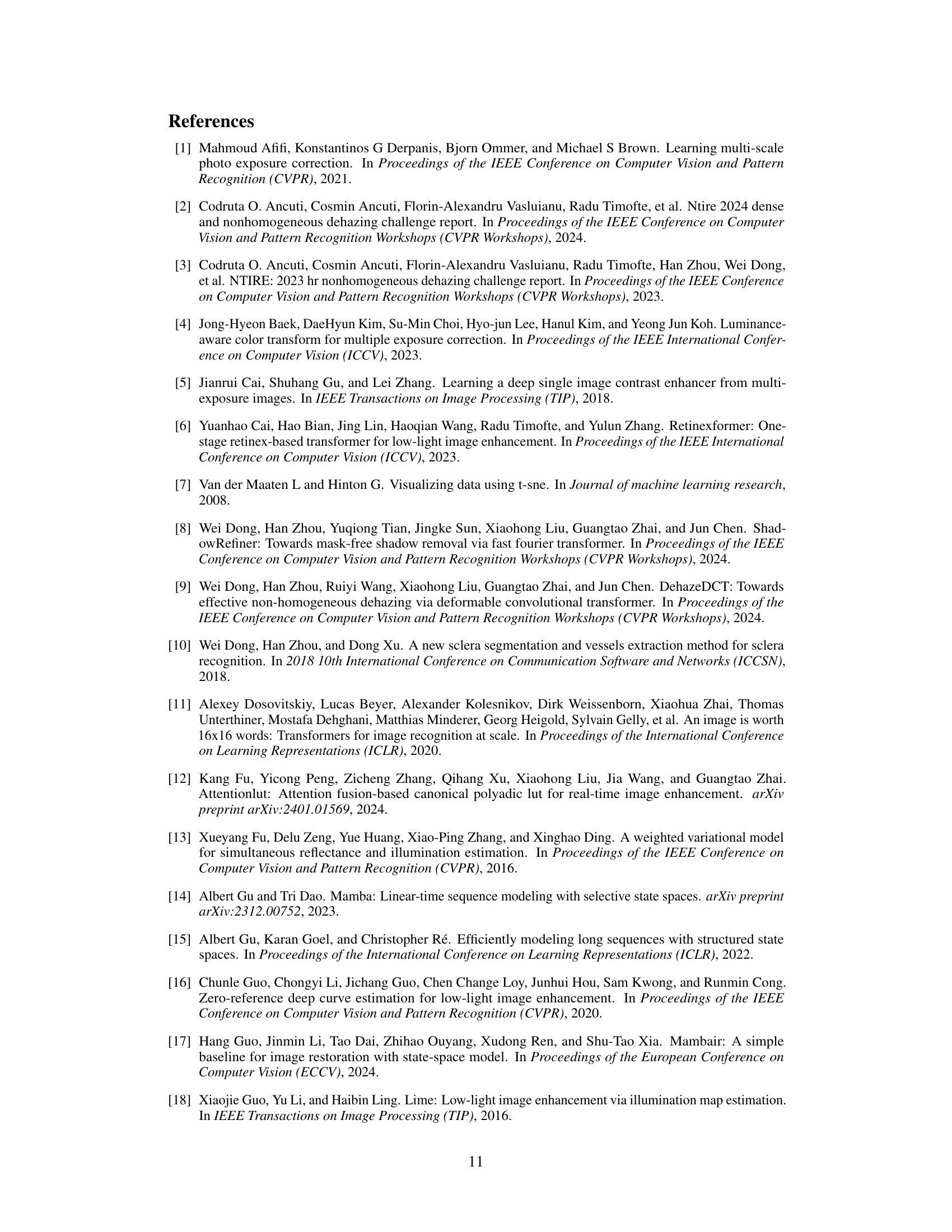

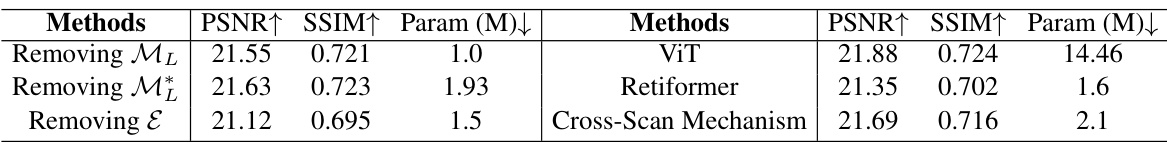

This ablation study investigates the contribution of different components in the proposed ECMamba model for exposure correction. The left side evaluates the two-branch Retinex-based framework by removing either the illumination branch (ML), reflectance branch (MR), or the Retinex estimator (E). The right side compares ECMamba against other methods by replacing the core module with Vision Transformer (ViT) and Retiformer, and by replacing the FA-SS2D with a cross-scan mechanism, showing the superiority of the proposed approach.

Full paper#