TL;DR#

Blind image restoration aims to recover high-quality images from degraded ones, but existing methods often assume specific degradation models, limiting real-world applications. Blind image restoration problems become challenging when the degradation function is entirely unknown. Previous methods using diffusion models also rely on pre-defined degradation models or manually-set hyperparameters, limiting their versatility and practicality.

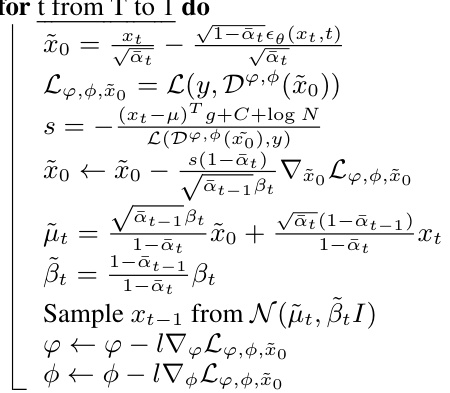

This paper introduces BIR-D, a novel approach using an optimizable convolutional kernel to dynamically simulate the degradation process. The kernel’s parameters are updated during the diffusion sampling steps, allowing BIR-D to adapt to diverse degradations. The authors also derive an empirical formula for adaptive guidance scale, eliminating the need for grid search and improving performance. Experiments demonstrate BIR-D’s superior performance and versatility across various image restoration tasks, including those with multiple and complex degradations.

Key Takeaways#

Why does it matter?#

This paper is important because it presents BIR-D, a novel and versatile approach to blind image restoration that significantly advances the field. Its ability to handle diverse and complex degradations without requiring prior knowledge of the degradation model opens exciting new avenues for research and practical applications. Researchers will find the empirical formula for adaptive guidance and the universal degradation function particularly valuable for improving existing image restoration models and developing new ones. The readily available code further enhances the paper’s impact, facilitating wider adoption and future research.

Visual Insights#

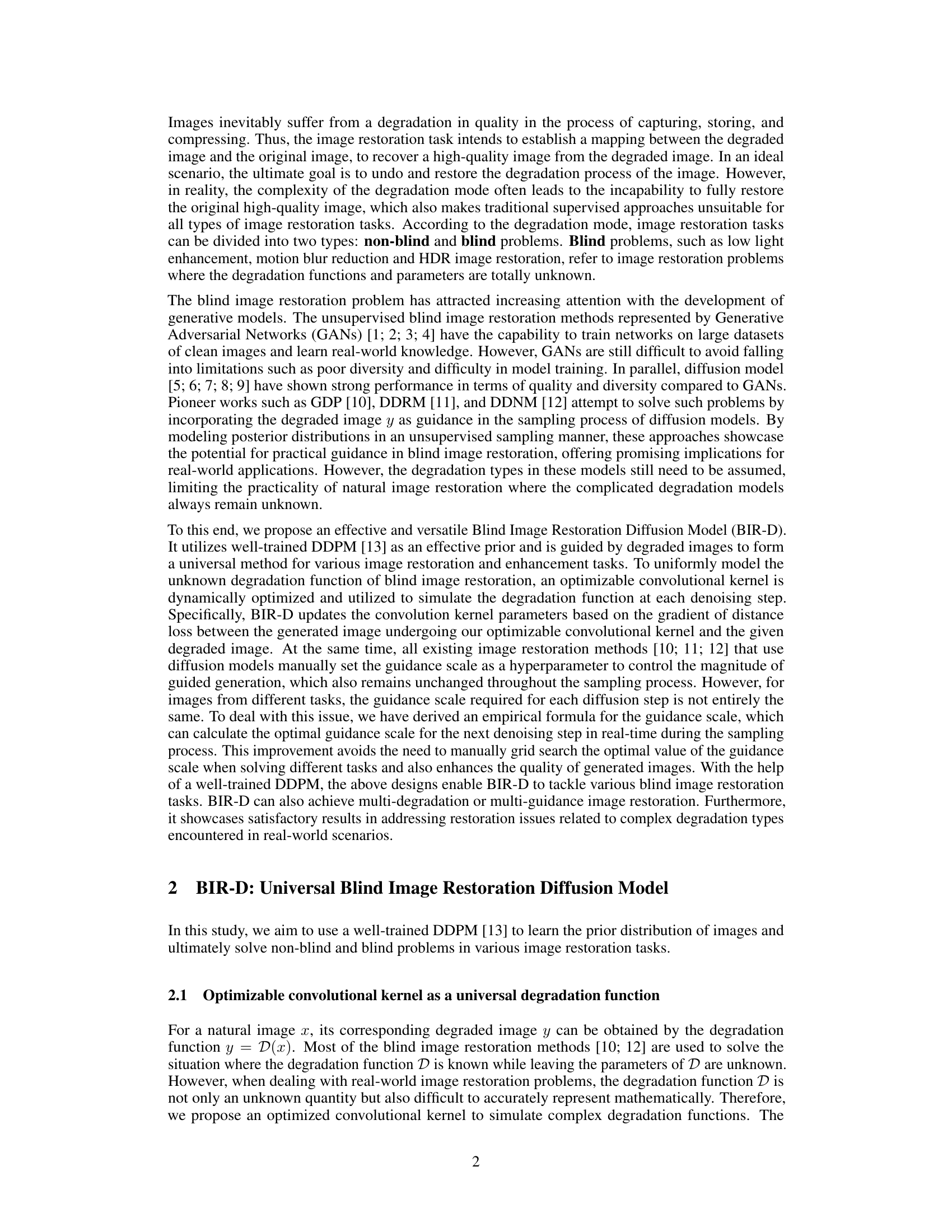

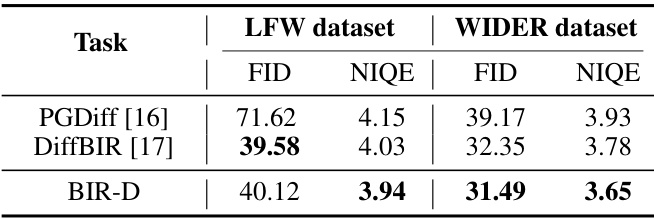

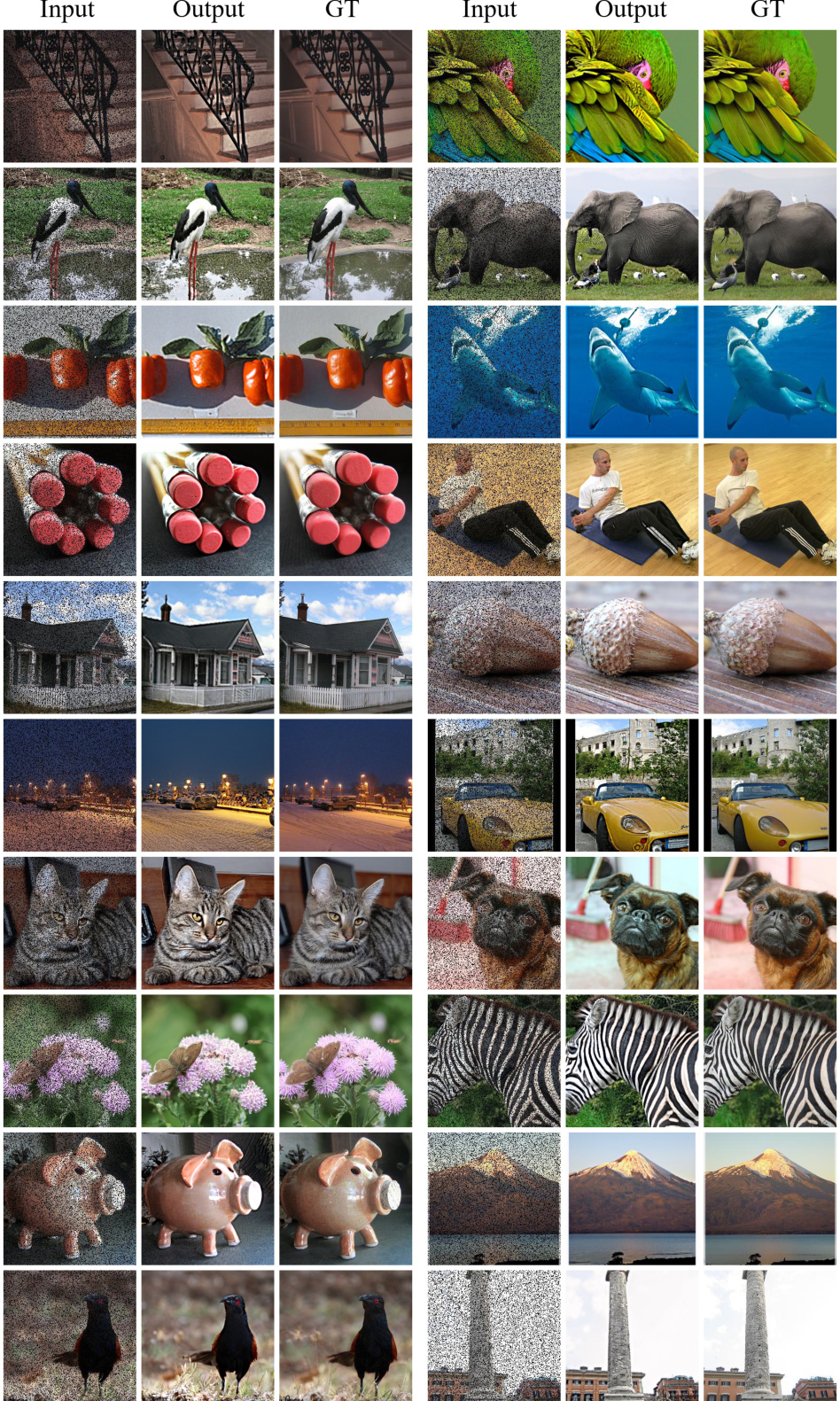

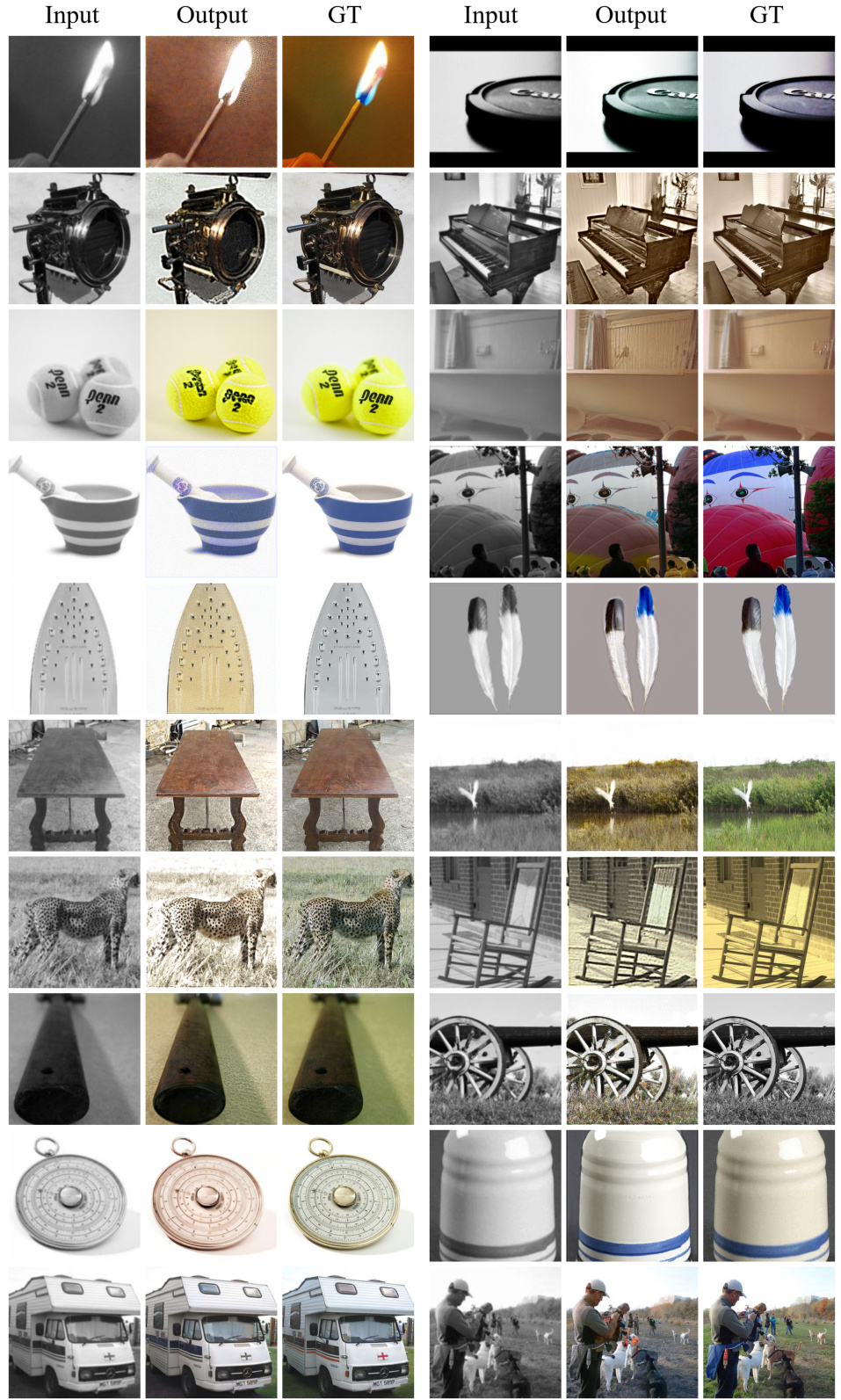

🔼 This figure shows several examples of image restoration using the proposed BIR-D model. It demonstrates the model’s ability to handle various types of image degradation, including blur, low resolution, occlusion, grayscale conversion, and mixed degradations. The figure highlights BIR-D’s capability to produce high-quality restorations in both linear inverse problems (where the degradation function is known) and blind scenarios (where the degradation function is unknown).

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

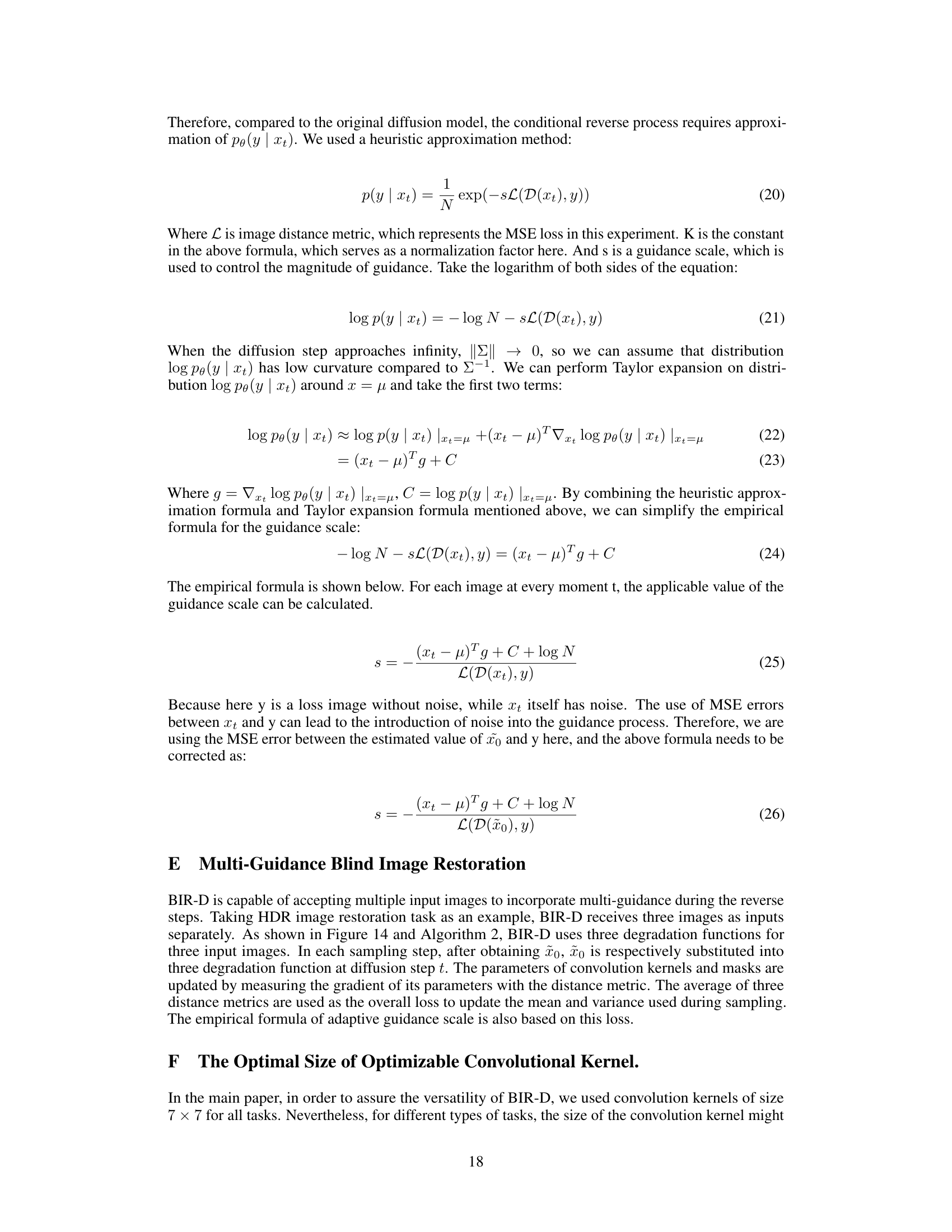

🔼 This table presents a quantitative comparison of blind face restoration results achieved by three different methods: PGDiff, DiffBIR, and BIR-D. The comparison is done using two metrics: Fréchet Inception Distance (FID) and Natural Image Quality Evaluator (NIQE) on two benchmark datasets: LFW and WIDER. Lower FID and NIQE scores indicate better image quality and more natural-looking results.

read the caption

Table 1: Quantitative comparison of blind face restoration on LFW and WIDER datasets

In-depth insights#

Universal Restoration#

The concept of “Universal Restoration” in image processing suggests a single model capable of handling diverse degradation types without model retraining or significant parameter tuning. This is a significant advancement over traditional methods, which typically require separate models or extensive hyperparameter searches for specific degradations (like blur, noise, compression artifacts). A truly universal approach would significantly reduce the engineering effort needed for image restoration applications and improve real-world applicability. However, such universality likely presents trade-offs. A complex, universal model might compromise performance on individual degradation types compared to specialized models, and achieving true universality across all image degradations is a significant research challenge. The practical implications are substantial: faster processing times, reduced storage requirements for multiple models, and more robust handling of unknown or mixed degradations in real-world scenarios. The central challenge is finding the right balance between model complexity, generalization ability, and performance on specific tasks. A key aspect to consider in evaluating a universal restoration model is how effectively it deals with diverse and possibly unknown combinations of degradations.

Adaptive Guidance#

Adaptive guidance, in the context of image restoration using diffusion models, represents a significant advancement over traditional methods. Instead of using a fixed guidance scale throughout the denoising process, adaptive guidance dynamically adjusts this scale based on factors such as the generated image quality, the level of noise, and the characteristics of the degraded image. This dynamic adjustment allows the model to better balance the contributions of the prior (clean image distribution) and the guidance signal (degraded image), leading to improved restoration results, particularly in the presence of complex or unknown degradations. The use of an empirical formula to automatically calculate the optimal guidance scale is a key innovation, eliminating the need for manual hyperparameter tuning. This intelligent approach enhances the model’s robustness and generalizability across various image restoration tasks, ultimately yielding more accurate and visually appealing restorations than fixed-guidance approaches.

Kernel Optimization#

Optimizing the convolutional kernel is crucial for effective blind image restoration. The core idea is to dynamically learn the degradation function during the denoising process, rather than assuming a pre-defined model. This approach enhances the model’s adaptability to diverse real-world degradation scenarios. A key challenge is that the degradation is unknown, so an optimizable convolutional kernel is used to simulate it. This kernel’s parameters are updated iteratively based on the reconstruction loss, allowing the model to adapt to complex degradation patterns and achieve superior restoration quality in varied conditions. The effectiveness of this method relies on the ability of the model to learn the right kernel parameters efficiently. The optimization strategy itself significantly impacts the performance of the restoration. Therefore, the choice of optimization algorithm and loss function are important elements that should be carefully considered and potentially adapted based on the specific degradation characteristics.

Multi-degradation#

The heading ‘Multi-degradation’ suggests the research paper investigates image restoration scenarios involving multiple, complex degradation types simultaneously. This is a significant advancement over traditional methods that usually focus on single degradation forms (e.g., blur, noise). The ability to handle diverse combined degradations, such as blur and noise or low-light and compression artifacts, showcases the robustness and real-world applicability of the proposed model. This implies the model has a superior capability to generalize beyond controlled laboratory settings. The results likely demonstrate that the model effectively learns a more general representation of image degradation, proving its versatility. The approach is likely data-driven, relying on large and diverse datasets to learn and disentangle these complex degradations. Overall, it represents a major step toward creating more practical and powerful image restoration techniques.

Future Directions#

The research paper on “Taming Generative Diffusion Prior for Universal Blind Image Restoration” presents a novel approach for image restoration using an optimizable convolutional kernel. Future directions could explore several promising avenues. Firstly, improving the model’s efficiency is crucial; current methods are computationally expensive, limiting their applicability. Exploring more efficient diffusion models or optimization techniques could significantly enhance real-world usability. Secondly, the current model assumes a single degradation type per image; expanding to handle multiple, concurrent degradations with varying intensities and types would boost the model’s generalizability and practicality. Investigating the integration of prior information from other modalities, such as depth maps or semantic segmentation, could further improve the model’s performance and robustness. Finally, rigorous testing on more diverse real-world datasets and addressing potential biases are needed to validate its generalization capabilities for broader applications.

More visual insights#

More on figures

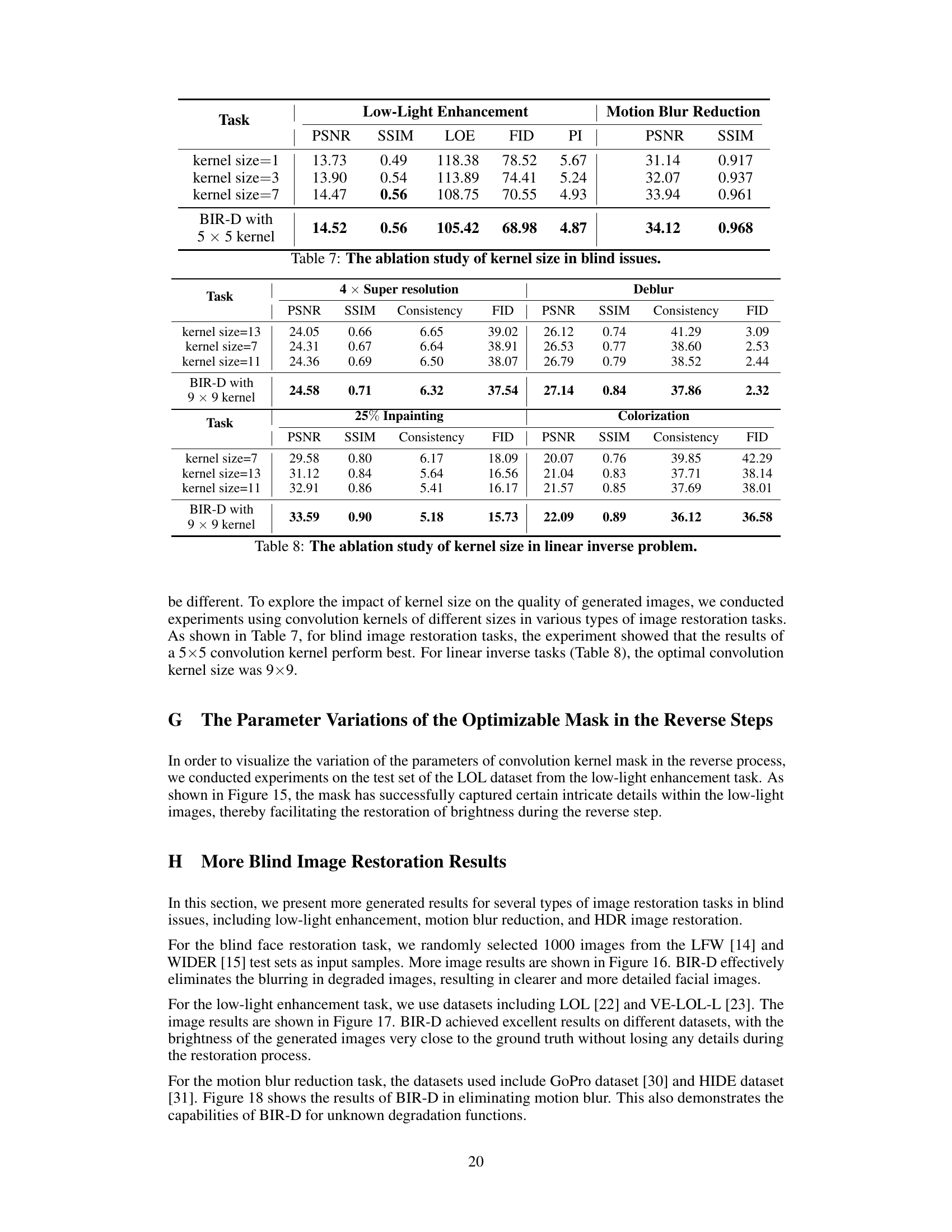

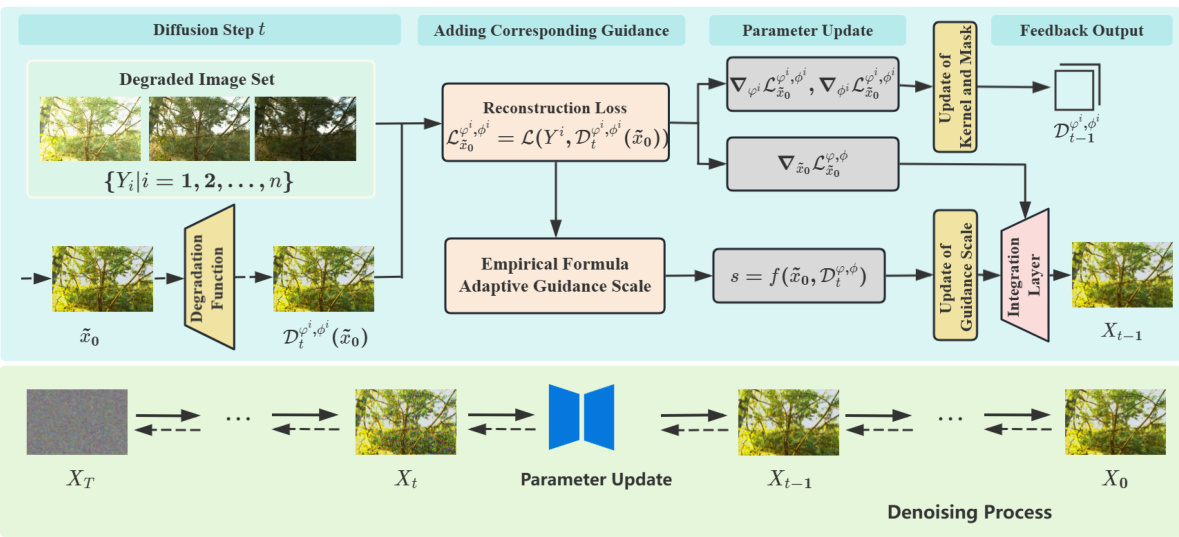

🔼 This figure provides a detailed overview of the BIR-D model, highlighting its key components and their interactions during the image restoration process. It shows how degraded images are used as guidance to optimize a dynamically updated degradation model within the diffusion framework. The adaptive guidance scale calculation method is also illustrated.

read the caption

Figure 2: Overview of BIR-D. Degraded image y was given during the sampling process. BIR-D systematically incorporates guidance from degraded images in the reverse process of the diffusion model and optimizes the degraded model at the same time. For degraded image y, pre-training is first performed to provide a better initial state for BIR-D. BIR-D introduces a distance function in each step of the reverse process of the diffusion model to describe the distance loss between the degraded image y and the generated image x0 after the degradation function, so that the gradient could be used to update and simulate a better degradation function. Based on the empirical formula, the adaptive guidance scale can be calculated to provide optimal guidance during the sampling process.

🔼 This figure provides a visual overview of the proposed Blind Image Restoration Diffusion Model (BIR-D). It shows how degraded images are used as guidance during the reverse diffusion process, and how an optimizable convolutional kernel dynamically simulates the degradation process. The adaptive guidance scale is also highlighted, showing how it improves the quality and versatility of the model.

read the caption

Figure 2: Overview of BIR-D. Degraded image y was given during the sampling process. BIR-D systematically incorporates guidance from degraded images in the reverse process of the diffusion model and optimizes the degraded model at the same time. For degraded image y, pre-training is first performed to provide a better initial state for BIR-D. BIR-D introduces a distance function in each step of the reverse process of the diffusion model to describe the distance loss between the degraded image y and the generated image x0 after the degradation function, so that the gradient could be used to update and simulate a better degradation function. Based on the empirical formula, the adaptive guidance scale can be calculated to provide optimal guidance during the sampling process.

🔼 This figure displays a comparison of the image quality achieved by three different blind face restoration methods: PGDiff, DiffBIR, and BIR-D. The comparison is made across two datasets: LFW and WIDER. For each dataset, sample degraded images are shown alongside their restorations by each method, enabling a visual assessment of the quality of restoration. This visual comparison allows one to quickly assess the relative performance of each method for restoring faces degraded by various forms of blur and other artifacts.

read the caption

Figure 3: Comparison of image quality for blind face restoration results on LFW [14] and WIDER dataset [15].

🔼 This figure compares the colorization results of BIR-D with those of DDRM, DDNM, and GDP on ImageNet 1k. The figure shows that BIR-D generates a variety of colorized images from the same grayscale input, highlighting its versatility and ability to produce diverse outputs.

read the caption

Figure 4: Comparison of colorization image on ImageNet 1k[18]. BIR-D can generate various outputs on the same input image.

🔼 This figure showcases the capabilities of the BIR-D model in handling various image degradation types. It demonstrates the model’s performance on three categories of image restoration problems: (a) Linear inverse problems where the degradation function is known (e.g., deblurring, super-resolution). (b) Blind issues where the degradation function is unknown (e.g., low-light enhancement, motion blur reduction). (c) Mixed degradations and real-world scenarios combining multiple degradation types.

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

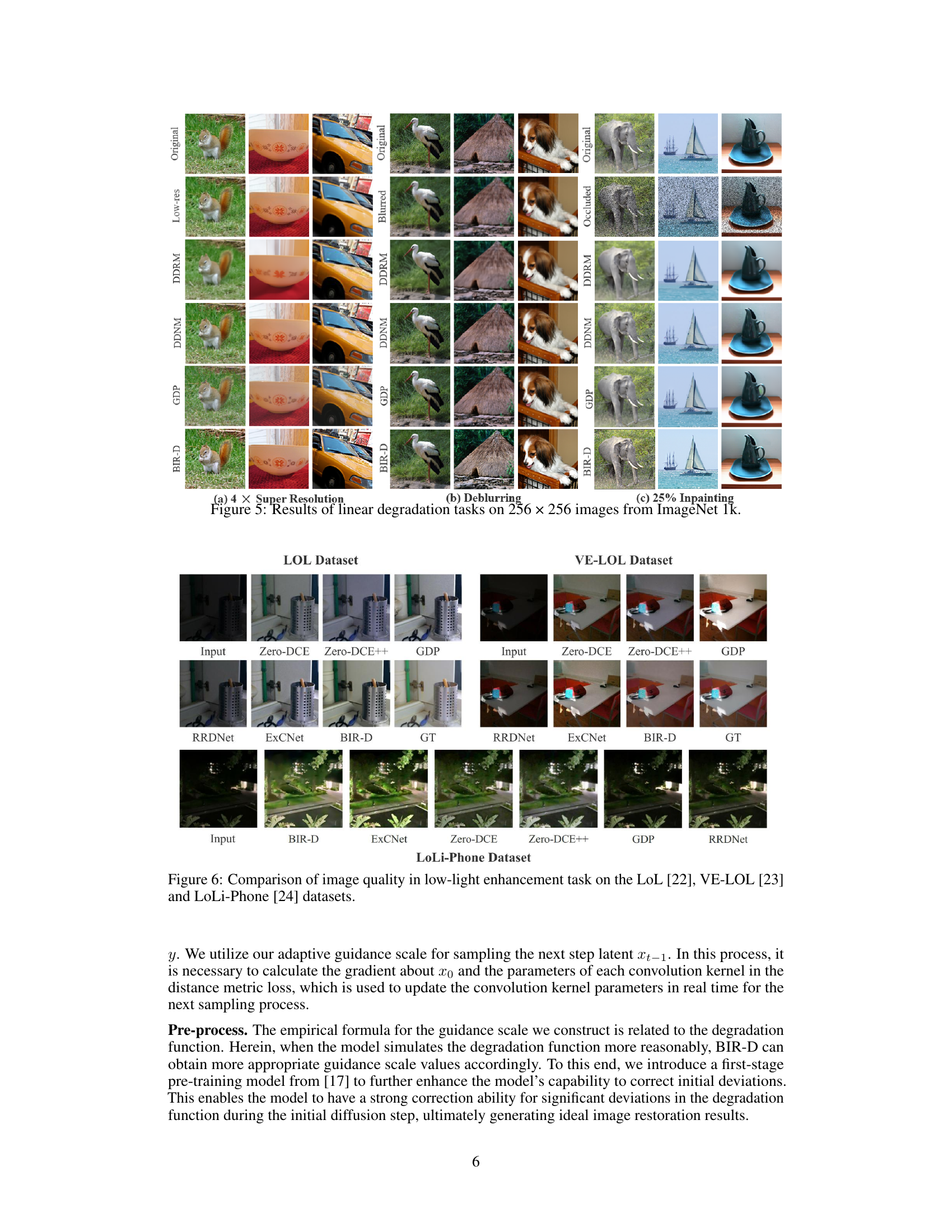

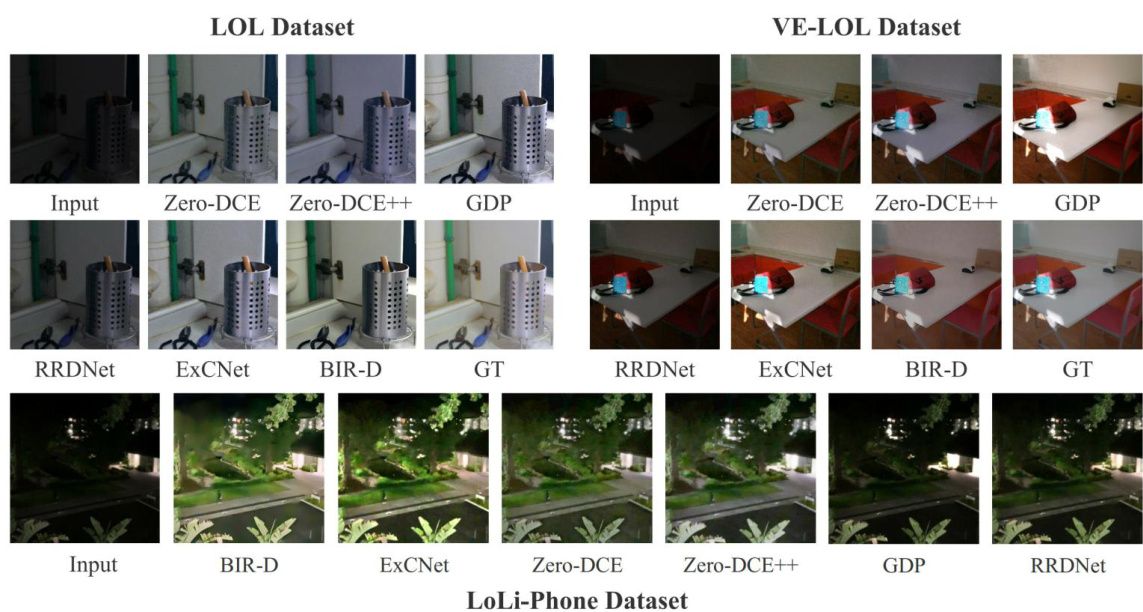

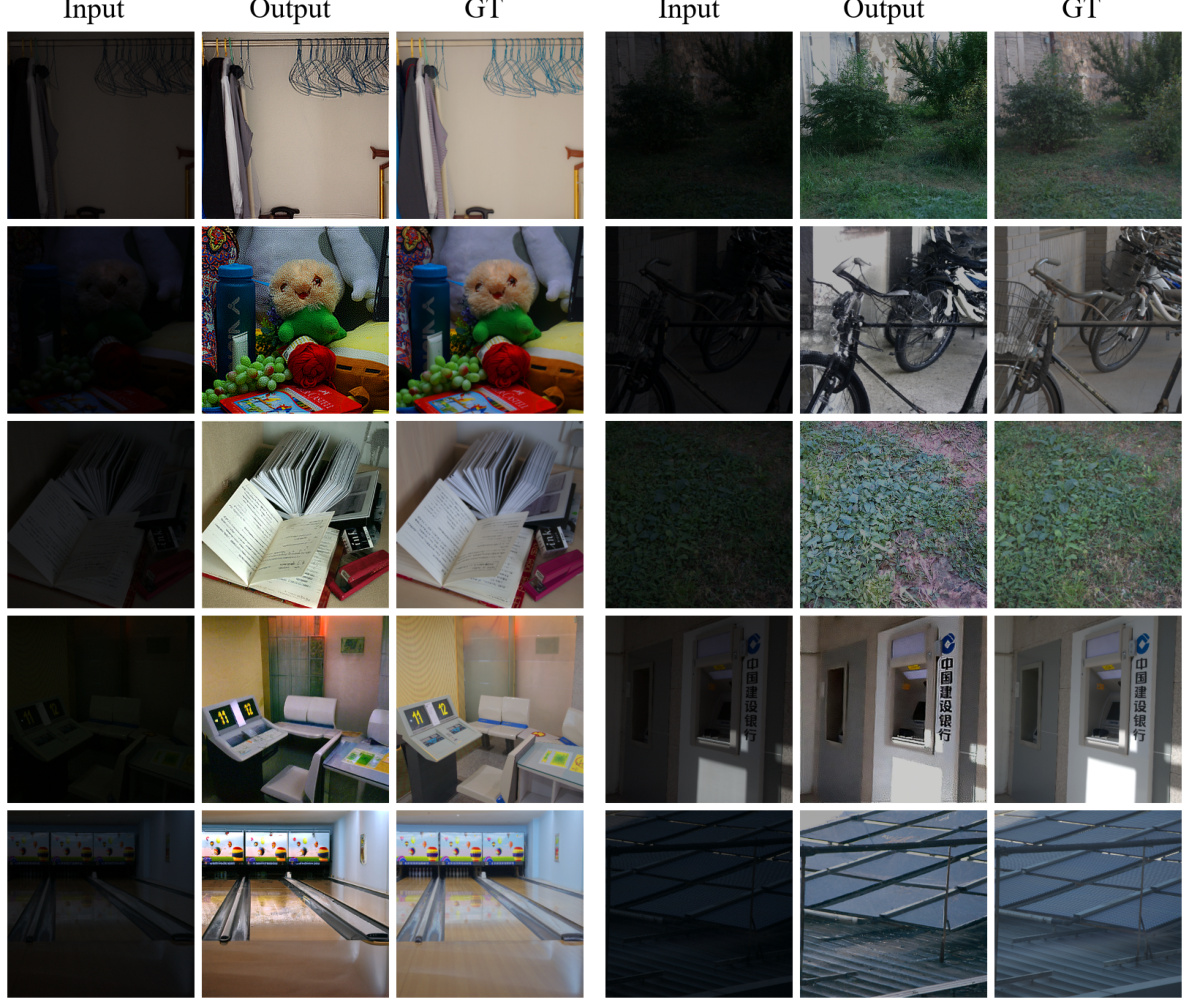

🔼 This figure compares the performance of different low-light enhancement methods on three datasets: LOL, VE-LOL, and LoLi-Phone. Each dataset presents a unique challenge in terms of image quality and lighting conditions. The figure visually demonstrates the relative improvements in image quality achieved by each method compared to the ground truth (GT). The methods compared include BIR-D, Zero-DCE, Zero-DCE++, GDP, RRDNet, and ExCNet. The results showcase BIR-D’s ability to enhance low-light images across various datasets, highlighting its versatility and effectiveness compared to other state-of-the-art methods.

read the caption

Figure 6: Comparison of image quality in low-light enhancement task on the LoL [22], VE-LOL [23] and LoLi-Phone [24] datasets.

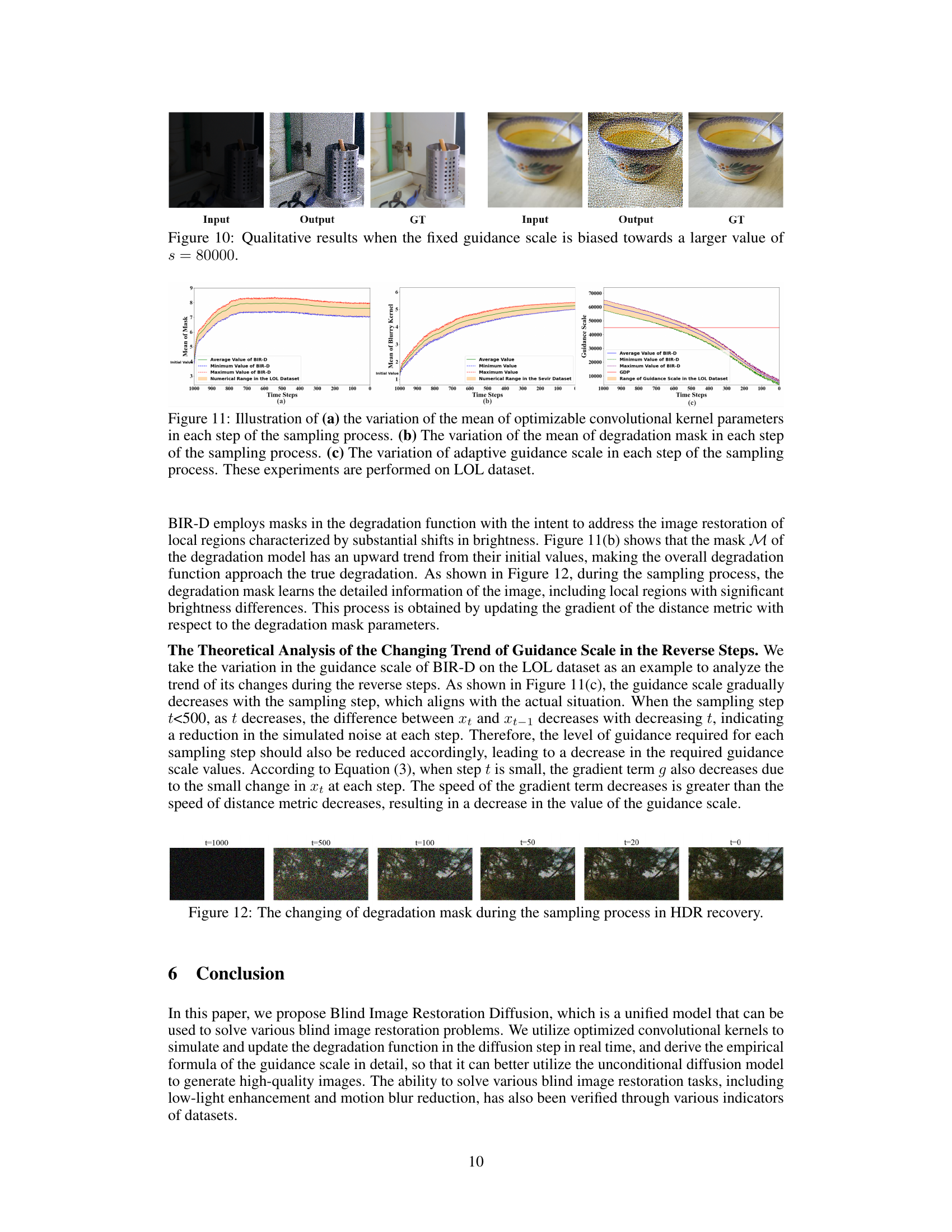

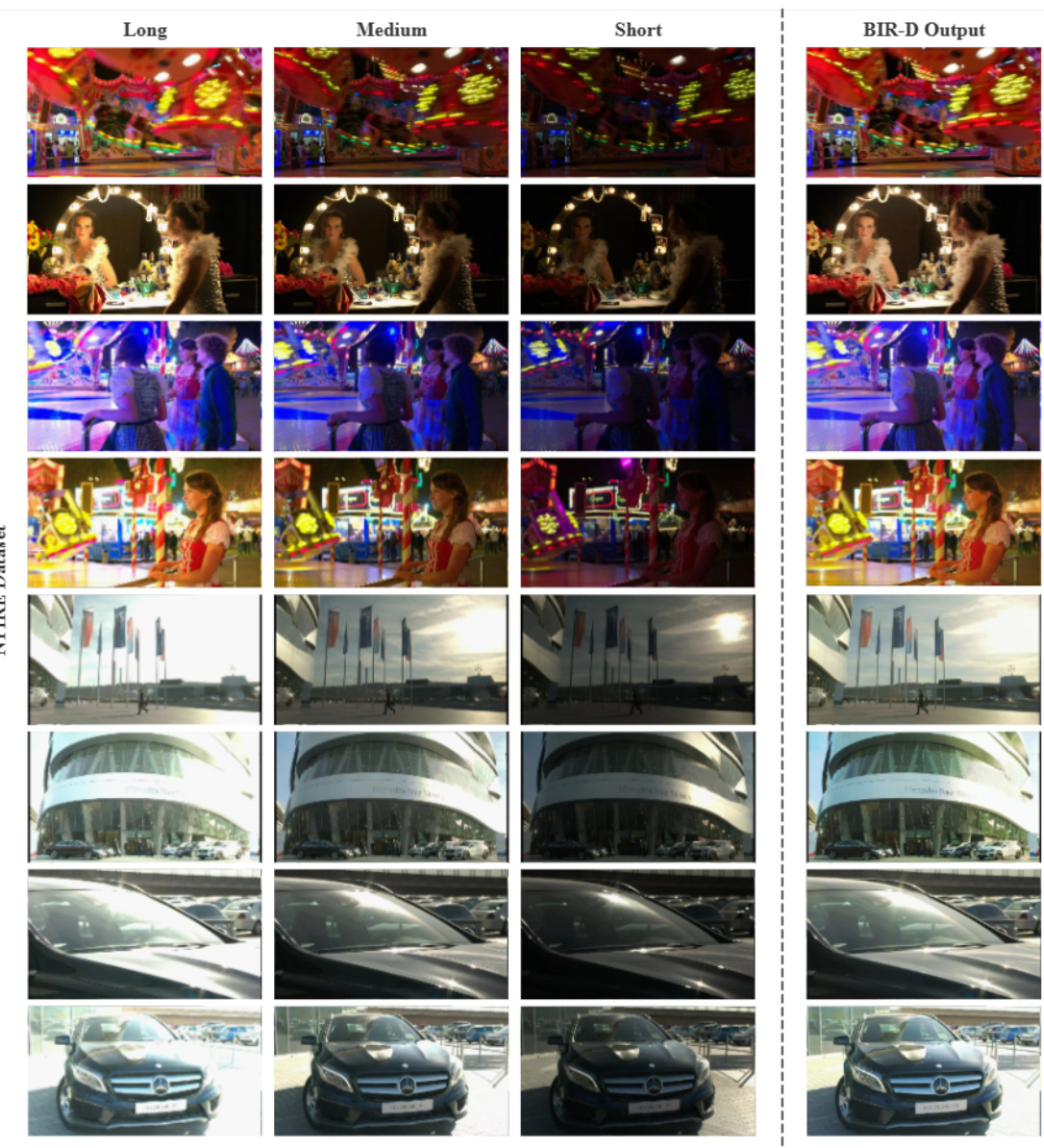

🔼 This figure compares the image quality of HDR image recovery results obtained using BIR-D with those from the NTIRE2021 Multi-Frame HDR Challenge dataset [29]. The figure shows that BIR-D achieves superior results in terms of visual quality and detail preservation compared to the ground truth.

read the caption

Figure 7: Comparison of image quality for HDR image recovery results on NTIRE [29].

🔼 This figure shows a comparison of the image quality achieved by different methods (PGDiff, DiffBIR, and BIR-D) for blind face restoration on two benchmark datasets: LFW and WIDER. The left side displays results from the LFW dataset, and the right shows results from the WIDER dataset. Each column represents a different method. Within each column, the top row shows the input degraded images, and the subsequent rows show the restored images produced by each method. The visual comparison allows one to assess the relative performance of each method in restoring facial details and overall image quality for challenging, real-world conditions.

read the caption

Figure 3: Comparison of image quality for blind face restoration results on LFW [14] and WIDER dataset [15].

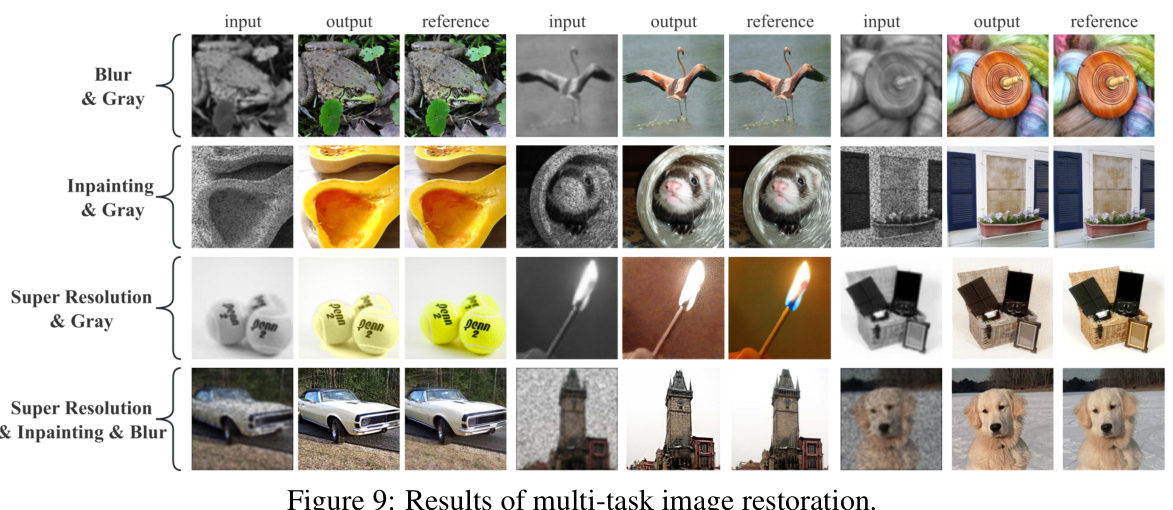

🔼 This figure shows the results of BIR-D on multi-task image restoration. Each row represents a different combination of degradations (blur, gray, inpainting, and super-resolution). The figure visually demonstrates the model’s ability to handle complex, real-world image degradation scenarios by showcasing input images, corresponding outputs generated by BIR-D, and ground truth images.

read the caption

Figure 9: Results of multi-task image restoration.

🔼 This figure showcases the model’s (BIR-D) ability to restore images degraded in various ways, including blurring, low resolution, occlusion, and combinations of these. The examples demonstrate its capability to handle both linear inverse problems (where the degradation is known) and blind restoration (where it is unknown). It highlights the model’s versatility and robustness.

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

🔼 This figure showcases the versatility of the BIR-D model in handling various image restoration tasks. Panel (a) demonstrates its effectiveness on linear inverse problems where the degradation function is known. Panel (b) highlights its ability to restore images with unknown degradation functions, showcasing its ‘blind’ image restoration capabilities. Finally, panel (c) illustrates BIR-D’s performance on complex scenarios involving mixed or real-world degradations.

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

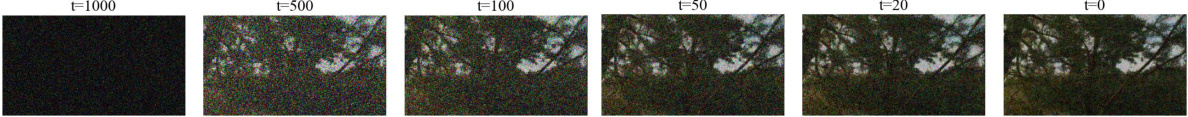

🔼 This figure shows the evolution of the degradation mask used in BIR-D’s low-light enhancement process during the sampling process (from t=1000 to t=0). The change in the mask’s appearance demonstrates how the model learns to correct for low-light conditions as the sampling progresses, refining its understanding of the image’s detailed structure and noise patterns.

read the caption

Figure 15: The changing of degradation mask during the sampling process in low light enhancement.

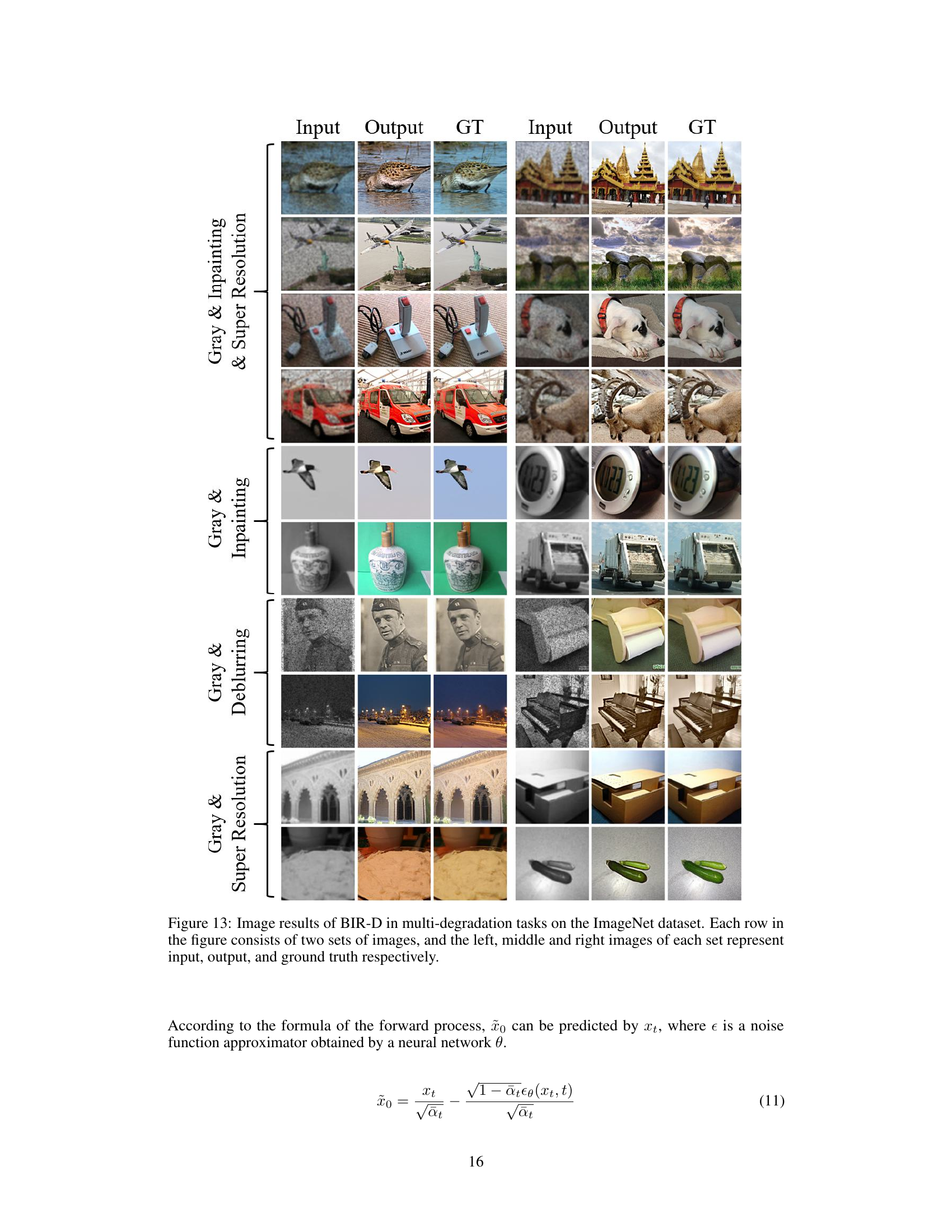

🔼 This figure shows the results of BIR-D on various multi-degradation tasks. Each row presents a different combination of degradations (e.g., gray, super-resolution, deblurring, inpainting). For each task, three images are shown: the original degraded input image, the restored image produced by BIR-D, and the ground truth (original, undegraded image) for comparison. The results demonstrate BIR-D’s ability to handle a variety of complex degradation scenarios.

read the caption

Figure 13: Image results of BIR-D in multi-degradation tasks on the ImageNet dataset. Each row in the figure consists of two sets of images, and the left, middle and right images of each set represent input, output, and ground truth respectively.

🔼 This figure provides a detailed overview of the Blind Image Restoration Diffusion Model (BIR-D). It illustrates how the model uses degraded images as guidance during the sampling process of a pre-trained diffusion model. A key innovation is the use of an optimizable convolutional kernel to dynamically model and update the degradation process. The figure also highlights the calculation of an adaptive guidance scale using an empirical formula, eliminating the need for manual parameter tuning. This adaptive scale enhances the quality of the image restoration process across diverse tasks.

read the caption

Figure 2: Overview of BIR-D. Degraded image y was given during the sampling process. BIR-D systematically incorporates guidance from degraded images in the reverse process of the diffusion model and optimizes the degraded model at the same time. For degraded image y, pre-training is first performed to provide a better initial state for BIR-D. BIR-D introduces a distance function in each step of the reverse process of the diffusion model to describe the distance loss between the degraded image y and the generated image x0 after the degradation function, so that the gradient could be used to update and simulate a better degradation function. Based on the empirical formula, the adaptive guidance scale can be calculated to provide optimal guidance during the sampling process.

🔼 This figure shows the variation of the degradation mask during the sampling process in low-light enhancement. The images visually represent the mask’s transformation across different time steps (t = 1000, 500, 100, 50, 20, 0). As the sampling progresses (t decreases), the mask adapts and refines its representation of the image’s noisy areas, becoming progressively more detailed in its depiction of the nuanced brightness variations. The initial stages display a more homogeneous mask, whereas the later stages show a granular mask focusing on specific regions.

read the caption

Figure 15: The changing of degradation mask during the sampling process in low light enhancement.

🔼 This figure shows a comparison of the image quality achieved by three different blind face restoration methods: PGDiff, DiffBIR, and BIR-D. The comparison is performed on two widely used datasets for evaluating face restoration: LFW and WIDER. The figure visually demonstrates the performance differences between the three models across different input images (degraded faces). Each column represents a different method, and each row showcases the results obtained on a different input image. By visually inspecting this figure, we can qualitatively assess the relative effectiveness of each restoration technique in recovering clear and high-quality facial images from degraded versions.

read the caption

Figure 3: Comparison of image quality for blind face restoration results on LFW [14] and WIDER dataset [15].

🔼 This figure shows several examples of images restored by the proposed BIR-D model. It demonstrates the model’s ability to handle various types of image degradation, including blur, low resolution, occlusion, grayscale conversion, and combinations thereof. The figure highlights BIR-D’s versatility in addressing both linear inverse problems (where the degradation is known) and blind issues (where the degradation is unknown).

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

🔼 This figure compares the image quality of motion blur reduction results obtained using different methods on two datasets: GoPro and HIDE. The left side shows results from the GoPro dataset and the right from the HIDE dataset. Each column represents: Ground Truth (GT), Input (degraded image), and BIR-D (results from the proposed method). The comparison visually demonstrates BIR-D’s performance in motion blur reduction compared to other methods (NAFNet and UFPNet) across different scene types.

read the caption

Figure 8: Comparison of image quality for motion blur reduction results on GoPro [30] and HIDE dataset [31].

🔼 This figure shows the results of HDR image recovery using the BIR-D model on the NTIRE2021 Multi-Frame HDR Challenge dataset. For each scene, three input images (long, medium, and short exposures) are shown alongside the corresponding BIR-D output. This demonstrates BIR-D’s ability to reconstruct high dynamic range images from multiple low dynamic range images with varying exposures.

read the caption

Figure 19: More image restoration results of HDR image recovery task on NTIRE2021 Multi-Frame HDR Challenge dataset.

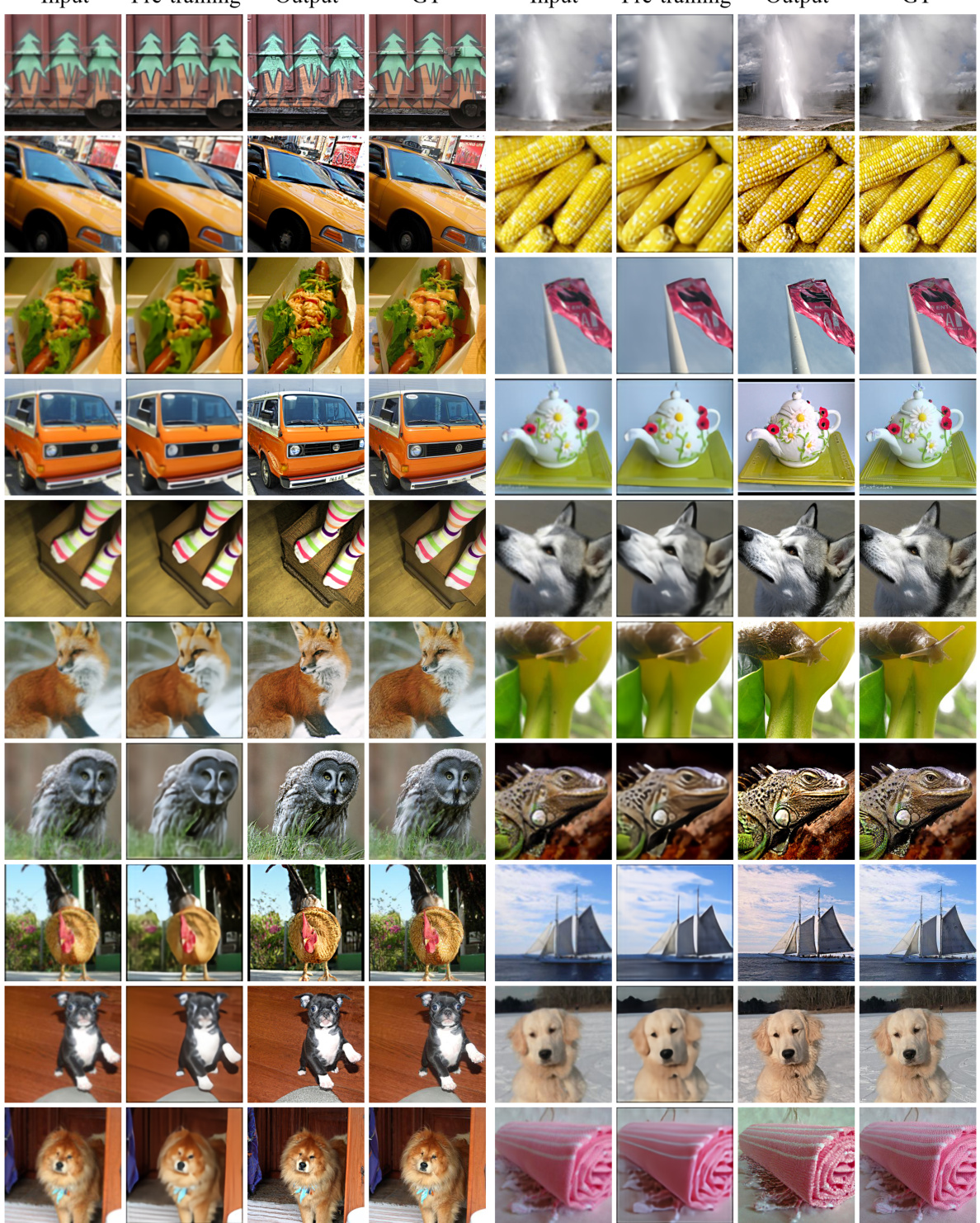

🔼 This figure shows the results of the deblurring task performed by the BIR-D model. Each row displays four images: the original blurry image, the image after a pre-training step (to improve the model’s initialization), the deblurred image produced by BIR-D, and the corresponding ground truth (the original sharp image). This visual comparison demonstrates the model’s ability to deblur various types of images.

read the caption

Figure 20: The image generation result of the deblurring task, where each horizontal row is composed of two sets of images, each set of images representing the input image, the image after pre-training model, the output image of BIR-D, and the ground truth from left to right.

🔼 This figure showcases the versatility of the BIR-D model in handling various image degradation types. It presents examples of image restoration across three categories: (a) linear inverse problems with known degradation functions (e.g., deblurring, super-resolution); (b) blind problems with unknown degradation functions (e.g., low-light enhancement, colorization); and (c) complex scenarios involving multiple or mixed degradations. The figure visually demonstrates the model’s ability to restore high-quality images even in challenging, real-world situations.

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

🔼 This figure displays a comparison of the image quality achieved by three different blind face restoration methods: PGDiff, DiffBIR, and BIR-D. The comparison is shown across two benchmark datasets: LFW and WIDER. Each column represents a method, and each row shows the restoration results for the same image. The figure visually demonstrates the superior performance of BIR-D in restoring high-quality facial images from degraded versions compared to PGDiff and DiffBIR.

read the caption

Figure 3: Comparison of image quality for blind face restoration results on LFW [14] and WIDER dataset [15].

🔼 This figure showcases the versatility of BIR-D in handling various image degradation types. It presents examples of BIR-D’s restoration capabilities across different tasks, including deblurring, super-resolution, inpainting, colorization, low-light enhancement, and HDR image recovery. The results demonstrate that BIR-D can effectively restore images even with unknown or complex degradation functions, highlighting its effectiveness in real-world scenarios.

read the caption

Figure 1: Blind Image Restoration Diffusion Model (BIR-D) can achieve high-quality restoration for different types of degraded images. BIR-D not only has the capability to restore (a) linear inverse problems when the degradation function is known. BIR-D can also achieve high-quality image restoration in (b) blind issues with unknown degradation functions, as well as in (c) mixed degradation and real degradation scenarios.

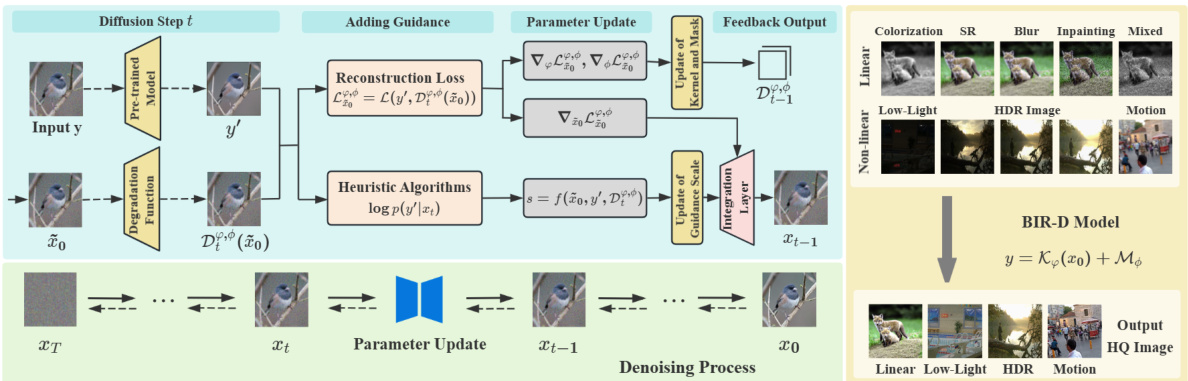

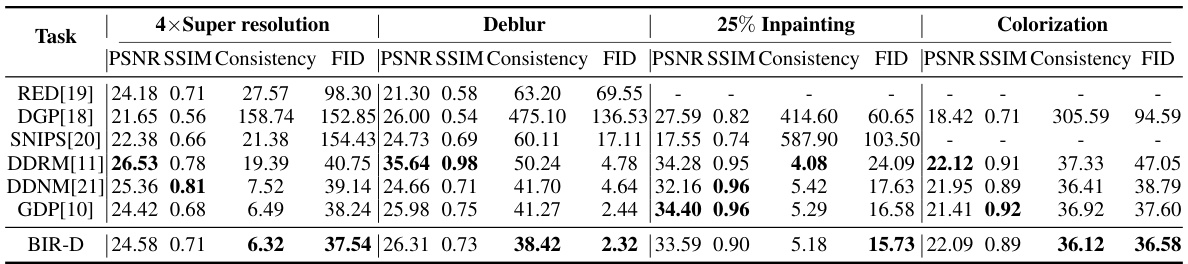

More on tables

🔼 This table presents a quantitative comparison of different linear inverse problems, including 4x super-resolution, deblurring, 25% inpainting, and colorization. The comparison is made across several state-of-the-art methods (RED, DGP, SNIPS, DDRM, DDNM, GDP) and the proposed BIR-D method. The metrics used for comparison are PSNR, SSIM, Consistency, and FID. Higher PSNR and SSIM values generally indicate better image quality, while lower FID and Consistency values suggest better image fidelity and fewer inconsistencies.

read the caption

Table 2: Quantitative comparison of linear inverse problems on ImageNet 1k[18].

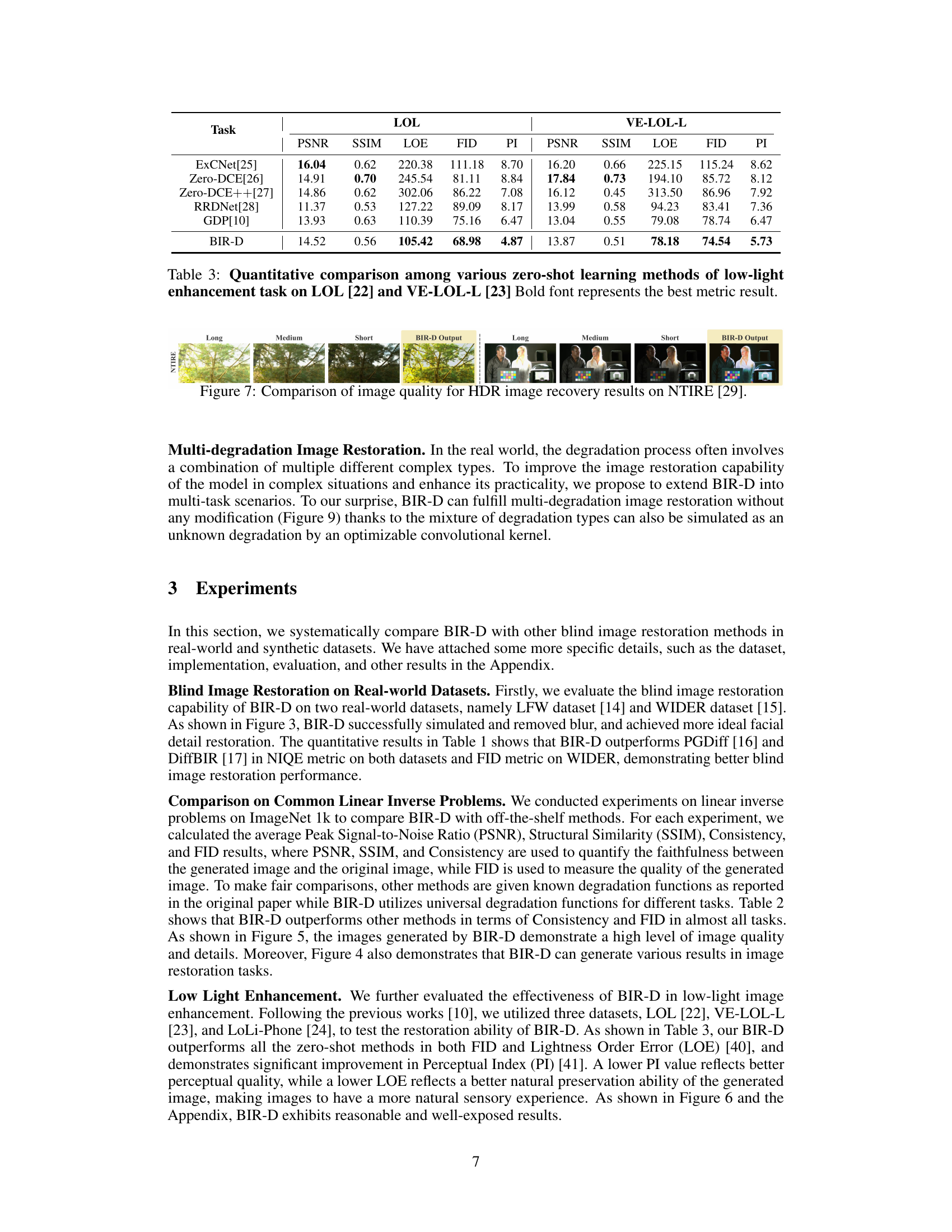

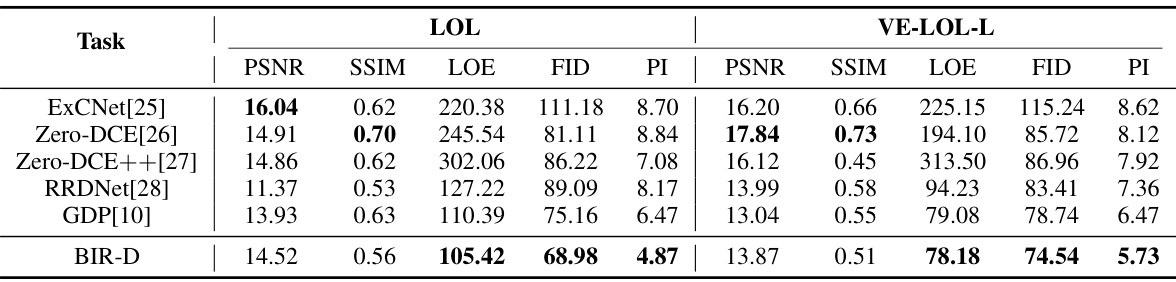

🔼 This table presents a quantitative comparison of different zero-shot learning methods for low-light image enhancement. It evaluates the performance on two datasets, LOL and VE-LOL-L, using metrics such as PSNR, SSIM, LOE, FID, and PI. The best result for each metric is highlighted in bold font. This allows for a direct comparison of the effectiveness of different methods in enhancing the quality of low-light images.

read the caption

Table 3: Quantitative comparison among various zero-shot learning methods of low-light enhancement task on LOL [22] and VE-LOL-L [23] Bold font represents the best metric result.

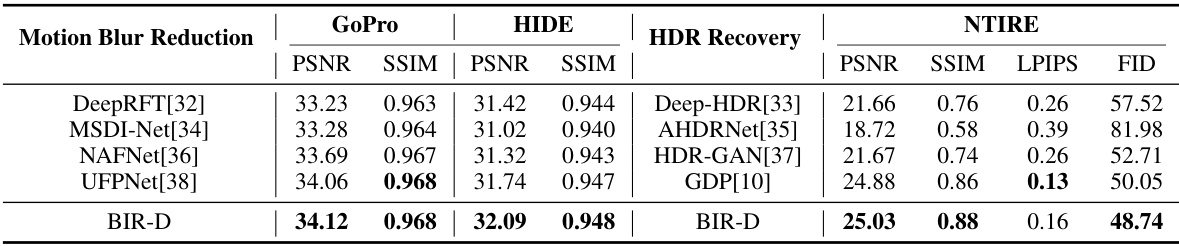

🔼 This table presents a quantitative comparison of the performance of BIR-D and other state-of-the-art methods on motion blur reduction and HDR image recovery tasks. The metrics used for comparison are PSNR, SSIM, LPIPS, and FID for HDR recovery and PSNR and SSIM for motion blur reduction. The table shows that BIR-D achieves superior or comparable performance across all metrics compared to the other methods.

read the caption

Table 4: Quantitative comparison of motion blur reduction and HDR image recovery tasks.

🔼 This table presents the ablation study results on the impact of using an optimizable convolutional kernel and the proposed adaptive guidance scale. It compares the performance of four models: Model A uses a fixed kernel and fixed guidance scale; Model B uses a fixed kernel and an adaptive guidance scale; Model C uses an optimizable kernel and a fixed guidance scale; and BIR-D uses both an optimizable kernel and an adaptive guidance scale. The performance is evaluated using PSNR, SSIM, LOE, FID, and PI metrics on the LOL and LoLi-Phone datasets. The results show that using both an optimizable kernel and an adaptive guidance scale significantly improves the performance of the model.

read the caption

Table 5: The ablation study on the optimizable convolutional kernel and the empirical settings of guidance scale.

🔼 This table presents a quantitative comparison of blind face restoration results using different models on two benchmark datasets: LFW and WIDER. The models compared include PGDiff, DiffBIR, and BIR-D. The metrics used for comparison are the Frechet Inception Distance (FID) and the Natural Image Quality Evaluator (NIQE). Lower FID indicates better performance, and lower NIQE indicates better image quality. The results demonstrate the superior performance of BIR-D in terms of both FID and NIQE scores on both datasets.

read the caption

Table 1: Quantitative comparison of blind face restoration on LFW and WIDER datasets

🔼 This table presents a quantitative comparison of the blind face restoration performance of three different methods: PGDiff, DiffBIR, and BIR-D. The comparison is done using two metrics: Frechet Inception Distance (FID) and Natural Image Quality Evaluator (NIQE) on two datasets: LFW and WIDER. Lower FID scores indicate better image quality, and lower NIQE scores indicate better perceptual quality. The table shows that BIR-D outperforms the other two methods on both datasets according to these metrics.

read the caption

Table 1: Quantitative comparison of blind face restoration on LFW and WIDER datasets

Full paper#