TL;DR#

Existing research on transformers primarily focuses on asymptotic performance, leaving a gap in understanding their non-asymptotic training dynamics, particularly in next-token prediction (NTP). This lack of understanding hinders progress in improving model training and generalization. Furthermore, the theoretical underpinnings of their excellent empirical performance remain unclear, limiting our ability to design better models.

This research addresses these issues by providing a fine-grained non-asymptotic analysis of a one-layer transformer in NTP. The study introduces a novel mathematical framework and two-stage training algorithm, showcasing sub-linear convergence to near-optimal solutions. Importantly, it also demonstrates the non-trivial generalization ability of the transformer under dataset shifts. These findings provide valuable insights into transformer training and generalization, paving the way for improved model optimization and design.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers because it provides a non-asymptotic analysis of transformer training dynamics, an area where understanding is currently limited. The novel mathematical framework and two-stage training algorithm offer new approaches to optimizing training, which can improve model performance and generalization. This research opens new avenues for theoretical investigation, particularly regarding the generalization capabilities of large language models.

Visual Insights#

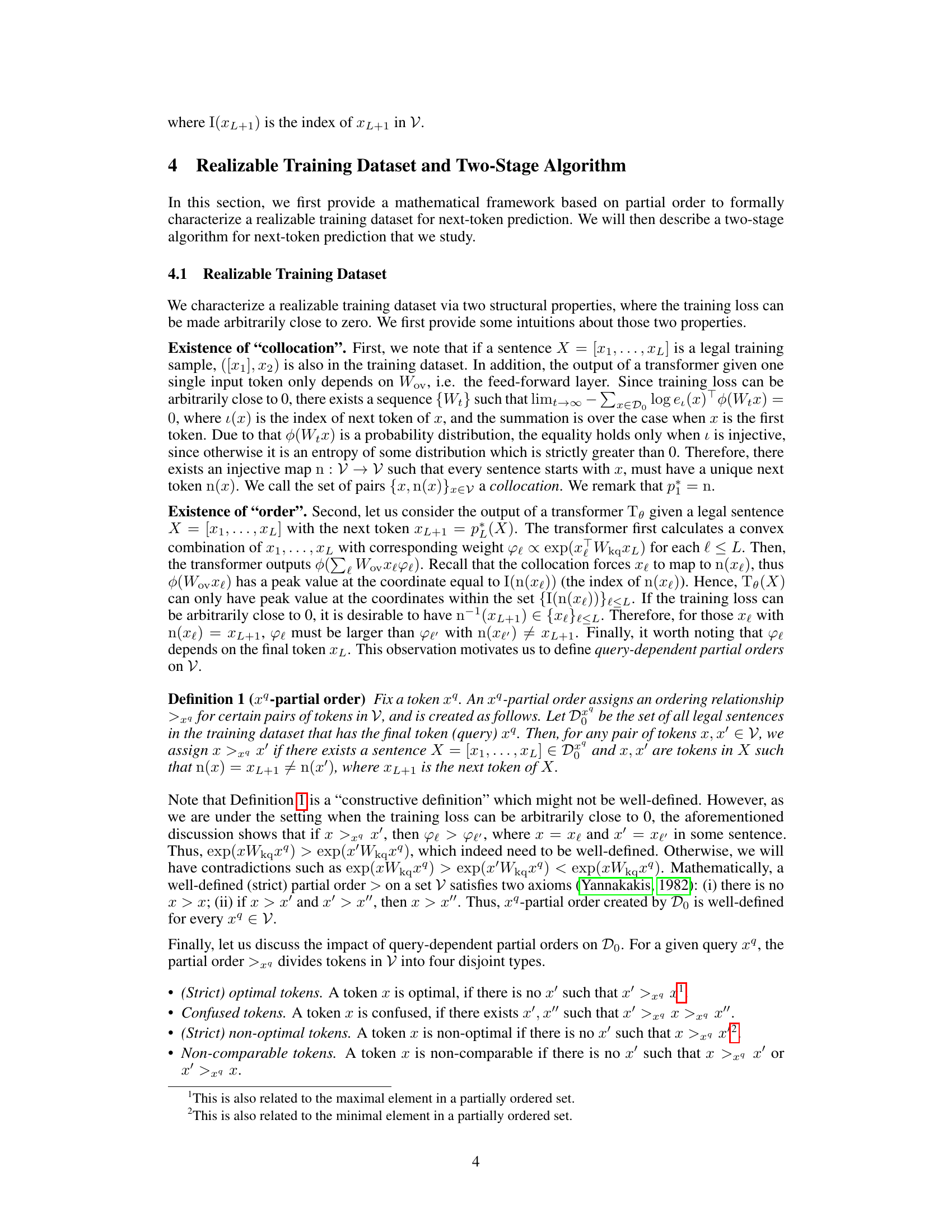

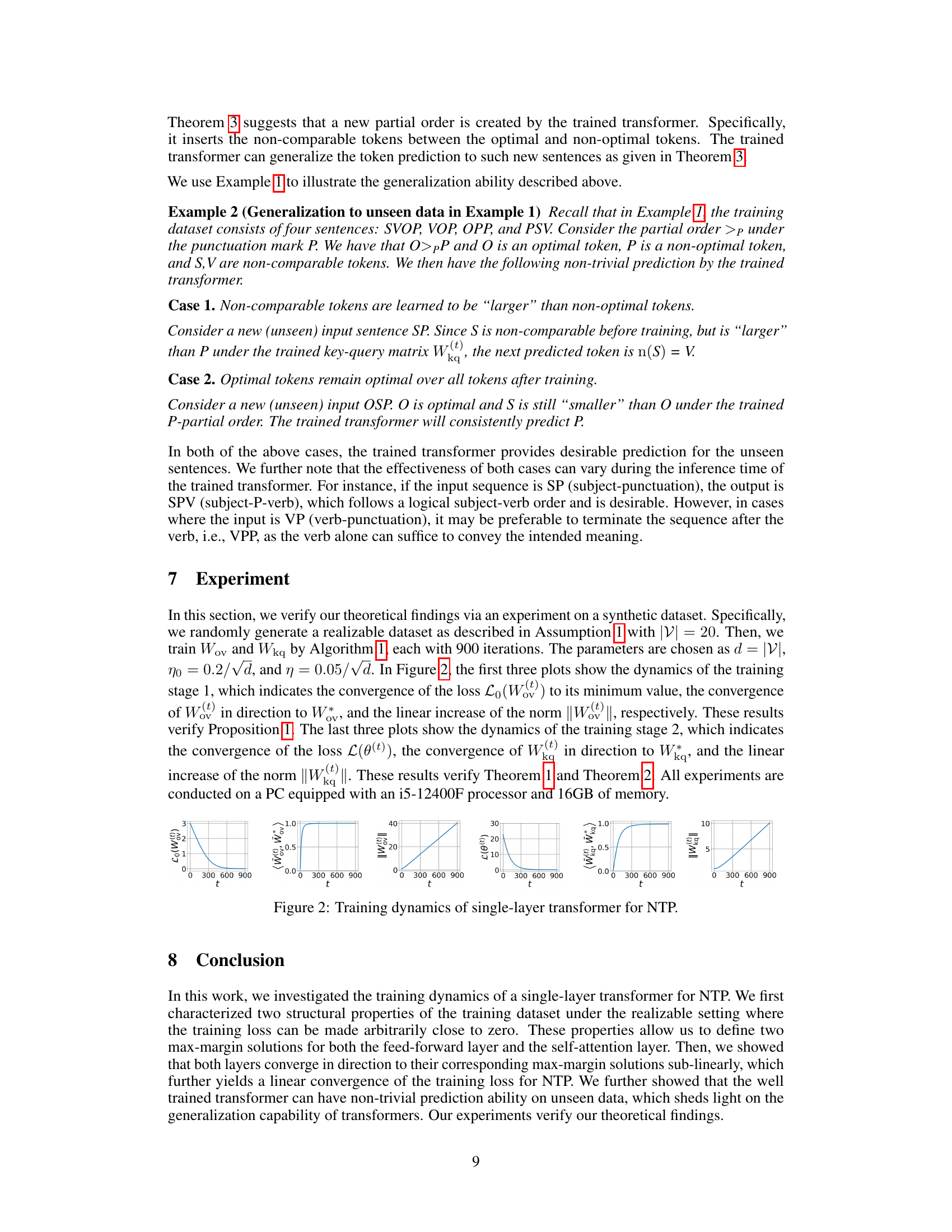

🔼 The figure is composed of two plots. The left plot illustrates the mapping from a sentence to its subsequent token. The optimal token in each sentence is highlighted by a red rectangle. This visualizes the concept of query-dependent partial orders, where the prediction of the next token depends on the tokens already present in the sentence. The right plot displays the concept of collocation which consists of token pairs where each token is directly paired with its subsequent token. It is a crucial component for training the feed-forward layer in the proposed two-stage training algorithm.

read the caption

Figure 1: The left plot shows the mapping from sentence to the next token. The red rectangle indicates the optimal token in the corresponding sentence. The right plot shows the collocation relationship.

Full paper#