↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Traditional shared autonomy systems often hinder human autonomy by constantly involving AI copilots, limiting adaptability. This paper identifies this limitation, emphasizing the need for dynamic and selective intervention based on task dynamics and pilot performance. Prior methods lacked this crucial adaptability and often relied on hyperparameters.

This research proposes Interventional Diffusion Assistance (IDA), a goal-agnostic system. IDA dynamically decides whether to use human or AI input, using an expert Q-function to evaluate actions. Experiments using simulated and human pilots demonstrate IDA’s improved performance and enhanced user experience, particularly in Lunar Lander. Human participants experienced enhanced autonomy and preferred IDA, highlighting its effectiveness in improving both performance and user satisfaction.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working on shared autonomy and human-AI collaboration. It introduces a novel approach that dynamically adjusts AI assistance based on human performance, leading to improved efficiency and user experience. This work directly addresses the limitations of traditional methods and offers new avenues for developing more intuitive and effective human-AI systems. Its findings could be widely applied in various fields such as robotics, autonomous driving, and human-computer interaction.

Visual Insights#

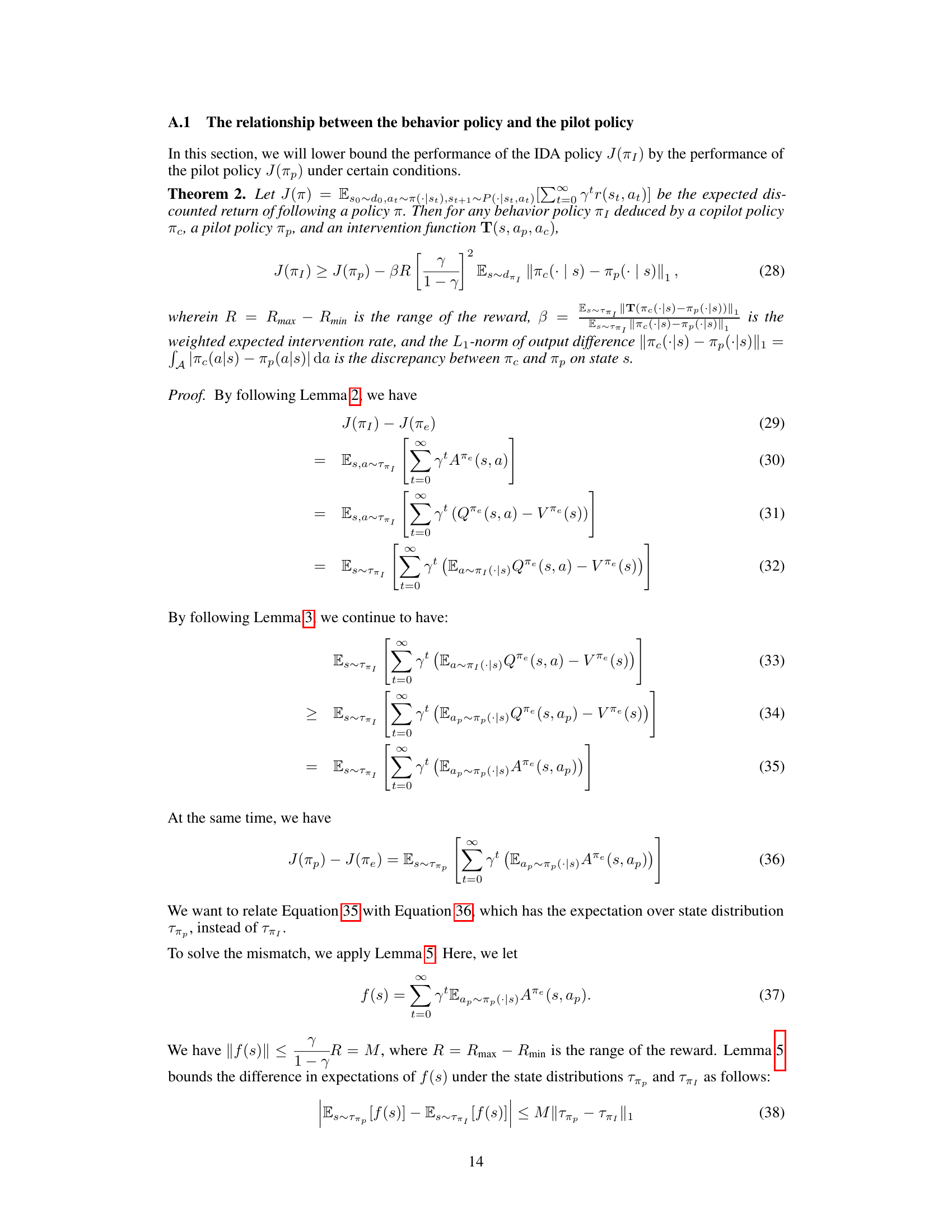

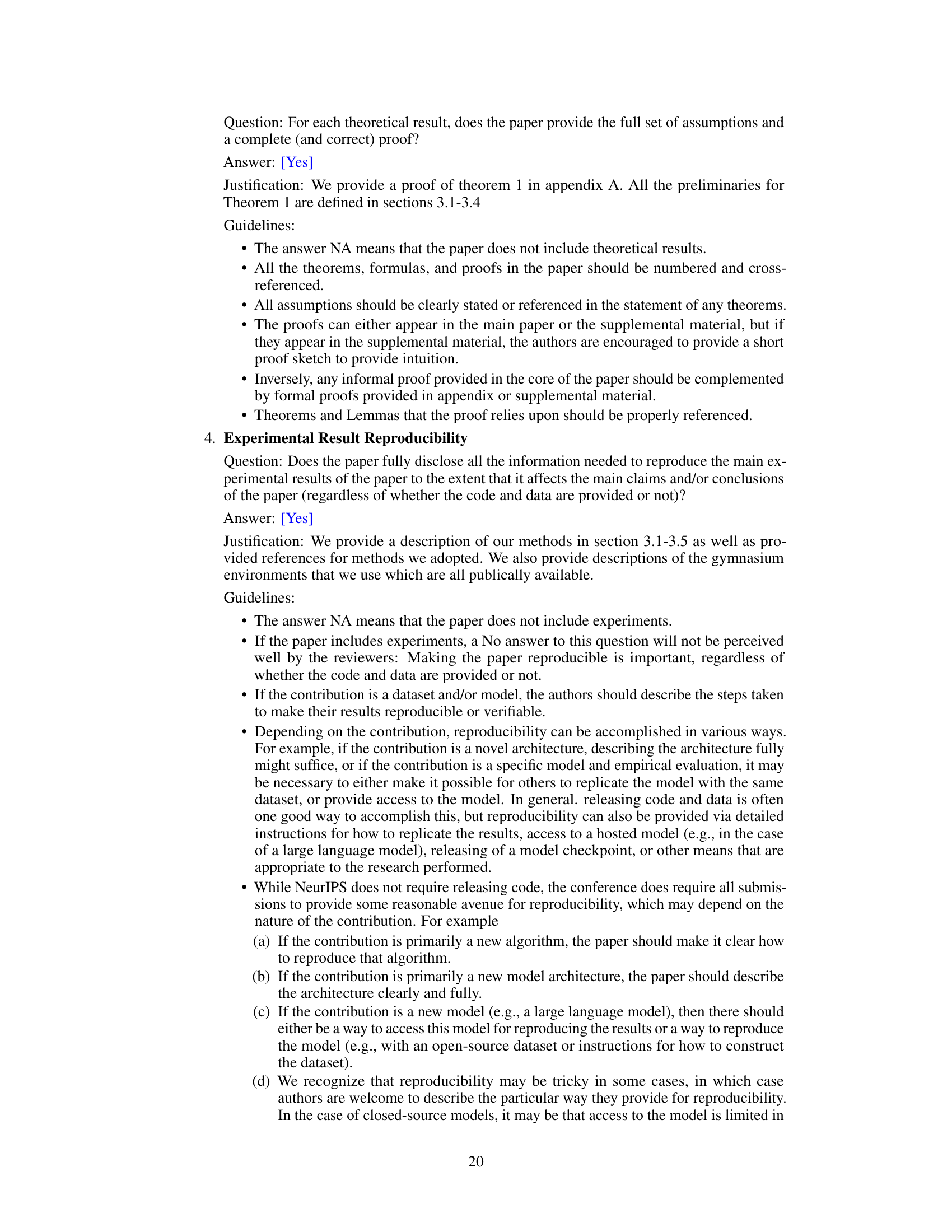

This figure compares the prior work on shared autonomy with the proposed Interventional Assist (IA) framework. (a) shows the traditional approach where the copilot always acts, blending its actions with the human pilot’s. (b) illustrates IA, where the copilot only intervenes when it’s predicted to improve performance based on a goal-agnostic evaluation. This dynamic intervention aims to enhance both performance and human autonomy.

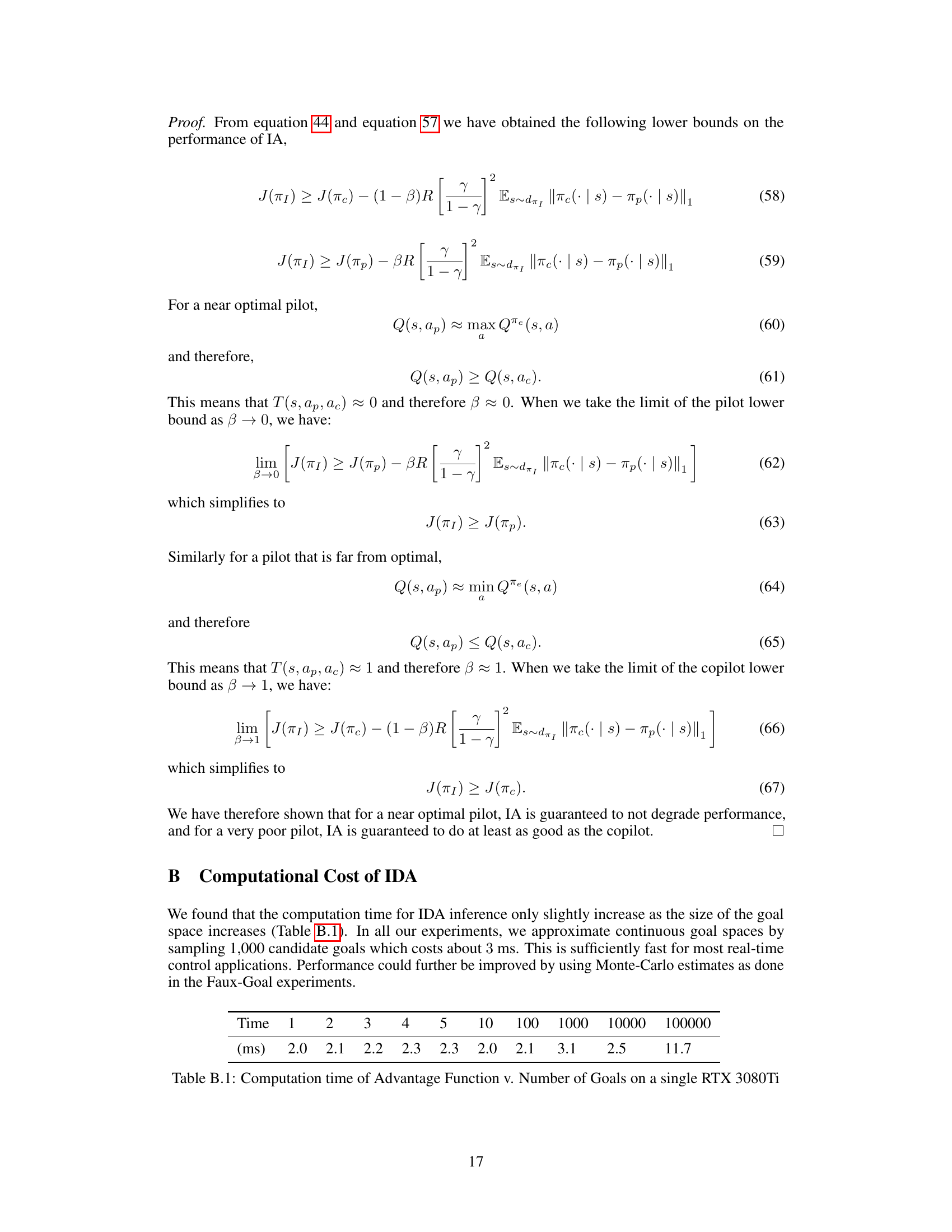

This table presents the results of experiments conducted using three different control methods (Pilot-Only, Copilot, and IDA) in three variations of the Reacher environment with surrogate pilots. The table shows the target hit rate (targets acquired per minute) for each control method in three goal space configurations: Continuous, Linear, and Quadrant. Each goal space has results for expert, noisy, and laggy surrogate pilots. The results demonstrate that the IDA method generally outperforms the other two, especially with noisy or laggy pilots.

In-depth insights#

IDA: IA Framework#

The heading ‘IDA: IA Framework’ suggests a system for Interventional Diffusion Assistance, likely employing a diffusion model. IDA’s core functionality centers on dynamically adjusting the level of AI assistance to a human user, only intervening when the AI’s predicted action demonstrably outperforms the human’s across all possible goals. This goal-agnostic approach is crucial; it avoids imposing a specific AI objective on the human, thus preserving the user’s autonomy and improving their experience. The framework’s strength lies in its adaptability to diverse human skill levels, avoiding the pitfall of excessive AI assistance that hinders learning or diminishes user engagement. By employing a diffusion model, IDA provides a smoother, less disruptive form of intervention, gracefully integrating AI assistance into the human’s control loop. The effectiveness of IDA hinges on its ability to accurately estimate the relative value of human versus AI actions, making precise evaluation critical to its performance and overall success.

Diffusion Copilot#

A diffusion copilot, within the context of shared autonomy, is a particularly interesting approach to AI-assisted control. It leverages the power of diffusion models, known for their ability to generate diverse and high-quality samples, to create an assistive AI that smoothly integrates with human input. The core strength is its ability to offer suggestions rather than outright commands, allowing for a more natural and less intrusive collaboration between human and machine. This is crucial in scenarios demanding human oversight or where full autonomy isn’t feasible or desirable. However, a critical aspect of a successful diffusion copilot design is careful calibration of the diffusion process to appropriately balance human autonomy and AI assistance. Too much diffusion might overwhelm the human, while insufficient diffusion might not offer sufficient assistance. The choice of a diffusion-based approach inherently introduces a probabilistic element; the copilot provides a distribution of possible actions rather than a single, deterministic choice, reflecting uncertainty. This aligns with real-world complexities, where perfect predictions are uncommon. Goal-agnostic training presents both advantages (generalization across diverse tasks) and limitations (potential for suboptimal recommendations if the AI misinterprets the human’s intentions). Therefore, the efficacy of a diffusion copilot hinges on the robustness and adaptability of its underlying diffusion model and the effectiveness of the methods used to integrate its suggestions with human control.

Goal-agnostic IA#

The concept of ‘Goal-agnostic IA’ presents a compelling approach to shared autonomy, suggesting that AI assistance should be provided not based on explicit user goals, but rather on a more fundamental evaluation of action quality. This goal-agnostic nature is crucial because it allows the AI to help even when the human’s goals are poorly defined or change dynamically. The system is designed to only intervene when the AI’s predicted action outcome exceeds the human’s, across all possible goals. This is a significant departure from prior work which usually relies on goal-specific assistance or fixed control-sharing parameters. By evaluating actions based on their intrinsic value (independent of a specific goal), the system increases its robustness and adaptability. A key advantage of this methodology is the preservation of human autonomy, as the system avoids unnecessary intervention, only stepping in when the situation truly demands assistance. The effectiveness of goal-agnostic IA rests heavily on the accuracy of the underlying AI model and its ability to accurately predict the value of different actions across a range of possible contexts. This approach is particularly valuable in complex systems where the human’s ability to explicitly define goals is limited.

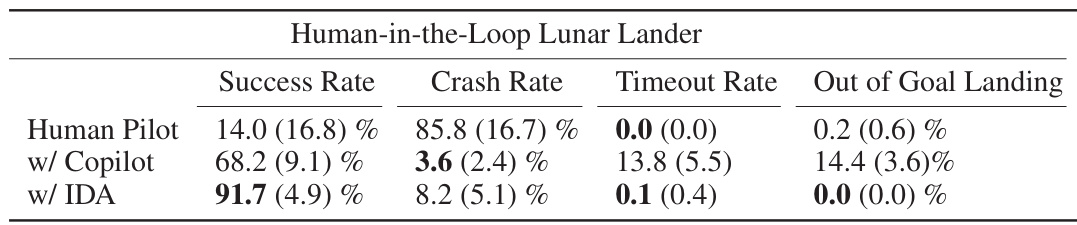

Human-in-the-loop#

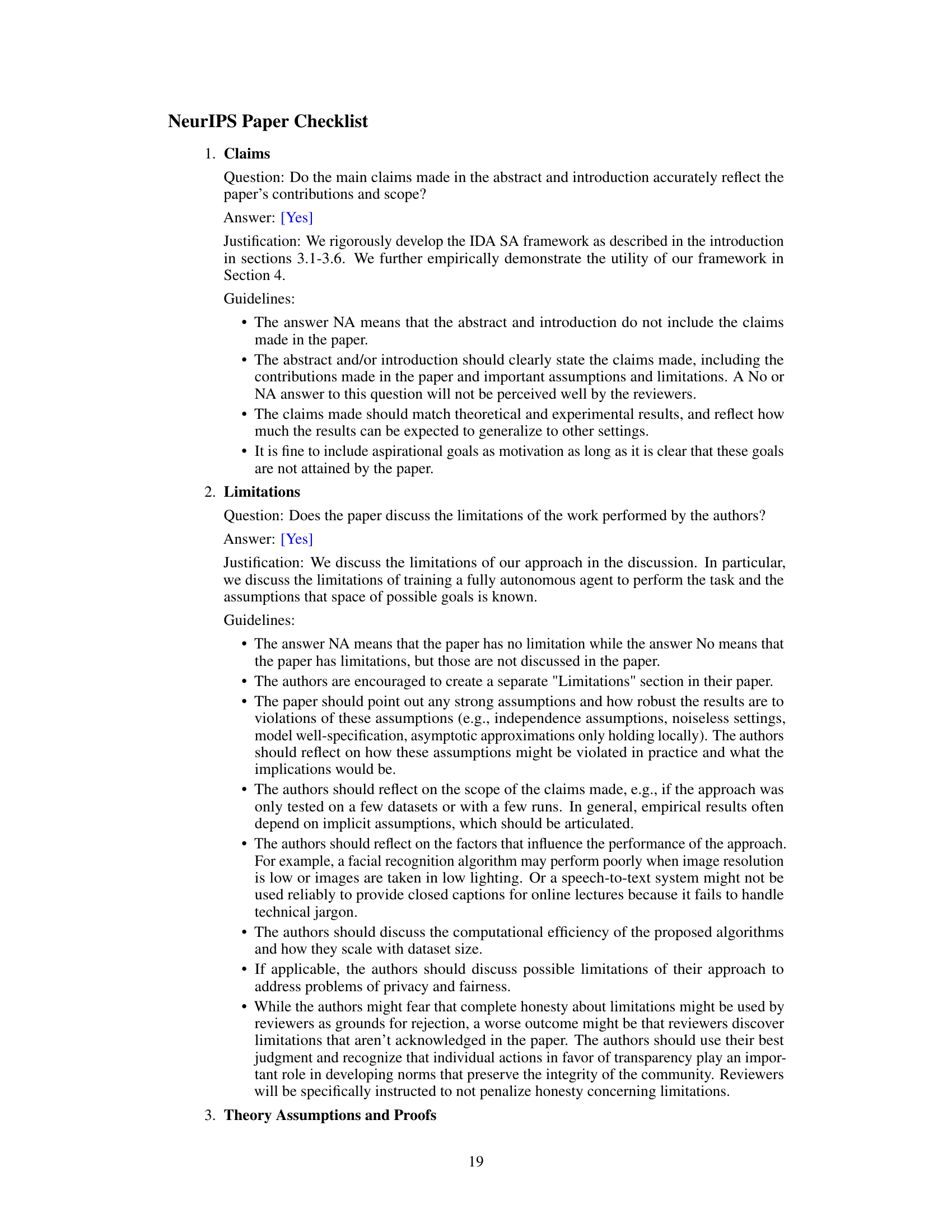

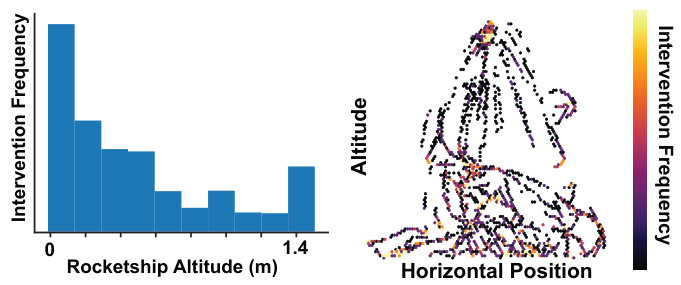

The human-in-the-loop (HITL) experiments provide crucial validation of the Interventional Diffusion Assistance (IDA) system’s effectiveness in real-world scenarios. The results demonstrate IDA’s ability to enhance both performance and user autonomy, contrasting with traditional shared autonomy methods that can overly restrict the user’s control. The participants’ subjective reports of increased ease, control, and autonomy further solidify these findings. The success of HITL testing hinges on the careful design of the experimental setup, including consideration of the task complexity (Lunar Lander), controller types (joystick), and the experimental design to isolate the impact of IDA. While simulated pilots offer a necessary first step, HITL trials are indispensable to capture the nuances of human-AI interaction and to address the practical implications of the proposed methodology. Further research should focus on expanding HITL testing to a more diverse participant pool and broader range of tasks to generalize the observed benefits of IDA.

Future of IDA#

The future of IDA (Interventional Diffusion Assistance) looks promising, building upon its demonstrated success in enhancing human-AI collaboration. Further research should focus on enhancing the goal-agnostic nature of IDA, allowing it to adapt to unforeseen goals more effectively. This could involve exploring more sophisticated methods for goal inference or developing a hybrid approach that combines goal-agnostic and goal-specific strategies. Improving the efficiency and scalability of the intervention function is also crucial for broader real-world applications. Investigating alternative architectures or utilizing more efficient value estimation techniques could significantly improve processing speed. Extending IDA’s application to a wider array of tasks and environments will be critical to establishing its generalizability and robustness. This requires rigorous testing across different domains and careful consideration of how to adapt the intervention strategy based on task dynamics and human expertise. Ensuring human-centered design remains paramount to maximize the benefits and minimize potential risks of increased automation. Future research should place a strong emphasis on user experience and subjective evaluation to assess human autonomy, trust, and overall satisfaction when working with IDA.

More visual insights#

More on figures

This figure presents the results of experiments conducted in the Reacher environment, a 2D simulation of a robotic arm. It shows a comparison of three different control methods: pilot-only, copilot, and IDA (Interventional Diffusion Assistance). Panel (a) illustrates the Reacher environment itself. Panels (b) and (c) display the performance of the three methods with a varying number of possible goal locations for both laggy and noisy pilots. The results show that IDA consistently outperforms the other methods, particularly in scenarios with noisy or laggy pilots, demonstrating its robustness and effectiveness in improving performance.

This figure analyzes the copilot’s interventions in a simulated Lunar Lander environment with noisy and laggy pilots. The left panels (a and b) show histograms comparing copilot advantage scores (the difference between copilot and pilot action values) for pilot actions versus corrupted actions. The right panel (c) illustrates two examples of IDA intervention: preventing a flip and assisting with a smooth landing. The plots demonstrate that IDA preferentially intervenes during corrupted actions, suggesting effective assistance in challenging situations.

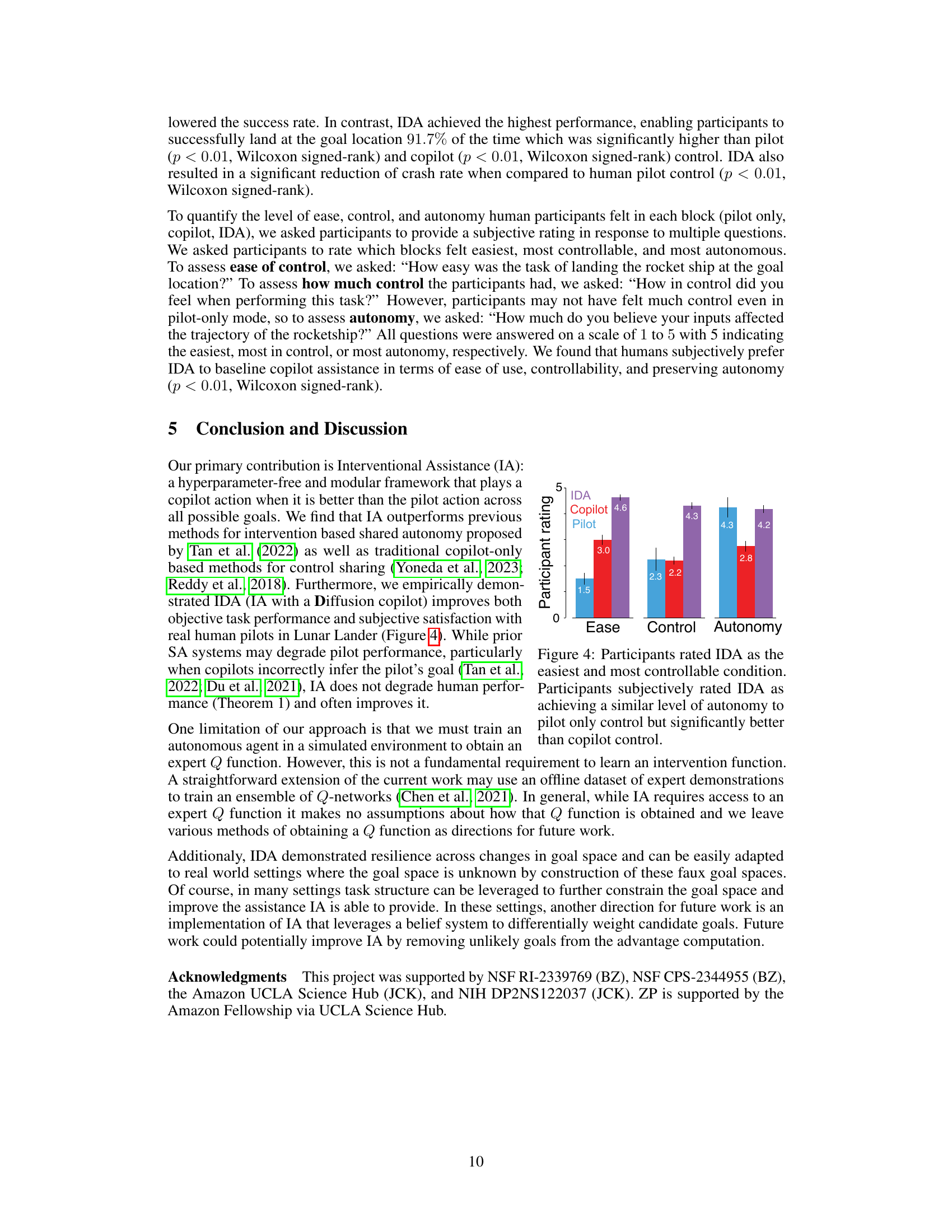

This figure shows the results of a subjective user study comparing user experience with three different control methods: pilot-only, copilot, and IDA. Participants rated IDA as significantly easier and more controllable than the other methods. Importantly, while IDA offered a similar level of autonomy to the pilot-only condition, it was rated as significantly more autonomous than the copilot condition. This suggests IDA successfully balanced performance and user autonomy.

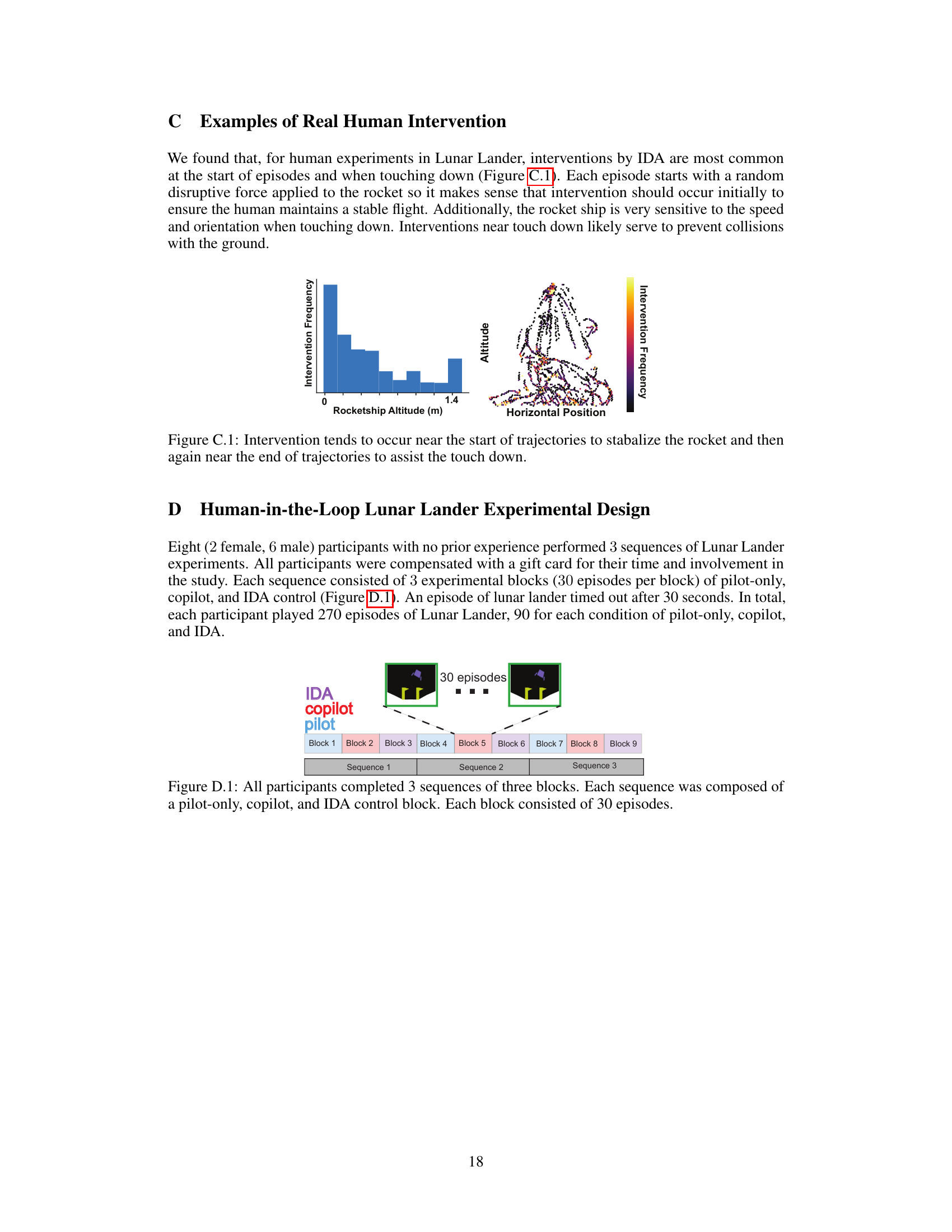

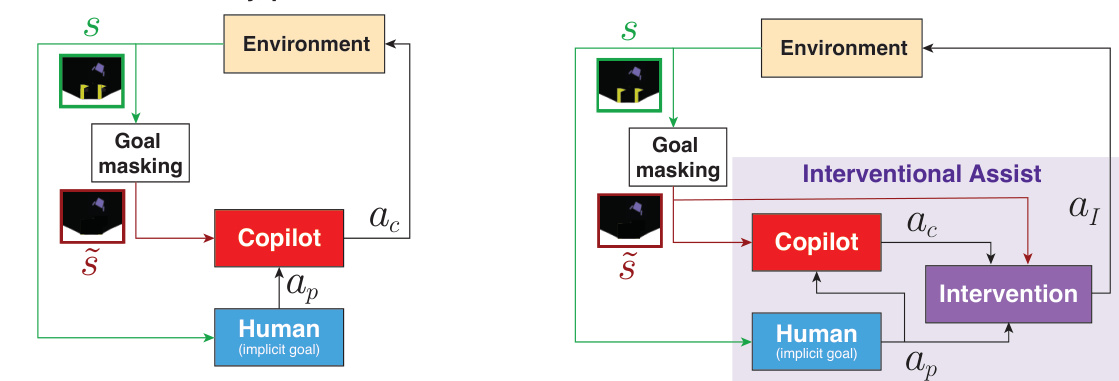

This figure shows the frequency of IDA interventions during human-in-the-loop Lunar Lander experiments plotted against the rocketship’s altitude and horizontal position. The histogram on the left illustrates a higher intervention frequency at lower altitudes (near the ground), suggesting that IDA assists with landings. The scatter plot on the right visually represents the same data, showing intervention frequency (color-coded) across various horizontal positions and altitudes during the experiment. Darker colors indicate more frequent interventions.

This figure compares two approaches to shared autonomy. (a) shows prior work, where the copilot always provides input, potentially limiting human autonomy. (b) shows the proposed Interventional Assist (IA) method, where the copilot only intervenes when it’s predicted to improve performance over the human pilot, preserving human autonomy.

More on tables

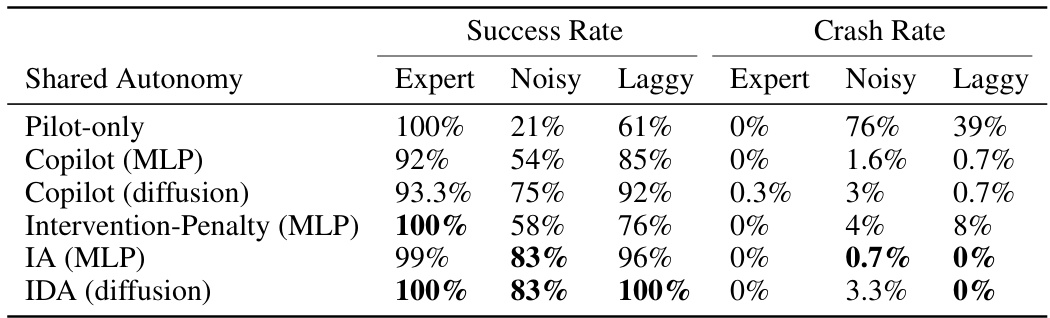

This table presents the success and crash rates for different shared autonomy methods in the Lunar Lander environment using surrogate pilots (expert, noisy, and laggy). The methods compared are: pilot-only, copilot with MLP, copilot with diffusion, intervention-penalty with MLP, IA with MLP, and IDA with diffusion. The results show how each method performs in terms of successful landings and crashes, highlighting IDA’s effectiveness in improving performance while preserving autonomy.

This table compares the success and crash rates of different control methods in the Lunar Lander environment using surrogate pilots (simulated agents mimicking human behavior). The methods compared are: pilot-only, copilot with an MLP, copilot with diffusion, intervention with penalty (MLP), intervention with MLP, and IDA (Interventional Diffusion Assistance) with a diffusion copilot. The results show IDA’s superior performance, especially concerning crash rate reduction while maintaining high success rates, even for noisy and laggy pilots.

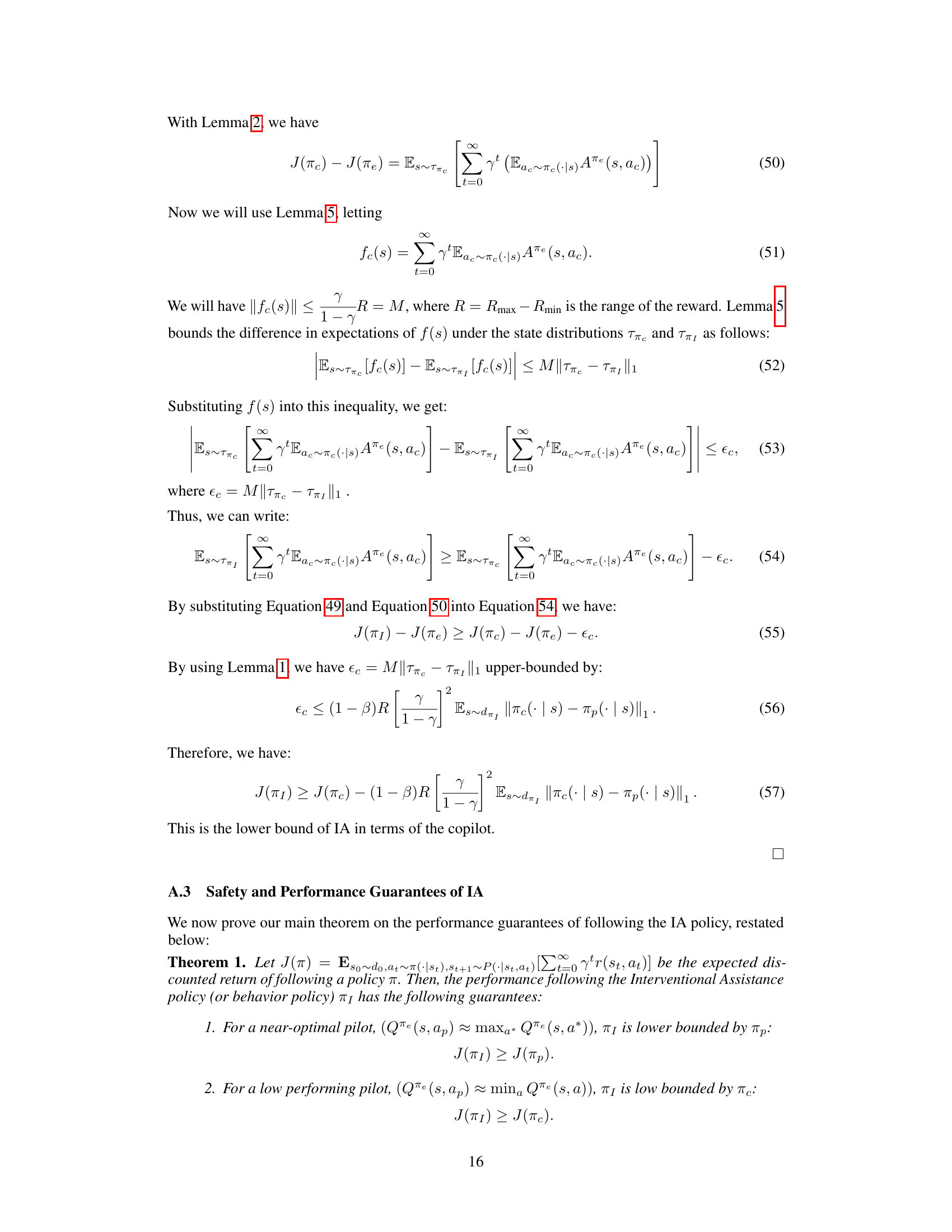

Full paper#