↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Neural circuits self-organize to perform computations efficiently, but biological noise and synaptic turnover threaten circuit stability. Existing models often rely on simplified rules, failing to capture the complexity of real neural systems. This paper aims to find robust, biologically realistic plasticity rules that organize and maintain useful circuit dynamics despite these challenges.

The researchers used meta-learning to discover plasticity rules that generated sequential activity in networks. They introduced biological noise (synaptic turnover) to evaluate the rules’ robustness. The results show that a temporally asymmetric generalization of Oja’s rule effectively organizes sequential activity, while a learned homeostasis mechanism further enhances its robustness to perturbation. Adding inhibitory plasticity to the model further enhanced its performance, demonstrating how plasticity on different synapse types can collectively maintain circuit function.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces a novel meta-learning approach to discover robust plasticity rules for self-organizing neural circuits. It addresses the challenge of maintaining stable neural dynamics despite biological noise and perturbations, offering valuable insights into neural circuit organization and learning. The findings could inspire new biologically plausible learning algorithms and enhance our understanding of brain plasticity.

Visual Insights#

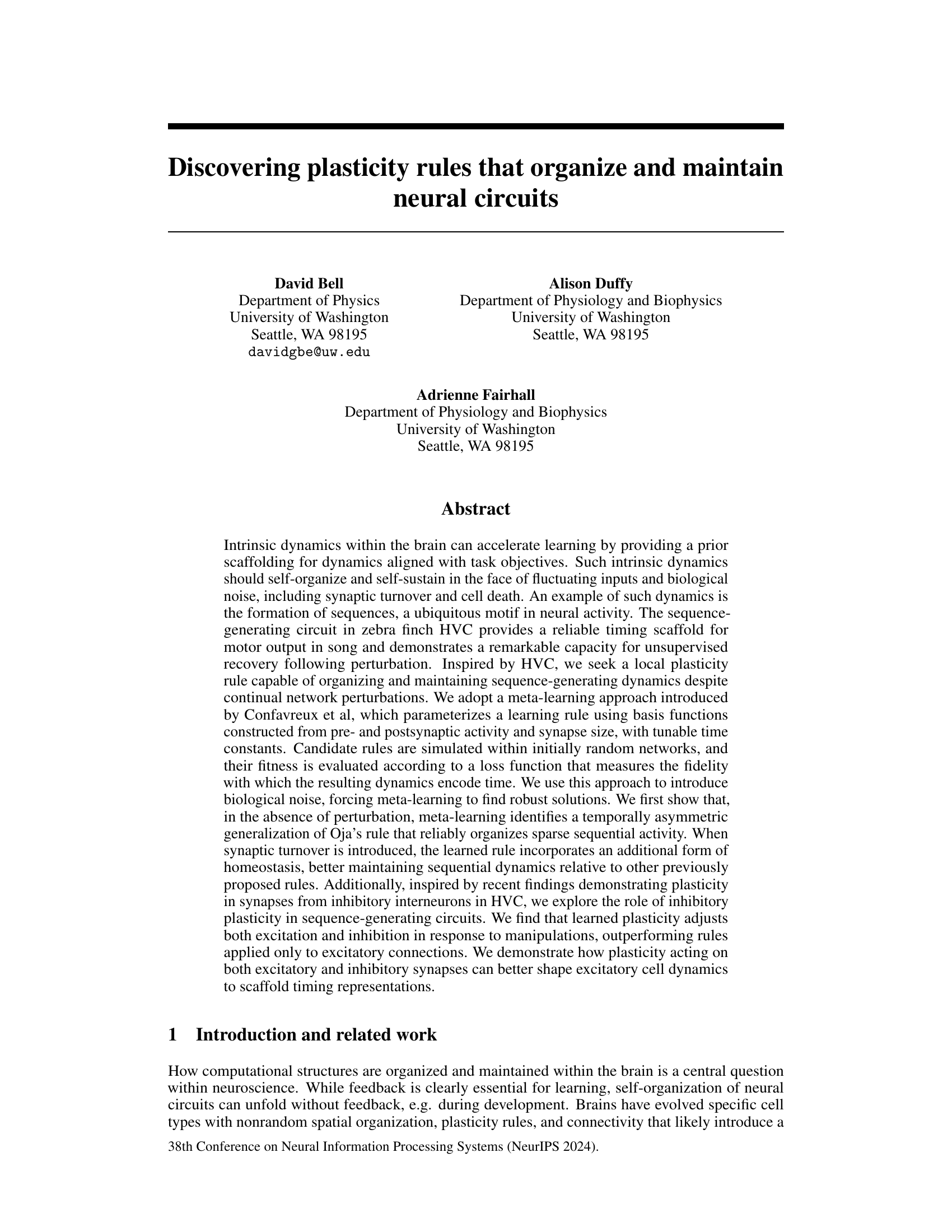

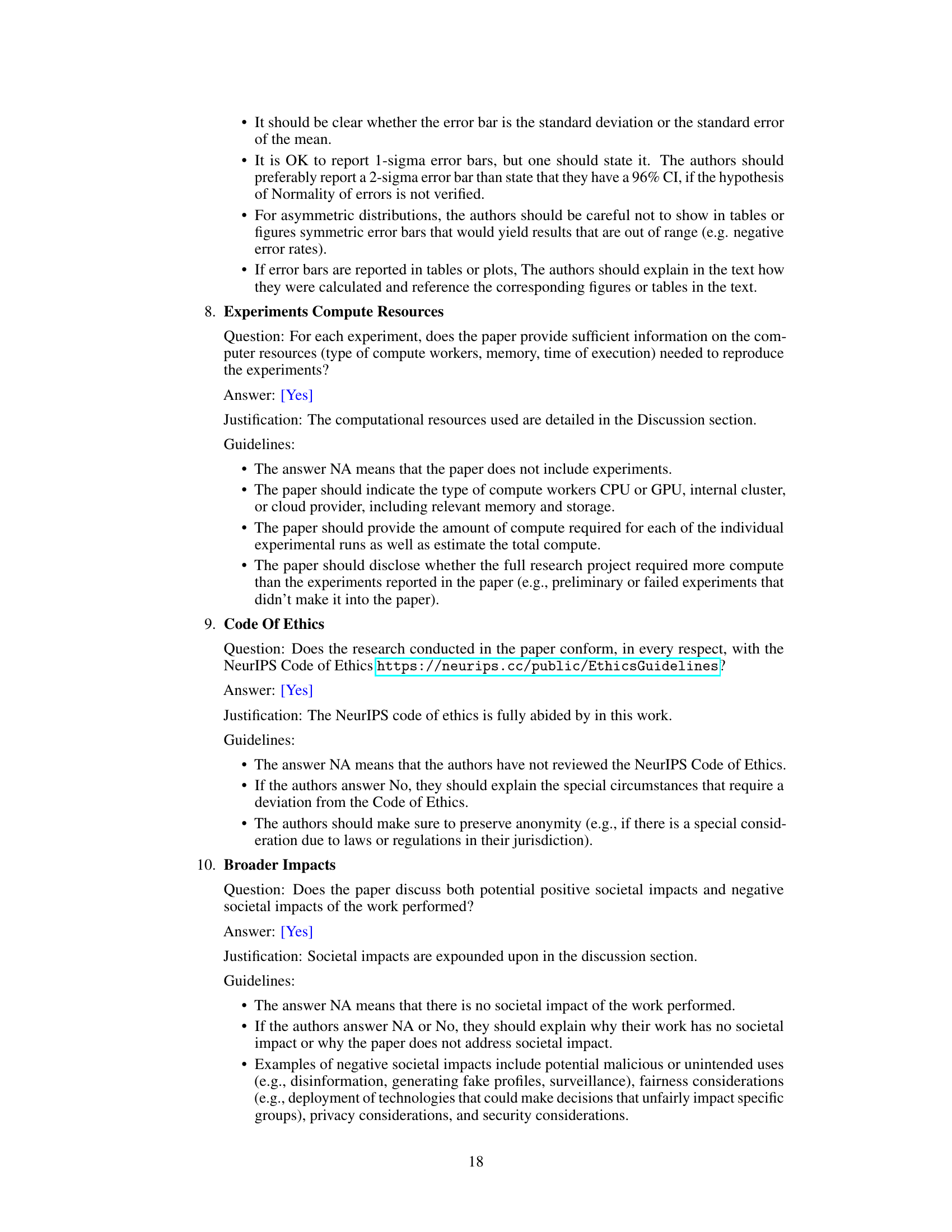

This figure illustrates the meta-learning approach used in the paper to discover plasticity rules that organize and maintain sequential neural activity. Panel (a) shows the context of the zebra finch HVC circuit in song learning. Panel (b) depicts the network structure used in the simulations, including excitatory and inhibitory neurons. Panel (c) outlines the meta-learning process: simulating candidate plasticity rules, evaluating a loss function, and adjusting the rules to minimize the loss. Panel (d) shows how the time representation is tested by training and testing a decoder on neural activity. Panel (e) demonstrates how synaptic turnover (stochastic removal and addition of synapses) is introduced to test the robustness of the learned plasticity rules.

In-depth insights#

Metaplasticity Rules#

The concept of ‘Metaplasticity Rules’ introduces a fascinating layer of complexity to our understanding of neural plasticity. It moves beyond the simple idea of strengthening or weakening individual synapses, suggesting that the rules governing plasticity themselves are subject to change. This meta-level regulation allows for adaptive responses to varying conditions, such as fluctuations in network activity or synaptic turnover. Exploring these rules is crucial, as they likely underlie the brain’s capacity for learning and adaptation throughout life. By studying metaplasticity, we can potentially gain insights into the mechanisms that govern synaptic homeostasis and long-term circuit stability, revealing how the brain dynamically reconfigures its processing power to match ongoing demands. Unraveling the interplay between synaptic and metaplastic changes is key to understanding learning, memory, and potentially even neurological disorders, offering avenues for developing more effective treatments and therapies. Therefore, research into this area promises to reveal fundamental insights into neural computation and dynamics.

Sequential Dynamics#

The concept of sequential dynamics in neural circuits is central to understanding complex brain functions. Self-organization of these dynamics, where temporally ordered patterns emerge spontaneously, is a particularly intriguing aspect. The paper explores this self-organization through the lens of plasticity rules, focusing on how local learning mechanisms can generate and maintain sequences in the face of inherent biological noise and network perturbations. Meta-learning, a technique that learns the learning rules themselves, proves to be a powerful tool for discovering plasticity rules that robustly organize sequences. The study highlights the importance of temporally asymmetric Hebbian learning and homeostatic mechanisms in ensuring the reliability and stability of these sequential patterns. Furthermore, the findings suggest that including inhibitory plasticity, reflecting biological realities, significantly enhances the robustness and flexibility of sequence generation. The interplay of excitatory and inhibitory plasticity appears crucial for shaping the timing and amplitude of neural responses, forming a robust attractor for specific sequential activity. The research emphasizes the biological plausibility of the discovered rules and highlights their potential for providing valuable insights into the fundamental organization and maintenance of neural circuits.

Synaptic Turnover#

Synaptic turnover, the continuous process of synapse formation and elimination, is a crucial aspect of neural plasticity and brain function. The research explores how synaptic turnover impacts the ability of neural circuits to maintain stable, sequential activity patterns critical for tasks such as motor control and timing. Introducing stochastic synaptic turnover into simulations forces the meta-learning algorithm to discover more robust plasticity rules that can compensate for the loss and addition of synapses. The study highlights that the learned plasticity rules not only organize sequential activity but also incorporate homeostasis mechanisms, improving circuit resilience against network perturbations. A particularly interesting finding is the discovery that including inhibitory plasticity significantly enhances the robustness of the system to perturbations. This suggests that the interplay between excitatory and inhibitory synapses is critical for maintaining stable temporal dynamics, despite the continuous structural changes imposed by synaptic turnover. The findings reveal valuable insights into how the brain maintains computational structures in the face of ongoing biological noise and structural change, emphasizing the importance of considering both excitatory and inhibitory plasticity mechanisms for comprehensive understanding of neural circuit organization and function.

Inhibitory Plasticity#

The study investigates the role of inhibitory plasticity in neural circuit organization and maintenance, particularly focusing on how it interacts with excitatory plasticity to shape neural dynamics. Inhibitory plasticity, alongside excitatory plasticity, is crucial for the self-organization and stability of sparse sequential activity in neural circuits. The findings reveal that incorporating inhibitory plasticity into models leads to more robust and stable sequence generation, particularly when the network experiences perturbations such as synaptic turnover. This suggests that inhibitory and excitatory plasticity mechanisms act in concert to maintain network homeostasis and stability. Meta-learning demonstrates that rules governing both excitatory and inhibitory plasticity are essential for adapting to network disruptions and maintaining the fidelity of timing representations. The learned rules reveal a homeostatic mechanism where adjustments in inhibitory synaptic strength compensate for changes in excitatory input, ensuring robust neural activity. This work highlights the importance of considering both excitatory and inhibitory plasticity in models of neural computation, demonstrating its significance for reliable neural circuit functioning and adaptation.

Homeostatic Mechanisms#

Homeostatic mechanisms are crucial for maintaining the stability of neural circuits in the face of perturbations. The research paper likely explores how these mechanisms, such as synaptic scaling and inhibitory plasticity, contribute to the robustness of neural activity patterns. Understanding how these processes operate and interact is key to understanding how neural circuits adapt and learn. The findings probably highlight the importance of both excitatory and inhibitory synaptic plasticity in stabilizing neural activity and maintaining sequence generation. The paper might delve into how these homeostatic mechanisms interact to ensure the fidelity of timing representations in the neural circuit, possibly focusing on how different plasticity rules affect different aspects of the postsynaptic response. The research likely examines the robustness of these mechanisms under biologically realistic levels of noise and perturbation, such as synaptic turnover, and compare them with previously proposed models of sequence generation. The results may reveal that the discovered homeostatic mechanisms are superior at maintaining network stability and sequential activity in the presence of noise. Overall, the paper sheds light on the adaptive capacity of neural circuits to maintain functional dynamics despite constant changes in their structure and activity levels.

More visual insights#

More on figures

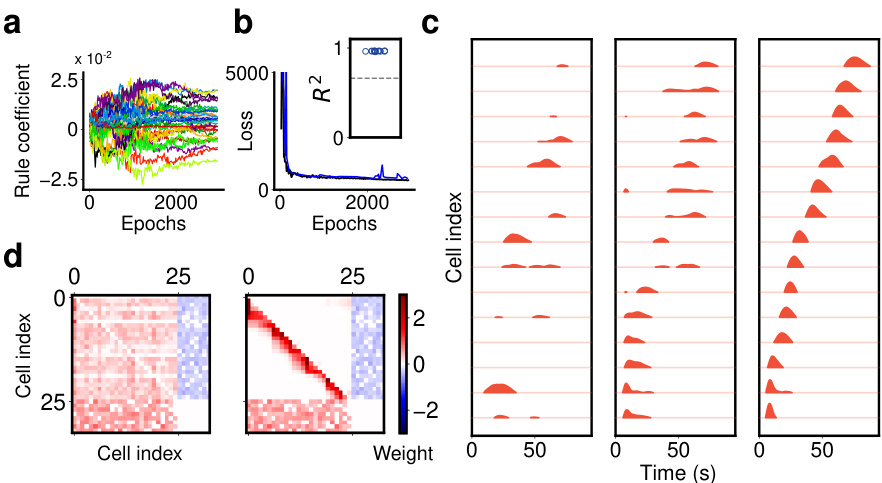

This figure shows the results of meta-learning plasticity rules for organizing sequential activity. Panel (a) displays the evolution of rule coefficients and time constants during the learning process. Panel (b) shows the training and testing loss curves, indicating successful learning. Panel (c) visualizes the network activity at different time points, demonstrating the emergence of sequential dynamics. Finally, panel (d) illustrates the weight matrices, revealing a feedforward structure in the network connectivity.

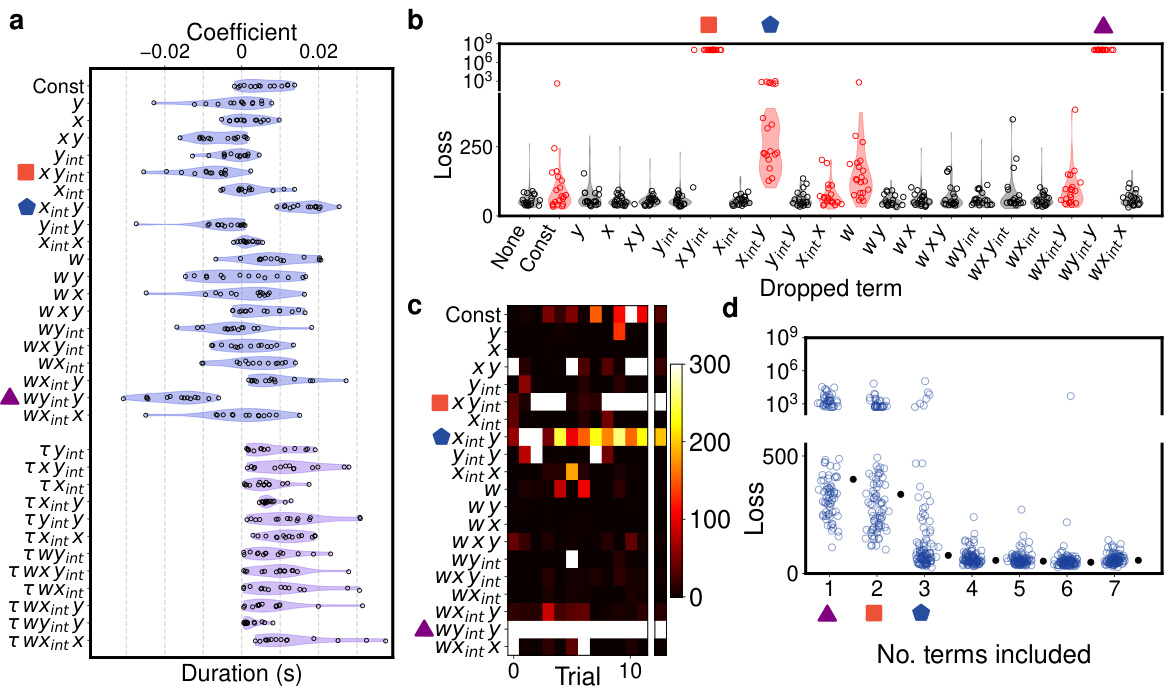

This figure explores the impact of different terms in the learned plasticity rule on the performance of the model. Panel (a) shows the distribution of coefficients and time constants for each term across multiple training instances. Panel (b) shows the loss when individual coefficients are set to zero, indicating the importance of each term. Panel (c) shows the difference in median loss between the full model and models with one term removed, further highlighting the importance of specific terms. Panel (d) shows the progressive refitting of the model, adding terms one by one in order of their impact on the loss, to demonstrate how the key terms contribute to the model’s performance.

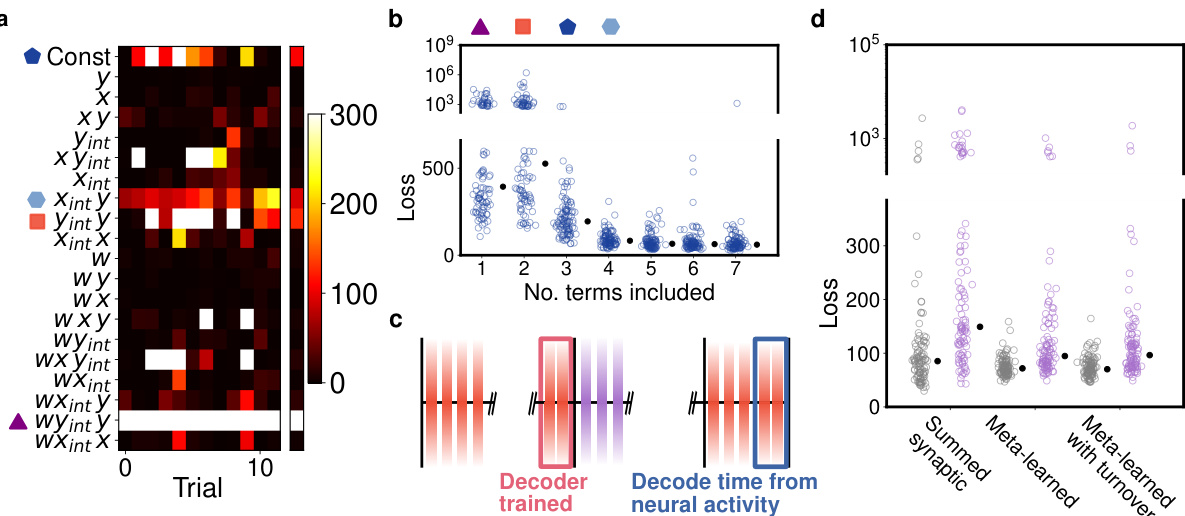

This figure analyzes the learned plasticity rules by systematically perturbing them. Panel (a) shows the distribution of coefficients and time constants across different training instances. Panel (b) assesses the impact of setting individual coefficients to zero on the performance loss. Panel (c) compares the median loss of full models with models where one term is removed. Finally, panel (d) illustrates the iterative process of adding terms back to the model based on their impact on the loss, highlighting the most crucial components of the learned rule.

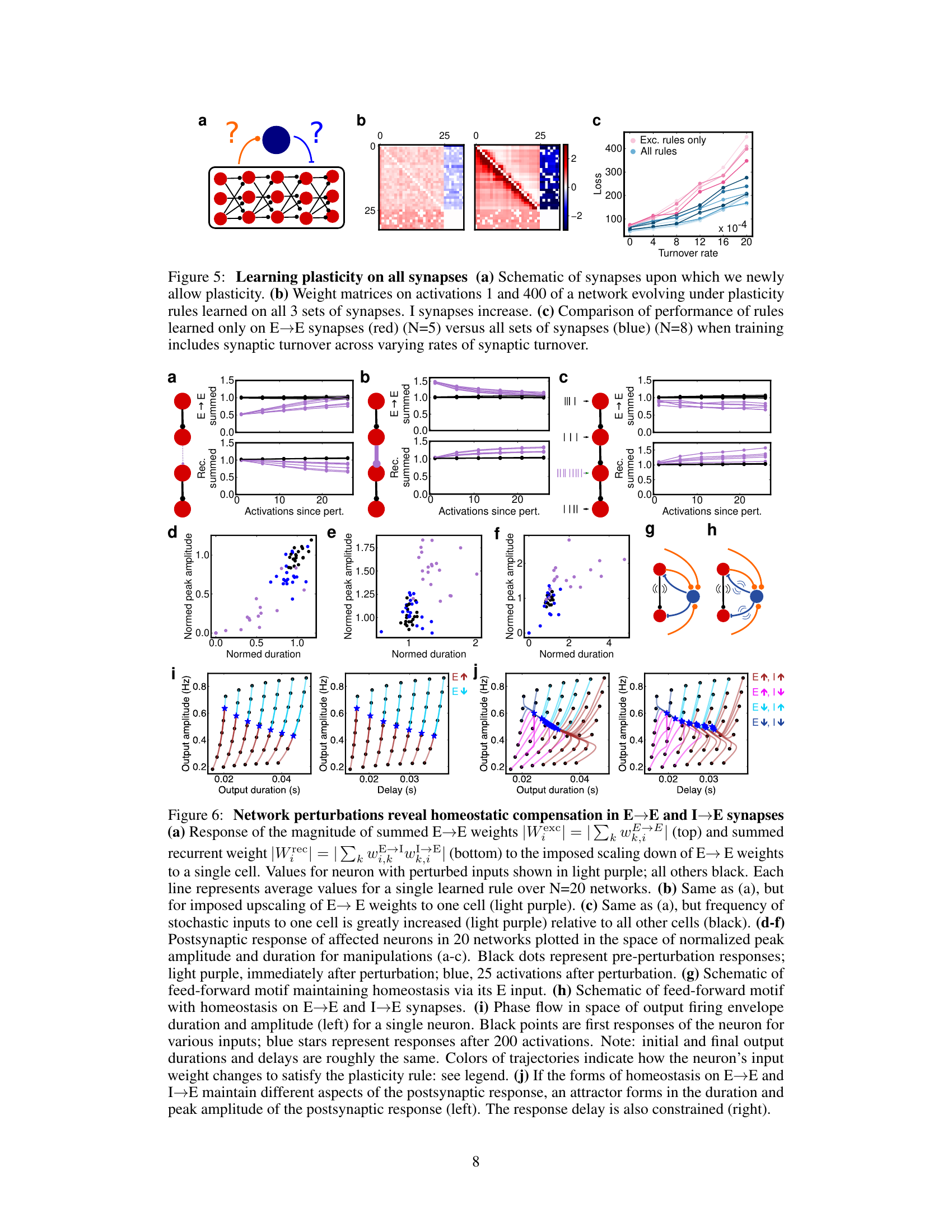

This figure shows the results of learning plasticity rules on all three types of synapses (E→E, E→I, and I→E) in a network. Panel (a) illustrates the network structure with the three synapse types. Panel (b) displays weight matrices at activations 1 and 400, showing that inhibitory synapse weights increase over time. Panel (c) compares the performance of rules learned with plasticity only on excitatory synapses (E→E) versus rules learned with plasticity on all three synapse types. The comparison is made across various rates of synaptic turnover, revealing that the inclusion of inhibitory plasticity enhances network performance.

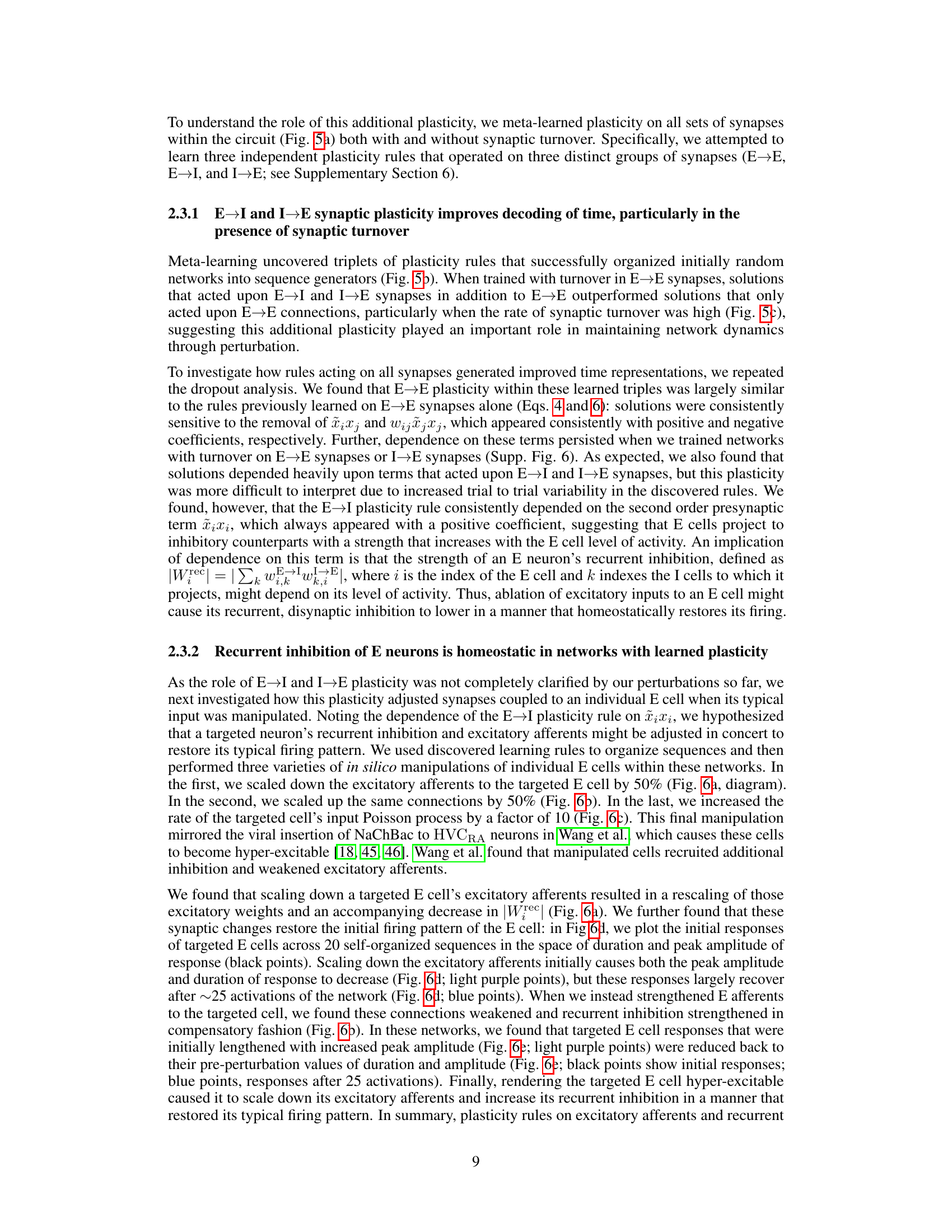

This figure displays the results of network perturbation experiments, showing how homeostatic mechanisms maintain network dynamics under different manipulations. The figure illustrates that plasticity rules on excitatory and inhibitory synapses work together to control different aspects of the postsynaptic responses, ensuring robust performance despite disruption.

Full paper#