↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Cross-spectral re-identification struggles with significant differences between visible and infrared images, hindering accurate matching. Existing methods often fail to model this modality discrepancy effectively, leading to suboptimal performance. Some methods try to bridge the gap by transforming images, but the results are often visually poor. Others attempt to make the network more robust to such a difference using image transformation strategies, but lack a thorough understanding of the real cross-spectral transformation.

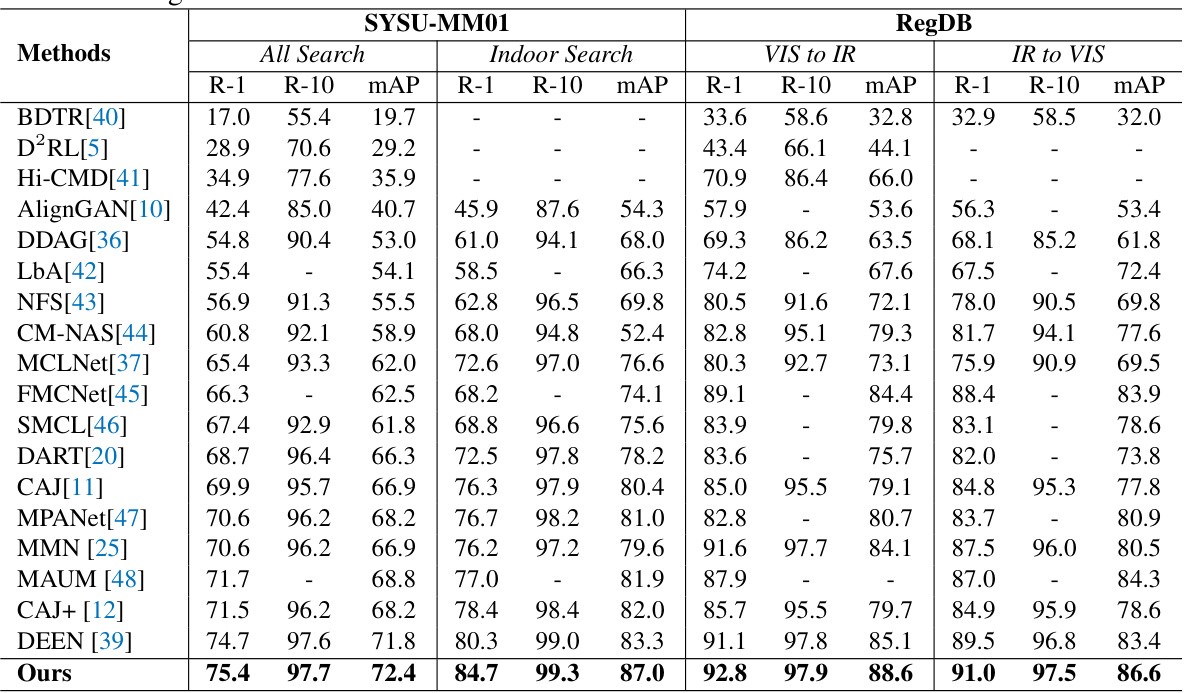

This paper proposes a novel data augmentation strategy called Random Linear Enhancement (RLE) that directly tackles the modality discrepancy. By modeling the transformation based on the Lambertian reflection model, RLE categorizes augmentation strategies into moderate and radical transformations, proposing Moderate Random Linear Enhancement (MRLE) and Radical Random Linear Enhancement (RRLE). RLE achieves state-of-the-art results on benchmark datasets, demonstrating its effectiveness and generality for improving cross-spectral re-identification.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in cross-spectral re-identification because it introduces a novel, unified data augmentation strategy (RLE). RLE significantly improves the performance of existing models and offers a new perspective on addressing modality discrepancies, opening exciting avenues for future research in this active area of computer vision.

Visual Insights#

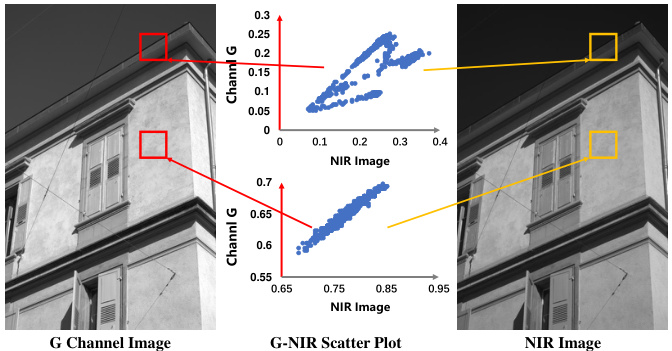

The figure shows an example of cross-spectral transformation between visible and NIR images. It highlights that while a linear transformation exists at the pixel level for materials with similar surfaces, the overall image-level transformation is nonlinear because of the diversity of materials with different reflective properties. This nonlinearity is a key aspect that the paper addresses.

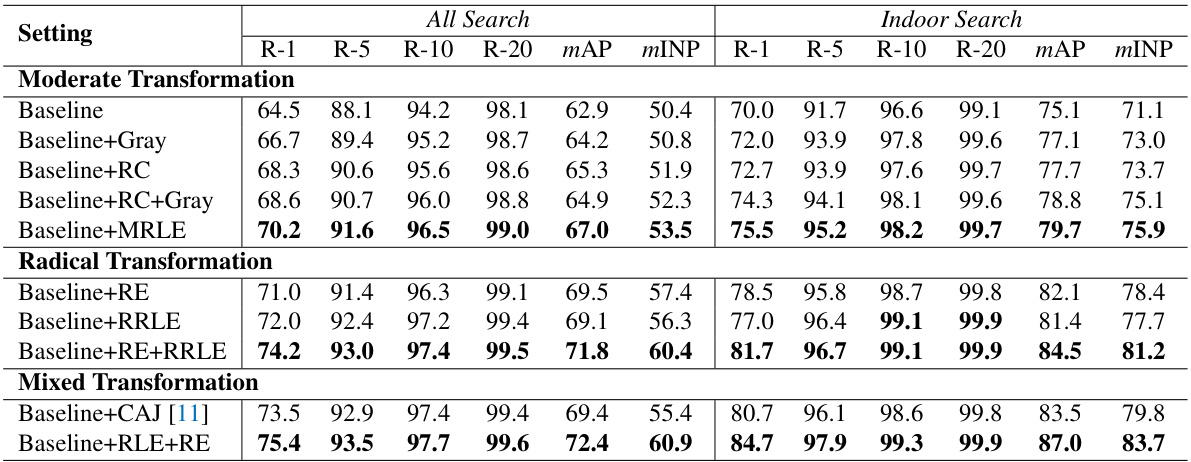

This table presents the ablation study results, comparing different data augmentation methods for cross-spectral re-identification. It shows the impact of using grayscale transformation, random channel selection, moderate random linear enhancement (MRLE), random erasing (RE), and radical random linear enhancement (RRLE), both individually and in combinations, on the performance metrics (rank-1 accuracy, rank-5 accuracy, rank-10 accuracy, rank-20 accuracy, mean average precision (mAP), and mean inverse negative penalty (mINP)) for both ‘All Search’ and ‘Indoor Search’ scenarios of the cross-spectral re-identification task. The baseline results without data augmentation are also included for comparison.

In-depth insights#

Cross-Spectral Re-ID#

Cross-spectral Re-identification (Re-ID) presents a unique challenge in visual recognition, focusing on matching individuals across different spectral images, such as visible and near-infrared (NIR). The core difficulty lies in bridging the modality gap between these images, which arises from variations in spectral reflectance properties of objects and materials. This paper approaches this problem by proposing a novel data augmentation strategy, Random Linear Enhancement (RLE), inspired by the Lambertian reflection model. RLE unifies previous augmentation methods by mimicking local linear transformations inherent in cross-spectral image formation, offering a more interpretable and robust approach. It is divided into moderate and radical transformations, which address different aspects of the spectral discrepancy. This framework is shown to improve performance significantly and provides a more generalized strategy for future research in cross-spectral Re-ID.

RLE Augmentation#

The Random Linear Enhancement (RLE) augmentation strategy, proposed within the context of cross-spectral re-identification, presents a unified perspective on data augmentation techniques. RLE categorizes existing methods into moderate and radical transformations, based on their impact on original image linear correlations. Moderate RLE (MRLE) maintains these correlations, employing a controlled linear mixing of visible image channels, extending beyond simpler techniques like grayscale conversion or random channel selection. In contrast, Radical RLE (RRLE) directly applies random linear transformations to local image regions, thereby introducing more diverse, non-linear changes and proving particularly useful for single-channel infrared images. The effectiveness of RLE stems from its ability to mimic the inherent modality discrepancies observed in cross-spectral data, arising from varying linear transformations across different material surfaces. Experiments highlight RLE’s superiority, demonstrating improved performance across various cross-spectral re-identification datasets and suggesting its potential as a generalizable data augmentation strategy beyond the specific task considered.

Modality Discrepancy#

The concept of “Modality Discrepancy” in cross-spectral re-identification centers on the inherent differences between images captured using different spectral bands, such as visible (VIS) and near-infrared (NIR). These discrepancies stem from how materials reflect light at various wavelengths, creating non-linear transformations between spectral domains. The paper highlights that these discrepancies aren’t simply global differences, but rather, local variations arising from the diverse surface materials and their unique spectral signatures. This localized nature complicates direct translation approaches, making data augmentation strategies crucial. The proposed RLE method addresses this challenge by modeling the discrepancy as diverse linear transformations, unifying various augmentation strategies into “moderate” and “radical” categories to tackle both types of transformations effectively. The key insight is that understanding and mimicking these local, material-specific linear transformations is paramount to bridging the modality gap and improving cross-spectral re-identification accuracy.

Linear Transformation#

The concept of linear transformation is central to the paper’s approach to cross-spectral re-identification. The authors propose that modality discrepancies between visible and infrared images stem primarily from diverse linear transformations acting on the surfaces of different materials. This observation allows them to unify various data augmentation strategies under a common framework. They categorize these strategies into moderate transformations, which preserve original linear correlations, and radical transformations, which generate local linear transformations without relying on external information. This framework forms the basis for the novel Random Linear Enhancement (RLE) method. The effectiveness of RLE is demonstrated experimentally, highlighting the importance of directly modeling the linear transformations underlying cross-spectral differences. RLE’s unified perspective is a key contribution, providing a theoretically grounded and generalizable approach to augmenting data for this challenging task.

Future of RLE#

The future of Random Linear Enhancement (RLE) in cross-spectral re-identification hinges on extending its applicability beyond the current limitations. While RLE shows promise in unifying data augmentation strategies by mimicking local linear transformations, future work should focus on improving robustness to diverse illumination conditions and material variations. Addressing the reliance on Lambertian reflection models and exploring alternative models for handling complex surface interactions is crucial. Further research should investigate the integration of RLE with other advanced techniques such as Generative Adversarial Networks (GANs) and exploring its effectiveness in various visual tasks beyond cross-spectral re-identification. Developing more sophisticated methods for adaptive linear transformation selection could significantly boost performance. Finally, comprehensive evaluation across larger and more diverse datasets with varying weather conditions would solidify RLE’s position as a robust and versatile data augmentation technique.

More visual insights#

More on figures

This figure shows example images from the VIS-NIR scene dataset. The images are divided into visible (VIS) and near-infrared (NIR) channels, and further processed to compute chromaticity band ratios (R-NIR, G-NIR, B-NIR). The ratios are shown as color-coded images. The key observation is that pixels from surfaces with similar material properties exhibit nearly constant ratios across the different spectral bands, suggesting a linear relationship between the visible and NIR spectral responses for homogenous surfaces.

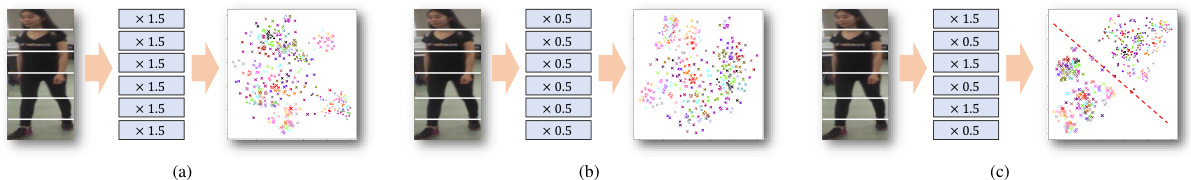

This figure shows how modality discrepancy happens in cross-spectral re-identification. It uses feature space visualization of 100 randomly selected images to illustrate three scenarios: (a) and (b) show that applying the same linear factor across the whole image results in limited modality discrepancy. (c) shows that applying variable linear factors to different parts of the image leads to significant modality discrepancy. This highlights the importance of considering local linear transformations in cross-spectral re-identification.

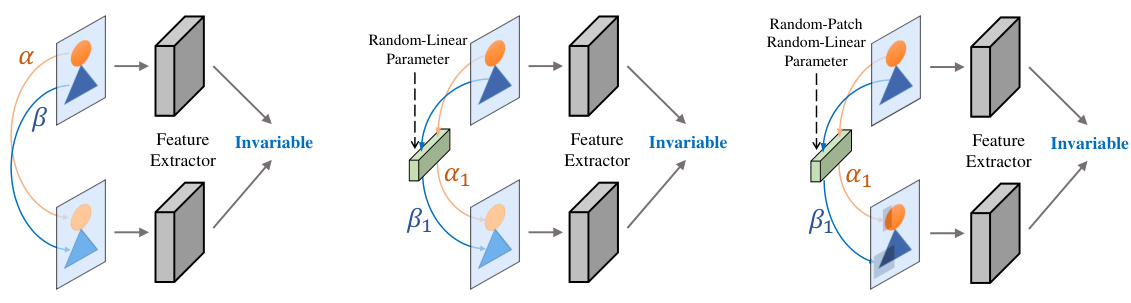

This figure illustrates the motivation behind the Random Linear Enhancement (RLE) method proposed in the paper for cross-spectral re-identification. It shows three scenarios: (a) a definite linear transformation on a definite image patch, (b) a random linear transformation on a definite image patch, and (c) a random linear transformation on a random image patch. The figure highlights how RLE aims to make the network robust to linear transformations by applying random linear transformations on random image patches, mimicking the modality discrepancy in cross-spectral images.

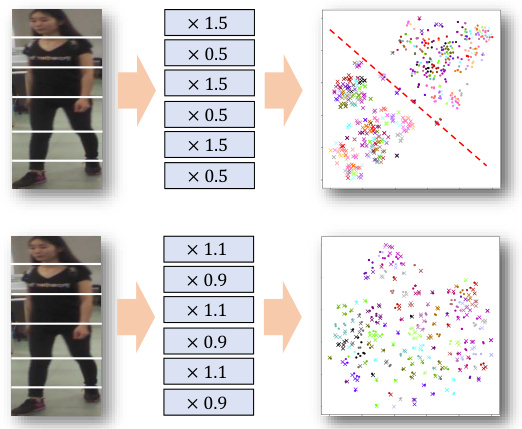

This figure visualizes the results of the Random Linear Enhancement (RLE) data augmentation method on both visible and infrared images. The top row shows an example where MRLE (Moderate Random Linear Enhancement) and RRLE (Radical Random Linear Enhancement) are applied to visible images, producing transformations that maintain original linear correlations (MRLE) and transformations that don’t rely on linear correlations (RRLE). The bottom row shows another example with different regions highlighted. The infrared images show the effects of MRLE and RRLE, while ‘~’ indicates that MRLE can’t directly affect infrared images. The figure demonstrates the diversity achievable through the RLE technique.

This figure illustrates the motivation behind the Random Linear Enhancement (RLE) data augmentation strategy. It shows three scenarios: (a) a definite linear transformation on a definite image patch, (b) a random linear transformation on a definite image patch, and (c) a random linear transformation on a random image patch. Scenario (c) represents the RRLE approach, which encourages network robustness to linear transformations by applying them randomly to image regions. This contrasts with (a) and (b), where transformations are either consistent or only applied to specific locations.

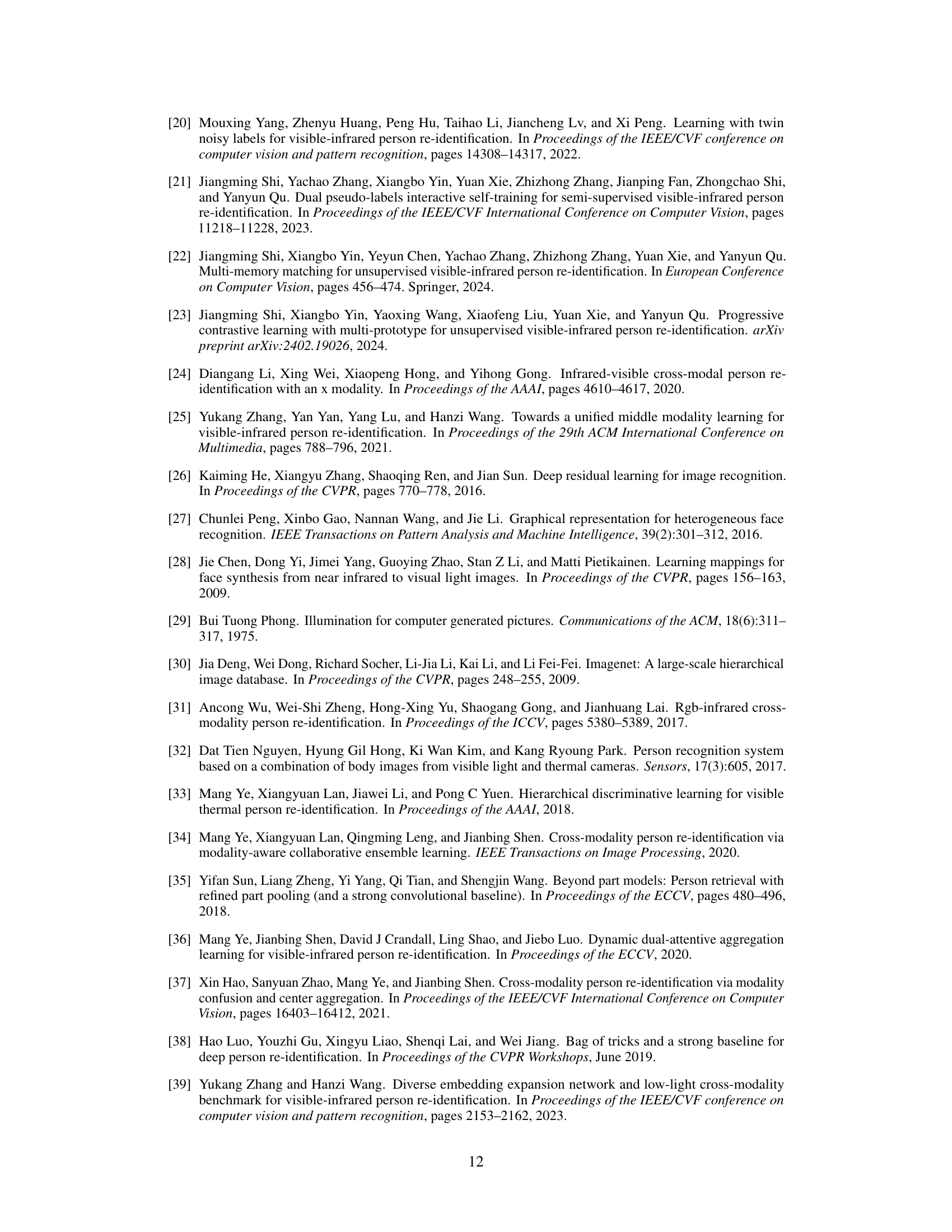

This figure shows an example of how modality discrepancy occurs when applying linear transformations with small linear factors. The top row demonstrates an image that has been divided into sections and multiplied by varying factors (1.5, 0.5, etc.). The bottom row shows a similar process but with smaller factors (1.1, 0.9, etc.). The resulting feature space visualizations (right side) reveal that the smaller factors result in less significant separation, highlighting the inadequacy of small linear factors in creating a notable modality gap during training.

More on tables

This table presents the ablation study results, comparing different data augmentation methods for cross-spectral re-identification. It shows the performance of various methods, including baseline, grayscale transformation, random channel selection, moderate random linear enhancement, random erasing, radical random linear enhancement, and combinations thereof. The results are broken down by different evaluation metrics (R-1, R-5, R-10, R-20, mAP, mINP) and search modes (All Search, Indoor Search).

This table presents the results of an ablation study comparing different data augmentation methods for cross-spectral person re-identification. It shows the impact of using grayscale transformation, random channel selection, moderate random linear enhancement (MRLE), random erasing (RE), and radical random linear enhancement (RRLE), both individually and in combination, on the performance of the re-identification task. The performance is measured using rank-1 accuracy (R-1), rank-5 accuracy (R-5), rank-10 accuracy (R-10), rank-20 accuracy (R-20), mean average precision (mAP), and mean inverse negative penalty (mINP), for both all-search and indoor-search scenarios on SYSU-MM01 dataset.

This table presents the ablation study results of different data augmentation strategies used in cross-spectral re-identification. It compares the performance of several methods, including the proposed Moderate Random Linear Enhancement (MRLE) and Radical Random Linear Enhancement (RRLE), against baselines and other common techniques like grayscale transformation and random channel selection. The results are evaluated using standard metrics for person re-identification.

Full paper#