TL;DR#

Traditional 3D occupancy prediction methods rely on dense, regular grids, leading to high computational costs and loss of detail for small objects. This is a significant hurdle for real-time applications like autonomous driving that need precise and efficient 3D scene understanding. The limitations of dense grid approaches, particularly concerning computational cost and lack of detail for smaller objects, are well-known problems.

OctreeOcc proposes a novel solution using octree data structures to address these issues. By adaptively adjusting the granularity based on object shapes and sizes, it achieves significantly improved prediction accuracy compared to state-of-the-art methods. Furthermore, the use of image semantic information for initialization and an iterative rectification mechanism further enhances accuracy and robustness, resulting in a 15-24% reduction in computational costs compared to dense-grid approaches.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces OctreeOcc, a novel approach to 3D occupancy prediction that significantly improves efficiency and accuracy. OctreeOcc addresses limitations of traditional dense-grid methods by using octree data structures. This has implications for various applications relying on 3D scene understanding, opening avenues for research in efficient and accurate 3D perception systems.

Visual Insights#

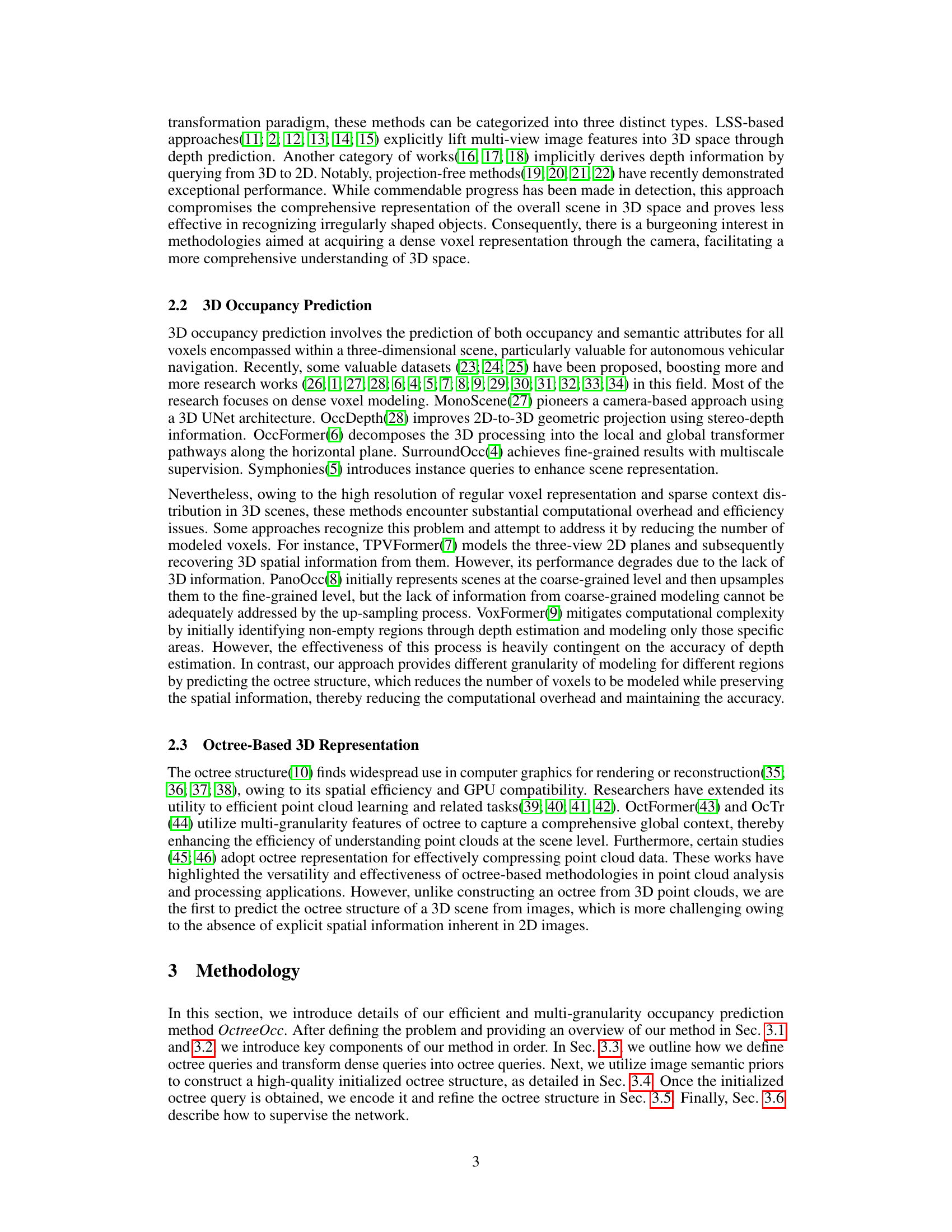

🔼 This figure illustrates the motivation behind using octrees for 3D occupancy prediction. Subfigure (a) shows the varying sizes of objects in a typical scene, highlighting the inefficiency of using a uniform grid representation. Subfigure (b) demonstrates how an octree adapts its resolution to different object scales, thus capturing details effectively with less computation.

read the caption

Figure 1: Scale difference of various categories and octree representation. (a) compares the average space occupied by different object types, indicating varying granularities needed for different semantic regions. (b) demonstrates the advantage of octree representations, enabling specific granularities for different objects and even parts of objects, reducing computational overhead while retaining spatial information.

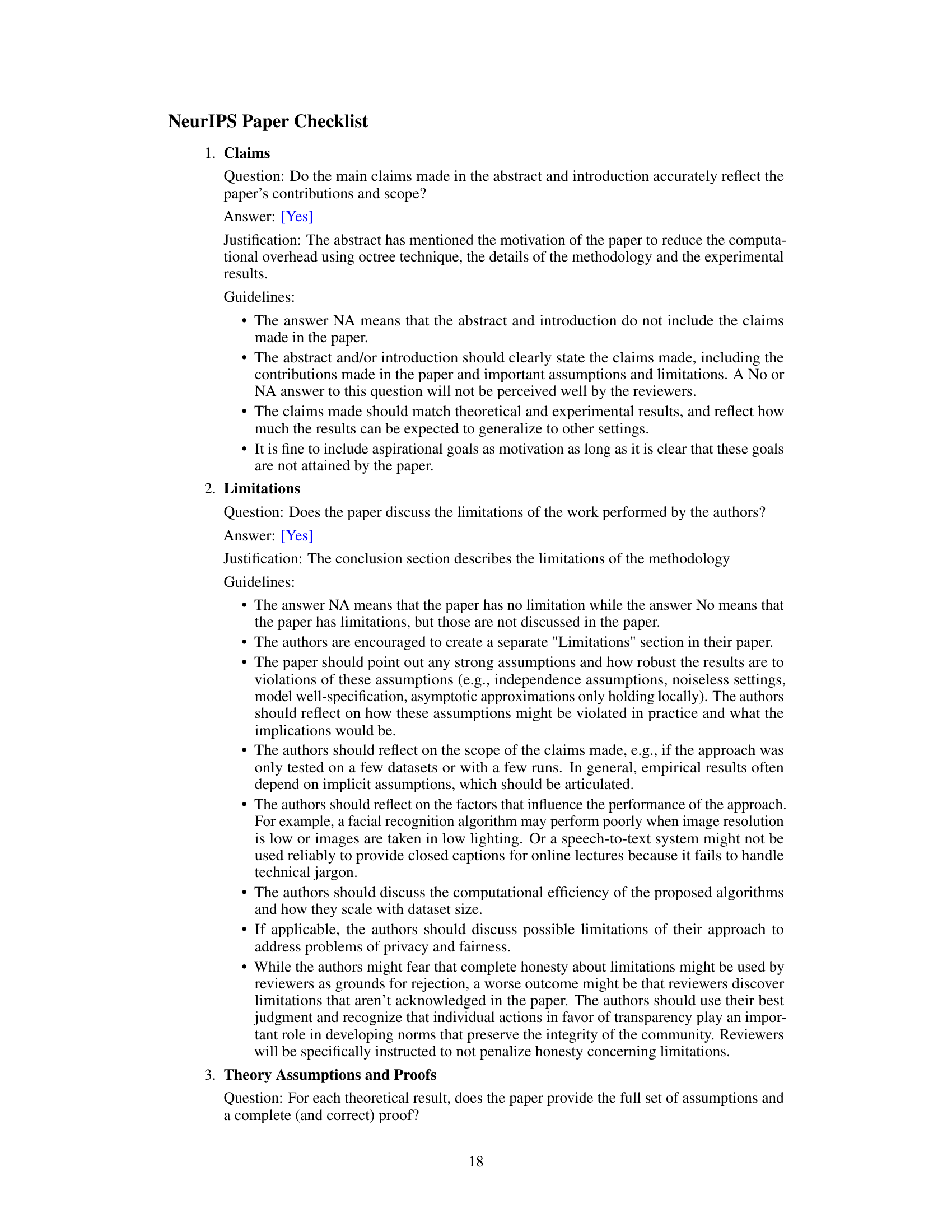

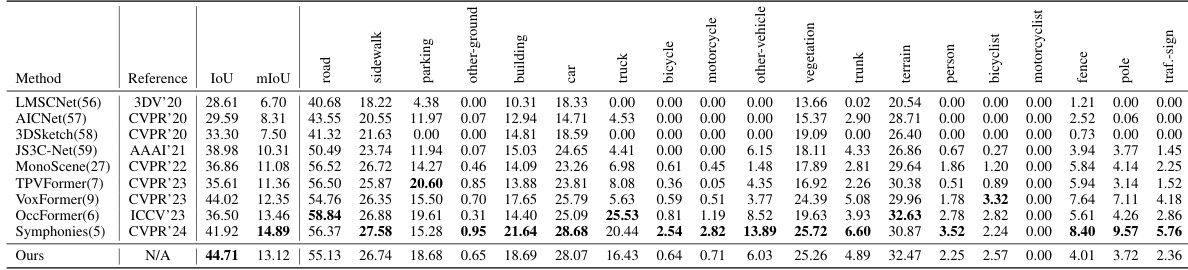

🔼 This table presents a comparison of the proposed OctreeOcc model’s performance against several state-of-the-art (SOTA) methods for 3D occupancy prediction on the Occ3D-nuScenes dataset. The results are reported as mean Intersection over Union (mIoU) scores for each of 18 semantic classes, along with details on the model’s backbone network, image resolution, and whether or not camera masks were used during training. The table helps to illustrate the superiority of OctreeOcc in terms of accuracy and efficiency.

read the caption

Table 1: 3D Occupancy prediction performance on Occ3D-nuScenes dataset. '*' denotes training with the camera mask.

In-depth insights#

Octree for Occupancy#

Utilizing octrees for occupancy prediction offers a compelling approach to address the limitations of traditional dense grid-based methods. Octrees’ hierarchical structure allows for adaptive resolution, efficiently representing sparse regions with coarse granularity while focusing on detailed areas with finer resolution. This multi-granularity aspect is crucial for handling the inherent variability in 3D scenes, where objects and semantic regions exist at vastly different scales. The efficiency gains stem from significantly reducing the number of voxels required for scene representation, leading to substantial computational savings. However, challenges remain in effectively learning and predicting the octree structure from 2D image data, especially with respect to handling occlusion and depth ambiguities. Incorporating semantic information into the octree initialization step is vital for improving prediction accuracy, while iterative refinement mechanisms can further rectify and enhance the predicted octree structure. Despite these challenges, octree-based occupancy prediction represents a promising avenue for achieving both efficiency and accuracy in 3D scene understanding.

Semantic-guided Init#

The heading “Semantic-guided Init” suggests a method for initializing a model or process using semantic information. This is a crucial step, as a well-informed initialization can significantly improve the efficiency and effectiveness of subsequent computations. The ‘semantic’ aspect implies the use of higher-level information, such as object categories or scene context, to guide the initialization process. Instead of relying solely on random initialization or low-level features, semantic guidance allows the model to start with a more meaningful representation. This can dramatically reduce training time by providing a strong starting point that aligns well with the task. This method likely involves leveraging pre-trained models for image segmentation or object recognition, creating a ‘semantic map’ of the input scene. The ‘semantic map’ might encode information about object locations, classes, and relationships. This ‘semantic map’ is then used to inform the initialization process, perhaps by guiding the assignment of initial weights or by pre-defining certain model parameters. Effective semantic-guided initialization is critical for tasks such as scene understanding and 3D reconstruction, where a meaningful understanding of the scene’s content is crucial for accurate predictions. This approach contrasts with traditional methods that might ignore or inadequately utilize semantic information during initialization, resulting in slower convergence and less accurate final results.

Iterative Refinement#

Iterative refinement, in the context of 3D occupancy prediction, is a crucial technique for improving prediction accuracy and efficiency. The core idea is to iteratively refine an initial prediction using feedback from subsequent processing steps. This iterative process allows the model to correct initial errors or inconsistencies and learn more from the data. Octree-based methods particularly benefit from iterative refinement because the hierarchical nature of octrees allows for efficient correction of errors at different levels of granularity. A key aspect is how the feedback is generated and integrated into the refinement process. This could involve using additional sensor data, comparing the initial prediction to ground truth data, or employing a different algorithm to identify and correct prediction errors. The iterative structure rectification module in OctreeOcc elegantly demonstrates this principle. By initially predicting a rough structure and then iteratively refining it based on features extracted from the data, OctreeOcc demonstrates substantial improvement in both prediction accuracy and computational efficiency. The strategy is adaptive, focusing refinement efforts on regions of uncertainty, making the overall process efficient and effective.

Efficiency Gains#

Analyzing efficiency gains in 3D occupancy prediction reveals significant potential for improvement. Traditional methods using dense grids suffer from high computational costs, especially when dealing with detailed scenes or small objects. Octree-based approaches offer a promising solution by adaptively adjusting resolution based on scene complexity. This variable granularity allows for efficient representation of both large and small objects, significantly reducing memory consumption and processing time. The integration of semantic information further refines octree structure, improving prediction accuracy without sacrificing efficiency. The 15-24% reduction in computational overhead compared to dense-grid methods demonstrated in the paper is a compelling result, highlighting the practical value of this approach. However, further investigation is needed to assess how this efficiency scales with increasingly complex scenes and larger datasets. The impact of the octree structure initialization and refinement steps on overall performance requires more detailed analysis. Additionally, exploring the trade-off between efficiency and accuracy at different octree depths is crucial for optimizing the method’s performance in various applications.

Future Works#

Future work could explore several promising avenues to enhance OctreeOcc. Improving the robustness of the octree structure prediction is crucial; this might involve exploring alternative loss functions, refining the iterative structure rectification module, or incorporating more sophisticated geometric priors. Investigating adaptive octree depth strategies that dynamically adjust the resolution based on local scene complexity would improve efficiency and accuracy. Exploring alternative query mechanisms beyond octree queries, such as point cloud-based methods or graph-based representations, could potentially offer advantages in specific scenarios. Furthermore, extending OctreeOcc to handle dynamic scenes would broaden its applications, requiring effective temporal modeling strategies. Finally, a thorough investigation into the generalizability of OctreeOcc to diverse sensor modalities (e.g., LiDAR, radar) and datasets is warranted to confirm its versatility and wide applicability in real-world environments.

More visual insights#

More on figures

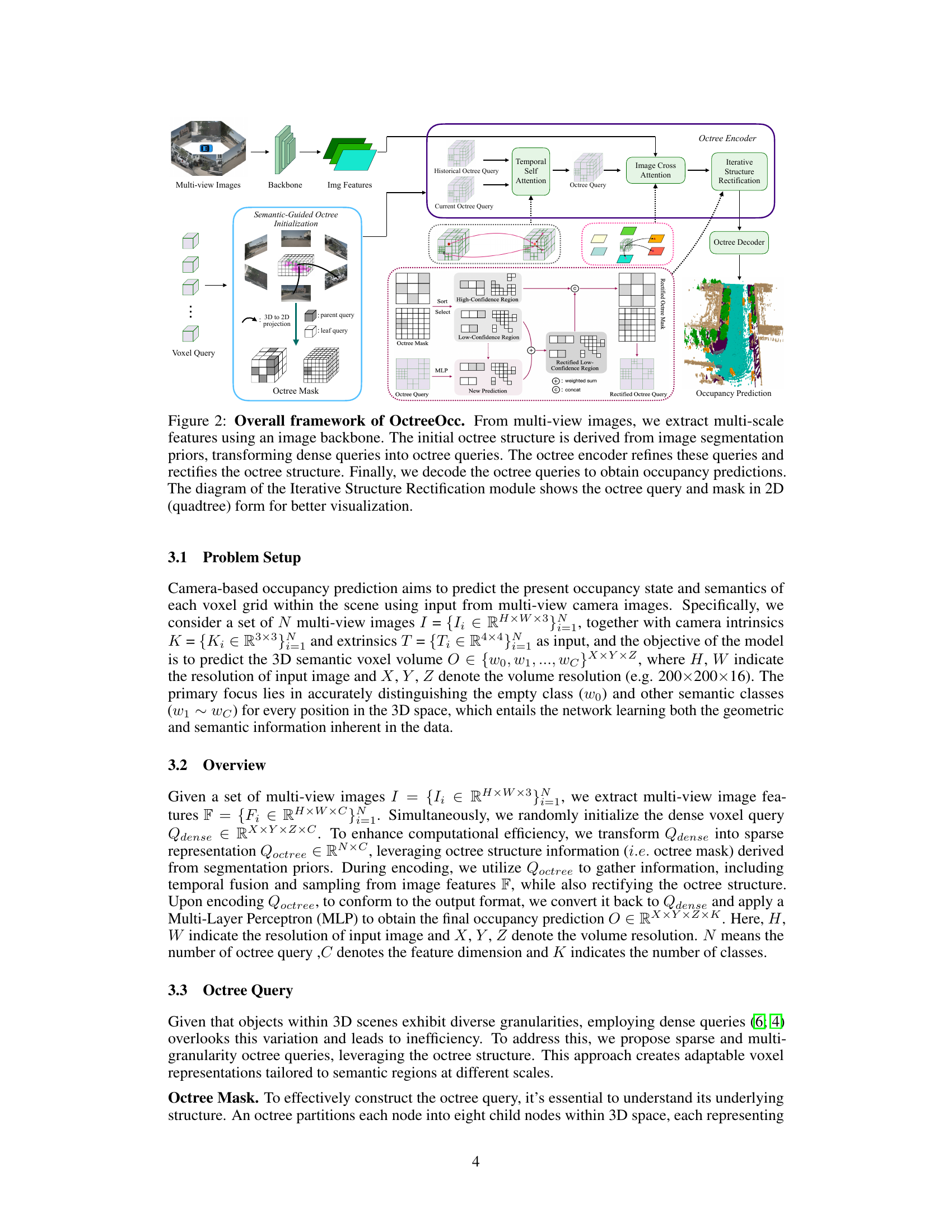

🔼 This figure illustrates the overall framework of the OctreeOcc model. It starts by extracting multi-scale features from multi-view images using a backbone network. A semantic-guided octree initialization module leverages image segmentation to create an initial octree structure. This structure is then used to convert dense voxel queries into sparse octree queries. An octree encoder processes these queries using temporal self-attention and image cross-attention to refine the queries and iteratively rectify the octree structure via an iterative structure rectification module. Finally, an octree decoder generates the occupancy prediction from the refined octree queries. A simplified 2D representation (quadtree) is used to visualize the iterative structure rectification process.

read the caption

Figure 2: Overall framework of OctreeOcc. From multi-view images, we extract multi-scale features using an image backbone. The initial octree structure is derived from image segmentation priors, transforming dense queries into octree queries. The octree encoder refines these queries and rectifies the octree structure. Finally, we decode the octree queries to obtain occupancy predictions. The diagram of the Iterative Structure Rectification module shows the octree query and mask in 2D (quadtree) form for better visualization.

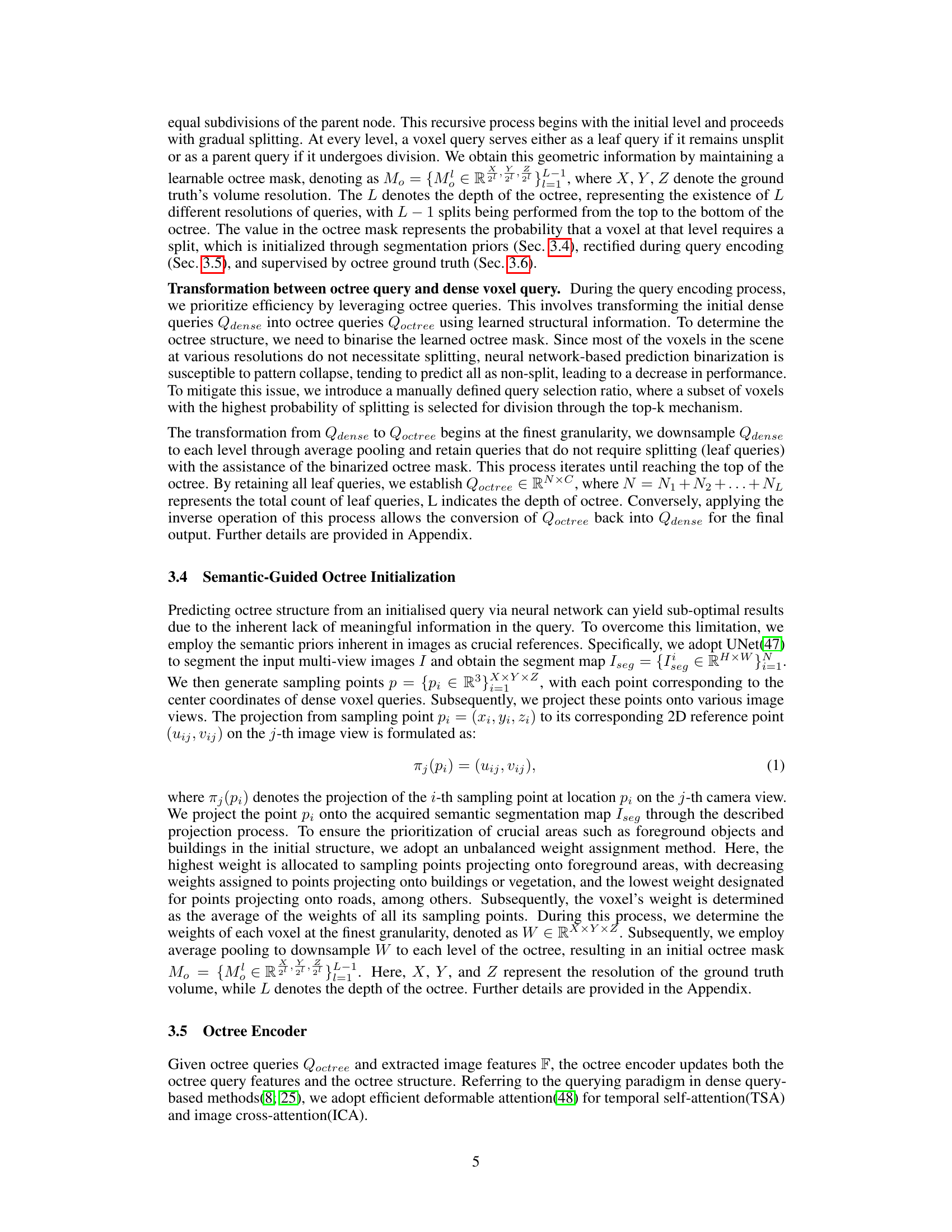

🔼 This figure demonstrates the improvement in octree structure prediction after applying the Iterative Structure Rectification module. The left side shows the initial, less accurate prediction, while the right side displays a refined structure that better aligns with the shapes of the objects in the scene. The rectification module enhances the accuracy and consistency of the octree representation.

read the caption

Figure 3: Illustration of octree structure rectification. The left figure shows the initially predicted octree structure, while the right figure displays the structure after rectification. It's evident that the rectification module improves the consistency of the octree structure with the object's shape.

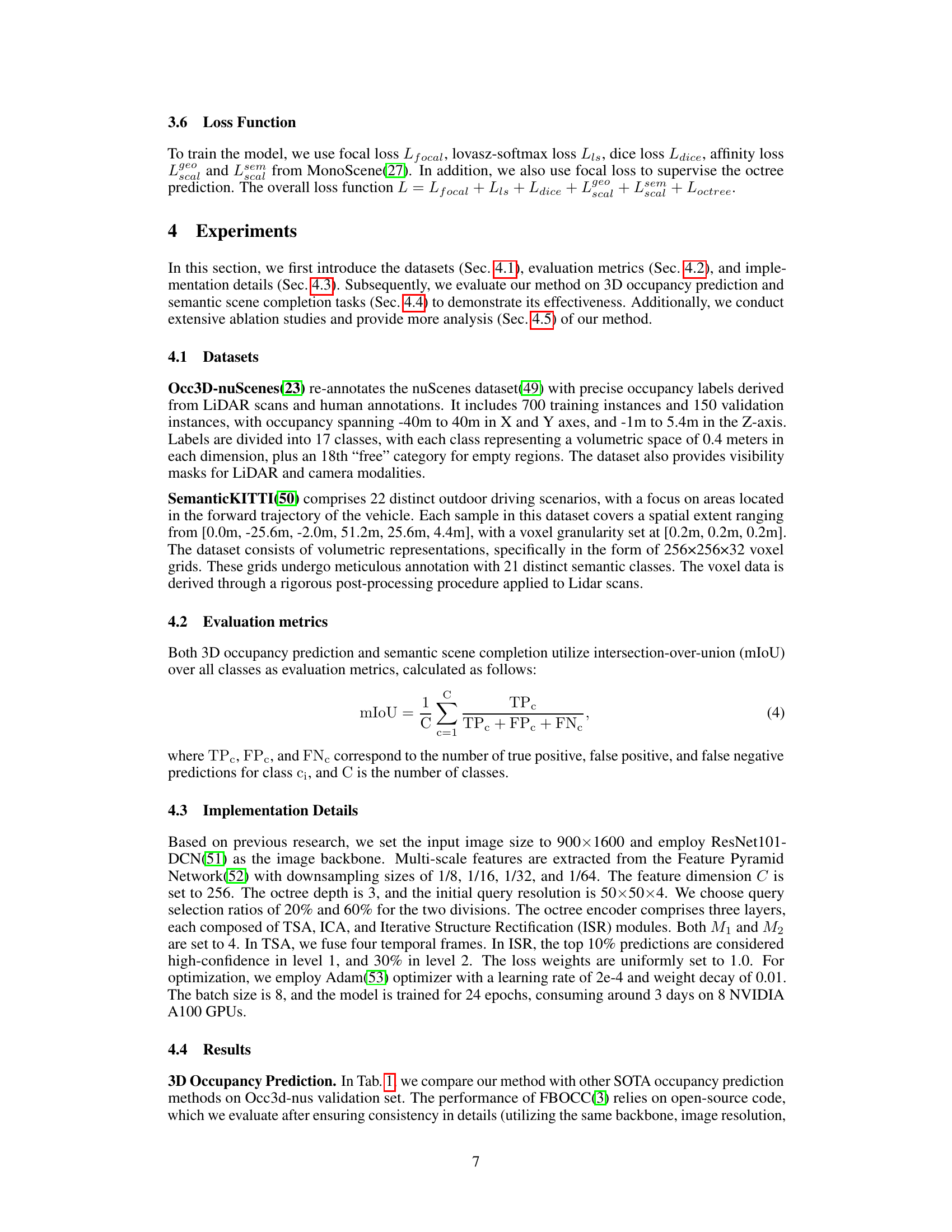

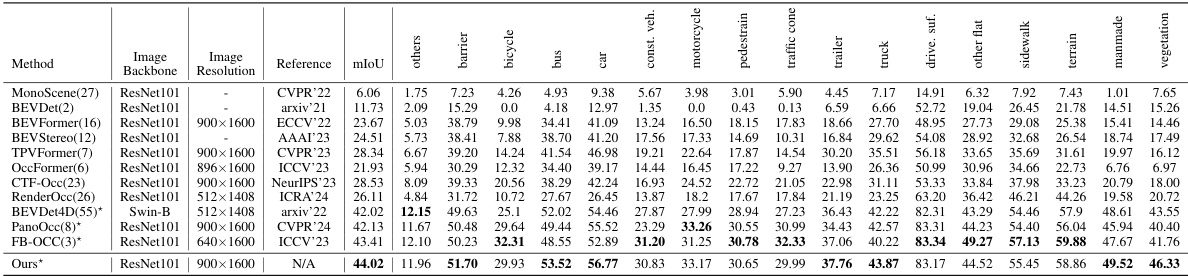

🔼 This figure shows a qualitative comparison of occupancy prediction results from different methods on the Occ3D-nuScenes validation set. The predictions are visualized as 3D point clouds colored by semantic class, and are compared to the ground truth. The resolution of the voxel predictions is 200x200x16. The figure highlights the improvements in accuracy and detail provided by the proposed OctreeOcc method compared to existing methods like PanoOcc and FB-OCC.

read the caption

Figure 4: Qualitative results on Occ3D-nuScenes val set, where the resolution of the voxel predictions is 200×200×16.

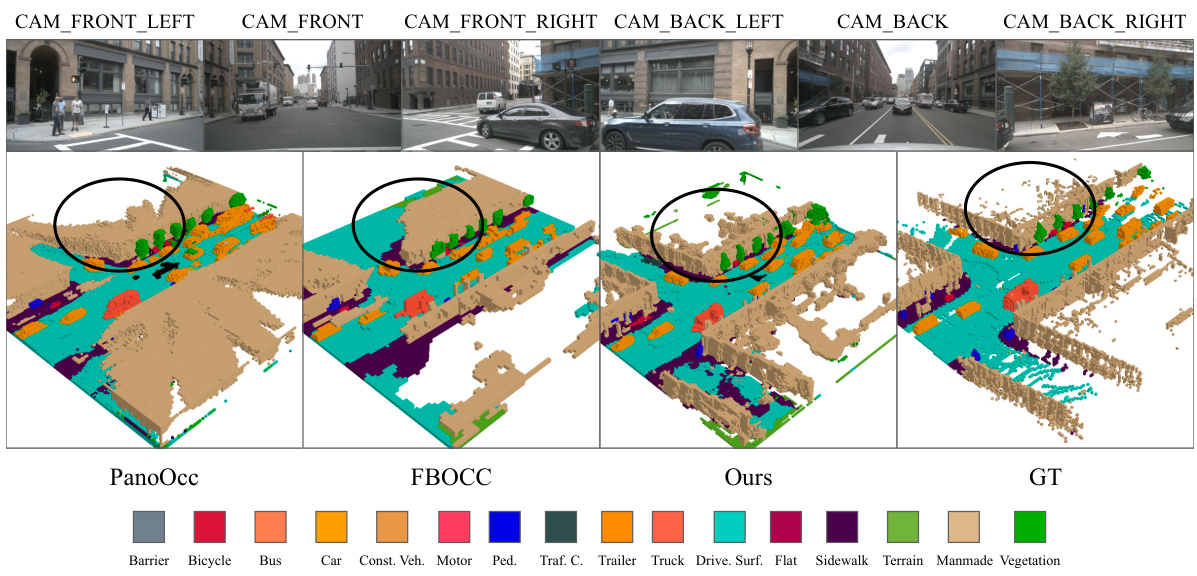

🔼 This figure compares the occupancy prediction results of three different methods (PanoOcc, FB-OCC, and OctreeOcc) with the ground truth. The first row shows the input multi-view images used for prediction. The second row displays the occupancy predictions from each method, visually demonstrating the differences in their performance. Each method’s prediction is presented alongside the ground truth for direct comparison and evaluation. Circular highlights are used to point out the differences and highlight specific areas of interest for better visualization.

read the caption

Figure 5: More visualization on Occ3D-nuScenes validation set. The first row displays input multi-view images, while the second row showcases the occupancy prediction results of PanoOcc(8), FBOCC(3), our methods, and the ground truth.

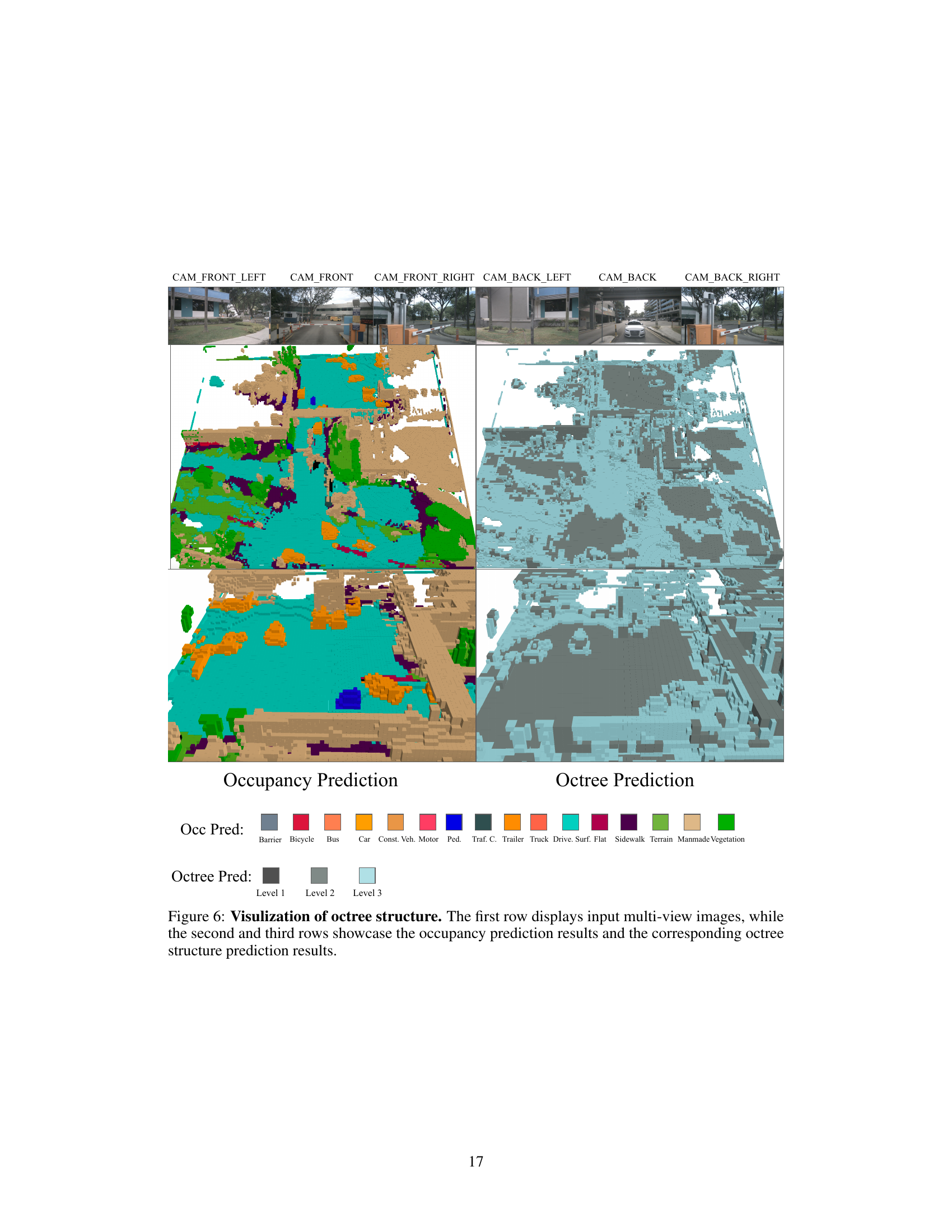

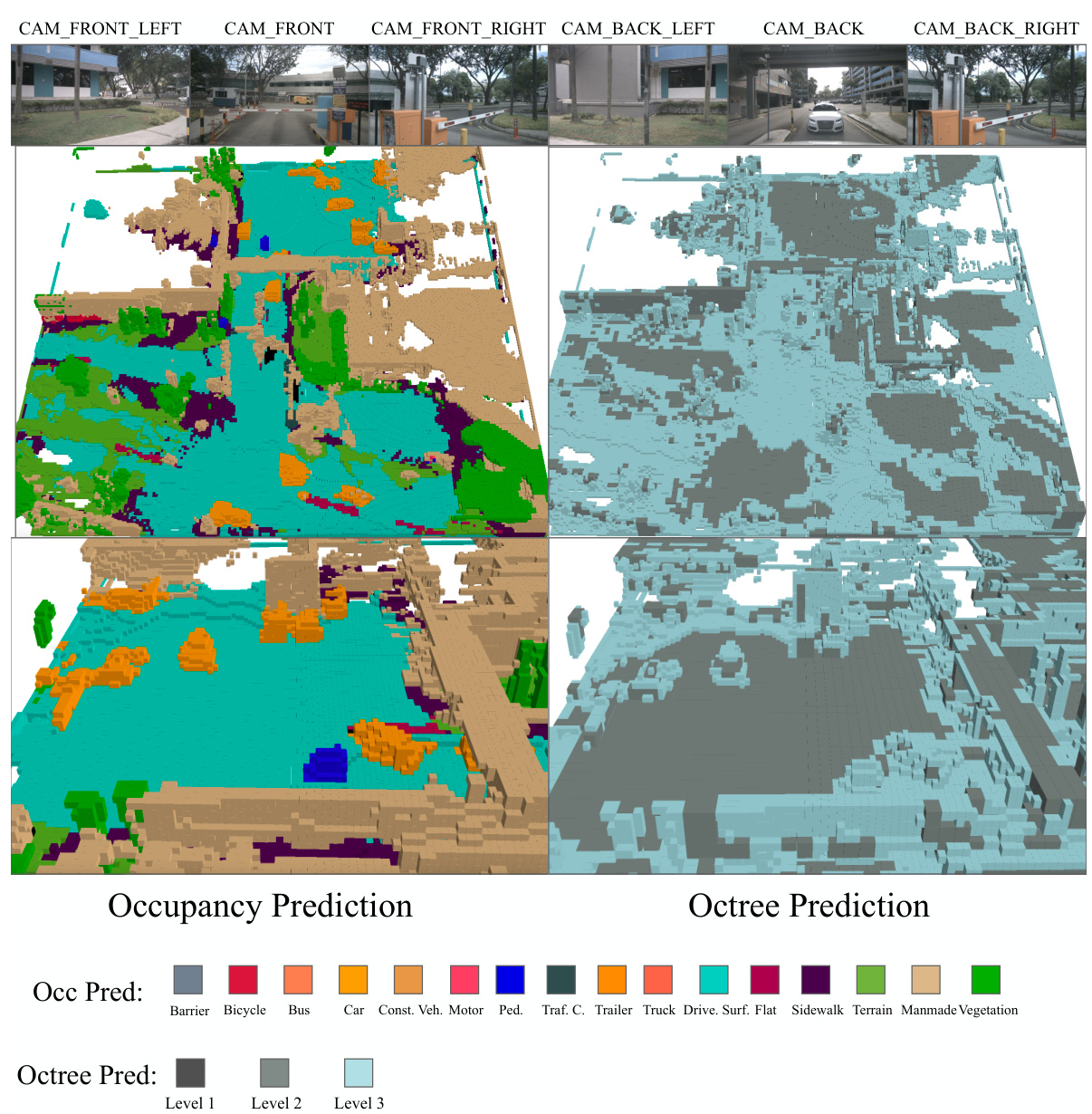

🔼 This figure visualizes the OctreeOcc model’s output. The top row shows the input multi-view images used by the model. The second row displays the final occupancy prediction generated by the model in a 3D voxel grid. The third row presents a visualization of the octree structure created during the prediction process. Different colors in the occupancy prediction represent different semantic classes, while the different gray levels in the octree structure represent different levels of detail in the octree, showing the varying granularities used to represent different parts of the scene.

read the caption

Figure 6: Visualization of octree structure. The first row displays input multi-view images, while the second and third rows showcase the occupancy prediction results and the corresponding octree structure prediction results.

More on tables

🔼 This table presents a comparison of the 3D occupancy prediction performance of various methods on the Occ3D-nuScenes dataset. The performance is measured using mean Intersection over Union (mIoU) across different object categories. The asterisk (*) indicates methods that were trained using camera masks, highlighting the impact of incorporating this additional data. The table shows that the proposed OctreeOcc method significantly outperforms other state-of-the-art methods.

read the caption

Table 1: 3D Occupancy prediction performance on Occ3D-nuScenes dataset. '*' denotes training with the camera mask.

🔼 This table presents a comparison of the 3D occupancy prediction performance of several state-of-the-art methods on the Occ3D-nuScenes dataset. The metrics used are mean Intersection over Union (mIoU) for different object categories. The asterisk (*) indicates that those models were trained using the camera mask, highlighting the impact of this additional data on performance. The table allows for a direct comparison of different approaches and their relative strengths and weaknesses in predicting occupancy across various object classes. The table shows the method name, their reference, and the mIoU for different object classes.

read the caption

Table 1: 3D Occupancy prediction performance on Occ3D-nuScenes dataset. '*' denotes training with the camera mask.

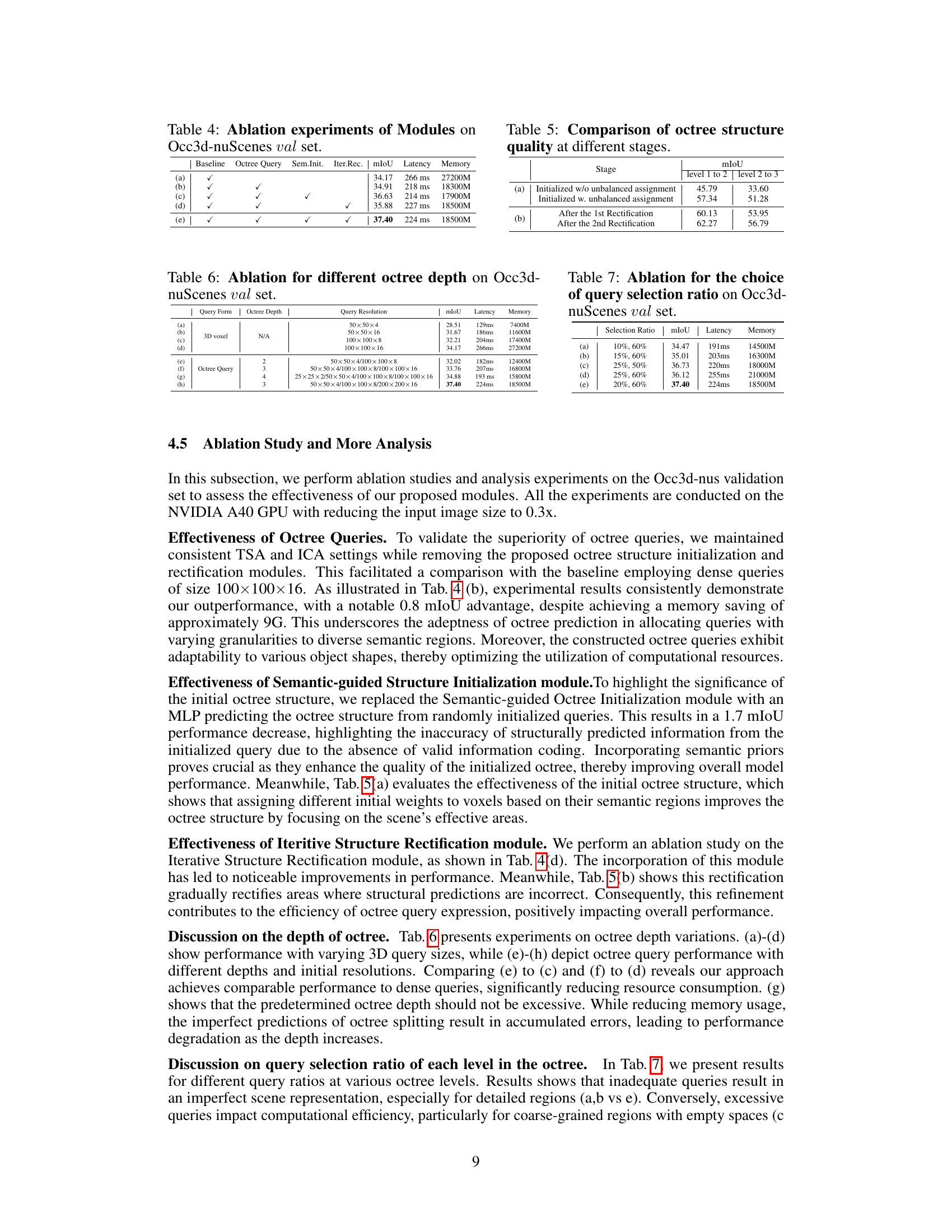

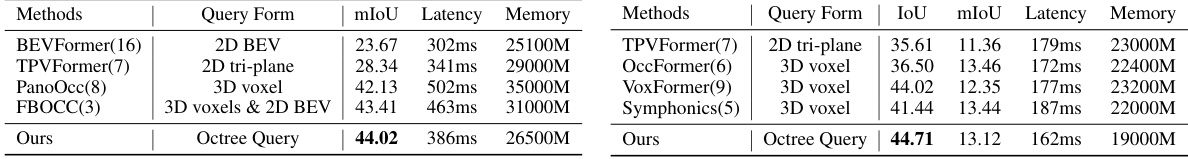

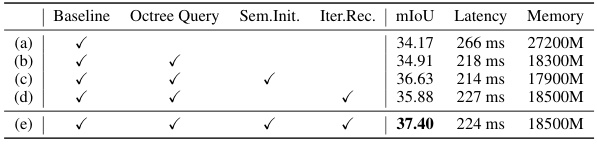

🔼 This table presents the ablation study results on the Occ3d-nuScenes validation set. It shows the impact of different modules on the model’s performance, measured by mIoU, latency, and memory usage. The modules evaluated include Octree Query, Semantic Initialization (Sem. Init.), and Iterative Rectification (Iter.Rec.). Each row represents a different model configuration, with checkmarks indicating the inclusion of specific modules. The baseline model uses dense queries instead of octree queries. The results demonstrate that incorporating all the modules leads to improved performance.

read the caption

Table 4: Ablation experiments of Modules on Occ3d-nuScenes val set.

🔼 This table shows the comparison of octree structure quality at different stages. The first row shows the mIoU of level 1 to 2 and level 2 to 3 when the octree is initialized without unbalanced assignment. The second row shows the mIoU of level 1 to 2 and level 2 to 3 when the octree is initialized with unbalanced assignment. The third row shows the mIoU of level 1 to 2 and level 2 to 3 after the first rectification. The fourth row shows the mIoU of level 1 to 2 and level 2 to 3 after the second rectification.

read the caption

Table 5: Comparison of octree structure quality at different stages.

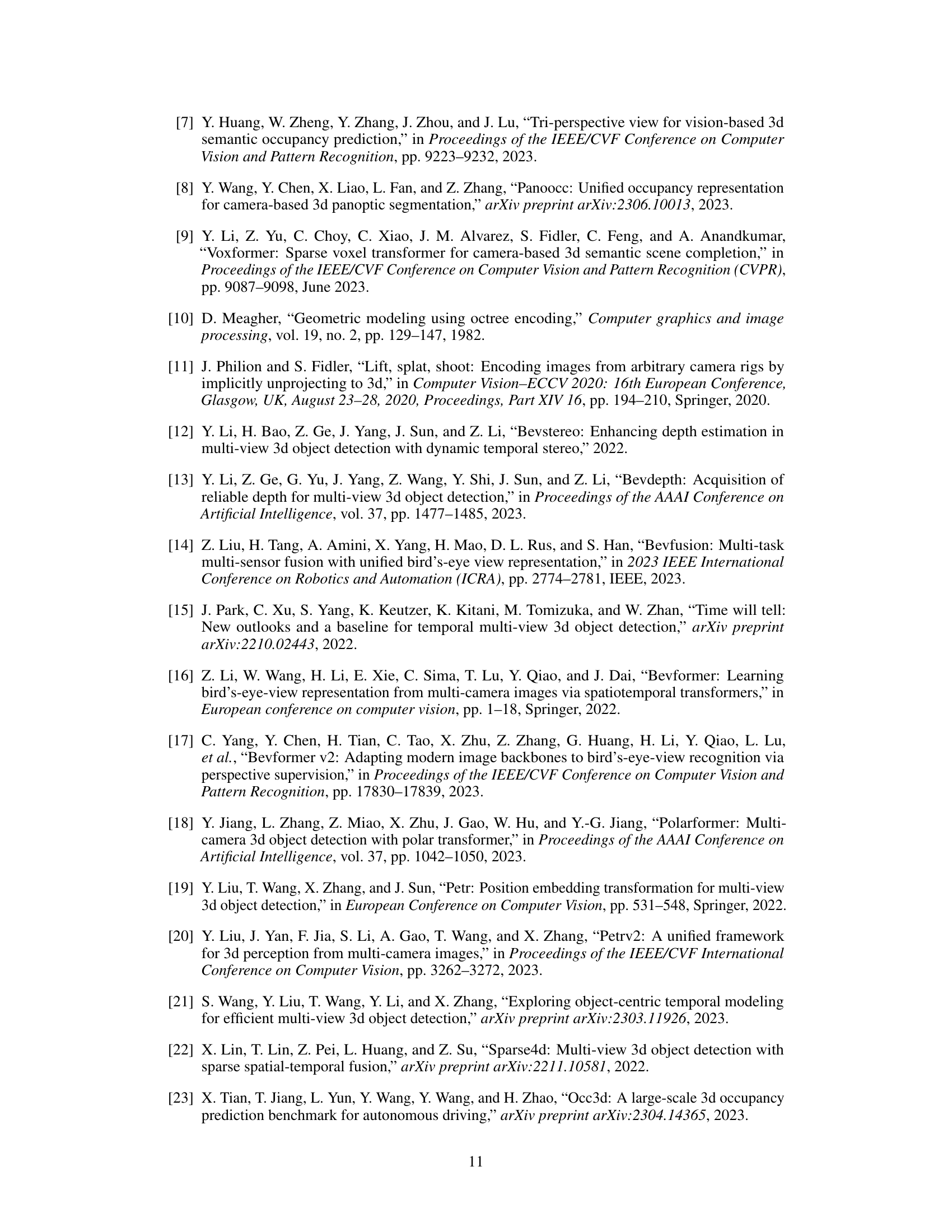

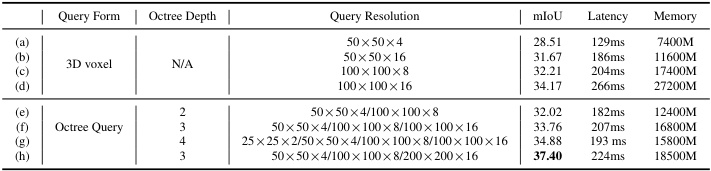

🔼 This table presents the ablation study on different octree depths. It compares the performance (mIoU, Latency, and Memory) of using different octree depths (2, 3, 4) and query resolutions. The results show how the choice of octree depth and resolution impacts the efficiency and accuracy of the occupancy prediction.

read the caption

Table 6: Ablation for different octree depth on Occ3d-nuScenes val set.

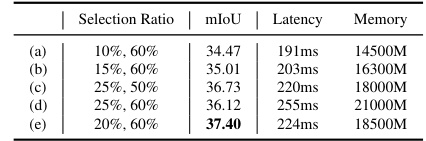

🔼 This table presents an ablation study on the effect of different query selection ratios on the performance of the OctreeOcc model. The experiment varied the percentage of voxels selected for splitting at different levels of the octree. The results show that a selection ratio of 20%, 60% yields the best performance in terms of mIoU, although increasing this ratio further can improve performance but also increases latency and memory consumption.

read the caption

Table 7: Ablation for the choice of query selection ratio on Occ3d-nuScenes val set.

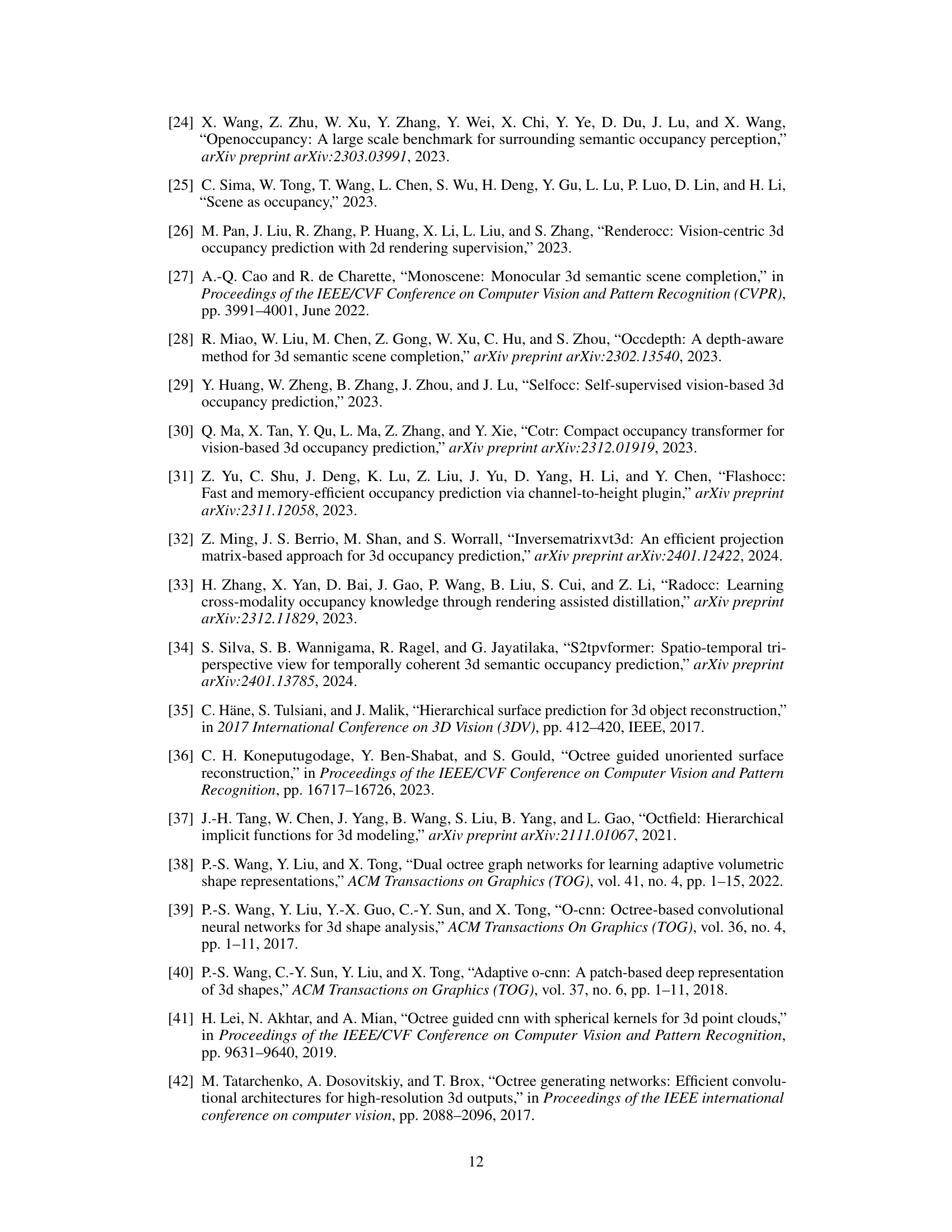

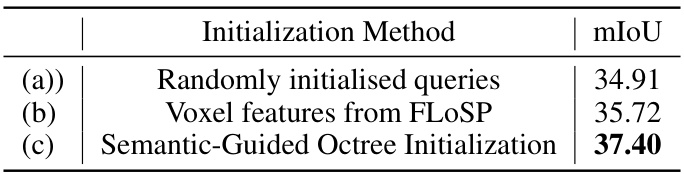

🔼 This table presents the ablation study results for different octree initialization methods. It compares the mIoU achieved using randomly initialized queries, voxel features from the FloSP method, and the proposed Semantic-Guided Octree Initialization. The results demonstrate the superior performance of the Semantic-Guided Octree Initialization, highlighting its effectiveness in improving the accuracy of the initial octree structure.

read the caption

Table 8: More ablation of octree initialization

🔼 This table compares the performance of the proposed OctreeOcc method against a baseline and another octree-based method (OGN) in terms of mIoU, latency, and memory usage. The results show that OctreeOcc achieves the highest mIoU while maintaining relatively low latency and memory consumption compared to the other methods.

read the caption

Table 9: Comparison with another octree method.

Full paper#