↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Differentially Private Stochastic Convex Optimization (DP-SCO) is a crucial problem in machine learning, aiming to find optimal solutions while preserving data privacy. However, existing DP-SCO algorithms often rely on the unrealistic assumption that data gradients have uniformly bounded Lipschitz constants. This assumption often breaks down when real-world data exhibits heavy tails, where the probability distribution has extreme values. Therefore, there’s a need to improve the robustness and efficiency of DP-SCO algorithms in heavy-tailed settings.

This research introduces new reduction-based techniques to develop DP-SCO algorithms that achieve near-optimal convergence rates, even with heavy-tailed gradients. The key innovation is a novel population-level localization framework that effectively handles the challenges posed by heavy-tailed data. The study also offers a range of optimized algorithms, showcasing improvements under specific conditions like known Lipschitz constants or smooth functions. These contributions significantly advance the state-of-the-art in DP-SCO by providing more practical and efficient solutions for real-world applications.

Key Takeaways#

Why does it matter?#

This paper is crucial because it addresses the limitations of existing differentially private stochastic convex optimization (DP-SCO) algorithms. Current DP-SCO methods often assume uniformly bounded gradients (Lipschitz continuity), which is unrealistic for many real-world datasets with heavy tails. This work provides near-optimal algorithms for DP-SCO under a more realistic heavy-tailed assumption, significantly advancing the field and enabling broader applications of private machine learning.

Visual Insights#

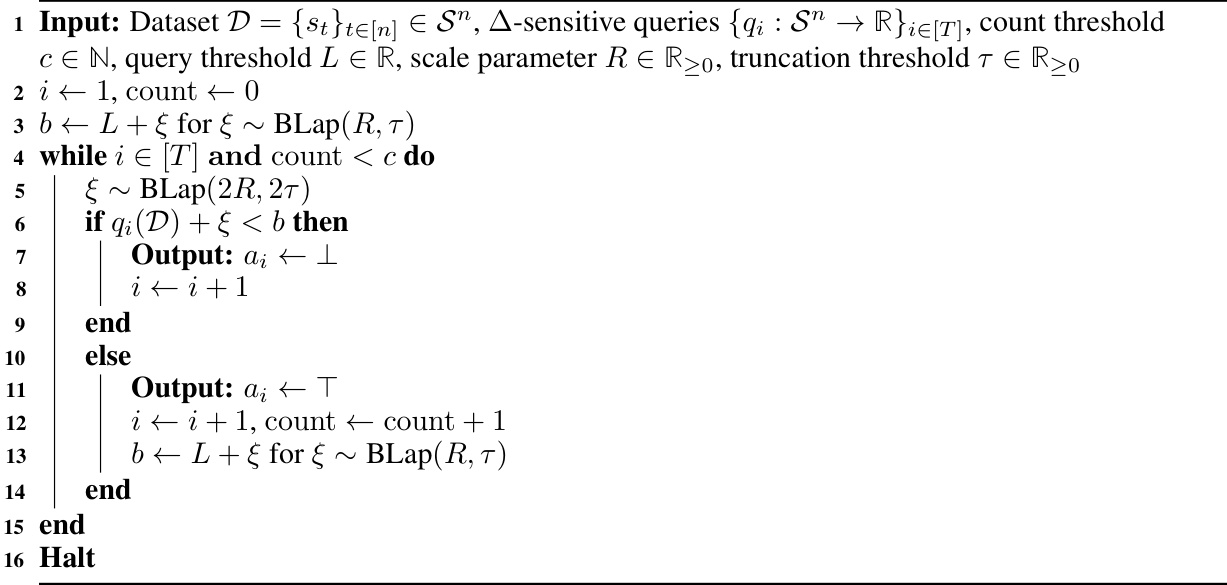

Algorithm 6 is a subroutine used in Algorithm 7, which is the main algorithm for smooth functions. This algorithm implements the sparse vector technique (SVT) to ensure differential privacy while handling heavy-tailed gradients. It takes as input a dataset D, a sequence of queries {qi}, a count threshold c, a query threshold L, a scale parameter R, and a truncation threshold τ. The algorithm iteratively processes the queries, adding bounded Laplace noise, and if a query is below the threshold, outputs 1; otherwise, outputs T and increments a counter. The algorithm halts either when the counter reaches c or all queries are processed. The outputs, a sequence of 1s and Ts, indicating whether each query was below the threshold or not, are used to determine the algorithm’s stability in Algorithm 7, enabling privacy.

Full paper#