TL;DR#

In-context learning (ICL) in pre-trained transformer models has shown promising results in single-agent settings, but its capabilities in multi-agent scenarios remain largely unexplored. This paper focuses on the in-context game-playing (ICGP) capabilities of these models in competitive two-player zero-sum games. The study highlights the challenges of applying ICL to multi-agent scenarios due to game-theoretic complexities and the need to learn Nash Equilibrium. Previous work primarily focused on ICRL in single-agent settings, limiting the broader applicability of the findings.

This research addresses these challenges by providing theoretical guarantees demonstrating that pre-trained transformers can effectively approximate Nash Equilibrium for both decentralized (each player uses a separate transformer) and centralized (one transformer controls both players) learning settings. The authors provide concrete constructions to show that transformers can implement well-known multi-agent game-playing algorithms. These results expand our understanding of ICL in transformers and offer insights into developing more robust and efficient AI agents in competitive scenarios.

Key Takeaways#

Why does it matter?#

This paper is crucial because it bridges the gap between empirical observations of transformer in-context learning and theoretical understanding, especially in multi-agent settings. It opens new avenues for research in in-context reinforcement learning (ICRL), potentially leading to more efficient and robust AI agents for various applications. The theoretical guarantees provided offer valuable insights for designing and improving such systems.

Visual Insights#

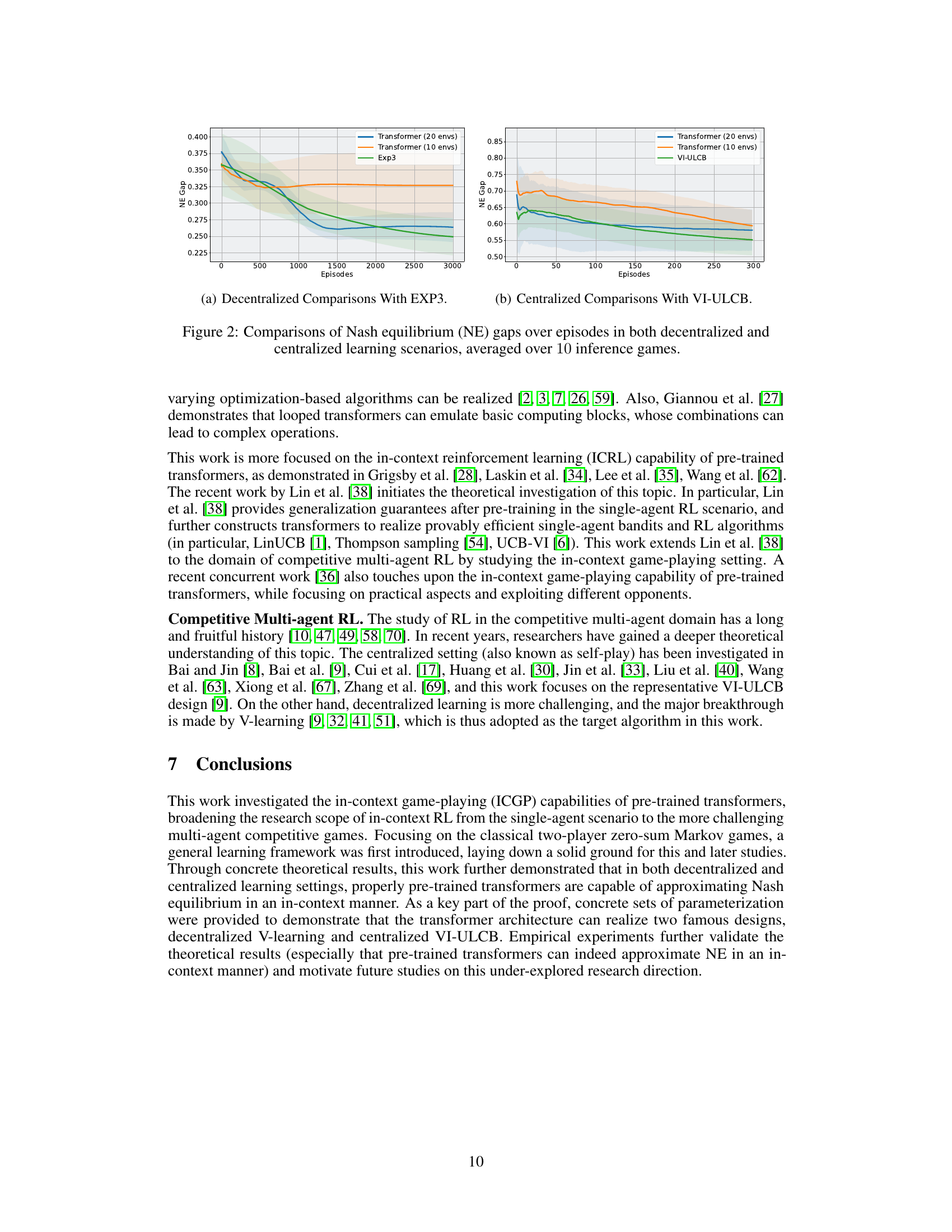

🔼 This figure illustrates the framework for in-context game playing using pre-trained transformers. It shows two scenarios: centralized learning, where a single transformer controls both players’ actions, and decentralized learning, where two separate transformers independently control each player. Both scenarios involve a pre-training phase using a context algorithm to generate a dataset of interactions and an inference phase where the pre-trained transformers are prompted with a limited set of interactions in a new game environment to generate actions and predict the game’s outcome. The orange arrows represent the supervised pre-training phase, and the blue arrows represent the inference phase.

read the caption

Figure 1: An overall view of the framework, where the in-context game-playing (ICGP) capabilities of transformers are studied in both decentralized and centralized learning settings. The orange arrows denote the supervised pre-training procedure and the blue arrows mark the inference procedure.

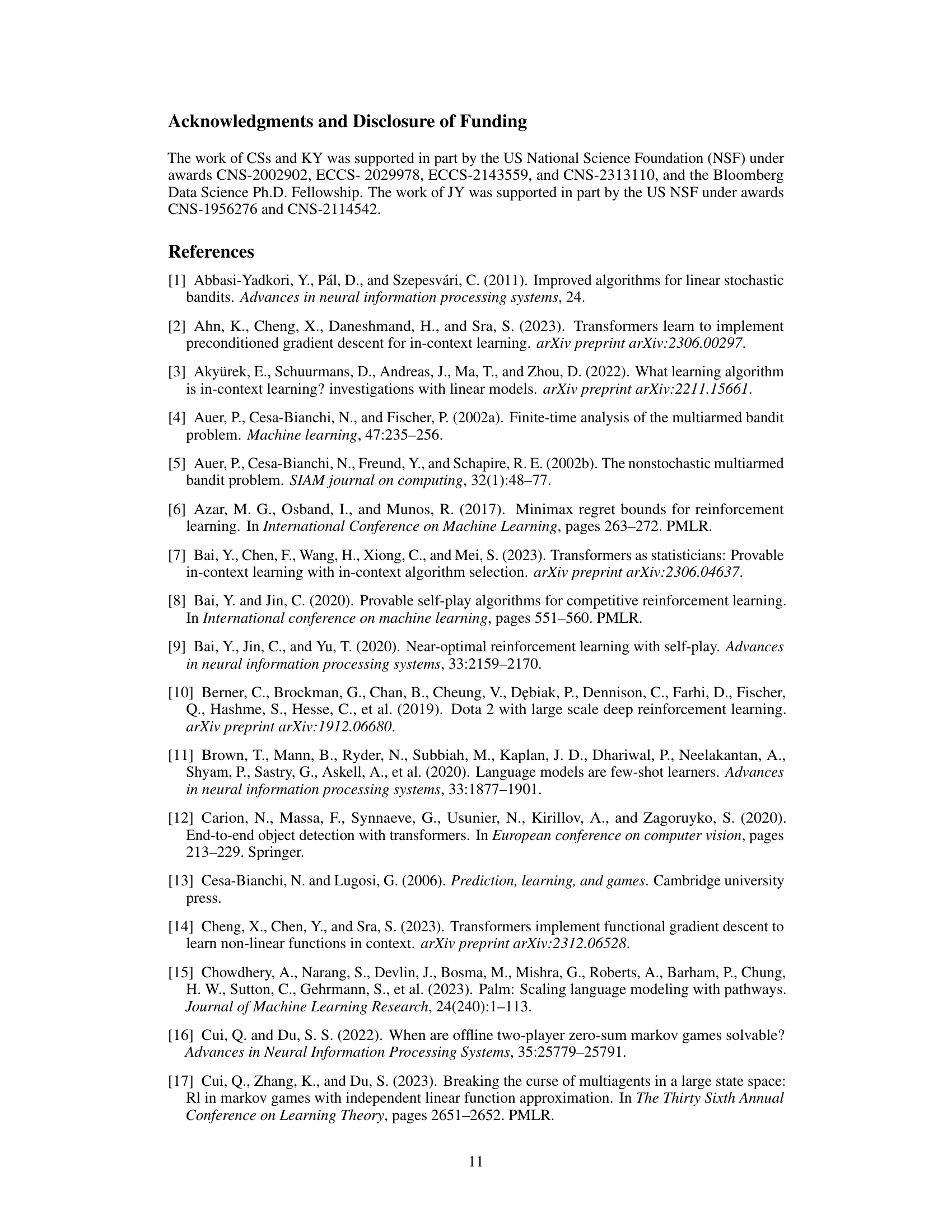

🔼 This figure presents the results of the NE gap comparison between the pre-trained transformers and the context algorithms (EXP3 and VI-ULCB) over episodes in both decentralized and centralized learning settings. The NE gap is averaged over 10 inference games. It empirically validates the theoretical result that more pre-training games benefit the final game-playing performance during inference, and that the obtained transformers can indeed learn to approximate NE in an in-context manner.

read the caption

Figure 2: Comparisons of Nash equilibrium (NE) gaps over episodes in both decentralized and centralized learning scenarios, averaged over 10 inference games.

Full paper#