TL;DR#

This paper investigates the problem of learning a hidden structure of a discrete set of tokens based solely on their interactions. The interactions are represented by a function whose value depends only on the class memberships of the involved tokens. The authors find that recovering the class memberships is computationally hard (NP-complete) in the general case. This highlights the challenge of understanding the structure in complex systems that only reveal information about individual entities via sparse interactions.

The paper then shifts to an information-theoretic and gradient-based analysis of the problem. It shows that, surprisingly, a relatively small number of samples (on the order of N ln N) is sufficient to recover the cluster structure in random cases. Furthermore, the paper shows that gradient flow dynamics of token embeddings can also be used to uncover the hidden structure, albeit requiring more samples and under more restrictive conditions. This provides valuable theoretical insights into how models might capture complex concepts during training, demonstrating the potential of gradient-based methods to recover the structure even if it is computationally hard to solve the problem exactly.

Key Takeaways#

Why does it matter?#

This paper is crucial because it tackles a fundamental challenge in modern machine learning: understanding how complex relationships are learned from data through interactions. Its findings about learning hidden structures from sparse interactions are highly relevant to the development and analysis of large language models, paving the way for more efficient and interpretable AI systems. The NP-completeness result highlights the inherent difficulty, guiding future research towards efficient approximation algorithms. The study of gradient descent dynamics offers insights into how such structures emerge during model training.

Visual Insights#

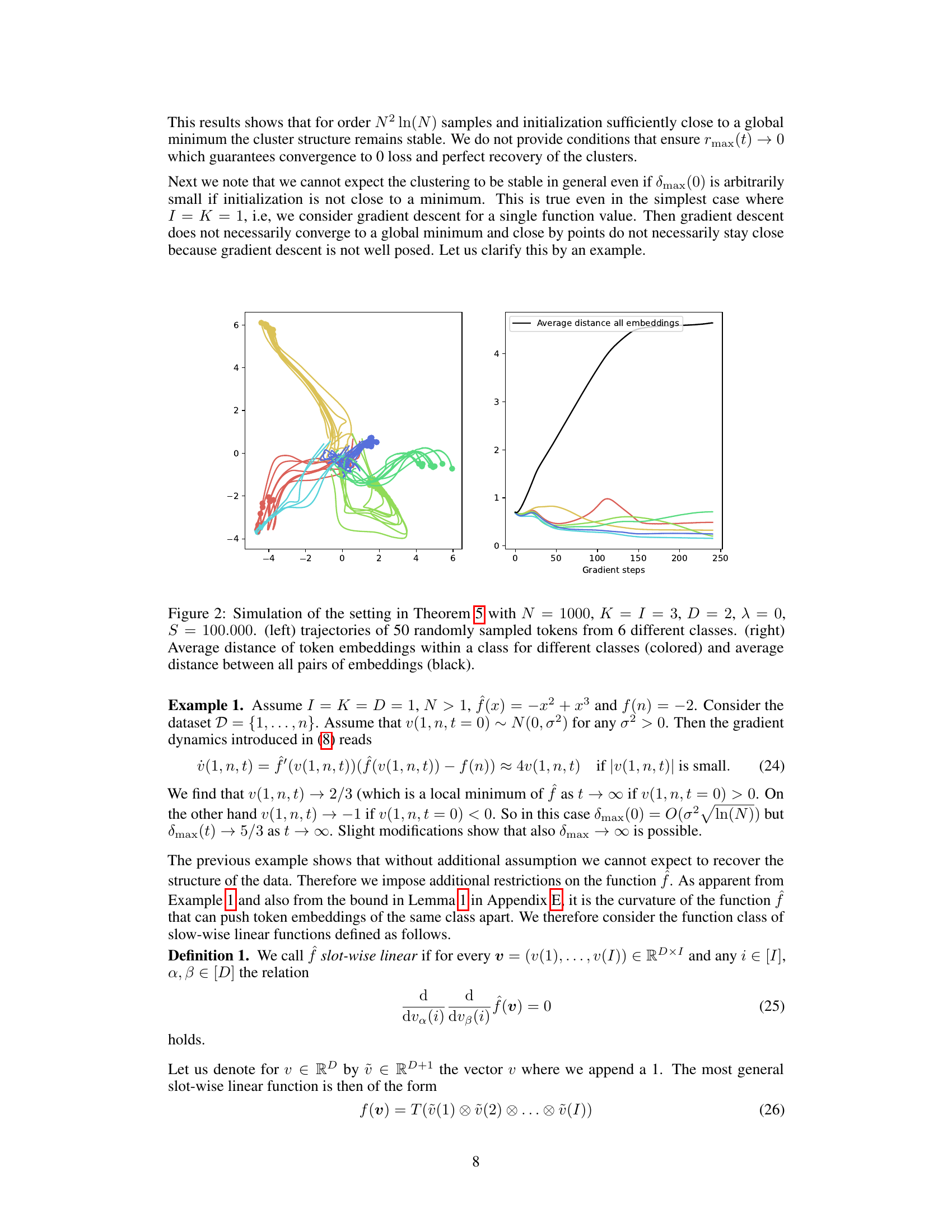

🔼 This figure illustrates an example of the setting described in the paper, where tokens are grouped into a small number of classes. The figure shows three sets (i=1,2,3) of tokens, each partitioned into subgroups (clusters). The dashed lines represent samples of the interaction function f, demonstrating how the function’s output depends only on the class membership of the input tokens. This example helps visualize how the hidden structure of token classes is learned based on observations of their interactions.

read the caption

Figure 1: Illustration of the setting for I = 3 different groups clustered in 3, 2, and 3 subgroups respectively. Samples consist of one element of each group, the dashed lines indicate samples (1, 3, 1) and (3, 7, 6).

Full paper#