↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Training unnormalized models is challenging because the gradient of the log-likelihood involves an intractable expectation. Contrastive Divergence (CD) is a popular approximation method that uses Markov Chain Monte Carlo (MCMC) to estimate the gradient. However, existing analysis mostly focused on asymptotic convergence rates, lacking precise non-asymptotic guarantees. This paper addresses this gap.

The authors perform a non-asymptotic analysis of CD, showing that under certain regularity conditions, CD can converge at the optimal parametric rate (O(n⁻¹⁄²)). They analyze both online and offline settings with various data batching schemes. Furthermore, they show that averaging the CD iterates yields a near-optimal estimator with asymptotic variance close to the Cramér-Rao lower bound. This work significantly advances our theoretical understanding of CD and provides practical guidance for algorithm design and performance evaluation.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers working with unnormalized models, a common challenge in machine learning. It offers non-asymptotic analysis of contrastive divergence, providing strong theoretical guarantees and paving the way for more efficient and reliable algorithms in various applications. The study also opens new avenues for research into near-optimal estimators and their asymptotic properties.

Visual Insights#

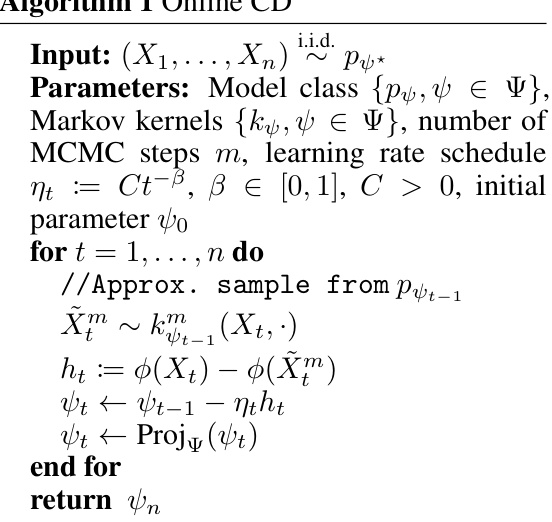

This algorithm describes the online contrastive divergence method. It iteratively updates model parameters using a single data point at each step. The algorithm approximates the gradient of the log-likelihood using a Markov Chain Monte Carlo (MCMC) method, and projects the updated parameters onto the feasible parameter space.

Full paper#