TL;DR#

Stochastic gradient bandit algorithms have been widely used in machine learning and reinforcement learning due to their simplicity and scalability. However, their theoretical understanding has been lacking, with most existing analyses relying on restrictive assumptions like small or decaying learning rates. This is because standard optimization techniques fail to adequately handle the exploration-exploitation trade-off present in online bandit settings.

This paper provides a new theoretical understanding of these algorithms, proving that they converge to a globally optimal policy almost surely using any constant learning rate. The key insight is that the algorithm implicitly balances exploration and exploitation, ensuring that it samples all actions infinitely often. This holds despite the use of non-convex optimization and stochastic approximations. The proofs utilize novel findings about action sampling rates and the relationship between cumulative progress and noise. The theoretical results are supported by simulations.

Key Takeaways#

Why does it matter?#

This paper significantly advances our understanding of stochastic gradient bandit algorithms. It demonstrates global convergence for any constant learning rate, a surprising result that challenges existing assumptions and opens up new avenues for algorithm design and analysis. This impacts the development of more robust and scalable reinforcement learning methods, especially for problems with complex, high-dimensional action spaces. The theoretical findings are validated through simulations, adding practical significance to the work.

Visual Insights#

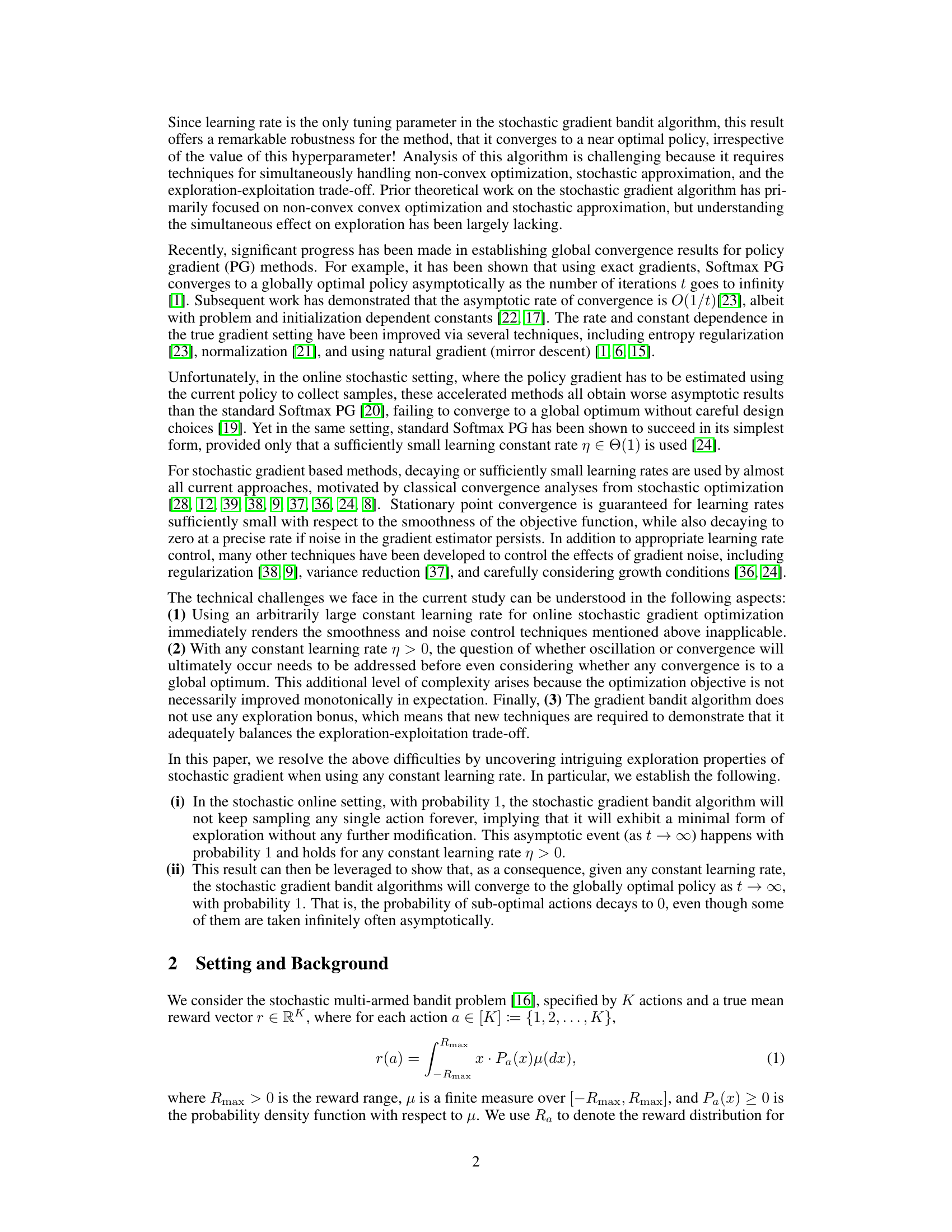

🔼 This figure visualizes the convergence of the stochastic gradient bandit algorithm across different learning rates (η = 1, 10, 100, 1000) in a 4-action bandit problem. Each subplot displays 10 independent runs, each represented by a separate curve, showing the log sub-optimality gap (log(r(a*) - πθr)) over time (log(t)). The y-axis represents the log sub-optimality gap, indicating how far the current policy’s expected reward is from the optimal reward. The x-axis shows the log of the number of iterations. The plots illustrate the algorithm’s convergence to the optimal policy even with large learning rates, though higher learning rates show more variance and potentially slower convergence in the initial stages.

read the caption

Figure 1: Log sub-optimality gap, log(r(a*) - πθr), plotted against the logarithm of time, log t, in a 4-action problem with various learning rates, η. Each subplot shows a run with a specific learning rate. The curves in a subplot correspond to 10 different random seeds. Theory predicts that essentially all seeds will lead to a curve converging to zero (-∞ in these plots). For a discussion of the results, see the text.

Full paper#