↗ arXiv ↗ Hugging Face ↗ Hugging Face ↗ Chat

TL;DR#

3D Gaussian splatting (3D-GS) excels at real-time rendering, but struggles with accurately modeling specular and anisotropic surfaces due to the limitations of spherical harmonics in representing high-frequency information. This paper introduces Spec-Gaussian, a novel approach that leverages anisotropic spherical Gaussians (ASGs) instead of spherical harmonics to represent the view-dependent appearance of 3D Gaussians. This allows for a more accurate representation of complex surface properties, leading to higher quality rendering.

Spec-Gaussian also introduces a coarse-to-fine training strategy to improve learning efficiency and eliminate artifacts caused by overfitting in real-world scenes. Experimental results demonstrate that Spec-Gaussian outperforms existing methods in rendering quality, especially for scenes with specular and anisotropic components. The use of ASGs significantly improves 3D-GS’s ability to model these complex visual effects without requiring a significant increase in the number of Gaussians; This enhancement expands the applicability of 3D-GS to more intricate and realistic scenarios.

Key Takeaways#

Why does it matter?#

This paper is crucial for researchers in computer graphics and related fields because it significantly improves the quality and efficiency of 3D scene rendering. Its novel approach using anisotropic spherical Gaussians addresses the limitations of existing methods in handling specular and anisotropic surfaces, which opens new possibilities for creating more realistic and visually appealing 3D models and virtual environments. The availability of code and datasets further enhances its impact, allowing other researchers to build upon this work and accelerate progress in the field.

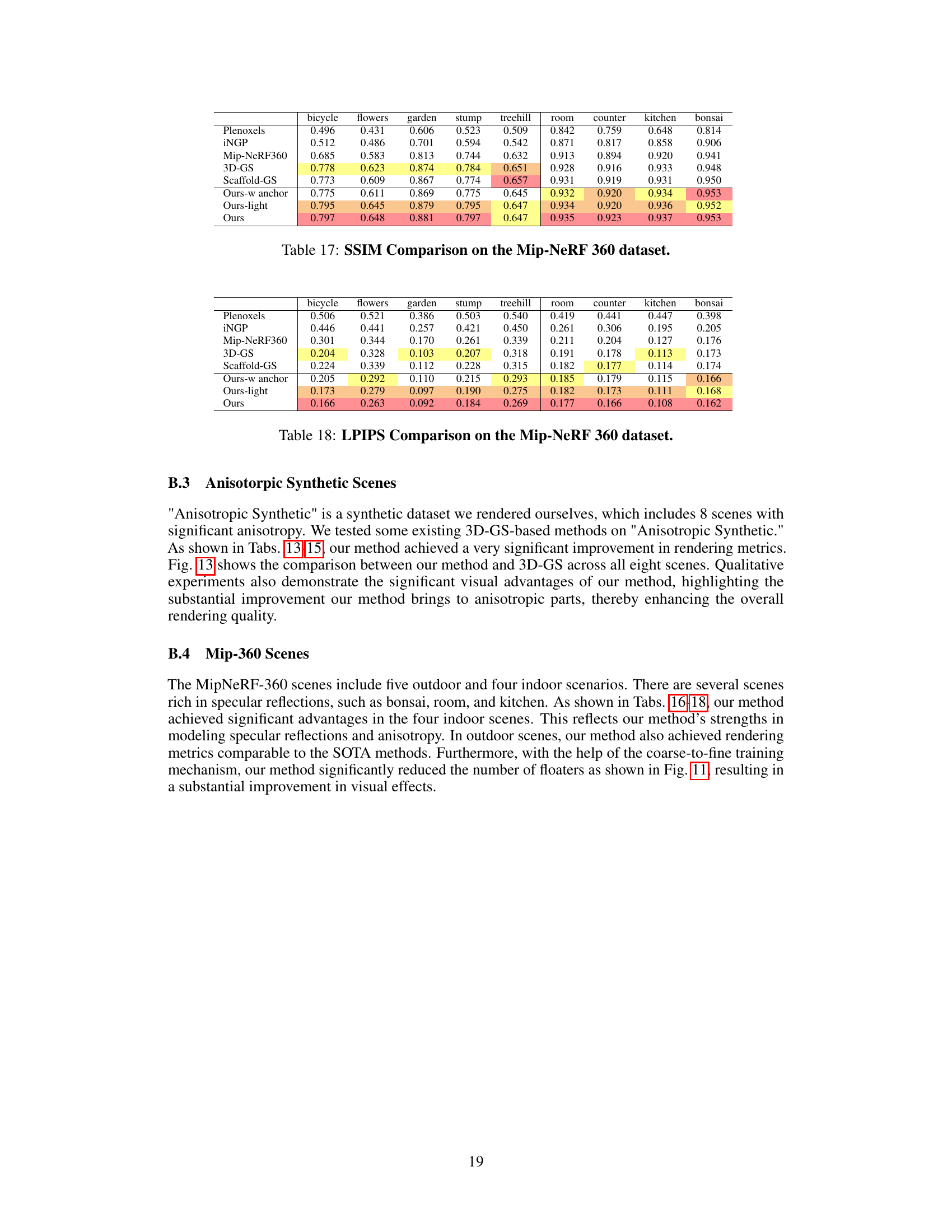

Visual Insights#

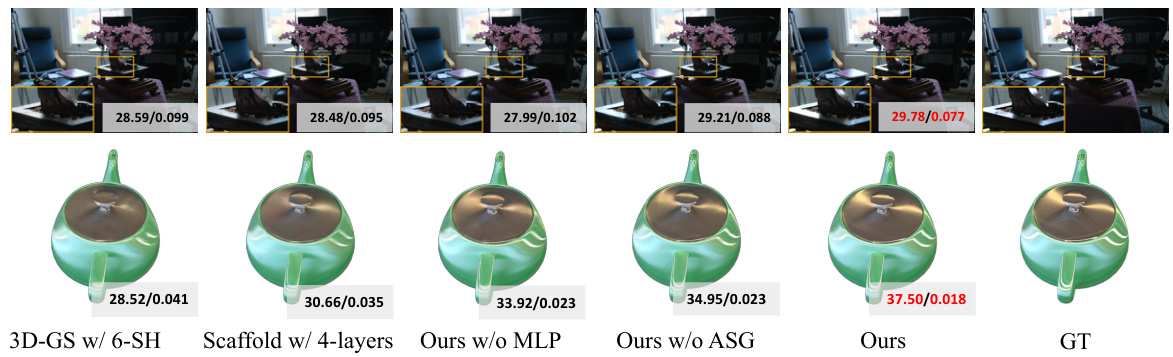

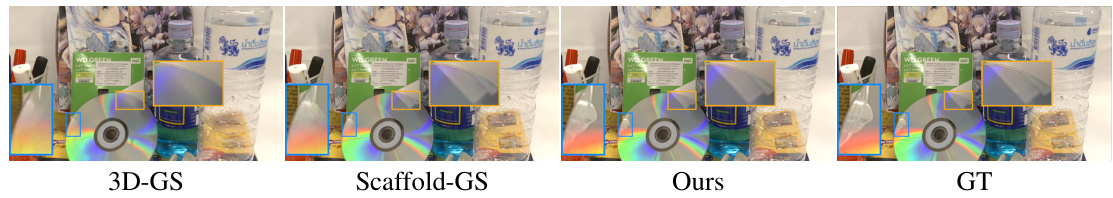

🔼 This figure showcases the improved rendering quality achieved by the proposed Spec-Gaussian method compared to existing methods (3D-GS and Scaffold) on scenes containing specular and anisotropic components. The top row shows a teapot rendering, highlighting the improved detail and realism. The bottom row presents a CD, demonstrating the method’s ability to model complex specular effects that were previously challenging for standard 3D Gaussian Splatting.

read the caption

Figure 1: Our method not only achieves real-time rendering but also significantly enhances the capability of 3D-GS to model scenes with specular and anisotropic components. Key to this enhanced performance is our use of ASG appearance field to model the appearance of each 3D Gaussian, which results in substantial improvements in rendering quality for both complex and general scenes.

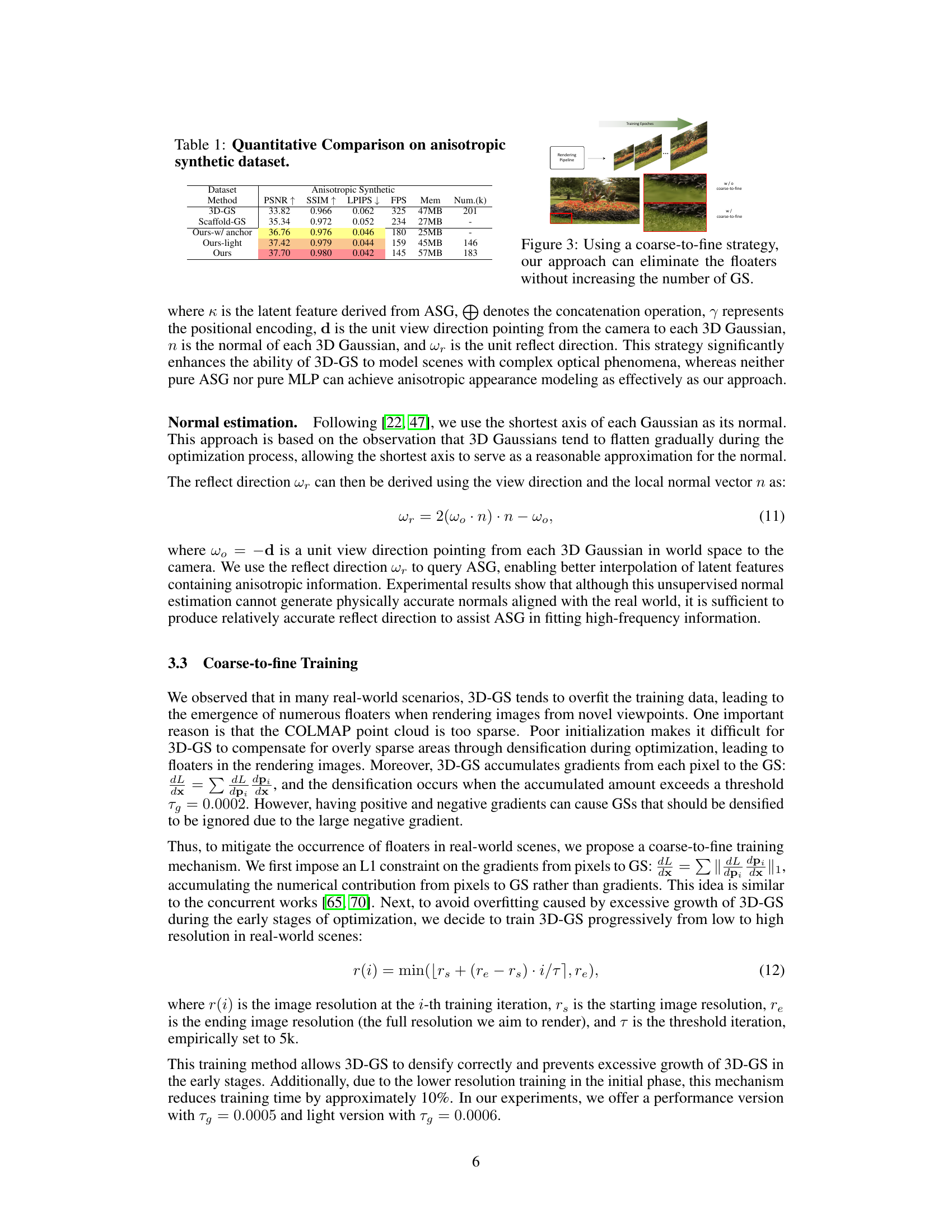

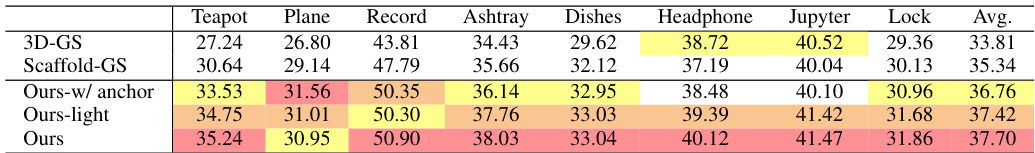

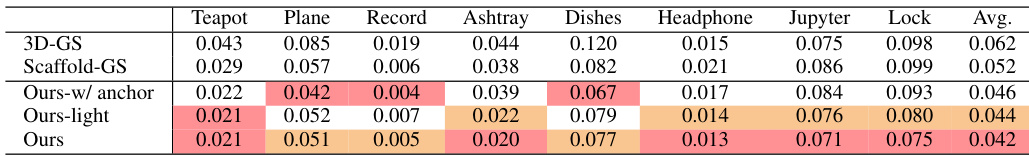

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The methods compared include 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with anchor, light, and full versions). The metrics used for comparison are PSNR, SSIM, LPIPS, FPS (frames per second), memory usage (Mem), and the number of Gaussians (Num.(k)). The results show that the Spec-Gaussian methods outperform the baseline methods in terms of rendering quality (higher PSNR and SSIM, lower LPIPS), while maintaining reasonable real-time performance and memory efficiency.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

In-depth insights#

ASG Appearance Field#

The proposed ASG appearance field is a novel approach to modeling view-dependent appearance in 3D Gaussian splatting. It replaces the traditional spherical harmonics (SH) representation with an anisotropic spherical Gaussian (ASG) function. This change is motivated by the limitations of SH in capturing high-frequency details, particularly specular and anisotropic effects. ASG’s ability to model high-frequency information using fewer parameters is seen as a key advantage, allowing for more accurate representation of complex surface appearances without increasing the computational burden. The ASG parameters are learned through a compact, learnable MLP which processes local 3D Gaussian features. This decoupling of ASG parameter generation allows for a more effective representation of both diffuse and specular components. Overall, the ASG appearance field is presented as a critical component in improving the visual quality and accuracy of specular and anisotropic components in 3D Gaussian splatting rendering.

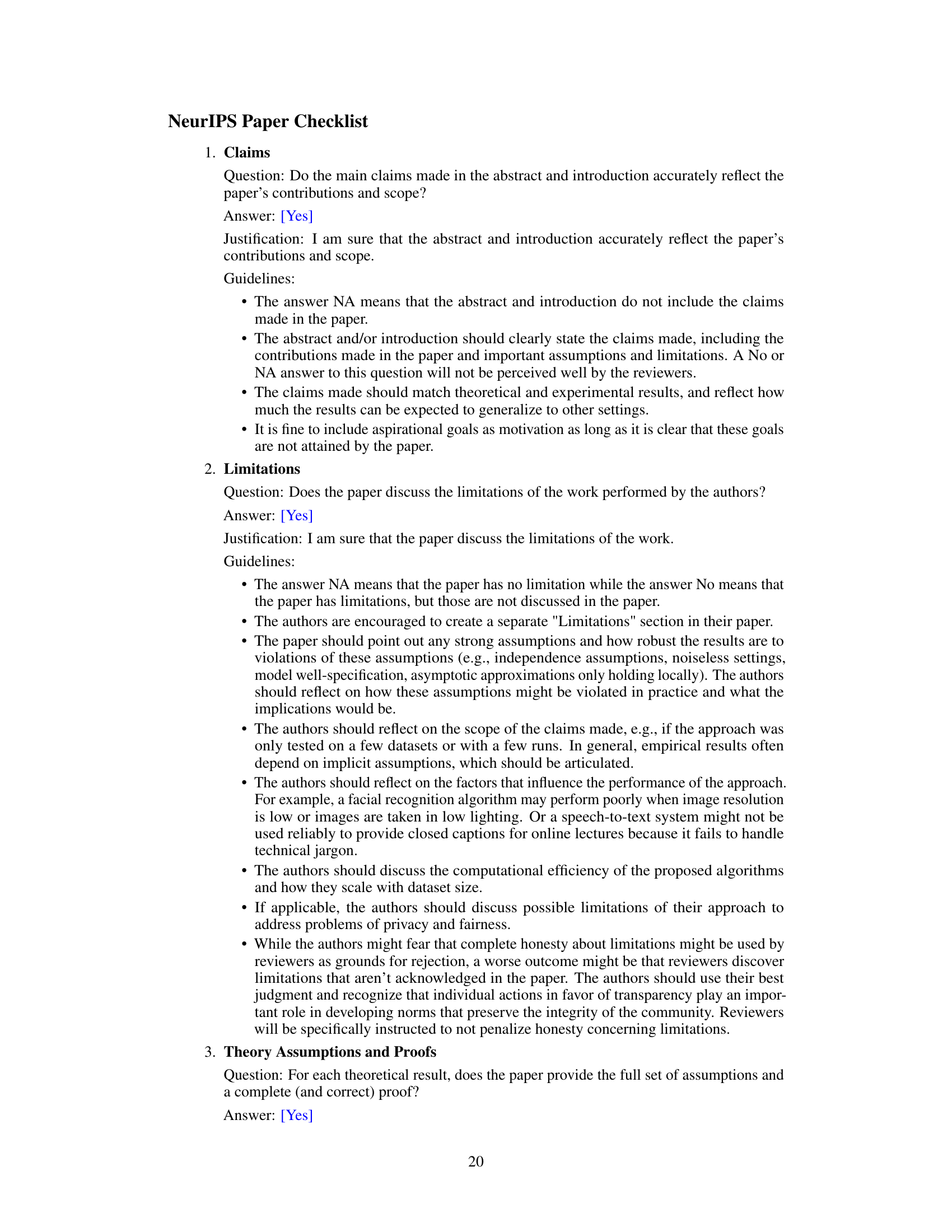

Coarse-to-fine Training#

The coarse-to-fine training strategy addresses the overfitting and floater issues common in 3D Gaussian splatting. Starting with low-resolution rendering, the model learns a simplified representation of the scene, preventing over-densification and the generation of spurious geometric structures (floaters). As the resolution gradually increases, the model refines its representation, incorporating more detail while maintaining stability. This approach significantly improves learning efficiency by focusing optimization resources on crucial scene structures first. The method effectively regularizes training, reducing the need for increased Gaussian density, which directly impacts rendering speed and memory consumption. This strategy achieves high-quality results with specular highlights and anisotropy, demonstrating a significant improvement over standard 3D Gaussian splatting techniques.

3D-GS Enhancements#

The heading ‘3D-GS Enhancements’ suggests improvements made to the 3D Gaussian Splatting (3D-GS) method. The core of 3D-GS involves representing scenes using numerous 3D Gaussians, each possessing attributes like position, opacity, and covariance. Enhancements likely focus on enhancing the visual realism and efficiency of 3D-GS. This might involve improvements to the appearance model (e.g., using anisotropic spherical Gaussians instead of spherical harmonics for more accurate specular and anisotropic reflections), optimization of the rendering pipeline (e.g., leveraging CUDA for faster processing), or developing more efficient training strategies (e.g., incorporating coarse-to-fine training to mitigate overfitting and reduce artifacts). Ultimately, the goal of these enhancements is to push the boundaries of real-time rendering, producing higher quality visuals at faster speeds for more complex scenes. Addressing the shortcomings of traditional methods (like meshes or point clouds that struggle with representing continuous surfaces accurately) may be another motivation behind these enhancements. Improving the ability to model complex lighting and material properties is also likely a key focus, ensuring that the rendered images closely resemble reality.

Specular Modeling#

Specular modeling in computer graphics aims to realistically simulate the glossy reflections observed on shiny surfaces. Accurate specular highlights are crucial for photorealism, conveying material properties and enhancing scene realism. Traditional methods often rely on simplified models like Phong or Blinn-Phong, which, while efficient, lack the fidelity to capture complex, anisotropic reflections. Advanced techniques use microfacet-based models, considering the distribution and orientation of microscopic surface irregularities. These models, though more accurate, are computationally expensive. Recent research explores data-driven approaches, leveraging neural networks to learn complex reflectance functions from real-world data. This offers improved realism but introduces challenges in terms of training data requirements and computational cost. The choice of specular model depends on the desired balance between realism and efficiency, with simpler models being suitable for real-time applications and more complex methods preferred when rendering quality takes precedence.

Future Research#

Future research directions stemming from this work could explore several promising avenues. Extending Spec-Gaussian to handle dynamic scenes is crucial for broader applicability. The current method focuses on static scenes, and adapting it to handle time-varying elements and motion blur would significantly enhance its capabilities. Another key area is improving the handling of reflections. While Spec-Gaussian excels at specular and anisotropic effects, reflections present a unique challenge requiring a more sophisticated approach potentially incorporating explicit geometry information or techniques like those used in NeRF. Investigating a more efficient training strategy is important. Although a coarse-to-fine approach is presented, further optimizations may reduce training times and resource usage. Exploring alternative representations for the appearance field beyond ASG could lead to performance improvements or the ability to handle different material properties. Finally, a thorough analysis of the limitations of the current ASG and training methods is needed to further refine the model and guide future development, especially concerning generalization and robustness in diverse scenarios.

More visual insights#

More on figures

🔼 This figure illustrates the pipeline of the Spec-Gaussian method. It starts with Structure from Motion (SfM) points, either obtained from COLMAP or generated randomly, which initialize the 3D Gaussians. To improve the modeling of high-frequency details in appearance, anisotropic spherical Gaussians (ASGs) are used with a feature decoupling Multi-Layer Perceptron (MLP) to model the view-dependent appearance. Finally, Gaussians with opacity greater than zero are rendered using a differentiable rasterization pipeline, effectively capturing specular and anisotropic effects.

read the caption

Figure 2: Pipeline of Spec-Gaussian. The optimization process begins with SfM points derived from COLMAP or generated randomly, serving as the initial state for the 3D Gaussians. To address the limitations of low-order SH and pure MLP in modeling high-frequency information, we additionally employ ASG in conjunction with a feature decoupling MLP to model the view-dependent appearance of each 3D Gaussian. Then, 3D Gaussians with opacity σ > 0 are rendered through a differentiable Gaussian rasterization pipeline, effectively capturing specular highlights and anisotropy in the scene.

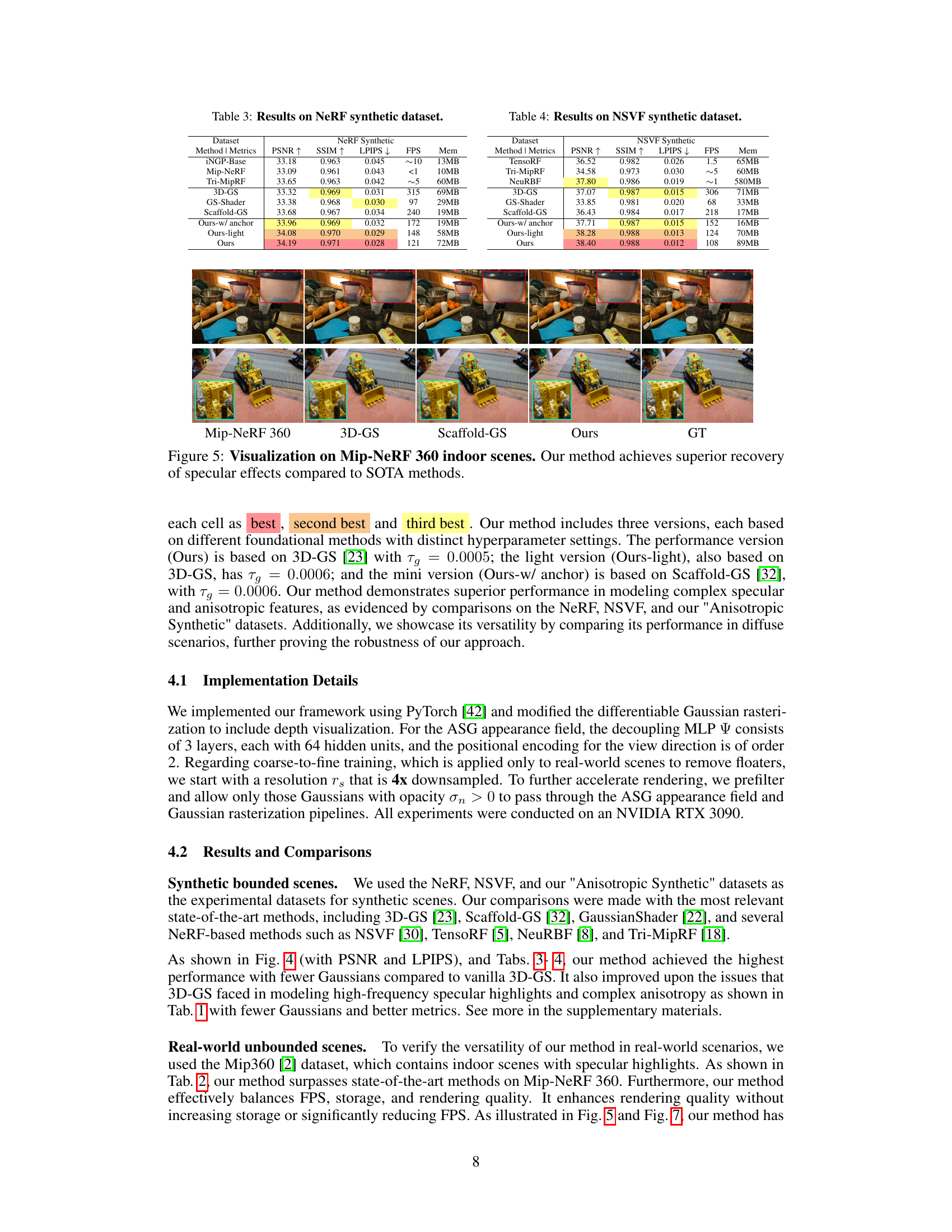

🔼 This figure demonstrates the effectiveness of the coarse-to-fine training strategy in eliminating floaters (erroneous, unnatural artifacts) in 3D Gaussian Splatting. The left side shows the rendering pipeline feeding into the training process, where the resolution of the rendered images increases with each training epoch. The top right shows a sequence of increasingly higher-resolution renderings during training. The bottom right shows a comparison of rendering results with and without the coarse-to-fine strategy. The image with the coarse-to-fine strategy exhibits a significantly cleaner rendering with far fewer floaters, demonstrating the success of this approach in improving the quality and efficiency of the training process.

read the caption

Figure 3: Using a coarse-to-fine strategy, our approach can eliminate the floaters without increasing the number of GS.

🔼 This figure showcases the results of the Spec-Gaussian method on the NeRF dataset. It highlights the superior performance of the proposed method in modeling specular highlights compared to existing 3D Gaussian splatting approaches. The results demonstrate that Spec-Gaussian accurately renders specular highlights while maintaining real-time rendering speed.

read the caption

Figure 4: Visualization on NeRF dataset. Our method has achieved specular highlights modeling, which other 3D-GS-based methods fail to accomplish, while maintaining fast rendering speed.

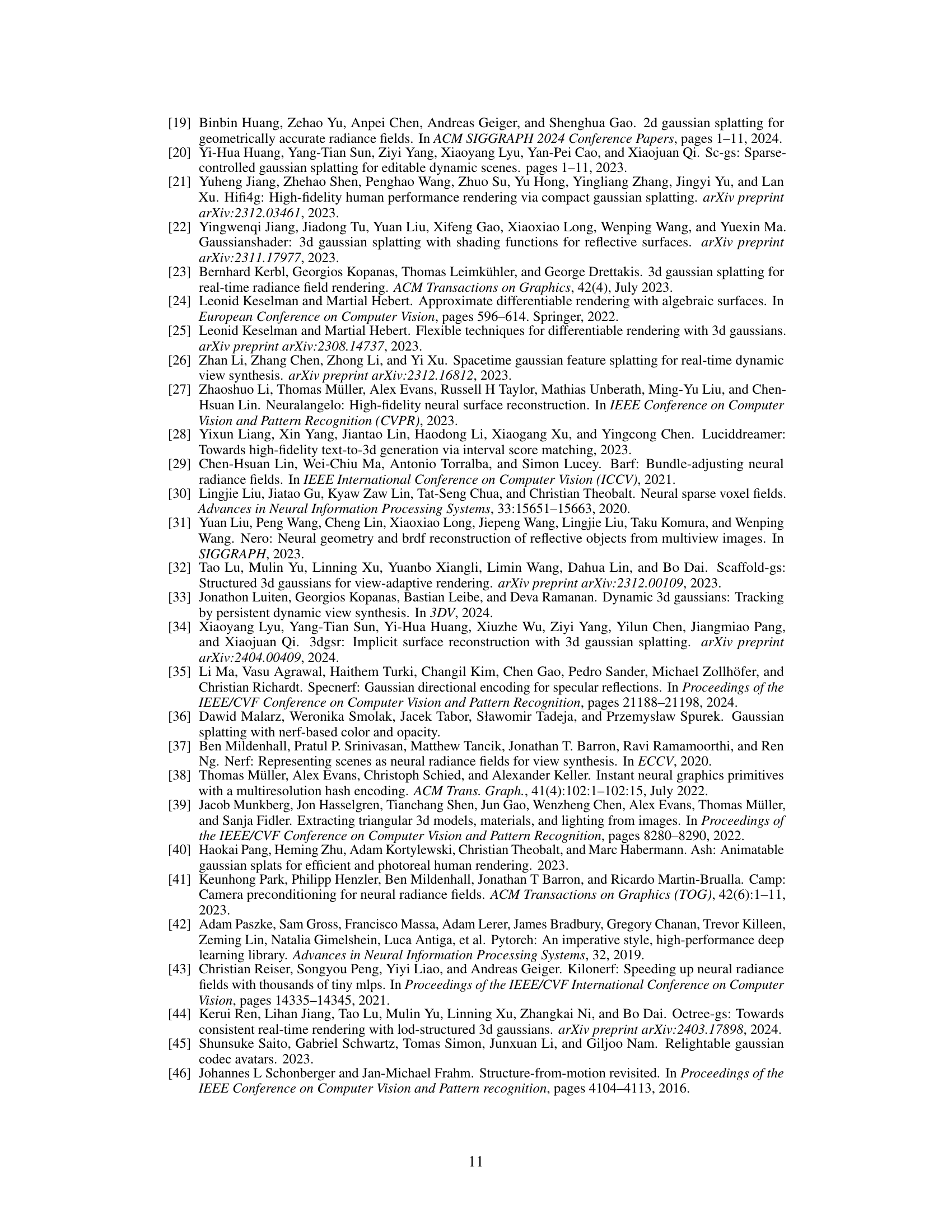

🔼 This figure compares the results of different methods on the Mip-NeRF 360 indoor scenes dataset. It focuses on the ability of the methods to accurately capture specular highlights. The comparison shows that the proposed method, ‘Ours’, significantly outperforms other state-of-the-art (SOTA) methods in terms of visual quality and detail preservation, especially in specular regions.

read the caption

Figure 5: Visualization on Mip-NeRF 360 indoor scenes. Our method achieves superior recovery of specular effects compared to SOTA methods.

🔼 This figure demonstrates an ablation study on the ASG (Anisotropic Spherical Gaussian) appearance field used in Spec-Gaussian. It shows that directly using ASG for color modeling fails to accurately capture anisotropy and specular highlights. However, by decoupling the ASG features through an MLP (Multi-Layer Perceptron), the model successfully models complex optical phenomena, significantly improving the rendering quality.

read the caption

Figure 6: Ablation on ASG appearance field. We show that directly using ASG to model color leads to the failure in modeling anisotropy and specular highlights. By decoupling the ASG features through MLP, we can realistically model complex optical phenomena.

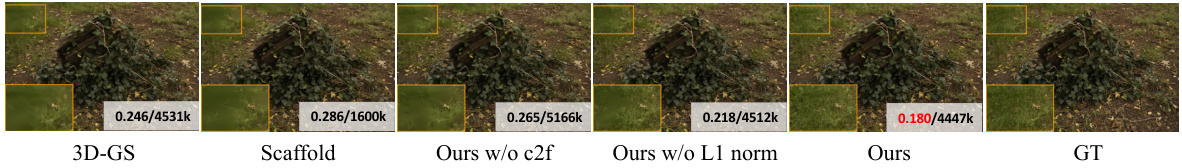

🔼 This figure shows an ablation study on the coarse-to-fine training strategy used in the Spec-Gaussian method. It compares the results of 3D-GS, Scaffold, Spec-Gaussian without coarse-to-fine training, Spec-Gaussian without L1 norm constraint, the full Spec-Gaussian method, and the ground truth. The images demonstrate that the coarse-to-fine training strategy effectively reduces the number of floaters in the rendered scene, improving the overall quality of the reconstruction.

read the caption

Figure 7: Ablation on coarse-to-fine training. Experimental results demonstrate that our simple yet effective training mechanism can effectively remove floaters without increasing the number of 3D Gaussians, thereby alleviating the overfitting problem prevalent in 3D-GS-based methods.

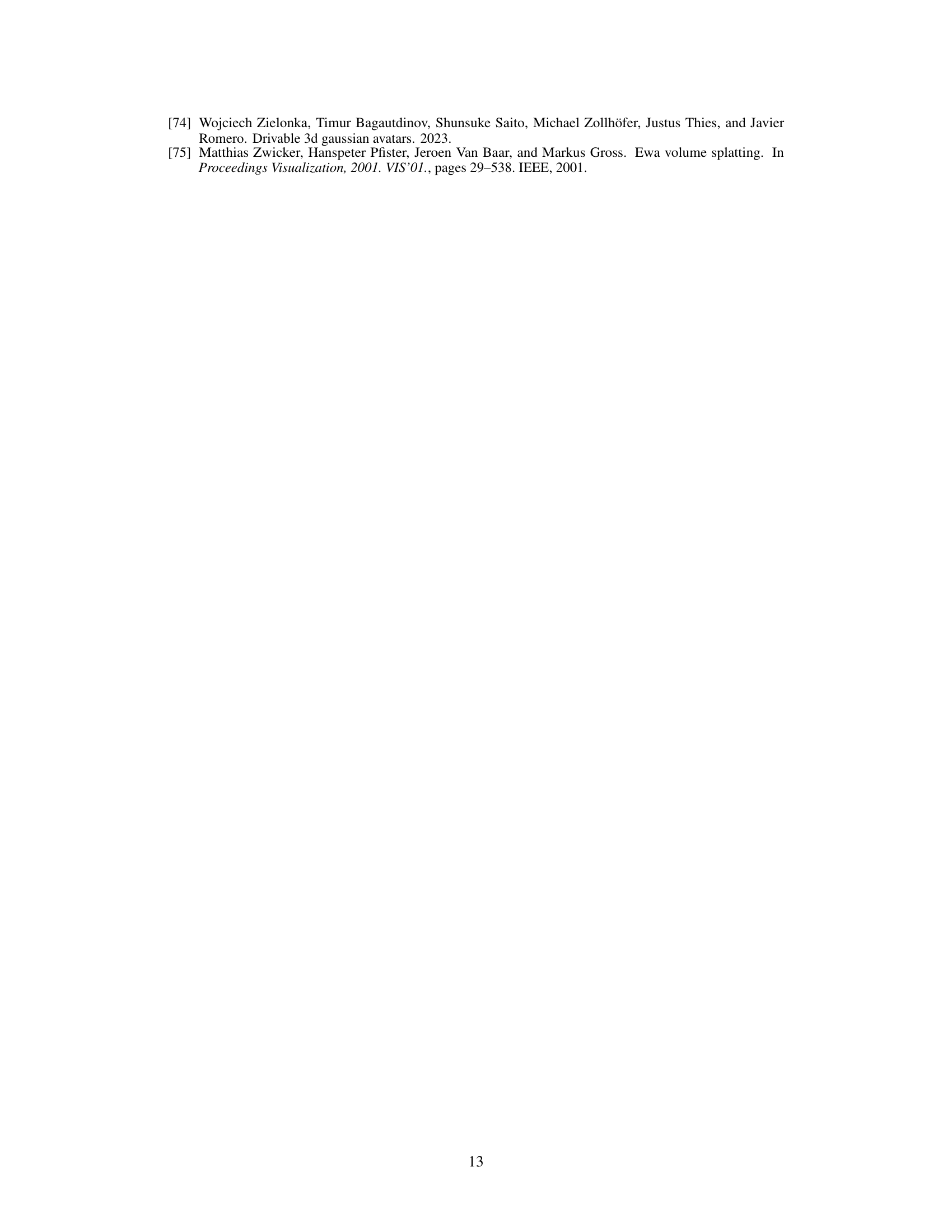

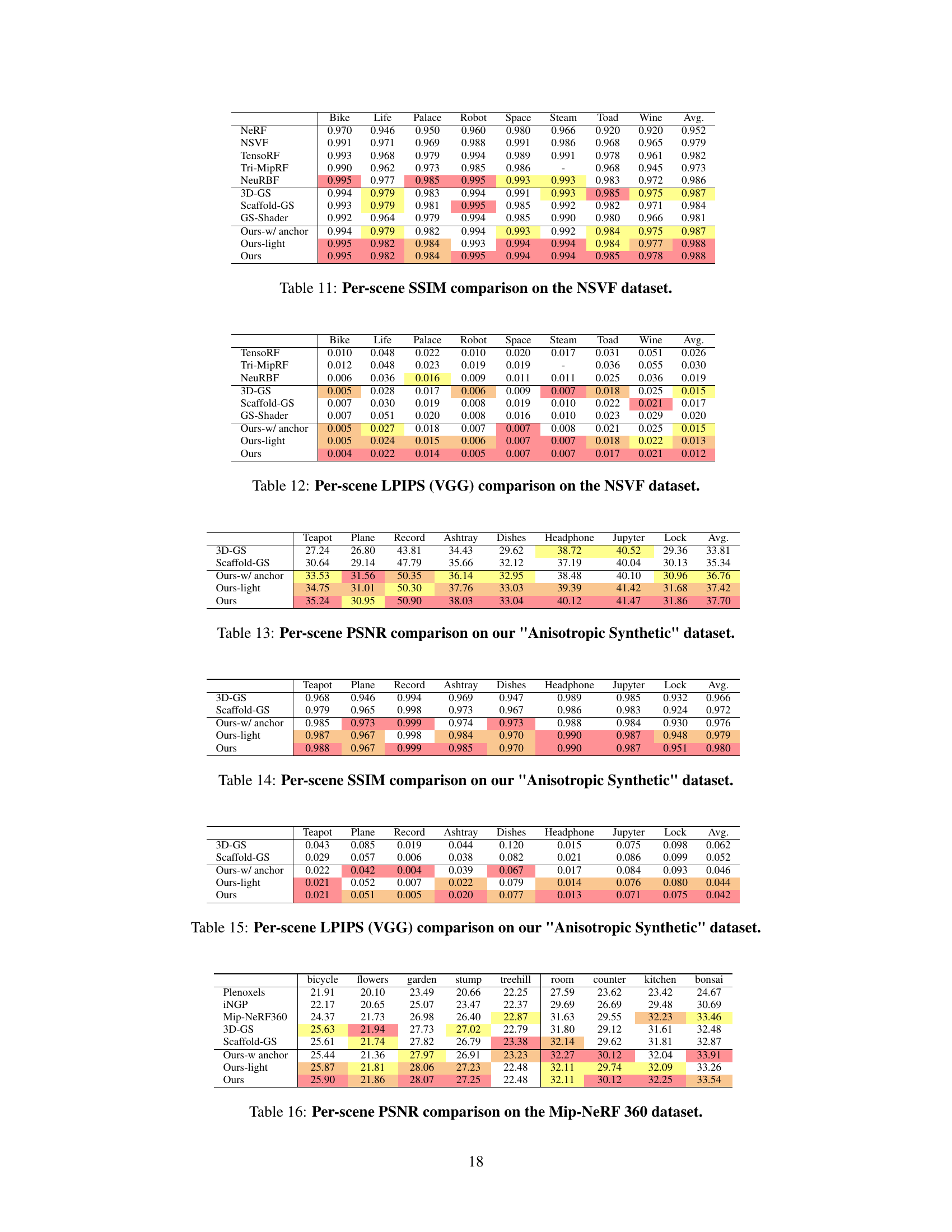

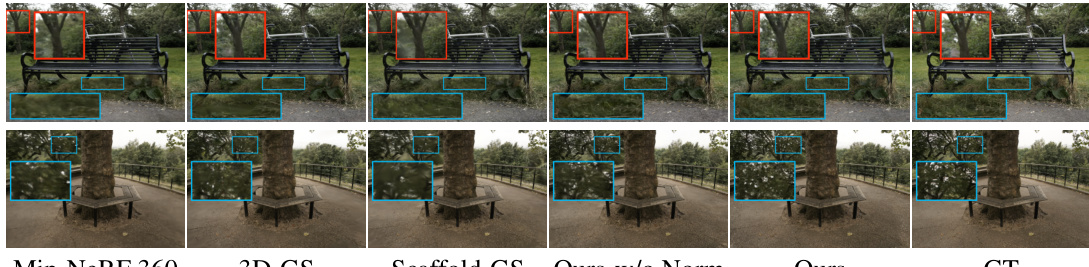

🔼 This figure compares the performance of different methods (3D-GS, Scaffold-GS, Ours-w/o Norm, Ours) on the Mip-NeRF 360 dataset, focusing on the removal of floaters. The images show that the proposed ‘Ours’ method effectively eliminates floaters in the rendered scene compared to the other baselines, especially in challenging areas such as foliage. This improved robustness is directly attributed to the proposed coarse-to-fine training strategy.

read the caption

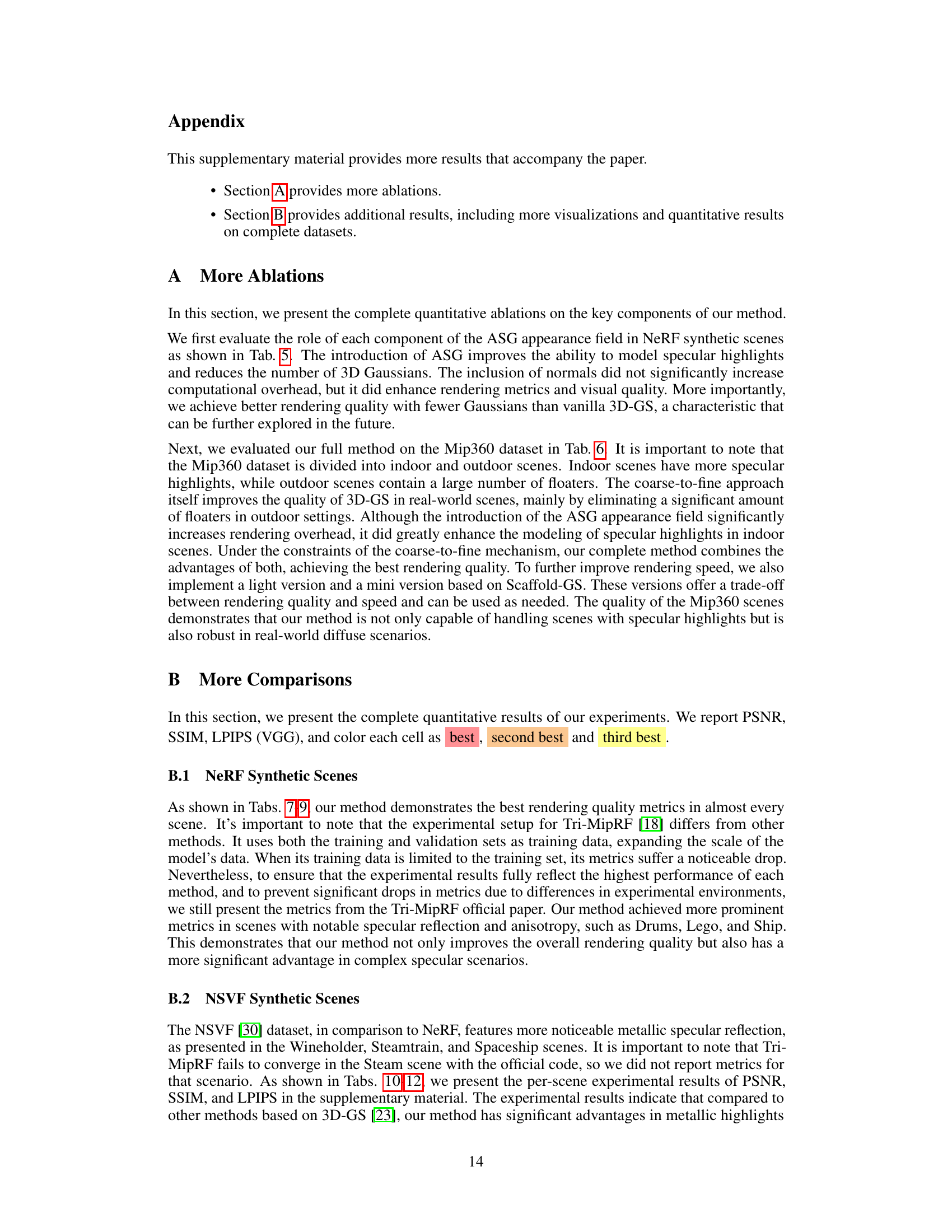

Figure 9: More comparisons with baselines. Our method achieves robust floater removal by coarse-to-fine training.

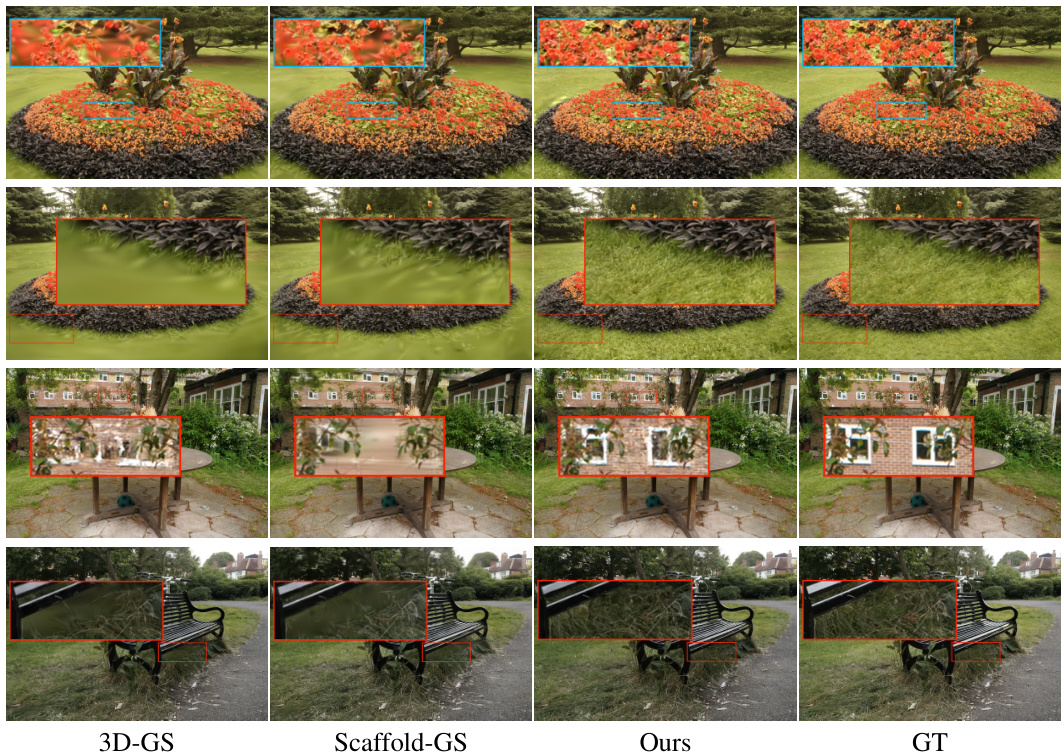

🔼 This figure shows a comparison of 3D-GS, Scaffold-GS, and the proposed Spec-Gaussian method on four real-world scenes. Each row displays the results for a different scene, showing the rendered image from each method alongside the ground truth. The red boxes highlight areas where floaters (erroneous structures) are present in the baseline methods but successfully removed by Spec-Gaussian thanks to its coarse-to-fine training strategy. This demonstrates the effectiveness of the proposed method in improving visual quality and reducing artifacts in challenging real-world scenes.

read the caption

Figure 9: More comparisons with baselines. Our method achieves robust floater removal by coarse-to-fine training.

🔼 The figure illustrates the difference in rendering specular highlights and reflections between different methods. The left side shows a close-up of a cymbal with specular highlights, and a drum with reflections. The right shows how GS-Shader, the proposed ‘Ours’ method, and the ground truth (GT) render these effects. The comparison highlights the improved ability of the ‘Ours’ method to accurately capture both specular highlights and reflections compared to the GS-Shader.

read the caption

Figure 10: Illustration of specular highlights and reflections.

🔼 This figure shows a comparison of rendering results for a scene containing specular and anisotropic objects. The figure compares the results obtained using 3D-GS, Scaffold-GS, the proposed Spec-Gaussian method, and the ground truth. Spec-Gaussian demonstrates a significant improvement in rendering quality, particularly in capturing specular highlights and anisotropic reflections, that neither 3D-GS nor Scaffold-GS could achieve.

read the caption

Figure 1: Our method not only achieves real-time rendering but also significantly enhances the capability of 3D-GS to model scenes with specular and anisotropic components. Key to this enhanced performance is our use of ASG appearance field to model the appearance of each 3D Gaussian, which results in substantial improvements in rendering quality for both complex and general scenes.

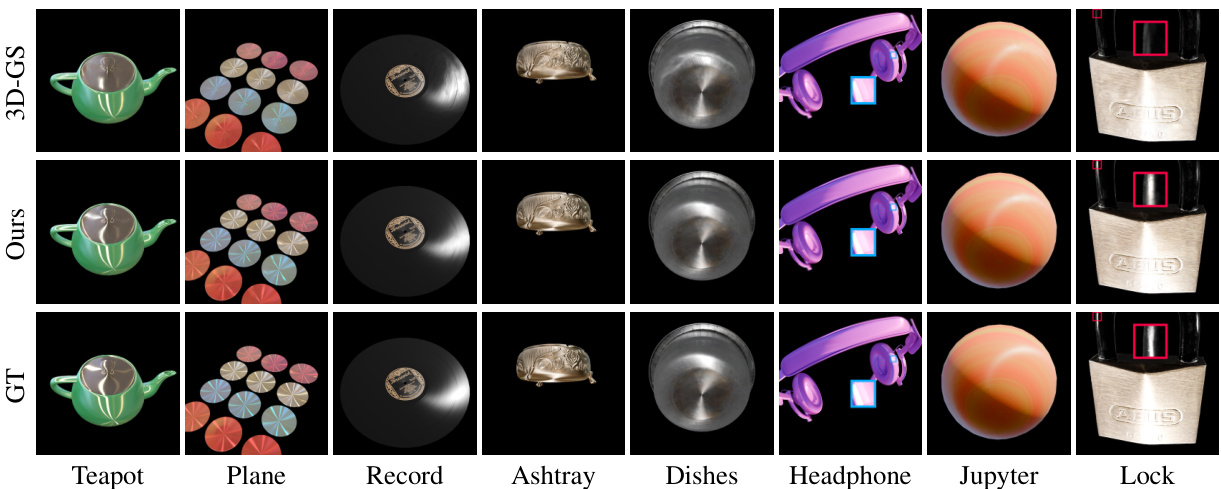

🔼 This figure shows a qualitative comparison of rendering results between the proposed Spec-Gaussian method and the baseline 3D-Gaussian splatting (3D-GS) method on eight different scenes from the ‘Anisotropic Synthetic’ dataset. The comparison highlights the improved ability of Spec-Gaussian to accurately model anisotropic surface properties, resulting in more realistic and detailed renderings, particularly in scenes with specular and reflective elements. Each row displays a scene rendered using: 3D-GS, the proposed Spec-Gaussian method, and the ground truth. The differences are most notable in rendering the specular highlights and reflections accurately.

read the caption

Figure 13: Visualization on our 'Anisotropic Synthetic' dataset. We show the comparison between our method and 3D-GS across all eight scenes. Qualitative experimental results demonstrate the significant advantage of our method in modeling anisotropic scenes, thereby enhancing the rendering quality of 3D-GS.

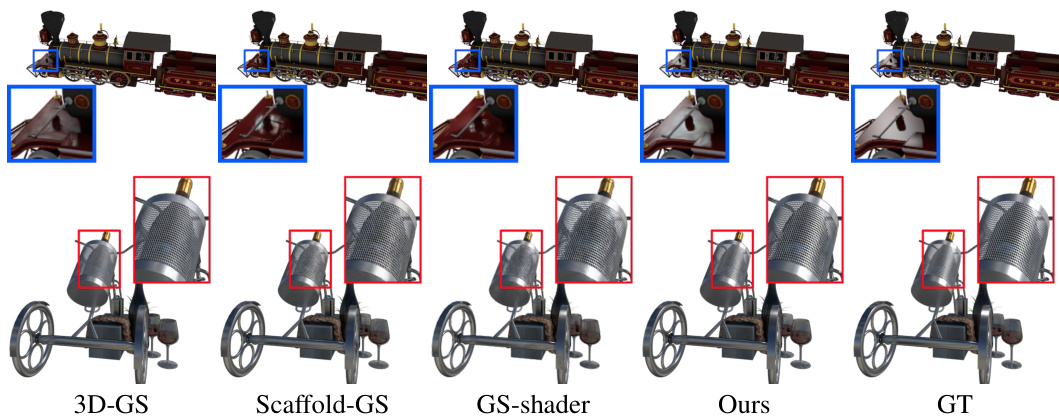

🔼 This figure compares the performance of the proposed Spec-Gaussian method with other existing methods (3D-GS, Scaffold-GS, and GS-Shader) on the NSVF dataset. It showcases the improved ability of Spec-Gaussian to render metallic surfaces and reflective materials, highlighting its superior performance in handling complex materials.

read the caption

Figure 14: Visualization on NSVF dataset. Our method significantly improves the ability to model metallic materials compared to other GS-based methods. At the same time, our method also demonstrates the capability to model refractive parts, reflecting the powerful fitting ability of our method.

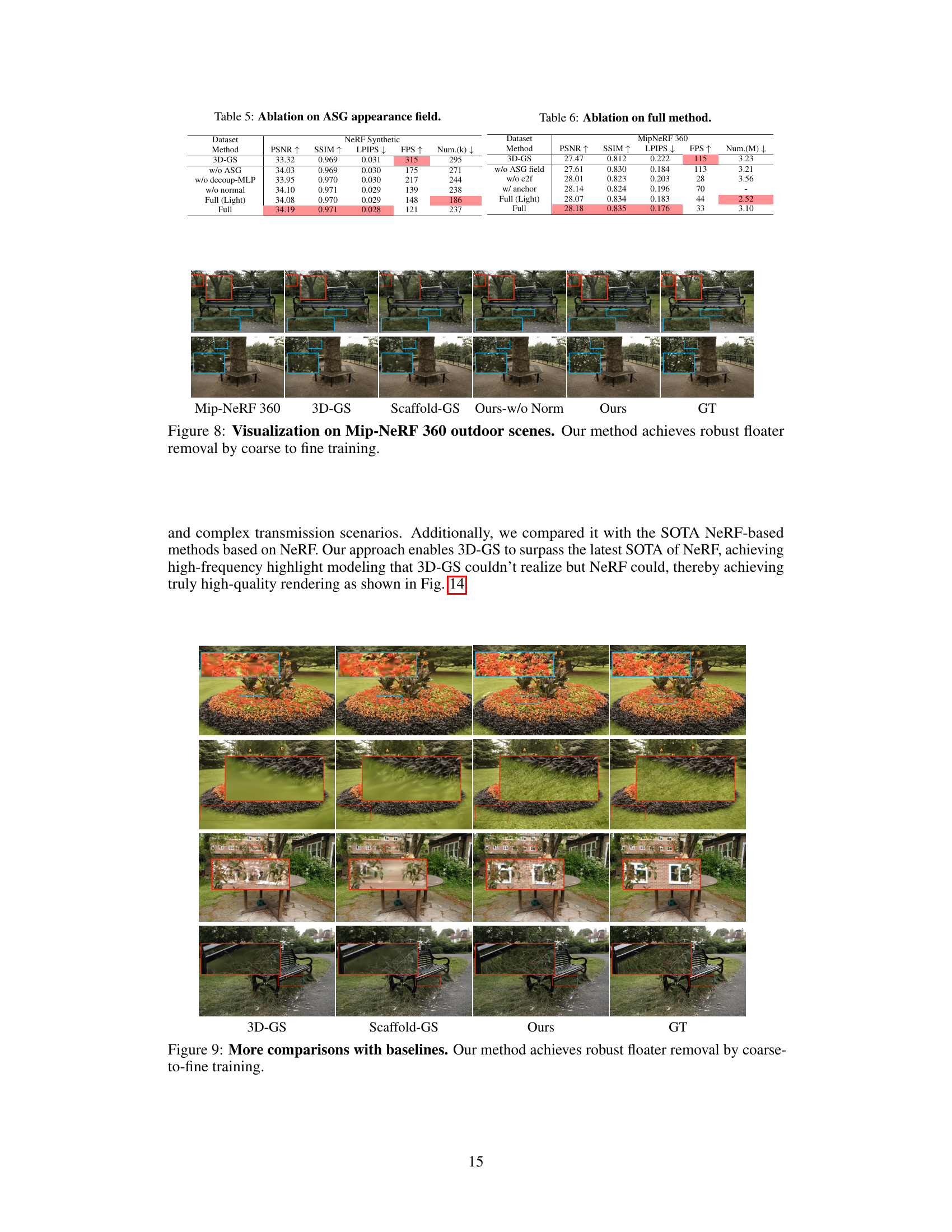

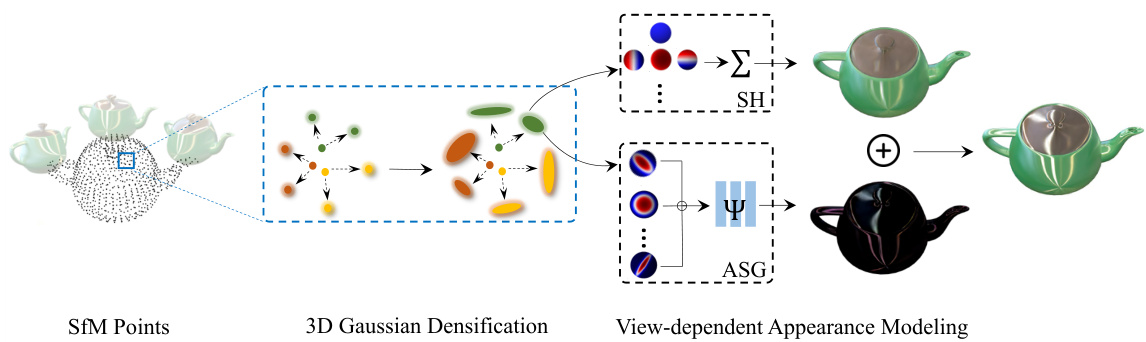

More on tables

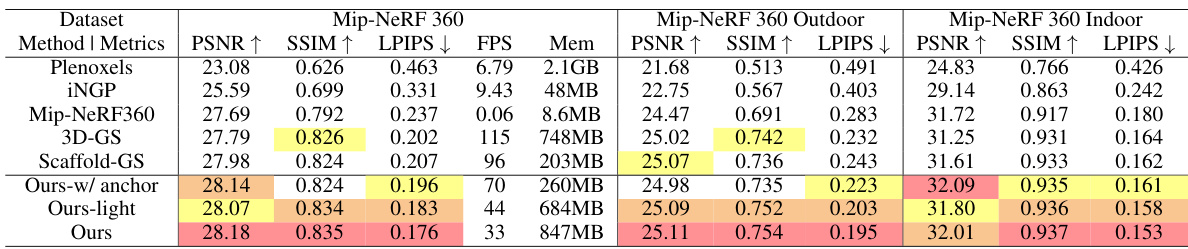

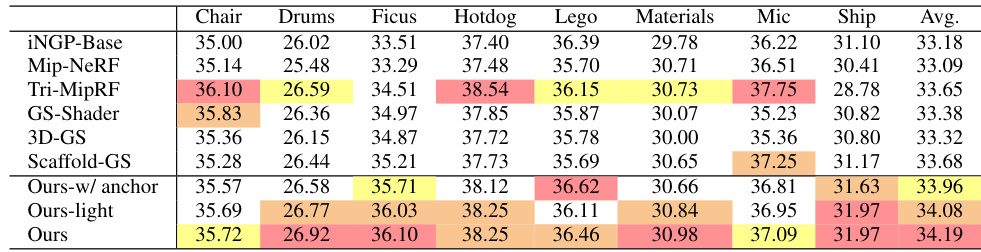

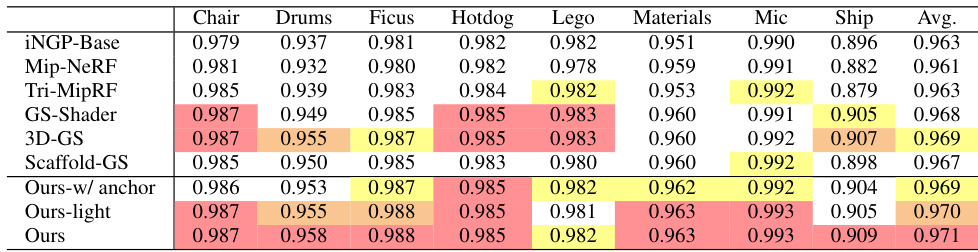

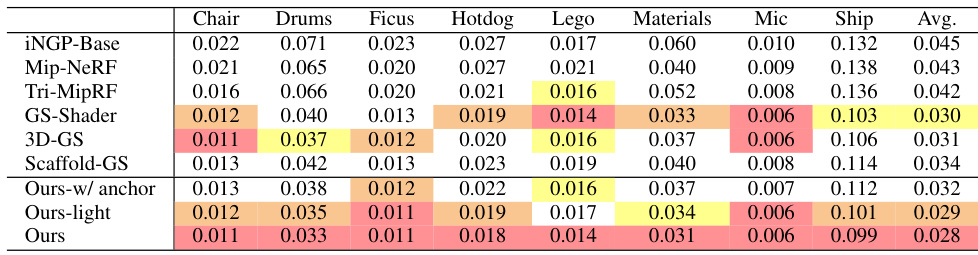

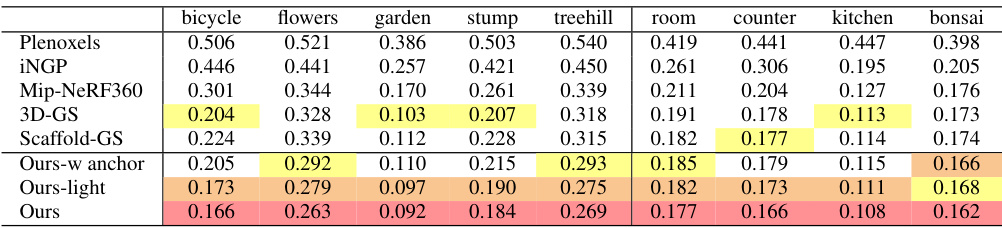

🔼 This table presents a quantitative comparison of different methods on real-world datasets. The metrics used are PSNR, SSIM, and LPIPS (VGG). Each metric’s best, second-best, and third-best results are highlighted by color-coding. The results show that the proposed method achieves the best rendering quality while maintaining a good balance between frames per second (FPS) and memory usage.

read the caption

Table 2: Quantitative comparison of on real-world datasets. We report PSNR, SSIM, LPIPS (VGG) and color each cell as best, second best and third best. Our method has achieved the best rendering quality, while striking a good balance between FPS and the storage memory.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The methods compared include 3D-GS, Scaffold-GS, and three variants of the proposed Spec-Gaussian method (Ours-w/anchor, Ours-light, and Ours). The metrics used for comparison are PSNR, SSIM, LPIPS, FPS (frames per second), memory usage (Mem), and the number of Gaussians (Num.(k)). Higher PSNR and SSIM values indicate better image quality, while lower LPIPS values indicate better perceptual similarity to the ground truth. Higher FPS values indicate faster rendering speed, and lower memory usage and Gaussian count values indicate greater efficiency. The table highlights the superior performance of Spec-Gaussian in terms of both image quality and efficiency.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

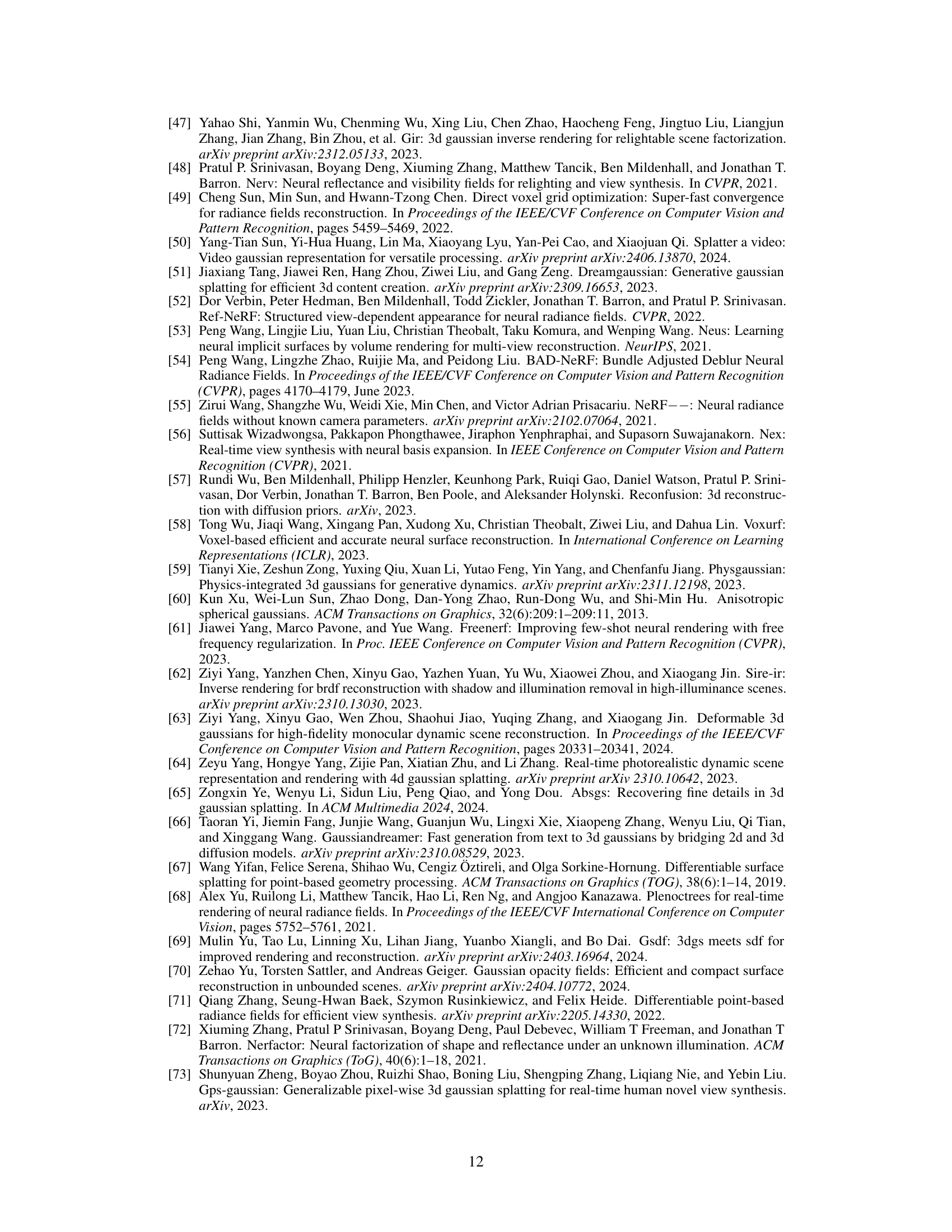

🔼 This table presents a quantitative comparison of different methods on the NeRF synthetic dataset. The metrics used are PSNR, SSIM, LPIPS, FPS (frames per second), and memory usage. The methods compared include iNGP-Base, Mip-NeRF, Tri-MipRF, NeuRBF, 3D-GS, GS-Shader, Scaffold-GS, and three variations of the proposed Spec-Gaussian method (Ours-w/anchor, Ours-light, and Ours). The table shows that Spec-Gaussian outperforms existing methods in terms of PSNR, SSIM, and LPIPS, while maintaining competitive FPS and memory usage.

read the caption

Table 3: Results on NeRF synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The metrics used for comparison include PSNR, SSIM, LPIPS, FPS (frames per second), memory usage, and the number of Gaussians used. The methods compared include 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with and without anchor-based Gaussians, and a light version). The table shows that the Spec-Gaussian methods achieve higher PSNR and SSIM scores while using fewer Gaussians and maintaining real-time performance.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The metrics used are PSNR, SSIM, LPIPS, FPS, and memory usage. The methods compared include 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with and without anchors, and a light version). The table shows that the Spec-Gaussian methods achieve higher PSNR, SSIM, and lower LPIPS values (better image quality), while maintaining relatively high FPS and comparable memory usage, especially compared to 3D-GS.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The metrics used for comparison include PSNR, SSIM, LPIPS, FPS, memory usage, and the number of Gaussians used. The methods compared are 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with anchor, light version, and full version). The results show that Spec-Gaussian significantly outperforms existing methods in terms of rendering quality (PSNR, SSIM, LPIPS) while maintaining real-time performance (FPS).

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. Metrics include PSNR, SSIM, LPIPS, FPS, memory usage, and the number of Gaussians used. It compares the performance of 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with anchor-based splatting, a light version, and a full version). The table highlights the improved performance of the proposed methods in terms of rendering quality and efficiency.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 The table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. Metrics include PSNR, SSIM, LPIPS, FPS (frames per second), memory usage, and the number of 3D Gaussians used. It compares the performance of the proposed Spec-Gaussian method against several baselines (3D-GS, Scaffold-GS, etc.) to demonstrate its improved ability to model anisotropic scenes.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 The table quantitatively compares the performance of different methods on an anisotropic synthetic dataset. Metrics include PSNR, SSIM, LPIPS, FPS (frames per second), memory usage, and the number of Gaussians (k) used. The methods compared are 3D-GS, Scaffold-GS, and three variations of the proposed Spec-Gaussian method (with and without anchors, a light version). The results show the improvement of Spec-Gaussian in terms of rendering quality (PSNR, SSIM, LPIPS) while maintaining or even improving efficiency (FPS, memory usage) compared to existing methods.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The methods compared include 3D-GS, Scaffold-GS, and three versions of the proposed Spec-Gaussian method (with anchor, light, and full). The metrics used for comparison are PSNR, SSIM, LPIPS, FPS, memory usage, and the number of Gaussians used. The results show that the Spec-Gaussian method outperforms the other methods in terms of rendering quality (PSNR, SSIM, LPIPS), while maintaining a reasonable frame rate and memory usage.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The methods compared include 3D-GS, Scaffold-GS, and three variants of the proposed Spec-Gaussian method (with anchor, light, and full versions). The metrics used for comparison are PSNR, SSIM, LPIPS, FPS, memory usage, and the number of Gaussians used. The results show that the Spec-Gaussian method outperforms existing methods in terms of rendering quality (PSNR, SSIM, LPIPS), while maintaining comparable or better performance in terms of speed (FPS) and memory usage.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 The table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. Metrics include PSNR, SSIM, LPIPS, FPS (frames per second), memory usage, and the number of Gaussians used. It compares the proposed method (Ours) with several state-of-the-art methods, including 3D-GS and Scaffold-GS, demonstrating the improvements in rendering quality and efficiency achieved by the proposed approach.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 The table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. Metrics include PSNR, SSIM, LPIPS, FPS, memory usage, and the number of Gaussians used. It shows how the proposed method (Ours) compares to existing state-of-the-art approaches such as 3D-GS and Scaffold-GS, demonstrating improvements in rendering quality while maintaining relatively high frame rates.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

🔼 This table presents a quantitative comparison of different methods on an anisotropic synthetic dataset. The metrics used are PSNR, SSIM, LPIPS, FPS (frames per second), memory usage, and the number of Gaussians used. It shows the performance of the proposed Spec-Gaussian method compared to existing state-of-the-art methods, demonstrating improvements in rendering quality and efficiency.

read the caption

Table 1: Quantitative Comparison on anisotropic synthetic dataset.

Full paper#