↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR

Deep neural networks are susceptible to adversarial attacks, which involve adding imperceptible noise to inputs to mislead the model. Universal Adversarial Perturbations (UAPs) are particularly concerning since they can fool a model across various inputs. However, existing UAP generation methods struggle with Few-Shot Learning (FSL) scenarios due to issues such as task shift (differences between training and testing tasks) and semantic shift (differences in the underlying data distributions).

This paper introduces a framework called FSAFW (Few-Shot Attacking FrameWork) to overcome these challenges. FSAFW addresses task shift by aligning proxy tasks with the downstream tasks, and handles semantic shift by leveraging the generalizability of pre-trained encoders. The method is shown to be effective across different FSL training paradigms, significantly improving the attack success rate. The contribution is a single framework that handles both shifts when generating UAPs in FSL.

Key Takeaways

Why does it matter?

This paper matters because it exposes a vulnerability in the widely adopted Few-Shot Learning (FSL) paradigm. By demonstrating that traditional attack methods lose effectiveness in FSL and by proposing a framework that remains effective there, it advances our understanding of adversarial robustness in FSL and can inform more secure and reliable FSL systems. The findings highlight the need for more robust adversarial defense mechanisms.

Visual Insights

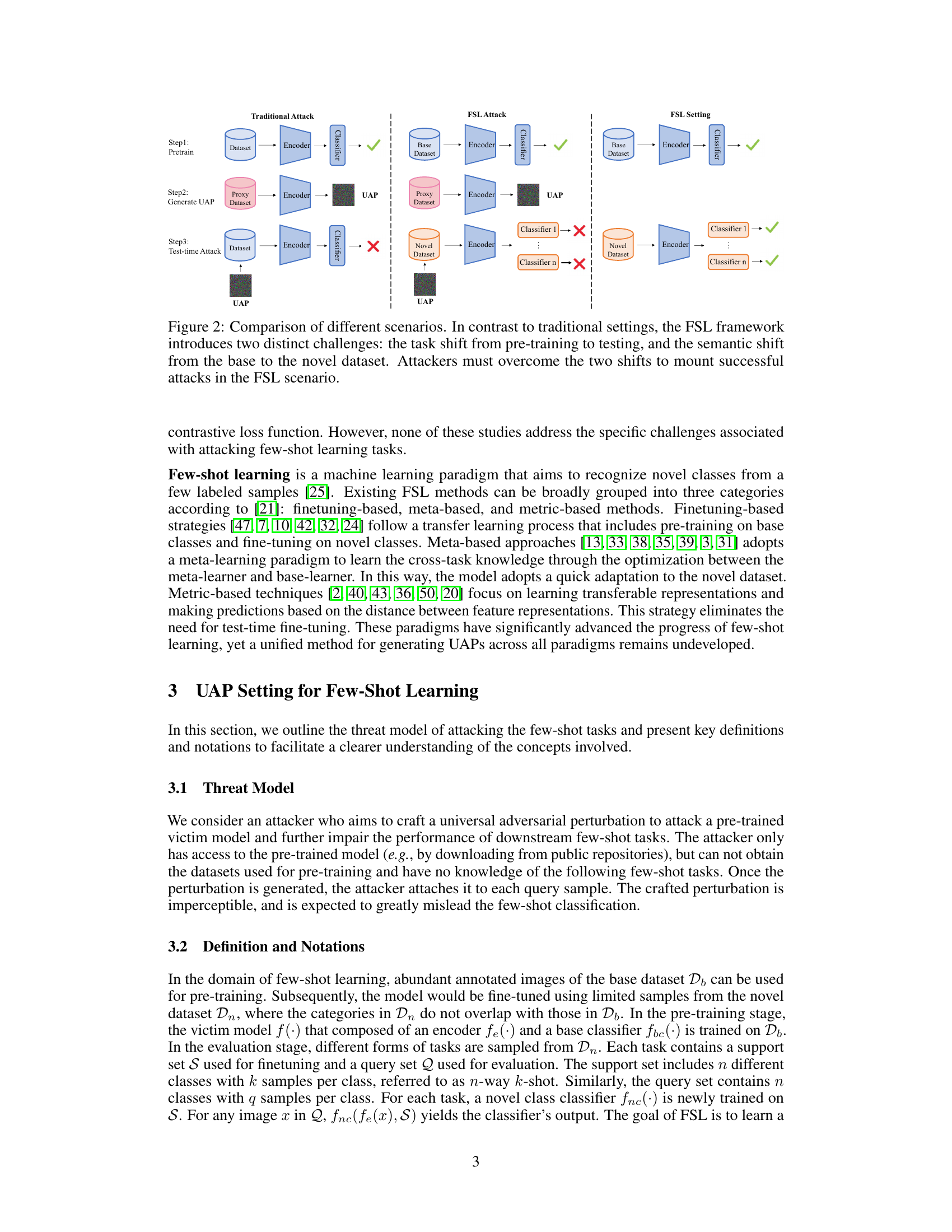

This figure shows the decrease in attack success rate (ASR) when using traditional universal adversarial perturbations (UAPs) in few-shot learning (FSL) scenarios compared to traditional settings. The left panel shows that the ASR is significantly lower in FSL scenarios across different training paradigms (finetuning, metric, and meta). The right panel illustrates the process, highlighting the challenges of task shift (different tasks during training and testing) and semantic shift (different datasets for base and novel tasks). The attacker only has access to the pre-trained model, limiting the effectiveness of UAPs in FSL.

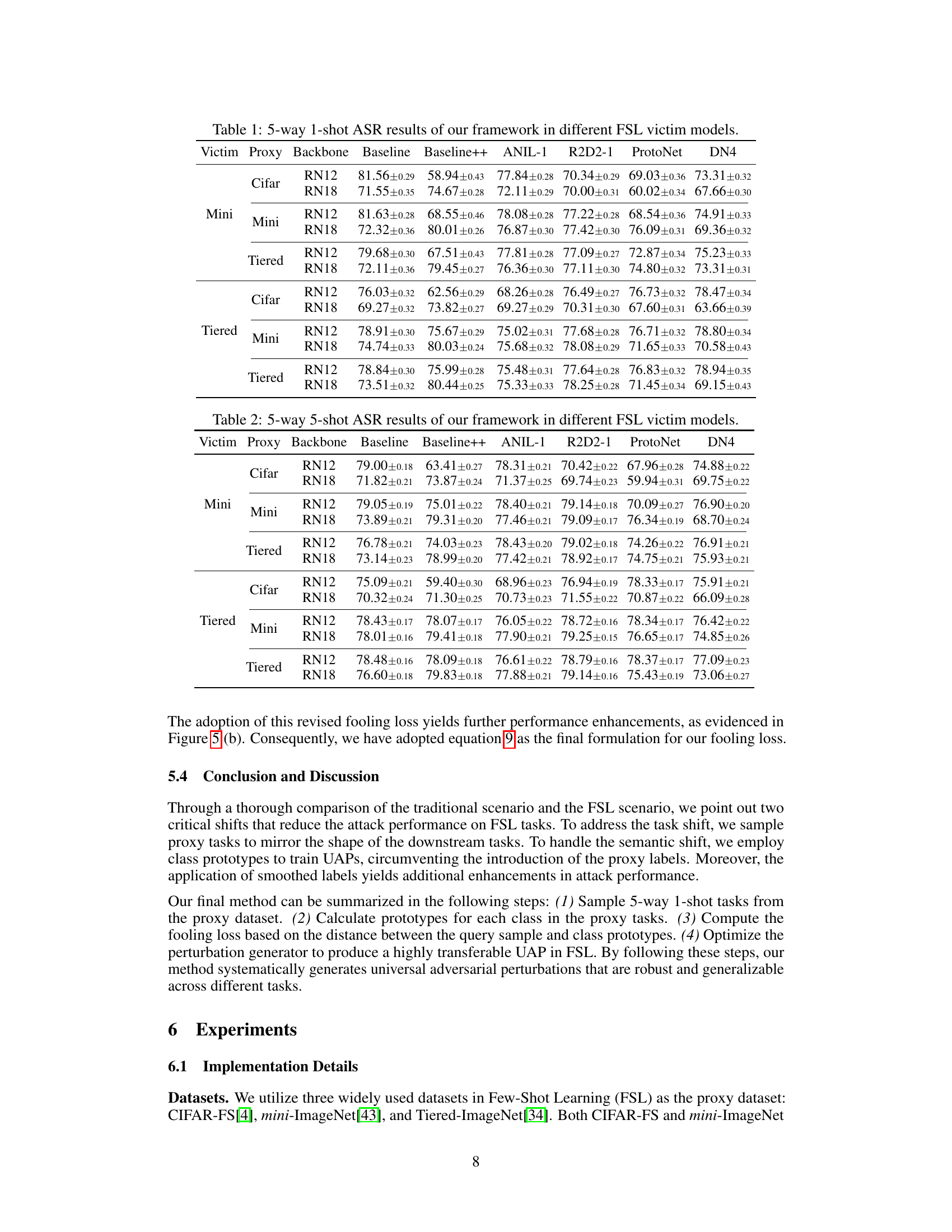

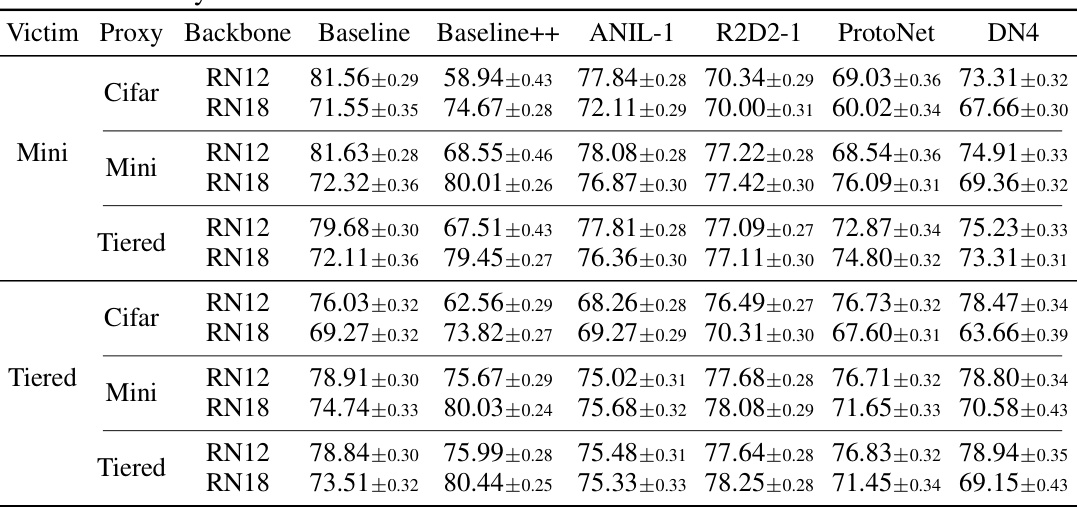

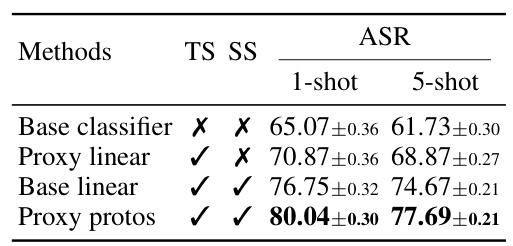

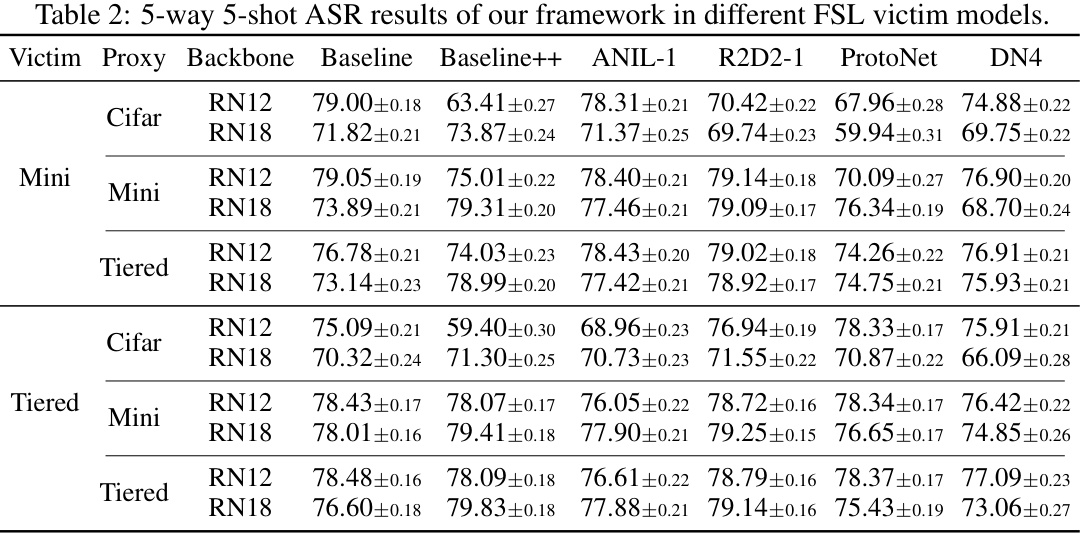

This table presents the Attack Success Rate (ASR) achieved by the proposed Few-Shot Attacking Framework (FSAFW) on various Few-Shot Learning (FSL) victim models. The ASR is a measure of the effectiveness of the universal adversarial perturbations (UAPs) generated by FSAFW in fooling the different FSL models. The table is broken down by victim model (Baseline, Baseline++, ANIL-1, R2D2-1, ProtoNet, DN4), proxy dataset (Cifar, Mini, Tiered), and backbone architecture (ResNet12, ResNet18). The results showcase the performance improvement of FSAFW over existing baselines across different FSL models and training paradigms.

In-depth insights

UAPs in FSL

Applying Universal Adversarial Perturbations (UAPs) to Few-Shot Learning (FSL) presents unique challenges. Traditional UAP generation methods, effective in closed-set scenarios, struggle with the task and semantic shifts inherent in FSL. Both shifts arise from the gap between pre-training on the base dataset and testing on the novel dataset. Task shift occurs because the downstream tasks differ from the pre-training task, while semantic shift stems from the dissimilar data distributions of the base and novel datasets. Generating effective UAPs in FSL therefore requires techniques that address both shifts. A promising approach aligns proxy tasks with downstream tasks and leverages the generalizability of pre-trained encoders to mitigate semantic shift, producing a more transferable perturbation and higher attack success rates.
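To make the terms concrete, here is a minimal sketch of how a single shared perturbation is applied to every input and how an attack success rate (ASR) could be measured. The ASR definition used here (fraction of inputs whose prediction changes), the L-infinity budget of 8/255, and the `toy_predict` stand-in model are all assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def project_linf(delta, eps):
    """Clip a perturbation so every entry lies in [-eps, eps]."""
    return np.clip(delta, -eps, eps)

def attack_success_rate(predict, images, delta):
    """Fraction of inputs whose predicted label changes once delta is added.

    `predict` is any callable mapping a batch of images to integer labels.
    Counting prediction flips is an assumed definition of ASR; some works
    count only originally-correct samples that become misclassified.
    """
    clean = predict(images)
    # The same delta is broadcast onto every image: that is what "universal" means.
    adv = predict(np.clip(images + delta, 0.0, 1.0))
    return float(np.mean(clean != adv))

def toy_predict(batch):
    """Hypothetical stand-in for a victim model: thresholds mean intensity."""
    return (batch.reshape(len(batch), -1).mean(axis=1) > 0.5).astype(int)

rng = np.random.default_rng(0)
images = rng.uniform(0.0, 1.0, size=(200, 8, 8))
delta = project_linf(np.full((8, 8), 0.1), eps=8 / 255)
asr = attack_success_rate(toy_predict, images, delta)
print(f"ASR of the toy perturbation: {asr:.3f}")
```

In a real evaluation, `predict` would wrap the few-shot victim model after it has been adapted to a downstream task.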

Task & Semantic Shift

The concepts of “task shift” and “semantic shift” are crucial for understanding the challenges of applying traditional Universal Adversarial Perturbations (UAPs) to few-shot learning (FSL) scenarios. Task shift refers to the discrepancy between the task the model was pre-trained on and the novel tasks encountered during testing in FSL. This mismatch hinders the transferability of UAPs crafted for one task to others. Semantic shift highlights the difference in data distributions between the base dataset used for pre-training and the novel datasets used for testing. This difference in image characteristics makes perturbations crafted for one dataset ineffective on others, even if the tasks are similar. The paper’s key insight is that these two shifts significantly reduce the effectiveness of traditional UAPs in FSL, necessitating new frameworks and strategies such as those presented, which account for and address both these types of shift.
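Task shift is easier to see with the episodic structure of FSL in view. The sketch below samples one N-way K-shot episode from a labelled pool; every episode draws its own classes and relabels them locally, so the label space seen at test time differs from the one used in pre-training. The function name `sample_episode`, the pool of 20 classes, and the query size are hypothetical choices for illustration.

```python
import numpy as np

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode from a labelled pool.

    Returns (support_idx, support_y, query_idx, query_y). The y arrays hold
    episode-local labels 0..n_way-1 rather than the original class ids.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    s_idx, s_y, q_idx, q_y = [], [], [], []
    for local, cls in enumerate(classes):
        pool = rng.permutation(np.flatnonzero(labels == cls))
        s_idx.extend(pool[:k_shot])
        s_y.extend([local] * k_shot)
        q_idx.extend(pool[k_shot:k_shot + n_query])
        q_y.extend([local] * n_query)
    return np.array(s_idx), np.array(s_y), np.array(q_idx), np.array(q_y)

rng = np.random.default_rng(0)
novel_labels = np.repeat(np.arange(20), 30)  # 20 hypothetical novel classes, 30 images each
s_idx, s_y, q_idx, q_y = sample_episode(novel_labels, n_way=5, k_shot=1, n_query=15, rng=rng)
print("support classes in this episode:", novel_labels[s_idx])
```

An attacker who crafts a perturbation against a fixed pre-training label space never sees these episode-local tasks, which is the mismatch the text calls task shift.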

FSAFW Framework

The FSAFW framework, designed for generating universal adversarial perturbations (UAPs) in few-shot learning (FSL), tackles the challenges of task shift and semantic shift. It addresses task shift by introducing proxy tasks that align with downstream tasks, mitigating the impact of task discrepancies. To handle the semantic shift between base and novel datasets, it leverages the generalizability of pre-trained encoders, reducing reliance on dataset-specific fine-tuning. This unified approach significantly improves the transferability of UAPs across diverse FSL training paradigms and achieves superior attack performance compared to traditional methods. The framework is especially valuable for evaluating the robustness and security of FSL models in open-set scenarios where the downstream tasks are unknown during the UAP generation phase.
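As a rough illustration of a proxy task that resembles a downstream few-shot task, the sketch below classifies query features by their nearest class prototype, computed on top of a frozen encoder. The nearest-prototype rule follows the general style of prototype-based few-shot classifiers and is an assumption here, as are the linear `encode` function, the synthetic data, and the random placeholder perturbation; none of this is the paper's actual implementation.

```python
import numpy as np

def prototypes(features, labels, n_way):
    """Mean feature vector per class: one prototype for each of n_way classes."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(n_way)])

def nearest_prototype(features, protos):
    """Assign each feature to the class of its closest prototype (squared Euclidean)."""
    d2 = ((features[:, None, :] - protos[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

rng = np.random.default_rng(0)
W = rng.normal(size=(16, 4))  # hypothetical frozen encoder weights

def encode(x):
    """Hypothetical frozen encoder, linear only to keep the sketch short."""
    return x @ W

centers = rng.normal(scale=3.0, size=(5, 16))  # one synthetic class centre per way
support_y = np.arange(5)                       # 5-way 1-shot support set
support_x = centers + 0.1 * rng.normal(size=(5, 16))
query_y = np.repeat(np.arange(5), 10)
query_x = centers[query_y] + 0.1 * rng.normal(size=(50, 16))
delta = np.clip(rng.normal(size=16), -0.5, 0.5)  # placeholder, not an optimised UAP

protos = prototypes(encode(support_x), support_y, n_way=5)
clean_pred = nearest_prototype(encode(query_x), protos)
adv_pred = nearest_prototype(encode(query_x + delta), protos)
print("predictions changed by the perturbation:", int((clean_pred != adv_pred).sum()))
```

Because the classifier is rebuilt from support features in every episode, an attack evaluated this way depends only on the encoder, which is the component the attacker is assumed to have access to.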

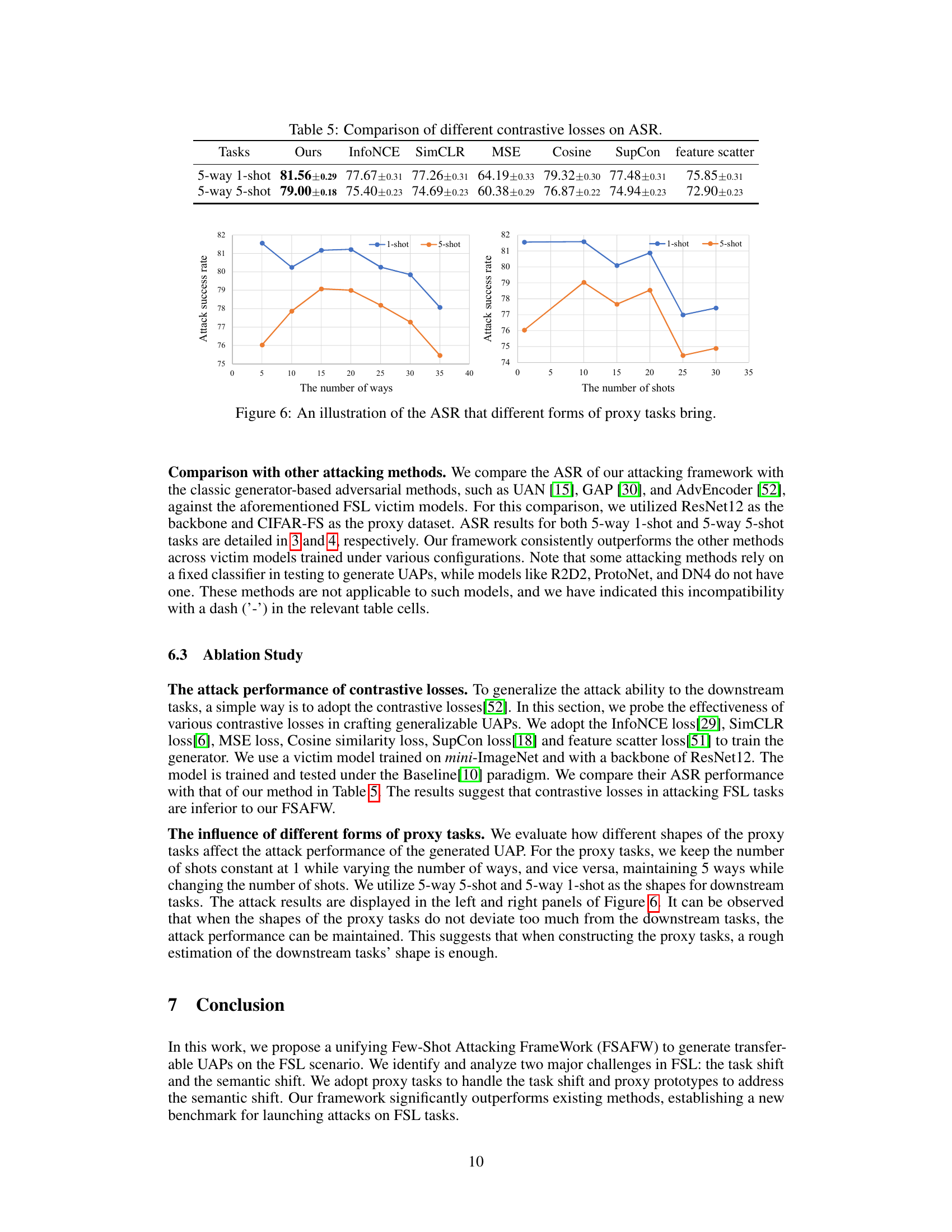

Ablation Studies

Ablation studies systematically remove components of a model or approach to isolate their individual contributions and assess their impact on overall performance. In the context of a research paper, a well-executed ablation study would begin by identifying core elements of the system and then methodically removing or modifying them one at a time. The goal is to determine which components are essential and which are redundant or detrimental. By comparing the performance of the full model against the performance of the models with ablated components, researchers can gain crucial insights into the system’s behavior. This helps justify design choices, identify crucial aspects, and guide future development. A strong ablation study will consider various configurations and carefully control for confounding factors to ensure that the observed changes are indeed attributable to the removed components and not other influences. The results are often presented in tabular form, showing the performance metrics for each ablated version alongside the original system. This type of analysis provides a clear, quantitative understanding of the model’s functioning and is a critical element of rigorous scientific evaluation. The comprehensiveness of the study is key, as the more variations tested, the stronger the conclusions and the more useful the insights.
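The procedure described above can be sketched as a small harness that evaluates every combination of enabled components and tabulates one metric per configuration. The component names and the `toy_evaluate` scorer, including its numbers, are placeholders invented so the sketch runs end to end; they are not results from the paper.

```python
from itertools import combinations

def run_ablation(components, evaluate):
    """Evaluate every subset of `components`, from none enabled to all enabled.

    `evaluate` is a user-supplied callable that takes a frozenset of enabled
    component names and returns a scalar metric (for example, ASR).
    """
    rows = []
    for r in range(len(components) + 1):
        for subset in combinations(components, r):
            rows.append((subset, evaluate(frozenset(subset))))
    return rows

def toy_evaluate(enabled):
    """Placeholder scorer with made-up numbers, only so the sketch runs."""
    return 0.5 + 0.2 * ("task_alignment" in enabled) + 0.1 * ("semantic_alignment" in enabled)

rows = run_ablation(["task_alignment", "semantic_alignment"], toy_evaluate)
for subset, score in rows:
    name = ", ".join(subset) or "none"
    print(f"{name:<40} {score:.2f}")
```

In practice `evaluate` would train or generate the attack with the given components enabled and return the measured metric, with seeds and data splits held fixed across configurations.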

Cross-Domain UAPs

Cross-domain universal adversarial perturbations (UAPs) aim to create a single perturbation effective across diverse datasets and model architectures. This is a challenging goal as UAPs are inherently sensitive to the specific characteristics of the training data and model. A successful cross-domain UAP would require robustness against dataset bias, domain shift, and variations in model architectures. Generating these perturbations would likely involve advanced techniques such as domain adaptation or generative models, aiming for perturbations that are both imperceptible and effective at fooling a wide range of models. The evaluation of cross-domain UAPs would require extensive testing across various datasets and model architectures, measuring both attack success rate and transferability. Future work may explore techniques such as adversarial training to improve model robustness against cross-domain UAPs.

More visual insights

More on figures

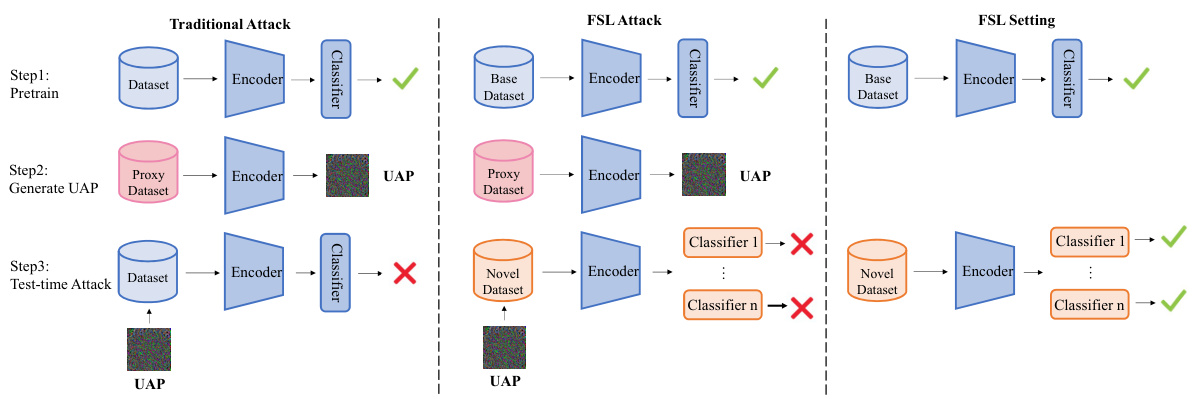

This figure compares the process of generating universal adversarial perturbations (UAPs) in traditional settings versus few-shot learning (FSL) settings. In traditional settings, the same classifier is used for training and testing, making UAP generation straightforward. However, FSL introduces two key challenges: 1) Task Shift: the pre-trained model is tested on different tasks than those it was trained on, and 2) Semantic Shift: the training and testing data come from different distributions (base vs. novel datasets). The figure illustrates how these shifts hinder the transferability of UAPs generated using traditional methods.

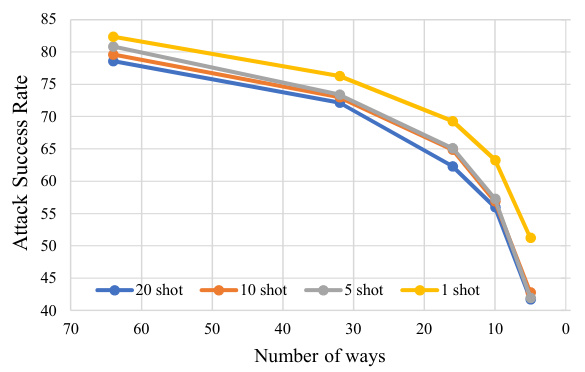

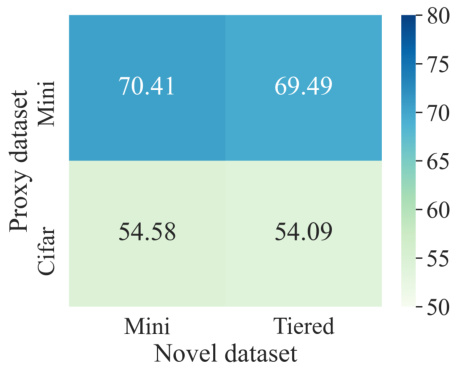

This figure shows the results of applying the traditional UAP generation method to different few-shot learning scenarios. The left panel (a) shows how the attack success rate (ASR) changes when varying the number of ways (classes per task) and shots (training examples per class) of the downstream tasks. As the number of ways increases or the number of shots decreases, the ASR decreases. The right panel (b) shows how changing the dataset used for the novel classes (CIFAR, Mini, Tiered) also affects the ASR, which is highest when the novel dataset is similar to the proxy dataset used for generating UAPs.

This figure shows the impact of task and semantic shift on the attack success rate (ASR) in few-shot learning scenarios. The left panel (a) shows how ASR changes with the configuration of the downstream task (number of ways and shots). The right panel (b) shows how the ASR is affected by the choice of dataset: using different datasets for the proxy and novel data lowers performance, highlighting the impact of semantic shift.

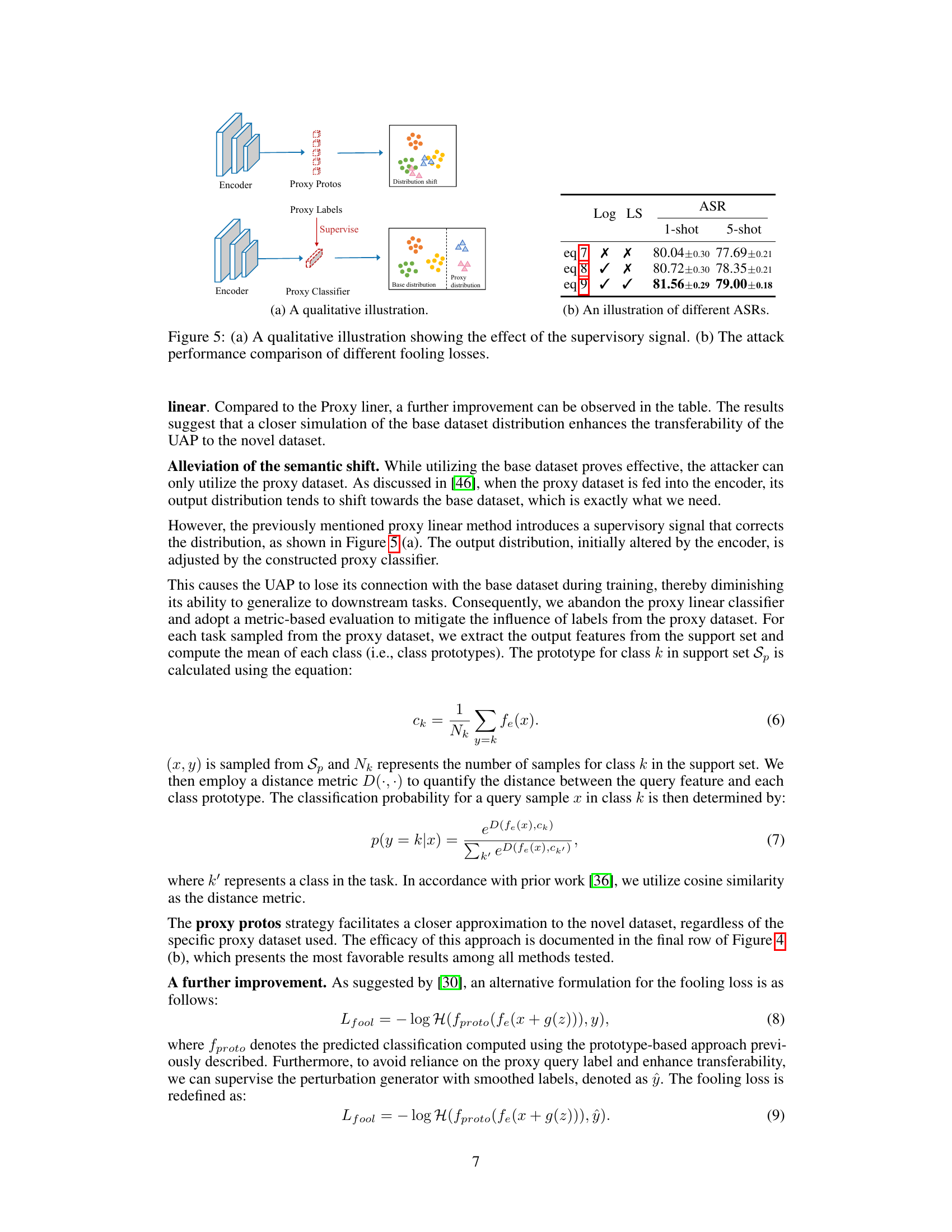

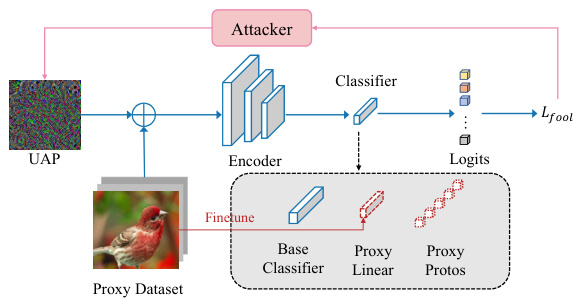

This figure illustrates the proposed attacking framework and its performance. Panel (a) shows the framework’s components: a pre-trained classifier, proxy linear classifier (for task alignment), and prototype-based classifier (for semantic alignment). The attacker generates universal adversarial perturbations (UAPs) that are added to input images before passing them through the encoder and the classifiers. The framework addresses task and semantic shifts prevalent in few-shot learning. Panel (b) presents a table showing the attack success rate (ASR) for different methods (baseline, addressing only task shift, addressing both shifts) and for different few-shot tasks (5-way 1-shot and 5-way 5-shot).

This figure shows the proposed Few-Shot Attacking FrameWork (FSAFW) for generating universal adversarial perturbations (UAPs) in few-shot learning (FSL). (a) illustrates the framework, starting with a pre-trained base classifier and progressively incorporating methods to address task shift (Proxy Linear, Proxy Protos) and semantic shift. (b) presents the attack success rates (ASR) of different methods on 5-way 1-shot and 5-way 5-shot tasks, highlighting the improvement achieved by FSAFW in handling both shifts.
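For intuition about how a perturbation can be optimised against a frozen encoder with a proxy linear classifier on top, here is a sketch of a generic projected sign-gradient ascent loop that maximises cross-entropy over a batch while keeping one shared perturbation inside an L-infinity ball. This is a standard technique swapped in for illustration, not the paper's algorithm or loss; the linear encoder `W_enc`, the proxy head `W_head`, the step size, and the budget are all hypothetical.

```python
import numpy as np

def log_softmax(z):
    """Numerically stable log-softmax over the class axis."""
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def cross_entropy(logits, y):
    """Mean cross-entropy of integer labels y under the given logits."""
    return float(-log_softmax(logits)[np.arange(len(y)), y].mean())

def uap_step(delta, x, y, W_enc, W_head, alpha, eps):
    """One projected sign-gradient ascent step on a shared perturbation."""
    logits = (x + delta) @ W_enc @ W_head
    g = np.exp(log_softmax(logits))
    g[np.arange(len(y)), y] -= 1.0                # dLoss/dlogits for cross-entropy
    grad = (g @ W_head.T @ W_enc.T).mean(axis=0)  # input gradient averaged over the batch
    return np.clip(delta + alpha * np.sign(grad), -eps, eps)

rng = np.random.default_rng(0)
W_enc = rng.normal(size=(32, 16)) / np.sqrt(32)   # hypothetical frozen encoder (linear)
W_head = rng.normal(size=(16, 5)) / np.sqrt(16)   # hypothetical proxy linear classifier
x = rng.normal(size=(64, 32))
y = (x @ W_enc @ W_head).argmax(axis=1)           # clean predictions serve as labels

delta = np.zeros(32)
loss_before = cross_entropy(x @ W_enc @ W_head, y)
for _ in range(20):
    delta = uap_step(delta, x, y, W_enc, W_head, alpha=0.01, eps=0.1)
loss_after = cross_entropy((x + delta) @ W_enc @ W_head, y)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

With a real nonlinear encoder the gradient would come from automatic differentiation, and the proxy head would be chosen to resemble the downstream few-shot classifier.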

Figure 5(a) shows how the supervisory signal influences the distribution of features generated by the encoder. Without supervision (top), the distribution shifts, impacting the attack’s transferability. With supervision (bottom), the distribution shift is mitigated, improving transferability. Figure 5(b) compares the Attack Success Rate (ASR) using different loss functions (equations 7, 8, and 9) for evaluating the UAP performance, showing that equation 9 achieves the highest ASR.

This figure shows the impact of task and semantic shifts on the attack success rate (ASR) in few-shot learning (FSL). The left panel (a) illustrates how ASR varies with the number of ways (classes per task) and the number of shots, showing a decrease in performance as the task becomes less similar to the pre-training task. The right panel (b) demonstrates the influence of semantic shift by changing the datasets used in the attack, showing that ASR falls as the dissimilarity between the base, novel, and proxy datasets increases. This highlights the challenges of applying traditional universal adversarial perturbations (UAPs) directly to the FSL setting.

This figure shows some example images from the CIFAR-FS dataset. CIFAR-FS is a few-shot benchmark derived from the CIFAR-100 dataset and is commonly used in few-shot learning research. The images depict a variety of objects and scenes, illustrating the diversity within the dataset and the visual complexity of the classification tasks involved in few-shot learning.

This figure compares the traditional adversarial attack and the few-shot learning (FSL) attack. The traditional attack uses a pre-trained encoder and classifier on the base dataset to generate a universal adversarial perturbation (UAP). This UAP is then applied to the test-time attack on the same base dataset. In FSL, however, the task shift (pre-training to testing) and semantic shift (base to novel dataset) pose challenges. The attacker in FSL uses a pre-trained encoder but needs to overcome these shifts to generate an effective UAP.

This figure compares attack success rates (ASR) for traditional universal adversarial perturbations (UAPs) in traditional settings and in few-shot learning (FSL) scenarios. The left panel illustrates the significant drop in ASR when using traditional UAPs in various FSL settings compared to traditional closed-set scenarios. The right panel provides a schematic of the challenges FSL poses for UAP generation, namely the task shift and semantic shift between the pre-training and testing phases. The task shift involves different downstream tasks, while the semantic shift involves different datasets.

More on tables

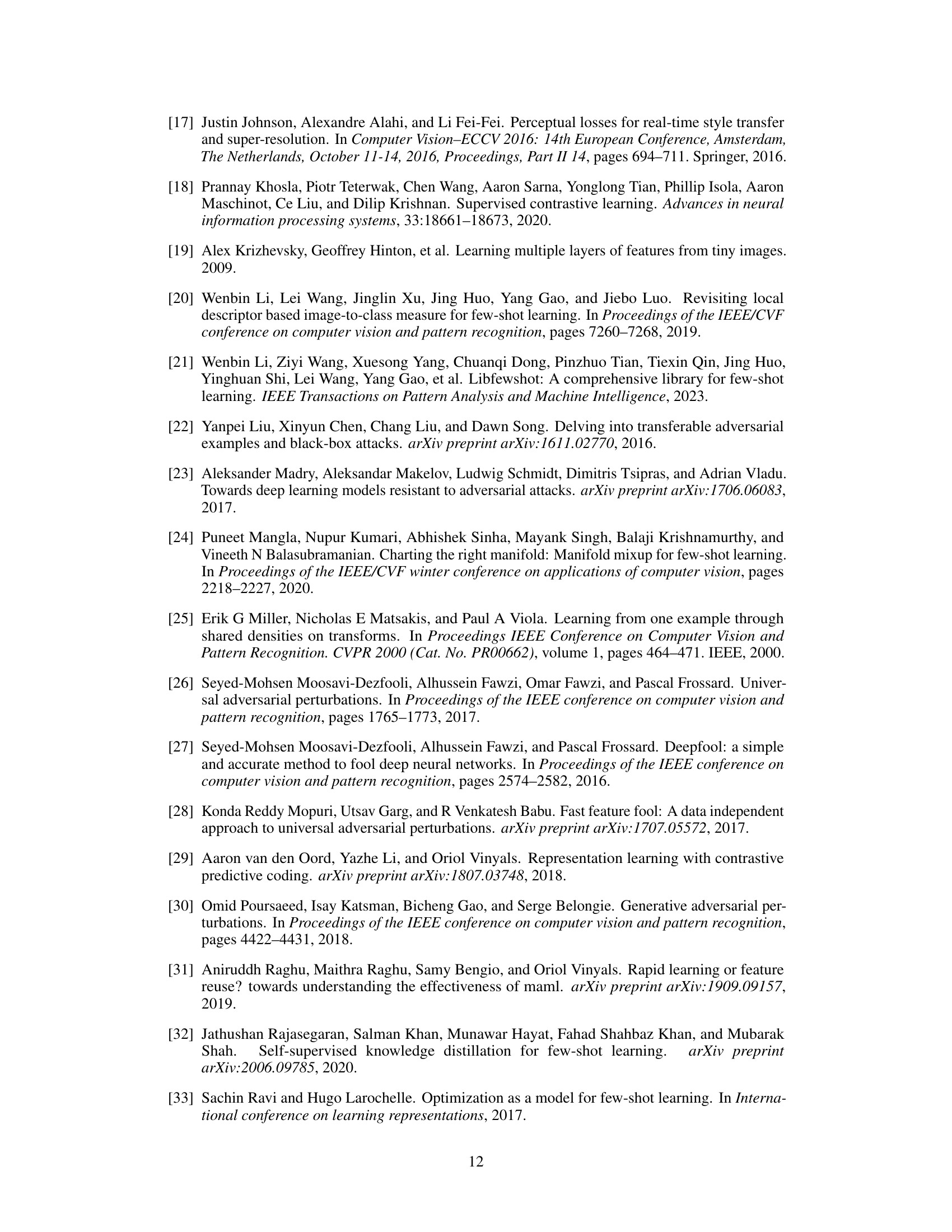

This table presents the Attack Success Rate (ASR) achieved by the proposed Few-Shot Attacking Framework (FSAFW) across various victim models in a 5-way 5-shot few-shot learning (FSL) setting. Different FSL training paradigms (finetuning-based, meta-based, and metric-based) are represented by the victim models (Baseline, Baseline++, ANIL-1, R2D2-1, ProtoNet, DN4). Results are shown for different backbone architectures (ResNet12 and ResNet18) and proxy datasets (Cifar, Mini, Tiered). The table demonstrates the effectiveness and generalizability of FSAFW across various FSL settings.

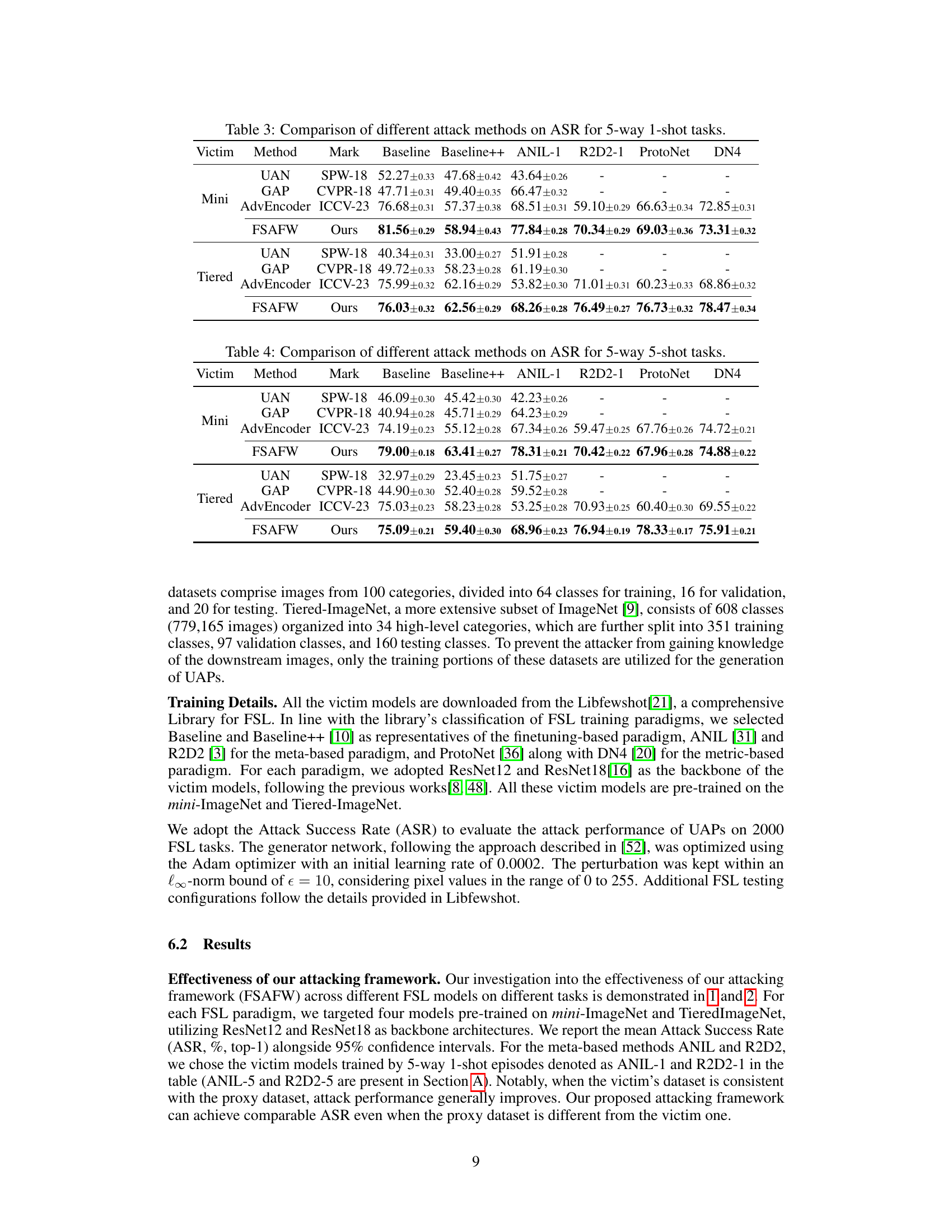

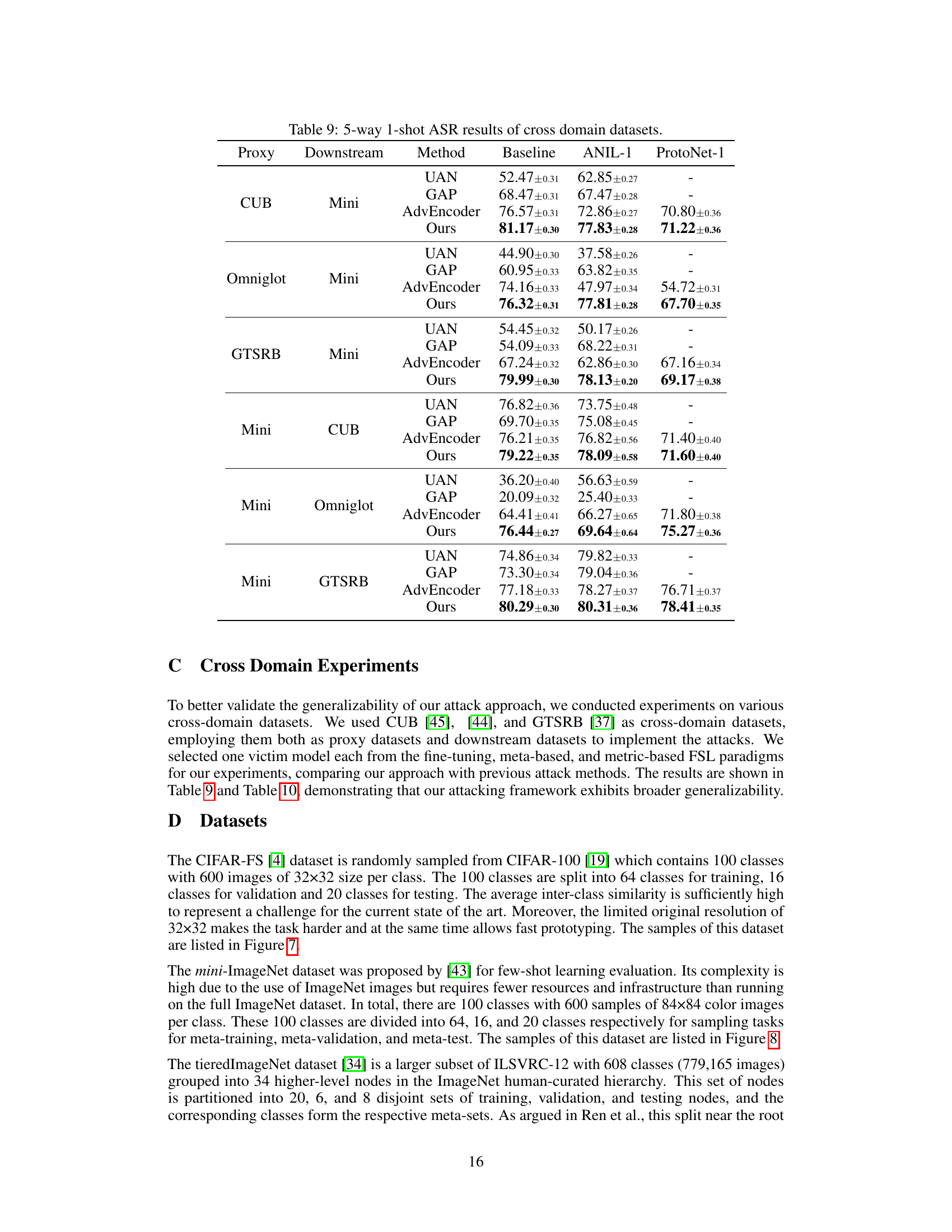

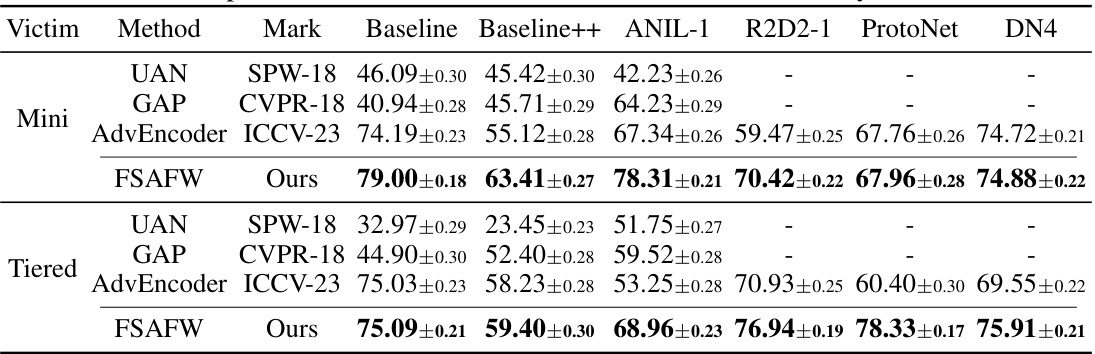

This table compares the attack success rate (ASR) of different universal adversarial perturbation (UAP) generation methods on 5-way 1-shot few-shot learning (FSL) tasks. It shows the ASR achieved by several methods including UAN, GAP, AdvEncoder, and the proposed FSAFW, across different victim models (ANIL-1, R2D2-1, ProtoNet, DN4) and datasets (Mini, Tiered). The results highlight the improvement in ASR achieved by the FSAFW approach.

This table compares the attack success rate (ASR) of different attack methods on 5-way 1-shot tasks. The methods include UAN, GAP, AdvEncoder, and the proposed FSAFW. The victim models are ANIL-1, R2D2-1, ProtoNet, and DN4, trained on Mini and Tiered datasets. The table shows the performance of each method across different victim models and datasets, demonstrating the effectiveness of FSAFW in comparison to other methods.

This table presents the results of an ablation study comparing the performance of different contrastive loss functions on the Attack Success Rate (ASR) for both 5-way 1-shot and 5-way 5-shot tasks. The goal was to determine the effectiveness of these losses in generating generalizable UAPs (Universal Adversarial Perturbations) for few-shot learning scenarios.

This table presents the Attack Success Rate (ASR) achieved by the proposed Few-Shot Attacking Framework (FSAFW) on various Few-Shot Learning (FSL) victim models. Victim models from different FSL training paradigms (ANIL, R2D2, ProtoNet, DN4) are tested with different backbone architectures (ResNet12, ResNet18). The table shows the ASR for 5-way 1-shot tasks, varying the proxy dataset (Cifar, Mini, Tiered) used for training the attacker’s model. The results demonstrate the framework’s effectiveness across different FSL settings and victim models.

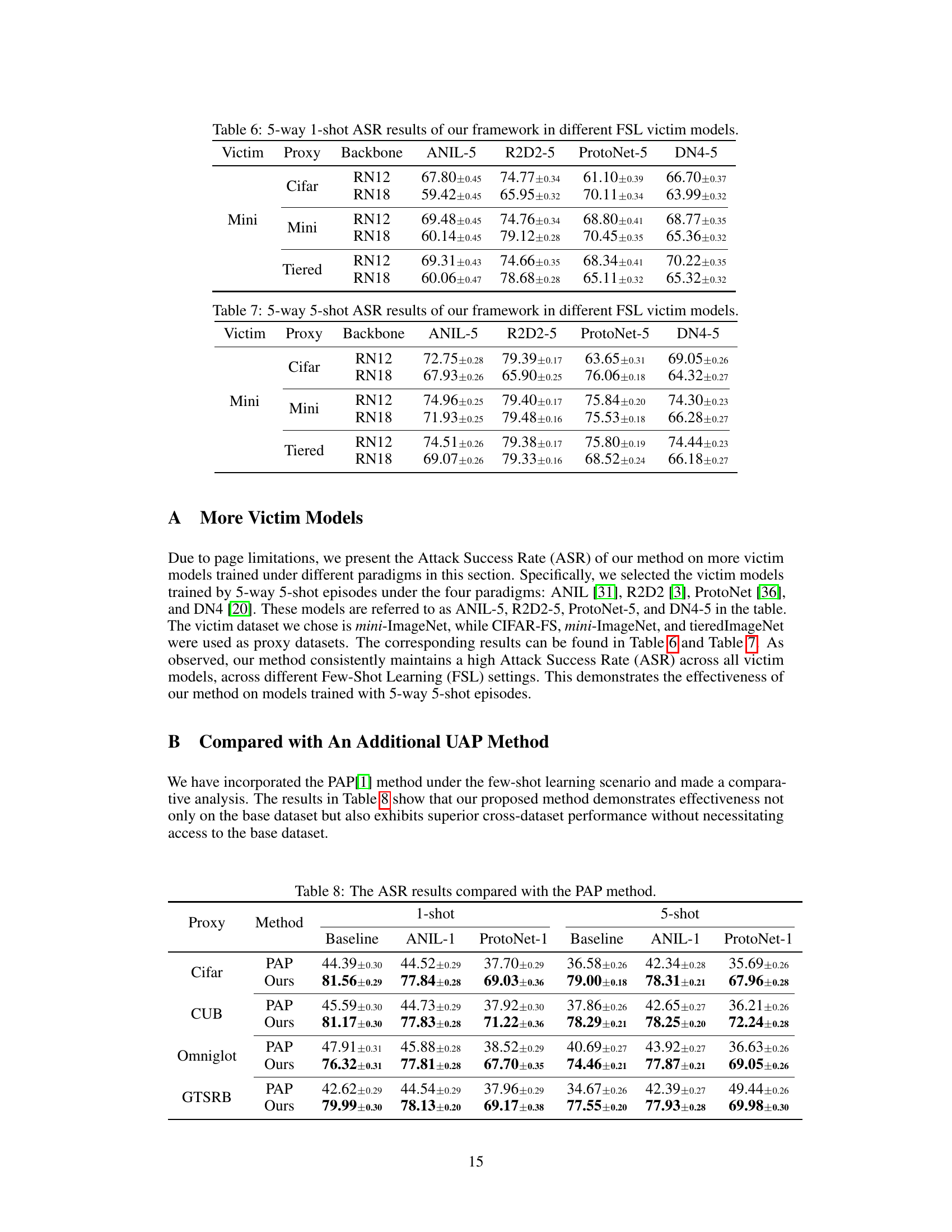

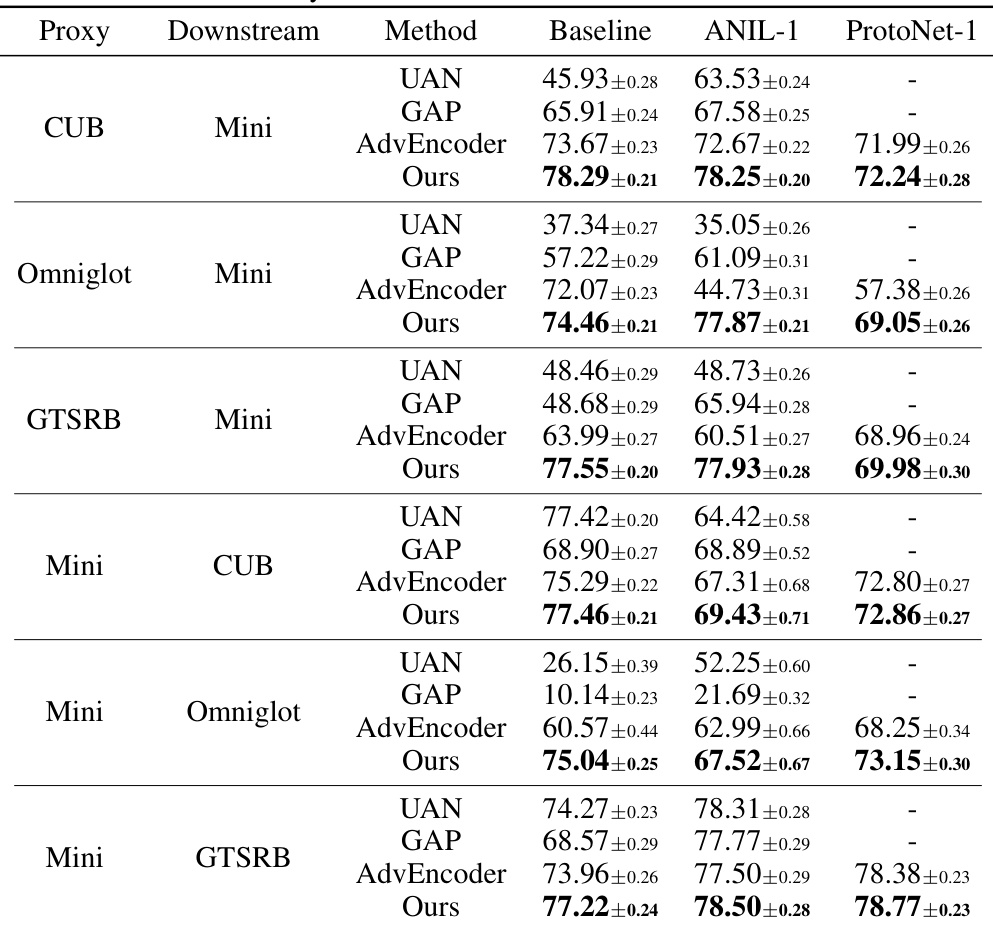

This table compares the attack success rate (ASR) of the proposed method (Ours) against the PAP method. It shows ASR for both 1-shot and 5-shot scenarios, across four different proxy datasets (Cifar, CUB, Omniglot, GTSRB), and three different victim models (Baseline, ANIL-1, ProtoNet-1). The results highlight the superior performance of the proposed method, particularly when the proxy dataset differs from the victim dataset.

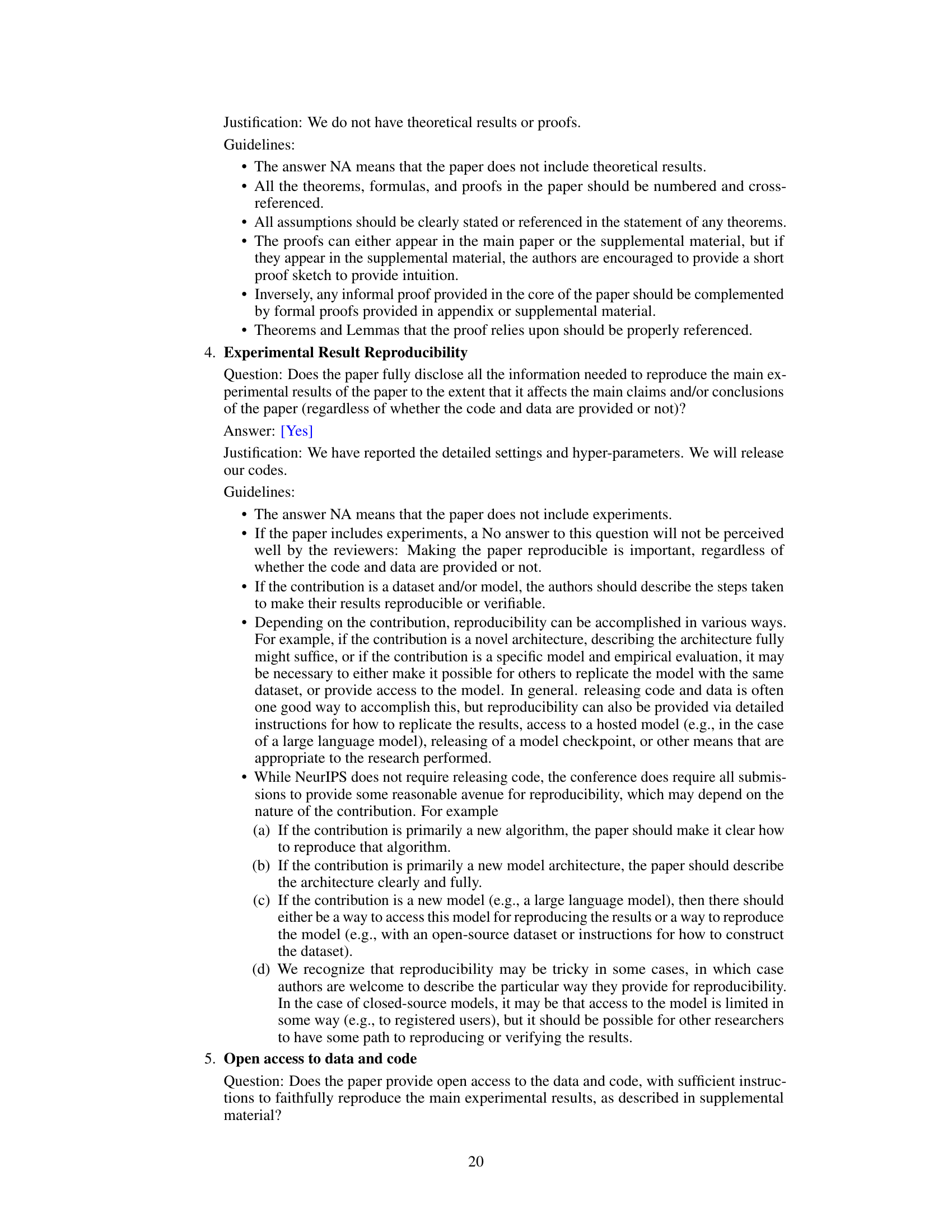

This table shows the Attack Success Rate (ASR) for 5-way 1-shot tasks using different methods (UAN, GAP, AdvEncoder, and Ours) across various cross-domain datasets. The proxy and downstream datasets vary, allowing for an assessment of the method’s generalizability across different data distributions. The ‘Ours’ method refers to the proposed Few-Shot Attacking FrameWork (FSAFW).

This table compares the attack success rate (ASR) of different attack methods on 5-way 1-shot tasks using various victim models (ANIL-1, R2D2-1, ProtoNet, DN4) and proxy datasets (CUB, Omniglot, GTSRB). It shows the effectiveness of the proposed Few-Shot Attacking Framework (FSAFW) compared to other state-of-the-art methods (UAN, GAP, AdvEncoder). The table highlights the improved ASR achieved by FSAFW across different victim models and datasets.

Full paper