↗ OpenReview ↗ NeurIPS Homepage ↗ Chat

TL;DR#

Remote sensing often yields high-resolution panchromatic (PAN) and low-resolution multispectral (MS) images separately. Merging these to create high-resolution MS images (pansharpening) is crucial but challenging. Existing deep learning methods struggle with accurately capturing both spatial and spectral details simultaneously, and often yield blurry or spectrally distorted results. This paper addresses these challenges.

The proposed SSDiff model tackles pansharpening using a novel approach: subspace decomposition to separate spatial and spectral features into dedicated branches, followed by a designed fusion module (APFM) that combines them effectively. Furthermore, a frequency modulation inter-branch module (FMIM) refines the frequency balance between branches, resulting in sharper and more spectrally accurate images. The model also employs a branch-wise fine-tuning method for improved performance.

Key Takeaways#

Why does it matter?#

This paper is important because it introduces SSDiff, a novel approach to remote sensing pansharpening that significantly improves the quality of high-resolution multispectral images. It addresses limitations of existing methods by using subspace decomposition and a frequency modulation inter-branch module, leading to state-of-the-art results. This work is highly relevant to current trends in deep learning-based image fusion and opens up new avenues for research in remote sensing image processing and related fields.

Visual Insights#

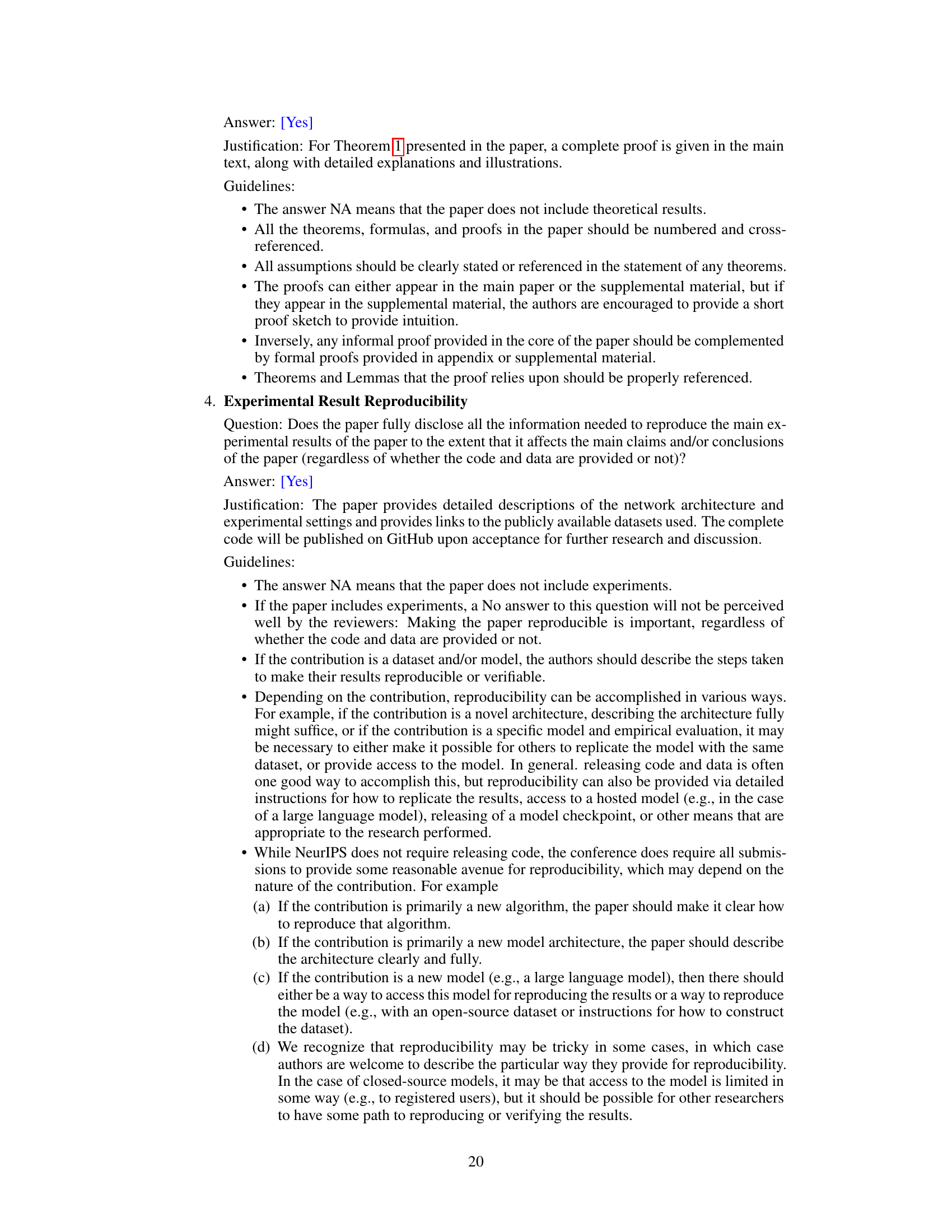

This figure illustrates three different approaches to pansharpening using deep learning. (a) shows a general supervised DL-based approach where a network combines PAN and LrMSI to produce HrMSI. (b) demonstrates LoRA (Low-rank Adaptation) applied to a DDPM (Denoising Diffusion Probabilistic Model) for pansharpening, showing how it modifies weights in the network. (c) introduces the paper’s proposed APFM (Alternating Projection Fusion Module) within the SSDiff model, highlighting the alternating projection between spatial (U) and spectral (G) domains to achieve the fusion of PAN and LrMSI to generate the HrMSI.

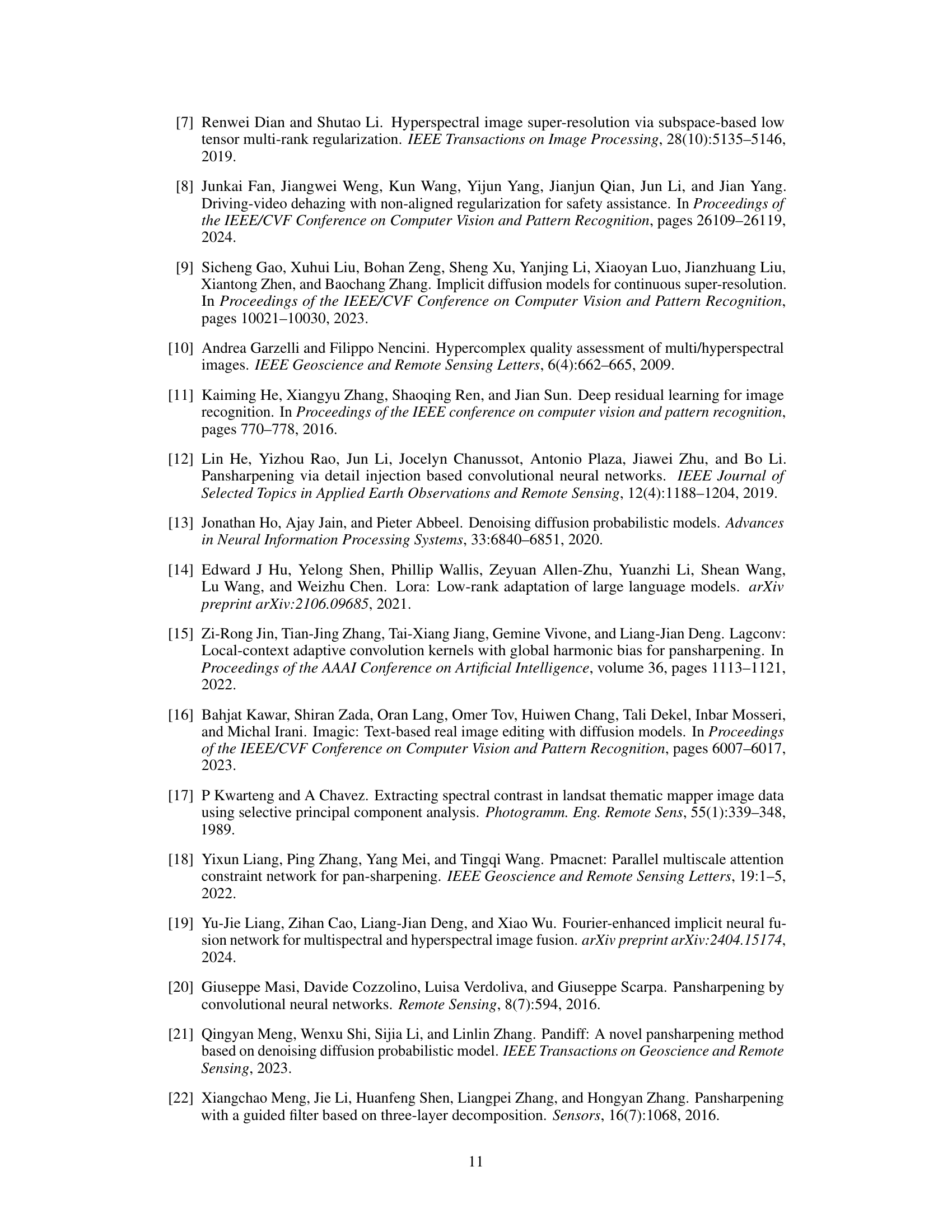

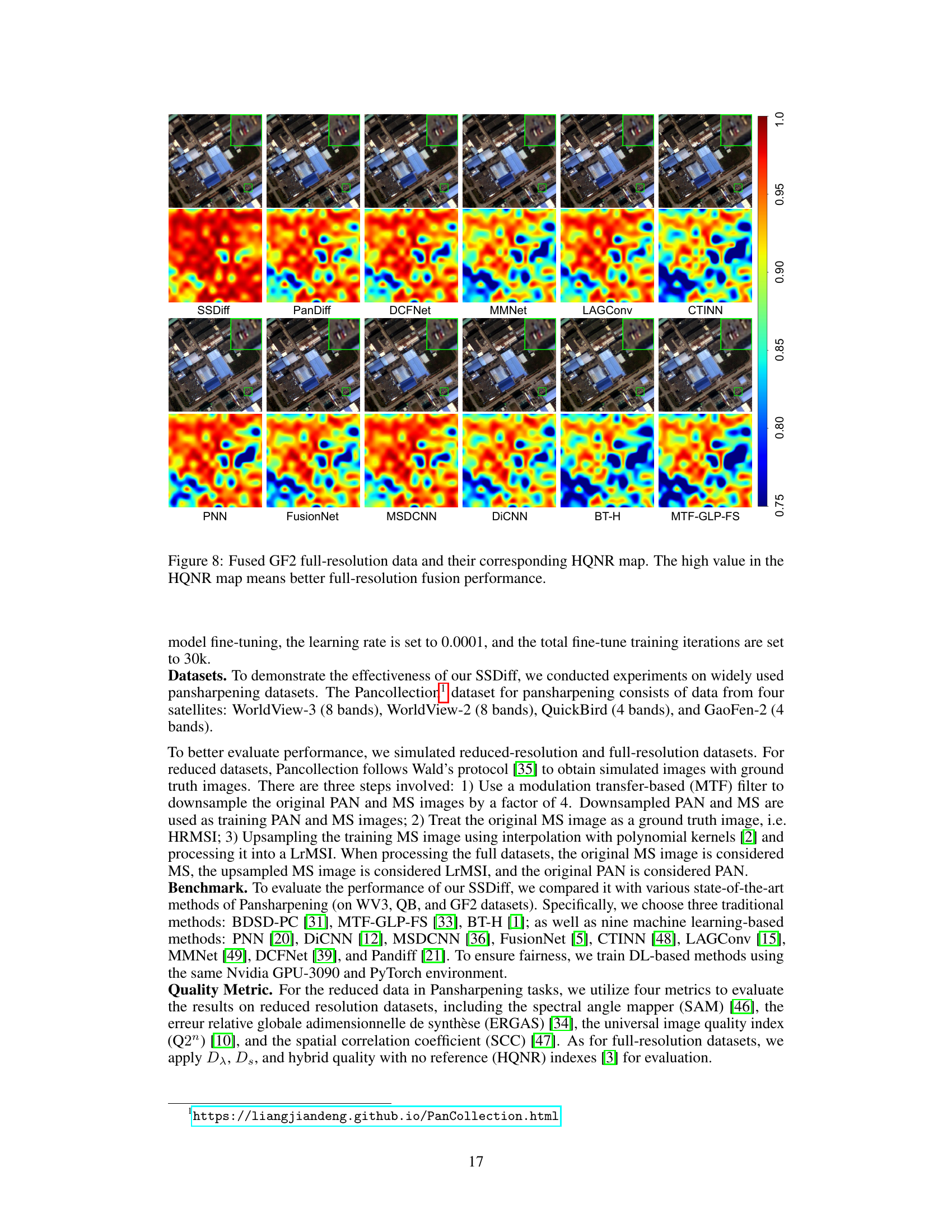

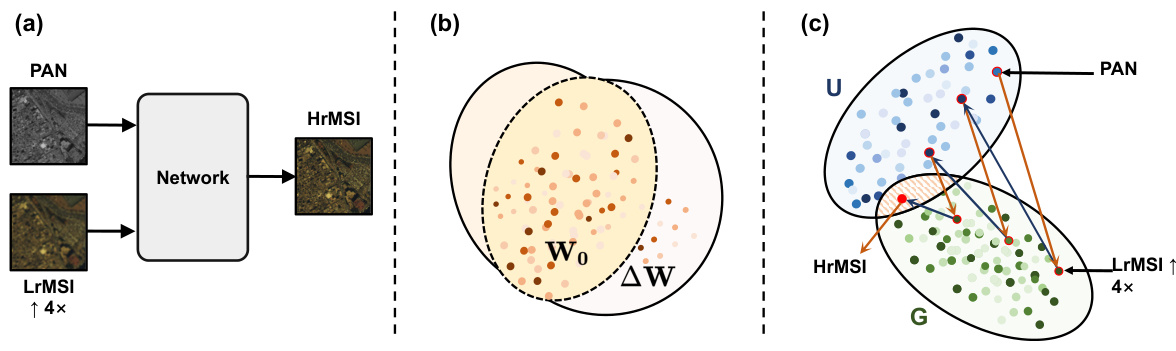

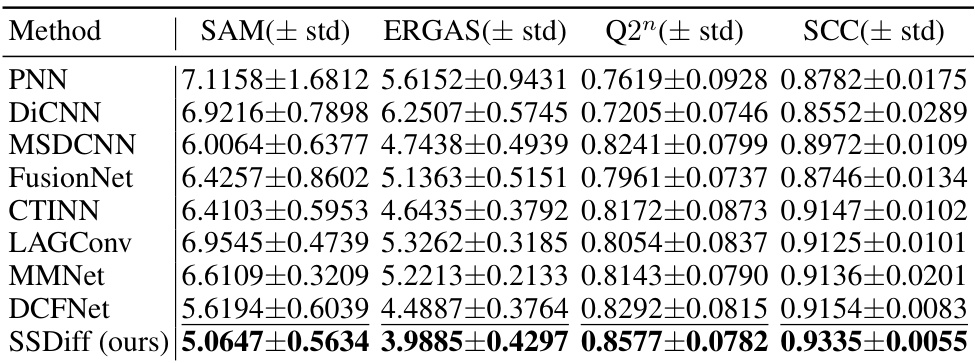

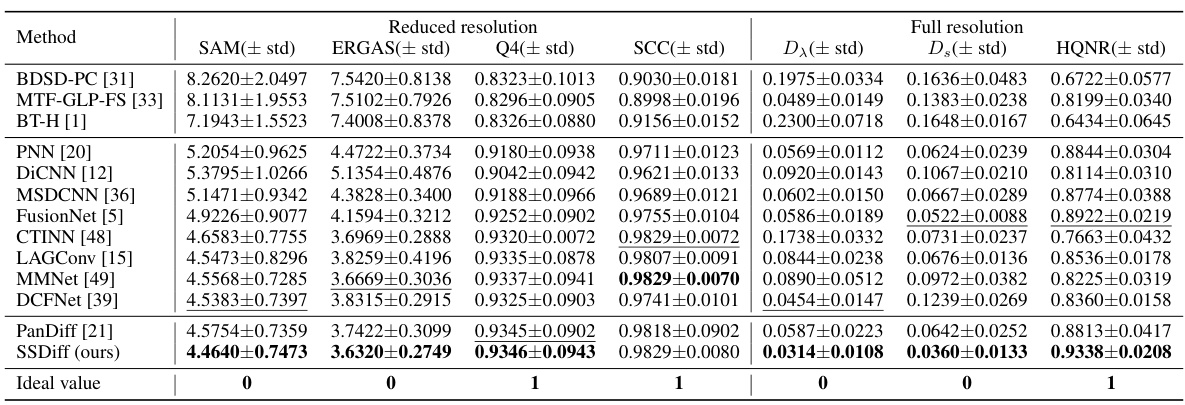

This table presents a quantitative comparison of different pansharpening methods on WorldView-3 (WV3) and GaoFen-2 (GF2) datasets. Both reduced-resolution and full-resolution results are shown, using several metrics to assess performance (SAM, ERGAS, Q2, SCC, Dx, Ds, HQNR). The best performing method for each metric is highlighted in bold, with the second-best underlined. This allows for a comprehensive evaluation of the methods’ accuracy and efficiency across different resolution levels and datasets.

In-depth insights#

SSDiff Architecture#

The SSDiff architecture is built upon the concept of subspace decomposition, dividing the network into spatial and spectral branches to process spatial and spectral information separately. This design allows for discriminative feature learning, capturing detailed spatial information from the PAN image and rich spectral information from the LrMSI. The Alternating Projection Fusion Module (APFM) cleverly merges these features, handling the fusion process as a series of alternating projections between spatial and spectral subspaces. Furthermore, the Frequency Modulation Inter-branch Module (FMIM) addresses the uneven frequency distribution between branches, enriching the spectral features with high-frequency information to improve fusion quality. The innovative LoRA-like branch-wise alternative fine-tuning (L-BAF) method refines the model, enabling efficient parameter updates without increasing the overall parameter count, ultimately leading to improved performance and generalization.

APFM & FMIM#

The research paper introduces two novel modules: the Alternating Projection Fusion Module (APFM) and the Frequency Modulation Inter-branch Module (FMIM). APFM leverages subspace decomposition, separating spatial and spectral information streams within a network to enable more effective feature extraction in separate branches. This contrasts with traditional approaches which often process spatial and spectral data in a single stream, potentially leading to information loss or suboptimal fusion. By implementing alternating projections, APFM facilitates the fusion of the independently processed spatial and spectral information, enhancing the quality and detail of the output. In parallel, FMIM addresses the uneven frequency distribution between the two branches, a common issue in pansharpening. This module modulates the frequency content to ensure a more balanced fusion process, avoiding an overemphasis on low-frequency information from the spectral branch and leading to sharper, more detailed images. The combination of these two modules is critical to the effectiveness of the proposed spatial-spectral integrated diffusion model (SSDiff). The APFM allows for a more discriminating capture of spatial and spectral features, while the FMIM refines the fusion process by optimizing frequency distribution, resulting in superior performance over existing methods.

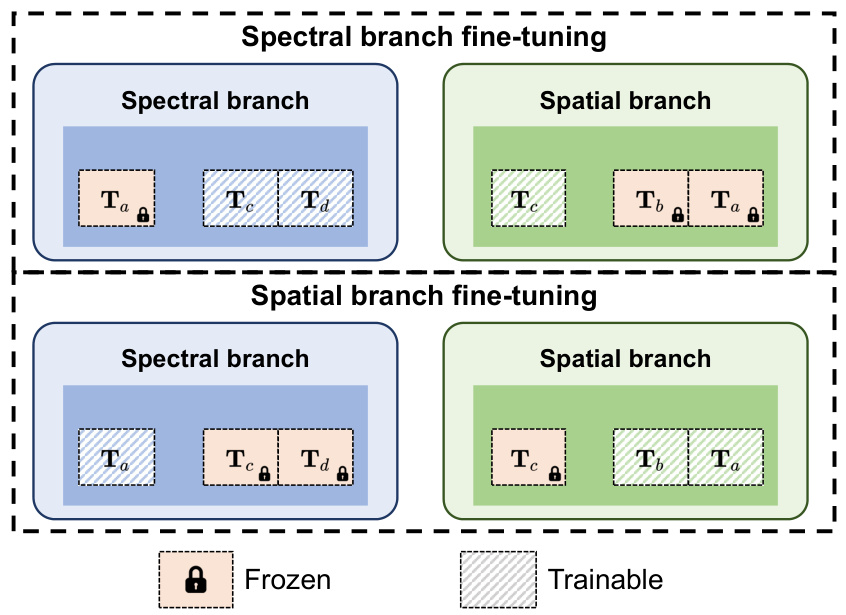

L-BAF Fine-tuning#

The proposed L-BAF (LoRA-like Branch-wise Alternative Fine-tuning) method tackles the challenge of balancing model training between spatial and spectral branches in pansharpening. Instead of simultaneously tuning both branches, L-BAF alternates between them, updating the parameters of one branch while freezing the other. This avoids the complexities of maintaining balance during simultaneous training, enabling the model to learn more discriminative features in each branch. The method is inspired by LoRA, which utilizes low-rank updates to efficiently fine-tune large models. By using this approach, L-BAF efficiently refines SSDiff by enabling each branch to learn its respective features more effectively. This is especially relevant to the pansharpening task because it addresses the inherent dissimilarity between spatial (high-resolution panchromatic) and spectral (low-resolution multispectral) data. The alternating nature of L-BAF avoids potentially detrimental interference between the two branches, potentially leading to superior performance compared to simultaneous fine-tuning.

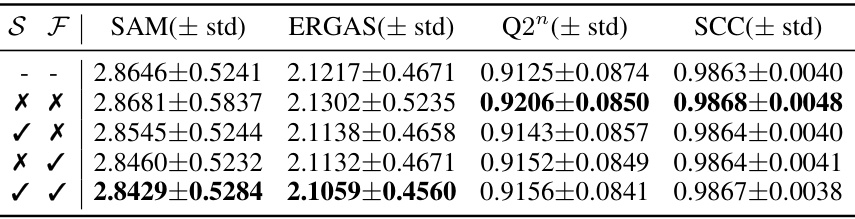

Ablation Studies#

Ablation studies systematically evaluate the contribution of individual components within a model. In the context of a remote sensing pansharpening model like SSDiff, this involves removing or modifying specific modules (e.g., the alternating projection fusion module, the frequency modulation inter-branch module) to assess their impact on overall performance. Key insights gained from such studies would include determining the relative importance of spatial and spectral branches, clarifying whether the designed fusion strategy effectively combines information from both sources, and validating the effectiveness of the proposed frequency modulation technique. Well-designed ablation experiments would isolate the effect of each component, offering strong support for the architectural choices. By comparing results across different configurations, one can quantify the individual contributions to accuracy metrics, such as SAM, ERGAS, and Q2, enabling a deeper understanding of the model’s strengths and weaknesses and justifying the overall design.

Future Works#

Future work in spatial-spectral pansharpening could explore more advanced deep learning architectures beyond diffusion models, such as transformers or graph neural networks, to better capture complex spatial-spectral relationships. Investigating alternative fusion strategies that go beyond simple concatenation or alternating projections, perhaps incorporating attention mechanisms or adversarial learning, could improve accuracy and generalization. Addressing the computational cost of diffusion models remains crucial; exploring more efficient sampling techniques or model compression methods is essential for practical applications. Furthermore, extending the approach to handle diverse remote sensing data (hyperspectral, LiDAR) and different sensor configurations would broaden its impact. Finally, a thorough investigation into the robustness of the model to noise and artifacts in the input images is needed, along with a deeper analysis of its limitations. Developing more robust evaluation metrics that comprehensively assess both spatial and spectral fidelity is also critical for the field’s advancement.

More visual insights#

More on figures

This figure illustrates the connection between subspace decomposition and the self-attention mechanism. Subspace decomposition is represented as matrix multiplication of a subspace matrix (D) and coefficients (C) to reconstruct the original matrix (X). The self-attention mechanism is shown similarly, with query (Q) and key (K) matrices multiplied by a value (V) matrix, using a self-similarity function f(Q, K) to generate the result matrix X. This shows that the self-attention mechanism can be seen as a specific instance of subspace decomposition via vector projection.

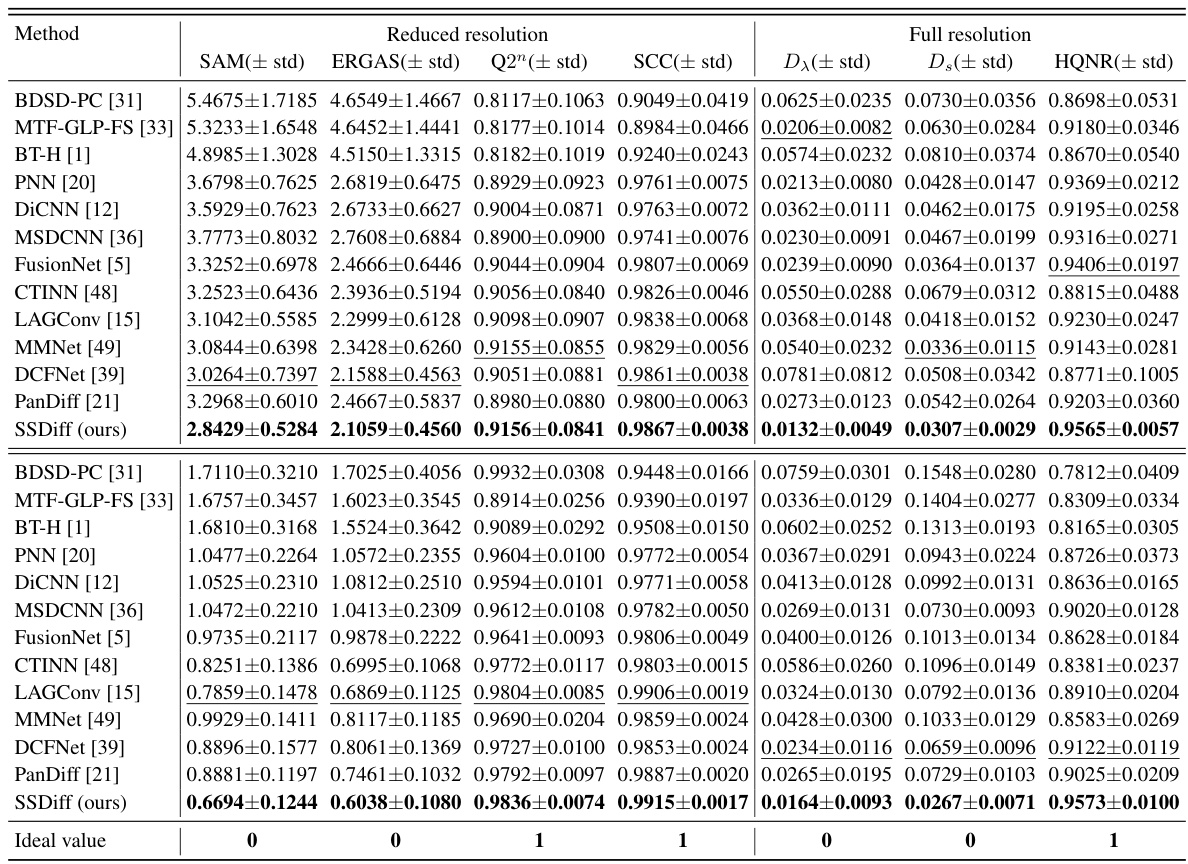

This figure illustrates the overall architecture of the SSDiff model. It shows two branches: a spatial branch and a spectral branch. Each branch processes either PAN or LrMSI images and extracts spatial or spectral features, respectively. The spatial branch uses ResNet blocks for feature extraction, while the spectral branch employs convolutional layers. A Frequency Modulation Inter-branch Module (FMIM) interacts between the branches. The features from each branch are then fused using an Alternating Projection Fusion Module (APFM), which uses alternating projections to create pansharpened images. Finally, a Multi-Layer Perceptron (MLP) produces the final output.

This figure shows the denoising process of the SSDiff model. The top row displays a series of images generated during the denoising process, showing a gradual increase in clarity and detail. The middle and bottom rows show the corresponding low and high-frequency components, respectively, extracted from the generated images using an inverse Fourier transform. This visual representation helps to illustrate how SSDiff separates and refines spatial information during image generation, ultimately producing a high-resolution pansharpened image.

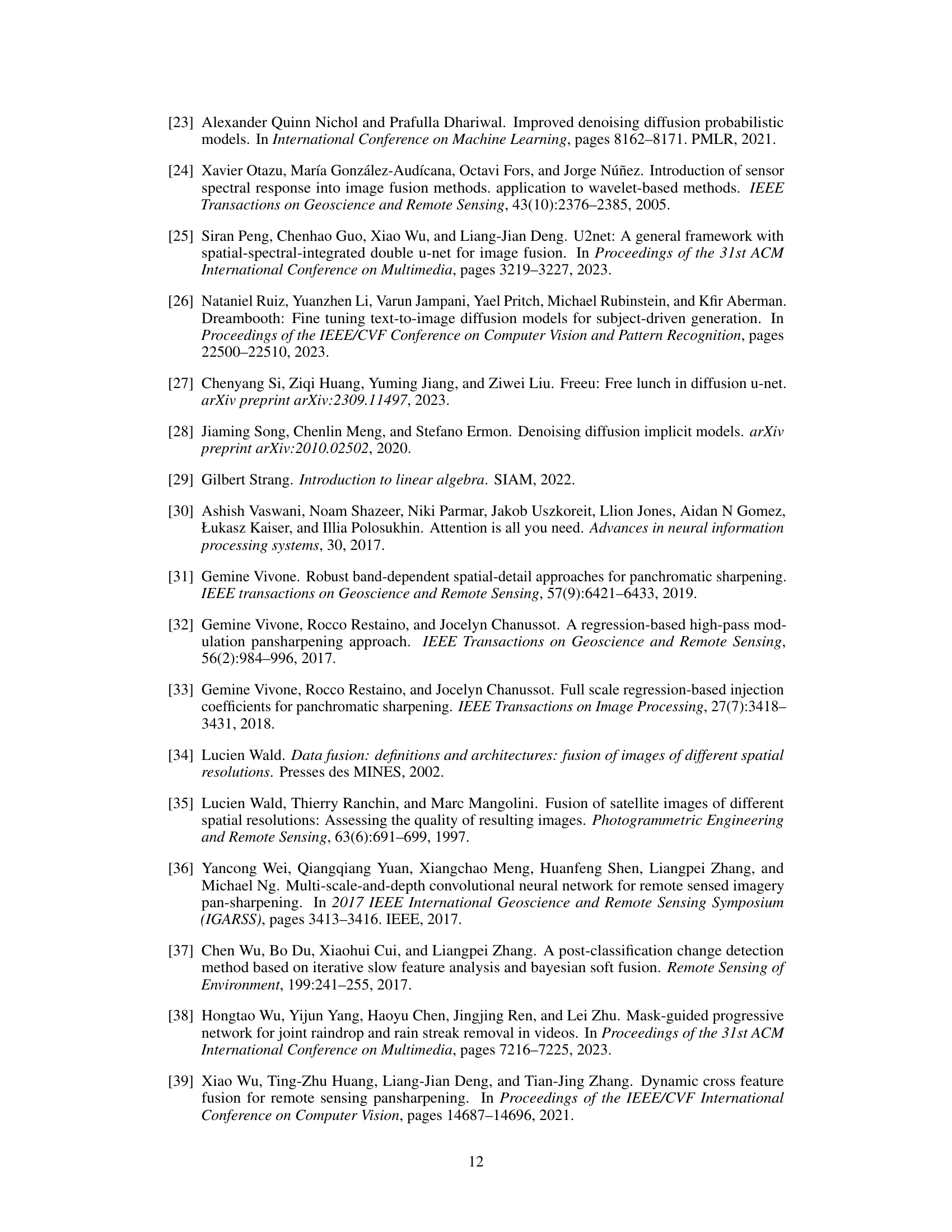

This figure presents a visual comparison of pansharpening results on WorldView-3 and GaoFen-2 datasets at reduced resolution. The top two rows showcase the pansharpened high-resolution multispectral images (HrMSI) generated by different methods for WorldView-3, while the bottom two rows show the results for GaoFen-2. The first and third rows display the HrMSI produced by various techniques, including the proposed SSDiff method. The second and fourth rows present error maps, which visually represent the differences between the generated HrMSI and the ground truth (GT). The green boxes highlight specific regions of interest for detailed comparison. This visual comparison demonstrates the relative performance of different pansharpening methods in terms of accuracy and detail preservation.

This figure illustrates the LoRA-like branch-wise alternative fine-tuning process used in SSDiff. The top half shows the process of fine-tuning the spectral branch, while the bottom half shows fine-tuning the spatial branch. In both cases, one branch’s parameters are frozen (locked) while the other branch’s parameters are updated (trainable). This alternating approach allows for more focused and discriminative feature learning in each branch without increasing the overall number of parameters.

This figure showcases visual comparisons of pansharpening results on reduced-resolution WorldView-3 and GaoFen-2 datasets. It presents the high-resolution multispectral images (HrMSI) generated by various methods (SSDiff, PanDiff, DCFNet, MMNet, LAGConv, CTINN, PNN, FusionNet, MSDCNN, DICNN, BT-H, MTF-GLP-FS, BDSD-PC) along with error maps comparing these results against the ground truth (GT). The error maps illustrate the differences between the predicted HrMSI and the actual GT, providing a visual assessment of the accuracy of each method.

This figure shows a visual comparison of pansharpening results on reduced-resolution WorldView-3 and GaoFen-2 datasets. It presents the pansharpened high-resolution multispectral images (HrMSI) produced by various methods (SSDiff, PanDiff, DCFNet, MMNet, LAGConv, CTINN, PNN, FusionNet, MSDCNN, DiCNN, BT-H, and MTF-GLP-FS) alongside error maps that quantify the difference between the generated HrMSI and the ground truth (GT). The visual comparison aids in assessing the accuracy and quality of each method’s pansharpening performance.

More on tables

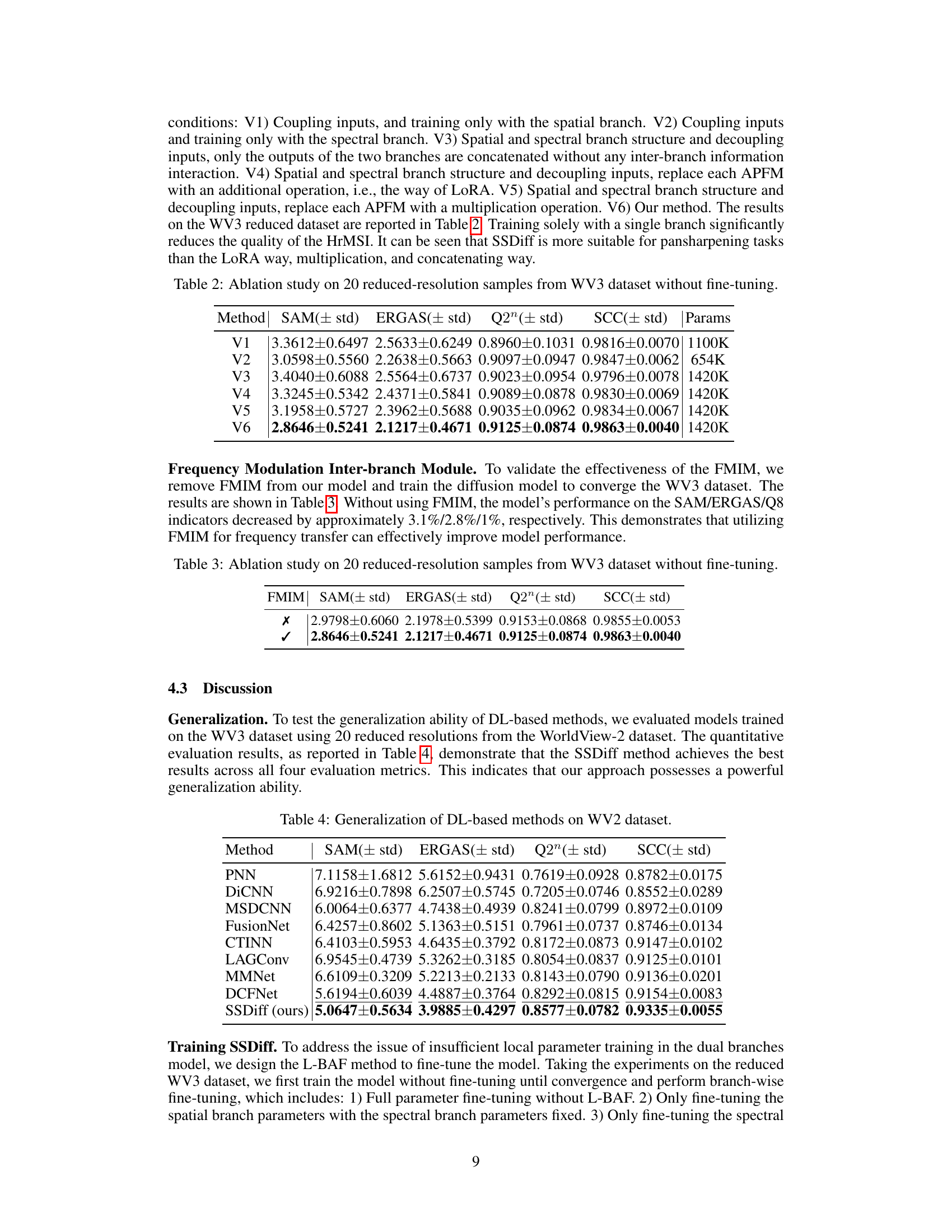

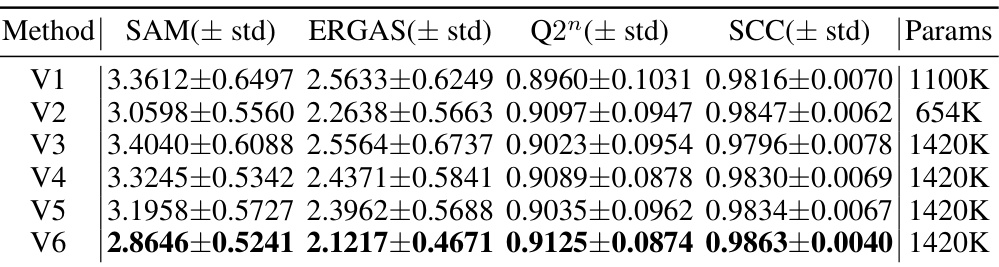

This table presents the results of an ablation study conducted on 20 reduced-resolution samples from the WorldView-3 dataset. The study evaluates the effectiveness of different design choices in the SSDiff model by comparing variations in the model’s performance without fine-tuning. Specifically, it examines variations of the SAM, ERGAS, Q2, and SCC metrics under different conditions (V1-V6) of the network structure and training strategies.

This table presents the ablation study results on 20 reduced-resolution samples from the WorldView-3 dataset. The study was conducted without fine-tuning to evaluate the impact of the Frequency Modulation Inter-branch Module (FMIM) on the model’s performance. The results show the SAM, ERGAS, Q2ⁿ, and SCC metrics with and without the FMIM, demonstrating its effectiveness in improving model performance.

This table presents a quantitative comparison of different pansharpening methods on the WorldView-3 and GaoFen-2 datasets. It shows the performance of each method in terms of SAM, ERGAS, Q2, SCC, Dx, Ds, and HQNR metrics for both reduced-resolution and full-resolution images. The best and second-best results for each metric are highlighted to easily compare the performance of SSDiff against other methods.

This table presents the ablation study results on the effectiveness of the proposed LoRA-like branch-wise alternative fine-tuning method. It compares different fine-tuning strategies: only spatial branch, only spectral branch, concatenating both branches, using LoRA-like method, using multiplication operation, and the proposed alternating fine-tuning method. The results show that alternating fine-tuning yields the best performance across different metrics.

This table presents a quantitative comparison of different pansharpening methods on two datasets, WorldView-3 (WV3) and GaoFen-2 (GF2). Both reduced-resolution and full-resolution results are shown. Evaluation metrics include SAM, ERGAS, Q2, SCC, Dx, Ds, and HQNR. The best-performing method for each metric is highlighted in bold, with the second-best underlined. This allows for a comprehensive comparison of the methods’ performance under different conditions and resolutions.

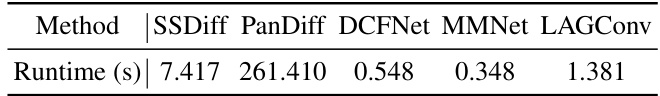

This table presents the runtime in seconds for different pansharpening methods on the WorldView-3 reduced-resolution dataset. The methods compared include SSDiff, PanDiff, DCFNet, MMNet, and LAGConv. The table highlights the relative efficiency of each method in terms of processing time.

Full paper#